User:Stchen

Introduction to Update and Adaptive Coherence Protocols on Real Architectures

In parallel computer architectures, cache coherence refers to the consistency of data stored in the caches of individual processors and in shared memory. The problem arises because each processor has its own cache. When one processor writes to a shared memory location, every other cache holding a copy of that location must observe the change. For example, if processor P1 writes a new value to a location that P2 has already cached, P2 will keep reading the stale value unless its copy is invalidated or updated.
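The stale-copy problem can be reproduced with a very small model. The sketch below is illustrative only: the `Cache` class, the write-through policy, and the address `"x"` are assumptions made for the example, and no coherence mechanism is modeled on purpose.

```python
# Toy model: two private caches in front of one shared memory, with NO coherence.
memory = {"x": 0}

class Cache:
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}                      # address -> locally cached value

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch from shared memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]              # hit: return the local copy

    def write(self, addr, value):
        self.lines[addr] = value             # update the local copy
        self.memory[addr] = value            # write through to shared memory

p1, p2 = Cache(memory), Cache(memory)
p2.read("x")              # P2 caches x = 0
p1.write("x", 42)         # P1 changes x; nothing notifies P2
stale = p2.read("x")      # P2 hits its old copy
print(stale, memory["x"]) # prints "0 42"
```

P2 reads 0 even though memory holds 42. A coherence protocol exists to close exactly this gap, either by removing P2's copy or by refreshing it.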

According to Glasco et al.<ref name="glasco">Glasco, D.B.; Delagi, B.A.; Flynn, M.J., "Update-based cache coherence protocols for scalable shared-memory multiprocessors," Proceedings of the Twenty-Seventh Hawaii International Conference on System Sciences, vol. 1, pp. 534-545, 4-7 Jan. 1994. doi: 10.1109/HICSS.1994.323135</ref>, there are two ways to maintain cache consistency: invalidation and update. The difference is that invalidation "... purges the copies of the line from the other caches which results in a single copy of the line, and updating forwards the write value to the other caches, after which all caches are consistent"<ref name="glasco"></ref>. Since we have already discussed invalidation protocols, we will focus on update protocols. A newer class, adaptive coherence protocols, is also discussed below.

According to Solihin's textbook (p. 229), "One of the drawbacks of an invalidate-based protocol is that it incurs high number of coherence misses." That is, when a processor reads a block that has been invalidated, the access misses in the cache, and servicing that miss can incur high latency. To address this, one can use an update coherence protocol or an adaptive coherence protocol.

Update Coherence Protocol

Introduction

Update-based cache coherence protocols work by propagating each write directly to every cache that holds a copy of the block. This differs from invalidation-based protocols because write propagation is achieved without invalidating the other copies, and therefore without the misses that invalidations cause. This avoids numerous coherence misses, along with the time and bandwidth needed to service them. One of the update-based protocols we will discuss is the Dragon Protocol.
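The difference in coherence misses can be seen by counting events for a single shared block. The following is a minimal sketch under simplifying assumptions: the `run` function, its `policy` argument, and the producer/consumer trace are hypothetical, and evictions, data values, and bus timing are not modeled.

```python
def run(policy, trace, n_caches=2):
    """Count events for one shared block.

    policy is "invalidate" or "update"; trace is a list of (cpu, op)
    pairs with op in {"r", "w"}.
    """
    valid = [False] * n_caches        # does cache i hold a valid copy?
    ever_loaded = [False] * n_caches  # has cache i fetched the block before?
    cold = coherence = updates = 0
    for cpu, op in trace:
        if not valid[cpu]:
            if ever_loaded[cpu]:
                coherence += 1        # copy was lost to an invalidation
            else:
                cold += 1             # first touch of the block
            valid[cpu] = True
            ever_loaded[cpu] = True
        if op == "w":
            for other in range(n_caches):
                if other != cpu and valid[other]:
                    if policy == "invalidate":
                        valid[other] = False   # purge the sharer's copy
                    else:
                        updates += 1           # push the new value to the sharer
    return {"cold": cold, "coherence": coherence, "updates": updates}

# Producer/consumer pattern: CPU 0 writes, CPU 1 reads, repeated 4 times.
trace = [(0, "w"), (1, "r")] * 4
inv = run("invalidate", trace)
upd = run("update", trace)
print(inv)  # {'cold': 2, 'coherence': 3, 'updates': 0}
print(upd)  # {'cold': 2, 'coherence': 0, 'updates': 3}
```

In this trace the invalidate policy turns every consumer read after the first into a coherence miss, while the update policy replaces those misses with one update message per write. The trade-off is that an update is sent on every write to a shared block, whether or not the sharer reads the value again.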

Dragon Protocol

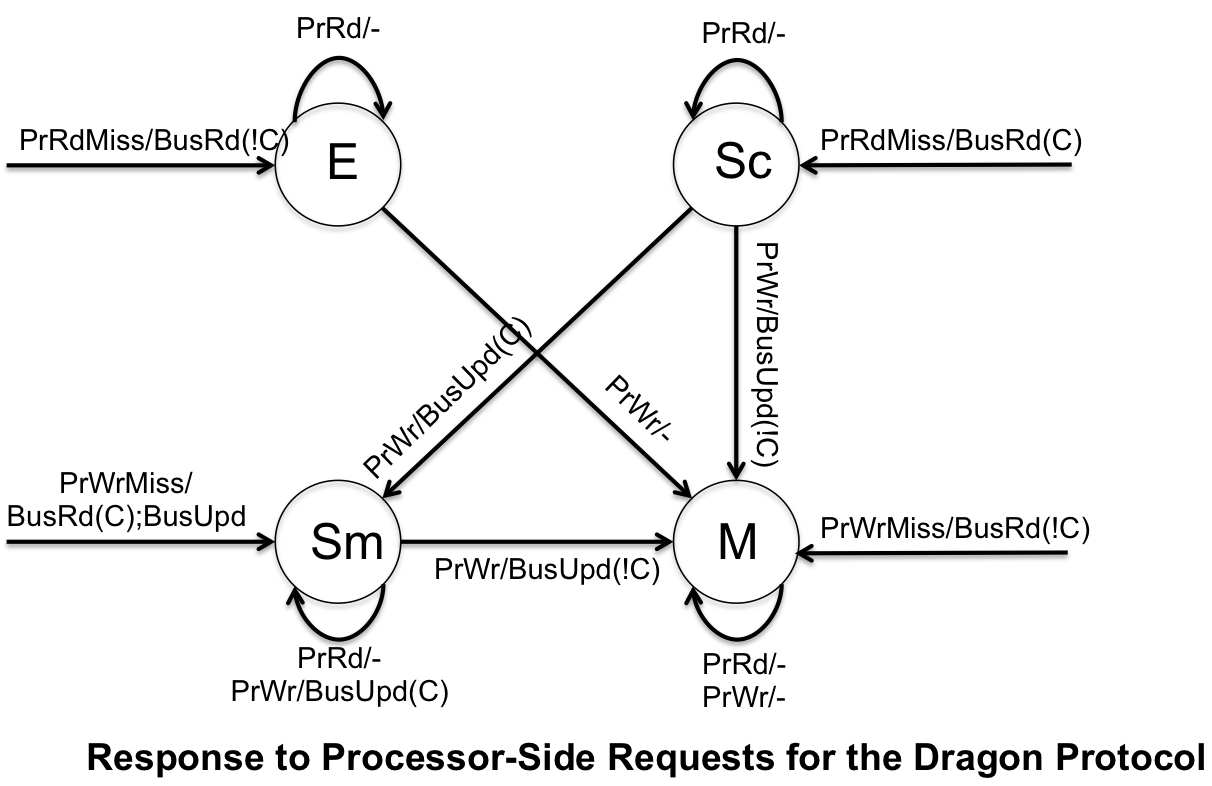

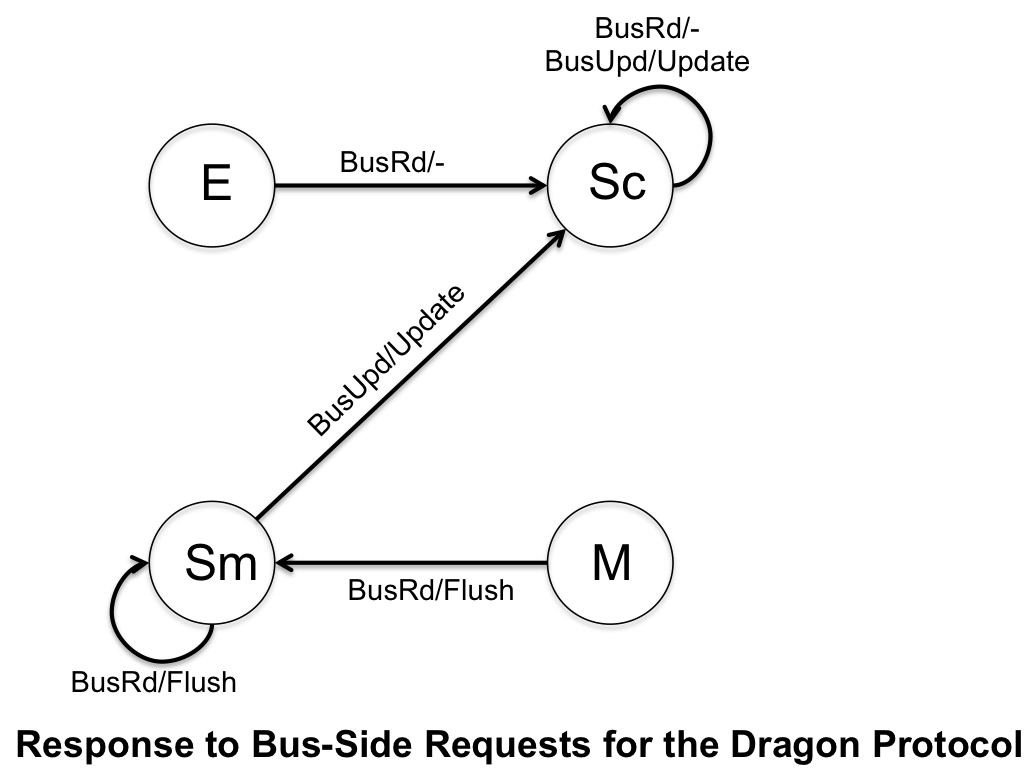

The Dragon Protocol is an implementation of an update-based coherence protocol. It further saves bandwidth by updating only the modified words within a cache block instead of the entire block. The caches use write-allocate and write-update policies. The Dragon Protocol has four states: Modified (M), Exclusive (E), Shared Modified (Sm), and Shared Clean (Sc). It does not include an invalid state, because any block that is in the cache is valid.

- Modified (M) - cache block is exclusively owned, but it may differ from main memory

- Exclusive (E) - cache block is clean and is only in one cache

- Shared Modified (Sm) - cache block resides in multiple caches and is possibly dirty

- Shared Clean (Sc) - cache block resides in multiple caches and is clean

There is no invalid state, because a block that is cached is assumed to be valid. However, its contents can differ from main memory.
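The four states can be exercised with a small state-machine sketch. It follows the transitions as they are commonly described in textbook treatments of Dragon (BusRd on a miss, BusUpd on a write to a shared block, and a shared line sampled to choose between the shared and exclusive states). The `DragonBus` class and its method names are hypothetical, only one block is tracked, and evictions, data values, and Flush transactions are left out.

```python
M, E, SM, SC = "M", "E", "Sm", "Sc"

class DragonBus:
    """One memory block shared by n caches; state None means 'not cached'."""

    def __init__(self, n_caches):
        self.state = [None] * n_caches
        self.bus_log = []                 # bus transactions, for inspection

    def _shared(self, cpu):
        # Models the shared line: does any OTHER cache hold the block?
        return any(s is not None for i, s in enumerate(self.state) if i != cpu)

    def _snoop_bus_rd(self, cpu):
        for i, s in enumerate(self.state):
            if i == cpu:
                continue
            if s == E:
                self.state[i] = SC        # no longer the only copy
            elif s == M:
                self.state[i] = SM        # supplies the data, remains owner

    def _snoop_bus_upd(self, cpu):
        for i, s in enumerate(self.state):
            if i != cpu and s in (SC, SM):
                self.state[i] = SC        # takes the new word; writer becomes owner

    def read(self, cpu):
        if self.state[cpu] is None:       # read miss
            self.bus_log.append("BusRd")
            shared = self._shared(cpu)
            self._snoop_bus_rd(cpu)
            self.state[cpu] = SC if shared else E
        # read hits never change state

    def write(self, cpu):
        s = self.state[cpu]
        if s in (E, M):                   # sole copy: write locally, no bus traffic
            self.state[cpu] = M
            return
        if s is None:                     # write miss: fetch the block first
            self.bus_log.append("BusRd")
            self._snoop_bus_rd(cpu)
            send_update = self._shared(cpu)
        else:                             # Sc or Sm: other caches may hold a copy
            send_update = True
        if send_update:
            self.bus_log.append("BusUpd")
            self._snoop_bus_upd(cpu)
        self.state[cpu] = SM if self._shared(cpu) else M

bus = DragonBus(2)
bus.read(0)     # P0 read miss, no sharers   -> P0: E
bus.write(0)    # silent upgrade             -> P0: M
bus.read(1)     # P1 read miss, P0 has block -> P0: Sm, P1: Sc
bus.write(1)    # P1 broadcasts the update   -> P0: Sc, P1: Sm
print(bus.state, bus.bus_log)
```

Note that no transition ever removes a block from another cache: a write to a shared block moves ownership to the writer (Sm) and leaves the other copies valid in Sc, which is why the protocol needs no invalid state.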

The Dragon Protocol was developed by Xerox for the Xerox Dragon multiprocessor workstation, and it is also reported to be implemented in the Cray CS6400.

Firefly Protocol

File:Firefly Transition Diagram.png

Adaptive Coherence Protocol

Introduction

Power Considerations

Quiz

References

<references />