M1852 Implement missing WebAudio node support

Introduction

Servo

Servo is a modern, high-performance browser engine designed for both application and embedded use and written in the Rust programming language. It is currently developed on 64-bit OS X, 64-bit Linux, and Android.

More information about Servo is available here

Rust

Rust is a systems programming language that focuses on memory safety and concurrency. It offers performance and low-level control similar to C++, but its compiler enforces memory safety.

More information about Rust can be found here

Web Audio API

The Web Audio API involves handling audio operations inside an audio context, and has been designed to allow modular routing. Basic audio operations are performed with audio nodes, which are linked together to form an audio routing graph. Several sources — with different types of channel layout — are supported even within a single context. This modular design provides the flexibility to create complex audio functions with dynamic effects.

Audio nodes are linked into chains and simple webs by their inputs and outputs. They typically start with one or more sources. Sources provide arrays of sound intensities (samples) at very small timeslices, often tens of thousands of them per second. These could be either computed mathematically (such as OscillatorNode), or they can be recordings from sound/video files (like AudioBufferSourceNode and MediaElementAudioSourceNode) and audio streams (MediaStreamAudioSourceNode). In fact, sound files are just recordings of sound intensities themselves, which come in from microphones or electric instruments, and get mixed down into a single, complicated wave.

Outputs of these nodes could be linked to inputs of others, which mix or modify these streams of sound samples into different streams. A common modification is multiplying the samples by a value to make them louder or quieter (as is the case with GainNode). Once the sound has been sufficiently processed for the intended effect, it can be linked to the input of a destination (AudioContext.destination), which sends the sound to the speakers or headphones. This last connection is only necessary if the user is supposed to hear the audio.

A simple, typical workflow for web audio would look something like this:

1. Create audio context

2. Inside the context, create sources — such as <audio>, oscillator, stream

3. Create effects nodes, such as reverb, biquad filter, panner, compressor

4. Choose final destination of audio, for example your system speakers

5. Connect the sources up to the effects, and the effects to the destination.
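To make the five steps concrete without depending on Servo, here is a toy pull-based graph in plain Rust: an oscillator source feeds a gain effect, and a "destination" pulls blocks of samples from the end of the chain. All names here (Node, Oscillator, Gain, render, BLOCK) are hypothetical and are neither the servo-media API nor the Web Audio API; BLOCK = 128 only mirrors the render quantum size in the Web Audio spec. It is a sketch of the routing idea under those assumptions, not how servo-media schedules its graph.

```rust
// Toy pull-based audio graph (hypothetical names, not the servo-media API):
// oscillator source -> gain effect -> "destination" that collects samples.
use std::f64::consts::PI;

/// Samples per processing block (the Web Audio render quantum is 128 frames).
const BLOCK: usize = 128;

trait Node {
    /// Overwrites `out` with this node's next block of samples.
    fn process(&mut self, out: &mut [f32]);
}

/// Step 2: a source that computes a sine wave mathematically.
struct Oscillator {
    phase: f64,
    step: f64,
}

impl Oscillator {
    fn new(freq: f64, sample_rate: f64) -> Self {
        Oscillator { phase: 0.0, step: 2.0 * PI * freq / sample_rate }
    }
}

impl Node for Oscillator {
    fn process(&mut self, out: &mut [f32]) {
        for s in out.iter_mut() {
            *s = self.phase.sin() as f32;
            self.phase = (self.phase + self.step) % (2.0 * PI);
        }
    }
}

/// Step 3: an effect that multiplies its input by a constant gain.
struct Gain<N: Node> {
    input: N, // step 5: the connection to the upstream node
    gain: f32,
}

impl<N: Node> Node for Gain<N> {
    fn process(&mut self, out: &mut [f32]) {
        self.input.process(out); // pull from upstream first
        for s in out.iter_mut() {
            *s *= self.gain;
        }
    }
}

/// Step 4: the destination pulls `blocks` blocks from the end of the chain.
fn render<N: Node>(graph: &mut N, blocks: usize) -> Vec<f32> {
    let mut output = Vec::with_capacity(blocks * BLOCK);
    let mut buf = [0.0f32; BLOCK];
    for _ in 0..blocks {
        graph.process(&mut buf);
        output.extend_from_slice(&buf);
    }
    output
}

fn main() {
    // Step 1: the "context" here is just a sample rate shared by the nodes.
    let sample_rate = 44_100.0;
    let mut graph = Gain { input: Oscillator::new(440.0, sample_rate), gain: 0.1 };
    let samples = render(&mut graph, 4);
    let peak = samples.iter().fold(0.0f32, |m, s| m.max(s.abs()));
    println!("{} samples, peak {:.3}", samples.len(), peak);
}
```

Because each node owns its upstream input, connecting nodes is just composition; a real engine instead keeps nodes in a graph so one output can feed several inputs.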

Problem Statement

Major browsers support the WebAudio standard, which can be used to create complex media playback applications from low-level building blocks. Servo is a new, experimental browser that supports some of these building blocks (called audio nodes). The goal of this project is to improve compatibility with web content that relies on the WebAudio API by implementing the missing pieces of an incomplete node type (OscillatorNode) along with entirely missing nodes (ConstantSourceNode, StereoPannerNode, IIRFilterNode). Additional project information is available here

Proposed Design

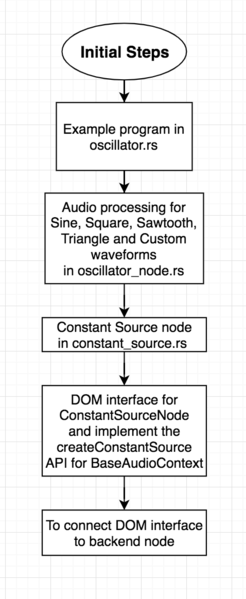

Our design and plan of work are split into two sets of tasks: Initial Steps and Subsequent Steps.

Initial Steps

- To create a new example program, media/examples/oscillator.rs, that exercises the different oscillator types.

- To implement the processing algorithm for the different oscillator types (Sine, Square, Sawtooth, Triangle, and a Custom wave based on the user's input) in media/audio/src/oscillator_node.rs, so that the example program in media/examples/oscillator.rs sounds correct.

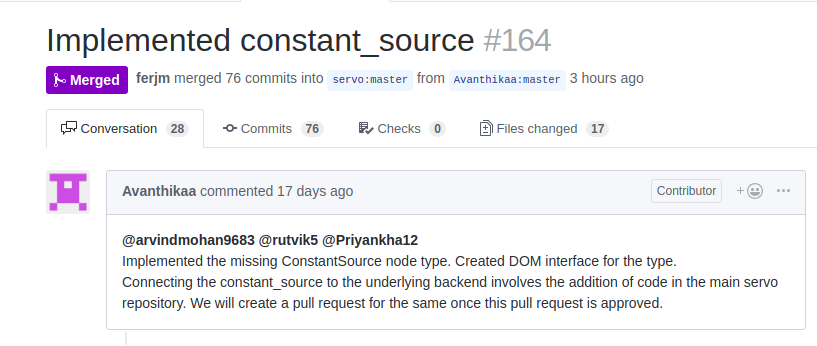

- To implement the missing ConstantSource node type in a new constant_source_node.rs file in the media/audio/src directory. The node produces a constant signal based on a stored value that can be modified.

- To create the DOM interface for ConstantSourceNode and implement the createConstantSource API for BaseAudioContext

- To connect the DOM interface to the underlying backend node by processing the unimplemented message type.

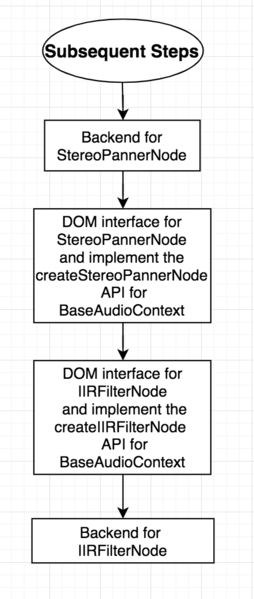

Subsequent Steps

- To implement the backend for StereoPannerNode in the media crate as a new node, and to create a runnable example for it.

- To create the DOM interface for StereoPannerNode and implement the createStereoPanner API for BaseAudioContext.

- To create the DOM interface for IIRFilterNode and implement the createIIRFilter API for BaseAudioContext.

- To implement the backend for IIRFilterNode based on the specification's processing model, and to create a runnable example.
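As a reference for these two backends, the following std-only sketch shows the per-sample math the Web Audio spec describes: equal-power panning for StereoPannerNode (only the mono-input case) and the IIRFilterNode difference equation a[0]·y[n] = Σ b[m]·x[n−m] − Σ_{k≥1} a[k]·y[n−k]. The names pan_mono and Iir are hypothetical; a real node would also handle stereo input, automation of the pan AudioParam, and block-based processing.

```rust
// Hypothetical sketches (not servo-media code) of the per-sample math the
// Web Audio spec gives for StereoPannerNode (mono input) and IIRFilterNode.
use std::f64::consts::FRAC_PI_2;

/// Equal-power pan of one mono sample; `pan` is clamped to [-1, 1].
/// Returns (left, right).
fn pan_mono(input: f32, pan: f32) -> (f32, f32) {
    let pan = pan.max(-1.0).min(1.0);
    let x = f64::from((pan + 1.0) / 2.0);
    let gain_l = (x * FRAC_PI_2).cos() as f32;
    let gain_r = (x * FRAC_PI_2).sin() as f32;
    (input * gain_l, input * gain_r)
}

/// Direct-form IIR filter:
/// a[0]*y[n] = sum_m b[m]*x[n-m] - sum_{k>=1} a[k]*y[n-k]
struct Iir {
    b: Vec<f64>,      // feedforward coefficients
    a: Vec<f64>,      // feedback coefficients, a[0] != 0
    x_hist: Vec<f64>, // x_hist[m] = x[n-m]
    y_hist: Vec<f64>, // y_hist[k] = y[n-1-k]
}

impl Iir {
    fn new(feedforward: &[f64], feedback: &[f64]) -> Self {
        assert!(!feedforward.is_empty(), "need at least one feedforward coefficient");
        assert!(!feedback.is_empty() && feedback[0] != 0.0, "feedback[0] must be non-zero");
        Iir {
            b: feedforward.to_vec(),
            a: feedback.to_vec(),
            x_hist: vec![0.0; feedforward.len()],
            y_hist: vec![0.0; feedback.len()],
        }
    }

    fn process(&mut self, x: f64) -> f64 {
        // Shift the newest input into slot 0
        self.x_hist.rotate_right(1);
        self.x_hist[0] = x;
        let ff: f64 = self.b.iter().zip(&self.x_hist).map(|(b, x)| b * x).sum();
        let fb: f64 = self.a[1..].iter().zip(&self.y_hist).map(|(a, y)| a * y).sum();
        let y = (ff - fb) / self.a[0];
        // Remember y[n] for the next call
        self.y_hist.rotate_right(1);
        self.y_hist[0] = y;
        y
    }
}

fn main() {
    let (l, r) = pan_mono(1.0, 0.0);
    println!("center pan: L = {:.4}, R = {:.4}", l, r);

    // One-pole low-pass y[n] = x[n] + 0.5*y[n-1], fed an impulse
    let mut filter = Iir::new(&[1.0], &[1.0, -0.5]);
    let impulse = [1.0, 0.0, 0.0, 0.0];
    let response: Vec<f64> = impulse.iter().map(|&x| filter.process(x)).collect();
    println!("impulse response: {:?}", response);
}
```

Equal-power panning keeps left² + right² constant, so a centered mono source is not louder or quieter than a hard-panned one.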

Files (to be) modified

- media/audio/src/oscillator_node.rs

- media/audio/src/constant_source_node.rs

- media/audio/src/node.rs

- media/audio/src/lib.rs

- media/audio/src/render_thread.rs

- media/audio/src/param.rs

- media/examples/oscillator.rs

- media/examples/constant_source.rs

Implementation

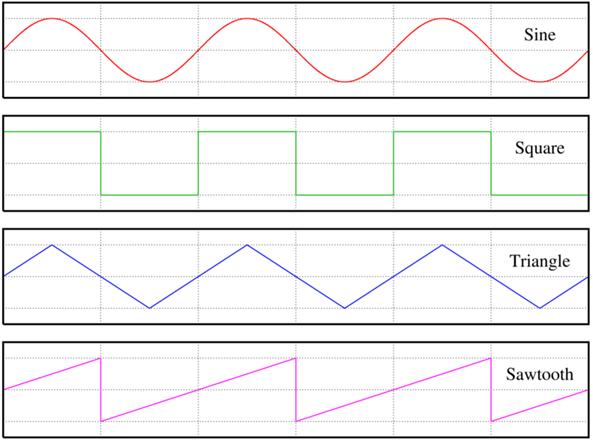

Our current implementation handles audio processing for the Sine, Square, Sawtooth and Triangle waveforms, and we are still working on generating the Custom wave. We created an oscillator.rs example file that plays each of these waveforms. The waveforms are visualized in the image above.

OscillatorType is an enum whose variants name the supported waveforms. For each output sample, the OscillatorNode computes a value from the oscillator's current phase, and each waveform uses its own function of phase.
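The per-waveform functions of phase can be checked in isolation. This std-only sketch uses a hypothetical Wave enum in place of OscillatorType and follows the shapes the Web Audio spec defines through Fourier coefficients: a square wave at +1 for the first half-period, a sawtooth rising through zero at phase 0, and a triangle peaking at π/2. It is a naive, non-band-limited version (it would alias at high frequencies), meant to illustrate the math rather than reproduce the servo-media code.

```rust
use std::f64::consts::PI;

// Hypothetical stand-in for the oscillator types discussed above.
#[derive(Clone, Copy, Debug)]
enum Wave {
    Sine,
    Square,
    Sawtooth,
    Triangle,
}

/// One sample of a unit-amplitude waveform at `phase` in [0, 2π).
fn sample(wave: Wave, phase: f64) -> f64 {
    match wave {
        Wave::Sine => phase.sin(),
        // +1 for the first half-period, -1 for the second half
        Wave::Square => if phase < PI { 1.0 } else { -1.0 },
        // Ramps 0 -> 1 over [0, π), jumps to -1, ramps back to 0 at 2π
        Wave::Sawtooth => if phase < PI { phase / PI } else { phase / PI - 2.0 },
        // 0 at 0, +1 at π/2, -1 at 3π/2, back to 0 at 2π
        Wave::Triangle => {
            if phase < PI / 2.0 {
                2.0 * phase / PI
            } else if phase < 3.0 * PI / 2.0 {
                2.0 - 2.0 * phase / PI
            } else {
                2.0 * phase / PI - 4.0
            }
        }
    }
}

fn main() {
    for &w in &[Wave::Sine, Wave::Square, Wave::Sawtooth, Wave::Triangle] {
        let quarter = sample(w, PI / 2.0);
        let three_quarter = sample(w, 3.0 * PI / 2.0);
        println!("{:?}: f(π/2) = {:.3}, f(3π/2) = {:.3}", w, quarter, three_quarter);
    }
}
```

All four shapes stay within [-1, 1] and have no DC offset over a full period, which is what lets them be scaled by a gain without shifting the signal.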

Code Snippet

The following changes were made to oscillator_node.rs in the audio/src/ directory, where the waveforms are generated. The snippet is the per-sample loop of the node's process() method:

```rust
// Inside the node's process() method, after the output block has been
// prepared and `start_at`/`stop_at` computed
use std::f64::consts::PI;

// Convert all our parameters to the target type for calculations
let vol: f32 = 1.0;
let sample_rate = info.sample_rate as f64;
let two_pi = 2.0 * PI;

// We're carrying a phase in [0, 2π) around instead of working on the
// sample offset. High sample offsets cause too much inaccuracy when
// converted to floating point numbers and then iterated over in 1-steps.
//
// Carrying the phase also keeps the waveform continuous when the
// frequency changes.
let mut step = two_pi * self.frequency.value() as f64 / sample_rate;
while let Some(mut frame) = iter.next() {
    let tick = frame.tick();
    if tick < start_at {
        continue;
    } else if tick > stop_at {
        break;
    }

    // Recompute the phase increment if the frequency was automated
    if self.update_parameters(info, tick) {
        step = two_pi * self.frequency.value() as f64 / sample_rate;
    }

    // Based on the type of wave, compute the sample from the current phase
    let phase = self.phase;
    let value: f32 = match self.oscillator_type {
        OscillatorType::Sine => vol * phase.sin() as f32,
        // +1 for the first half of the period, -1 for the second half
        OscillatorType::Square => {
            if phase < PI { vol } else { -vol }
        }
        // Rises from 0 to 1 over [0, π), jumps to -1, rises back to 0 at 2π
        OscillatorType::Sawtooth => {
            let x = if phase < PI { phase / PI } else { phase / PI - 2.0 };
            vol * x as f32
        }
        // 0 at 0, +1 at π/2, -1 at 3π/2, back to 0 at 2π
        OscillatorType::Triangle => {
            let x = if phase < PI / 2.0 {
                2.0 * phase / PI
            } else if phase < 3.0 * PI / 2.0 {
                2.0 - 2.0 * phase / PI
            } else {
                2.0 * phase / PI - 4.0
            };
            vol * x as f32
        }
        // Custom (PeriodicWave) synthesis is still in progress; output silence
        OscillatorType::Custom => 0.0,
    };

    frame.mutate_with(|sample, _| *sample = value);

    // Advance the phase and wrap it back into [0, 2π)
    self.phase += step;
    if self.phase >= two_pi {
        self.phase -= two_pi;
    }
}
```
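The comment at the top of the oscillator loop explains why the node carries a wrapped phase instead of a sample counter. This std-only sketch makes that concrete: a counter stored as f32 (a hypothetical alternative design, not what the node does) stops being able to advance by 1 after 2^24 samples, while a wrapped phase stays small no matter how long the tone plays. The helper run_phase is hypothetical.

```rust
// Hypothetical comparison: a sample counter stored as f32 versus the
// wrapped phase accumulator the oscillator carries.
use std::f64::consts::PI;

/// Advances a wrapped phase `samples` times and returns the final phase.
fn run_phase(freq: f64, sample_rate: f64, samples: usize) -> f64 {
    let two_pi = 2.0 * PI;
    let step = two_pi * freq / sample_rate;
    let mut phase = 0.0f64;
    for _ in 0..samples {
        phase += step;
        if phase >= two_pi {
            phase -= two_pi;
        }
    }
    phase
}

fn main() {
    // f32 has a 24-bit significand: above 2^24 it can no longer step by 1.
    // At 44.1 kHz, 2^24 samples is only about 380 seconds of audio.
    let n: f32 = 16_777_216.0;
    println!("n + 1 == n? {}", n + 1.0 == n); // prints "n + 1 == n? true"

    // The wrapped phase stays in [0, 2π) however long the tone plays,
    // so each step is added to a small number and keeps its precision.
    let phase = run_phase(440.0, 44_100.0, 44_100 * 60);
    println!("phase after one minute: {:.6} rad", phase);
}
```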

We created oscillator.rs in the media/examples/ directory. It plays each oscillator type in turn for three seconds, each in a fresh audio context:

```rust
extern crate servo_media;

use servo_media::audio::node::{AudioNodeInit, AudioNodeMessage, AudioScheduledSourceNodeMessage};
use servo_media::audio::oscillator_node::OscillatorNodeOptions;
use servo_media::audio::oscillator_node::OscillatorType::{Custom, Sawtooth, Sine, Square, Triangle};
use servo_media::ServoMedia;
use std::sync::Arc;
use std::{thread, time};

fn run_example(servo_media: Arc<ServoMedia>) {
    let mut options = OscillatorNodeOptions::default();

    // Play each waveform for 3 seconds in its own audio context, with a
    // 1 second pause in between. Custom is included for completeness even
    // though its synthesis is still in progress.
    for &oscillator_type in &[Sine, Square, Sawtooth, Triangle, Custom] {
        options.oscillator_type = oscillator_type;

        let context = servo_media.create_audio_context(Default::default());
        let dest = context.dest_node();
        let osc = context.create_node(AudioNodeInit::OscillatorNode(options), Default::default());
        context.connect_ports(osc.output(0), dest.input(0));

        let _ = context.resume();
        context.message_node(
            osc,
            AudioNodeMessage::AudioScheduledSourceNode(AudioScheduledSourceNodeMessage::Start(0.)),
        );
        thread::sleep(time::Duration::from_millis(3000));

        let _ = context.close();
        thread::sleep(time::Duration::from_millis(1000));
    }
}

fn main() {
    if let Ok(servo_media) = ServoMedia::get() {
        run_example(servo_media);
    } else {
        unreachable!();
    }
}
```

Running the example plays a distinct sound for each waveform (Sine, Square, Sawtooth, Triangle).

We created constant_source_node.rs in the audio/src/ directory. It implements ConstantSourceNode, which outputs the value of its offset parameter as a constant signal between its start and stop times:

```rust
use block::Chunk;
use block::Tick;
use node::BlockInfo;
use node::{AudioNodeEngine, AudioScheduledSourceNodeMessage, OnEndedCallback};
use node::{AudioNodeType, ChannelInfo, ShouldPlay};
use param::{Param, ParamType};

#[derive(Copy, Clone, Debug)]
pub struct ConstantSourceNodeOptions {
    pub offset: f32,
}

impl Default for ConstantSourceNodeOptions {
    fn default() -> Self {
        // The spec's default offset is 1.0
        ConstantSourceNodeOptions { offset: 1. }
    }
}

#[derive(AudioScheduledSourceNode, AudioNodeCommon)]
pub(crate) struct ConstantSourceNode {
    channel_info: ChannelInfo,
    offset: Param,
    start_at: Option<Tick>,
    stop_at: Option<Tick>,
    onended_callback: Option<OnEndedCallback>,
}

impl ConstantSourceNode {
    pub fn new(options: ConstantSourceNodeOptions, channel_info: ChannelInfo) -> Self {
        Self {
            channel_info,
            offset: Param::new(options.offset.into()),
            start_at: None,
            stop_at: None,
            onended_callback: None,
        }
    }

    /// Applies any scheduled automation for `tick`; returns true if the offset changed.
    pub fn update_parameters(&mut self, info: &BlockInfo, tick: Tick) -> bool {
        self.offset.update(info, tick)
    }
}

impl AudioNodeEngine for ConstantSourceNode {
    fn node_type(&self) -> AudioNodeType {
        AudioNodeType::ConstantSourceNode
    }

    fn process(&mut self, mut inputs: Chunk, info: &BlockInfo) -> Chunk {
        // A source node has no inputs; push the single output block
        debug_assert!(inputs.len() == 0);
        inputs.blocks.push(Default::default());

        // Only produce output for the ticks between start and stop
        let (start_at, stop_at) = match self.should_play_at(info.frame) {
            ShouldPlay::No => return inputs,
            ShouldPlay::Between(start, end) => (start, end),
        };

        {
            inputs.blocks[0].explicit_silence();
            let mut iter = inputs.blocks[0].iter();
            let mut offset = self.offset.value();
            while let Some(mut frame) = iter.next() {
                let tick = frame.tick();
                if tick < start_at {
                    continue;
                } else if tick > stop_at {
                    break;
                }
                // Re-read the offset if automation changed it at this tick
                if self.update_parameters(info, tick) {
                    offset = self.offset.value();
                }
                // Set the samples of this frame to the current offset
                frame.mutate_with(|sample, _| *sample = offset);
            }
        }
        inputs
    }

    fn input_count(&self) -> u32 {
        0
    }

    fn get_param(&mut self, id: ParamType) -> &mut Param {
        match id {
            ParamType::Offset => &mut self.offset,
            _ => panic!("Unknown param {:?} for ConstantSourceNode", id),
        }
    }

    make_message_handler!(AudioScheduledSourceNode: handle_source_node_message);
}
```
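The core of process() is the tick loop: silence before the start tick, the (possibly automated) offset up to and including the stop tick, and nothing after it. This std-only sketch models one block of that loop. The names process_block and FRAMES_PER_BLOCK are hypothetical; 128 is the Web Audio render quantum size, and ticks are treated as frame indices within the block, which is an assumption about how servo-media expresses them. Automation is modeled as a per-tick function instead of the node's Param::update.

```rust
// Simplified model (hypothetical names) of ConstantSourceNode::process for
// one render quantum. Ticks are frame indices within the block.
const FRAMES_PER_BLOCK: usize = 128;

/// Fills one block: silence outside [start_at, stop_at], otherwise the
/// offset value, which `offset_at` may change per tick (automation).
fn process_block<F: Fn(usize) -> f32>(
    start_at: usize,
    stop_at: usize,
    offset_at: F,
) -> [f32; FRAMES_PER_BLOCK] {
    let mut block = [0.0f32; FRAMES_PER_BLOCK]; // explicit silence
    for (tick, sample) in block.iter_mut().enumerate() {
        if tick < start_at {
            continue;
        } else if tick > stop_at {
            break;
        }
        *sample = offset_at(tick);
    }
    block
}

fn main() {
    // Start at frame 10, stop after frame 20, constant offset 0.5
    let block = process_block(10, 20, |_| 0.5);
    println!("{:?}", &block[8..23]);

    // A per-tick ramp, as automation would produce
    let ramp = process_block(0, FRAMES_PER_BLOCK - 1, |t| t as f32 / 127.0);
    println!("first {} last {}", ramp[0], ramp[FRAMES_PER_BLOCK - 1]);
}
```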

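We also created constant_source.rs in the examples/ directory to exercise ConstantSourceNode. The example routes an oscillator through a GainNode, connects the constant source's output to the gain parameter, and ramps the offset up and down so that the volume rises and falls: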

```rust
extern crate servo_media;

use servo_media::audio::constant_source_node::ConstantSourceNodeOptions;
use servo_media::audio::gain_node::GainNodeOptions;
use servo_media::audio::node::{AudioNodeInit, AudioNodeMessage, AudioScheduledSourceNodeMessage};
use servo_media::audio::param::{ParamType, RampKind, UserAutomationEvent};
use servo_media::ServoMedia;
use std::sync::Arc;
use std::{thread, time};

fn run_example(servo_media: Arc<ServoMedia>) {
    let context = servo_media.create_audio_context(Default::default());
    let dest = context.dest_node();

    // Constant source whose offset starts at 0 and is automated below
    let mut cs_options = ConstantSourceNodeOptions::default();
    cs_options.offset = 0.;
    let cs = context.create_node(AudioNodeInit::ConstantSourceNode(cs_options), Default::default());

    // Quiet gain stage between the oscillator and the destination
    let mut gain_options = GainNodeOptions::default();
    gain_options.gain = 0.1;
    let gain = context.create_node(AudioNodeInit::GainNode(gain_options), Default::default());

    let osc = context.create_node(AudioNodeInit::OscillatorNode(Default::default()), Default::default());

    // osc -> gain -> dest, and the constant source drives the gain AudioParam
    context.connect_ports(osc.output(0), gain.input(0));
    context.connect_ports(cs.output(0), gain.param(ParamType::Gain));
    context.connect_ports(gain.output(0), dest.input(0));

    let _ = context.resume();

    // Only the scheduled sources (oscillator and constant source) need Start
    for &node in &[osc, cs] {
        context.message_node(
            node,
            AudioNodeMessage::AudioScheduledSourceNode(AudioScheduledSourceNodeMessage::Start(0.)),
        );
    }

    // Ramp the offset linearly: (target value, end time in seconds)
    for &(value, end_time) in &[(1., 1.5), (0.1, 3.0), (1., 4.5), (0.1, 6.0)] {
        context.message_node(
            cs,
            AudioNodeMessage::SetParam(
                ParamType::Offset,
                UserAutomationEvent::RampToValueAtTime(RampKind::Linear, value, end_time),
            ),
        );
    }

    thread::sleep(time::Duration::from_millis(9000));
    let _ = context.close();
}

fn main() {
    if let Ok(servo_media) = ServoMedia::get() {
        run_example(servo_media);
    } else {
        unreachable!();
    }
}
```
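What the example should sound like can be worked out ahead of time. Per the Web Audio spec, an AudioParam's computed value is its intrinsic value plus the sum of any audio connected to it, and a linear ramp interpolates from the previous event to its own (time, value). This std-only sketch assumes servo-media follows that model and that the first ramp starts from the initial offset 0.0 at t = 0; the names RAMPS, offset_at and effective_gain are hypothetical.

```rust
// Hypothetical model of what the constant_source example hears: the gain
// AudioParam's intrinsic value (0.1) plus the ConstantSourceNode output
// connected to it, where the offset follows linear ramps.

/// (time in seconds, target value) pairs, in the order the example schedules them.
const RAMPS: [(f64, f64); 4] = [(1.5, 1.0), (3.0, 0.1), (4.5, 1.0), (6.0, 0.1)];

/// Offset value at time `t`, assuming it starts at 0.0 at t = 0 and each ramp
/// runs linearly from the previous event to its own (time, value).
fn offset_at(t: f64) -> f64 {
    let (mut t0, mut v0) = (0.0, 0.0);
    for &(t1, v1) in RAMPS.iter() {
        if t < t1 {
            // v(t) = V0 + (V1 - V0) * (t - T0) / (T1 - T0)
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0);
        }
        t0 = t1;
        v0 = v1;
    }
    v0 // after the last ramp the value holds
}

fn effective_gain(t: f64) -> f64 {
    let intrinsic = 0.1; // gain_options.gain in the example
    intrinsic + offset_at(t)
}

fn main() {
    for &t in &[0.0, 0.75, 1.5, 2.25, 6.0, 8.0] {
        println!("t = {:>4}s  gain = {:.3}", t, effective_gain(t));
    }
}
```

Under these assumptions the gain swings between 0.1 and 1.1 twice and settles at 0.2 after 6 seconds, which is why the tone audibly swells and fades.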

Build

12/07- A Servo team member (@ferjm: Fernando Jimenez Moreno) merged the pull request (#164)

12/06- Completed the changes requested in the review of the Constant Source Node example. The updated pull request can be accessed here

11/9- Committed the formatting changes requested in the review. The changes to the formatting can be seen here

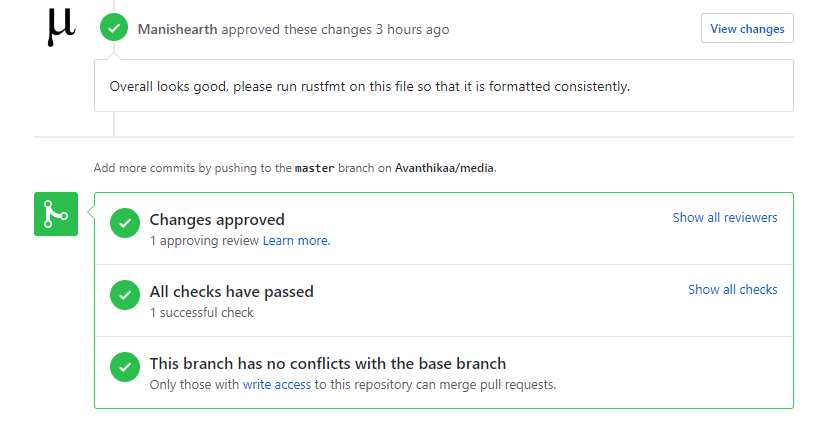

11/8- A Servo team member (@Manishearth) approved the pull request and advised us to make some minor formatting changes.

11/8- Submitted a pull request which contained the new oscillator.rs file having the examples of implementation of the different OscillatorType or waveforms

Our pull request has been merged (see the 12/07 entry above), so the changes are available in the upstream repository.

Repository: https://github.com/servo/media

Clone command using git:

git clone https://github.com/servo/media.git

Once you have cloned the repository, follow the steps below to build.

Note that the build may take up to 45 minutes, depending on your system configuration.

Installing dependencies

Installing Rust

The initial step is to install Rust. You can do so by installing Rustup, version 1.8.0 or more recent.

1. To install on Windows, download and run rustup-init.exe then follow the onscreen instructions.

2. To install on other systems, run:

curl https://sh.rustup.rs -sSf | sh

For other installation methods, click here

Local build instructions for Debian-based Linuxes are given below:

1. Run ./mach bootstrap. If this fails, run the commands below:

```shell
sudo apt install git curl autoconf libx11-dev \
    libfreetype6-dev libgl1-mesa-dri libglib2.0-dev xorg-dev \
    gperf g++ build-essential cmake virtualenv python-pip \
    libssl1.0-dev libbz2-dev libosmesa6-dev libxmu6 libxmu-dev \
    libglu1-mesa-dev libgles2-mesa-dev libegl1-mesa-dev libdbus-1-dev \
    libharfbuzz-dev ccache clang \
    libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libgstreamer-plugins-bad1.0-dev autoconf2.13
```

If you are using a version prior to Ubuntu 17.04 or Debian Sid, replace libssl1.0-dev with libssl-dev. Additionally, you'll need a local copy of GStreamer with a version later than 1.12. You can place it in support/linux/gstreamer/gstreamer, or run ./mach bootstrap-gstreamer to set it up.

If you are using Ubuntu 16.04 run export HARFBUZZ_SYS_NO_PKG_CONFIG=1 before building to avoid an error with harfbuzz.

If you are on Ubuntu 14.04 and encounter errors involving libcheese when installing these dependencies, see #6158 for a workaround. You may also need to install gcc 4.9, clang 4.0, and cmake 3.2.

If virtualenv does not exist, try python-virtualenv.

Local build instructions for Windows environments are given below:

1. Install Python for Windows (https://www.python.org/downloads/release/python-2714/).

The Windows x86-64 MSI installer is fine. During installation, enable the "Add python.exe to Path" feature.

2. Install virtualenv.

In a normal Windows Shell (cmd.exe or "Command Prompt" from the start menu), do:

pip install virtualenv

If this does not work, you may need to reboot for the changed PATH settings (by the python installer) to take effect.

3. Install Git for Windows (https://git-scm.com/download/win). DO allow it to add git.exe to the PATH (default settings for the installer are fine).

4. Install Visual Studio Community 2017 (https://www.visualstudio.com/vs/community/).

You MUST add "Visual C++" to the list of installed components; it is not on by default. Visual Studio 2017 MUST be installed to the default location, or mach.bat will not find it.

If you encounter errors with the environment above, try the following workaround:

Download and install Build Tools for Visual Studio 2017

Install Python 2.7 (x86-64) and virtualenv

5. Run mach.bat build -d to build

If you have trouble with the x64 prompt type that mach.bat sets by default, you may need to choose and launch the prompt type manually (for example, x86_x64 Cross Tools Command Prompt for VS 2017 from the Windows menu) and then run:

cd to/the/path/servo

python mach build -d

Build instructions for all other environments are available here

Building locally

Once you have installed the dependencies, follow these steps to build Servo.

To build Servo in development mode (useful for development, but the resulting binary is very slow):

git clone https://github.com/servo/servo

cd servo

./mach build --dev

./mach run tests/html/about-mozilla.html

Or on Windows MSVC, in a normal Command Prompt (cmd.exe):

git clone https://github.com/servo/servo

cd servo

mach.bat build --dev

For benchmarking, performance testing, or real-world use, add the --release flag to create an optimized build:

./mach build --release

./mach run --release tests/html/about-mozilla.html

Note: mach build will build both servo and libsimpleservo. To make compilation a bit faster, it's possible to only compile the servo binary: ./mach build --dev -p servo.

Running a build

You can quickly verify that the Servo build works by running:

./mach run http://www.google.com

This opens a browser instance rendering the Google homepage.

This should work on any environment with rendering support: Linux, Windows, macOS and Android.

Test Plan

Testing Approach

Since we have implemented the processing algorithm for the different oscillator types, running the oscillator example lets the user hear each generated waveform (Sine, Square, Sawtooth, Triangle).
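Listening is the primary check in this plan. As a hypothetical complement (not part of the project), the oscillator's frequency can also be checked numerically: render one second of a sine with the same wrapped phase accumulator the node uses, then count rising zero crossings, which should come out within one of the requested frequency. The functions render_sine and rising_zero_crossings are illustrative names only.

```rust
// Hypothetical automated check (not part of the project): render a sine
// with a wrapped phase accumulator, then estimate its frequency from the
// rising zero crossings.
use std::f64::consts::PI;

fn render_sine(freq: f64, sample_rate: f64, len: usize) -> Vec<f32> {
    let two_pi = 2.0 * PI;
    let step = two_pi * freq / sample_rate;
    let mut phase = 0.0f64;
    let mut out = Vec::with_capacity(len);
    for _ in 0..len {
        out.push(phase.sin() as f32);
        phase += step;
        if phase >= two_pi {
            phase -= two_pi;
        }
    }
    out
}

/// Counts transitions from a negative sample to a non-negative one.
fn rising_zero_crossings(samples: &[f32]) -> usize {
    samples.windows(2).filter(|w| w[0] < 0.0 && w[1] >= 0.0).count()
}

fn main() {
    let sample_rate = 44_100.0;
    // One second of a 440 Hz tone: expect roughly 440 rising crossings
    let samples = render_sine(440.0, sample_rate, sample_rate as usize);
    let crossings = rising_zero_crossings(&samples);
    println!("estimated frequency: {} Hz", crossings);
}
```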

Testing Steps

Please complete the steps in the Build and Running a build sections before testing.

1) Make sure you are in this directory: target/debug

2) Run the command ./oscillator

3) A different sound should play for each oscillator type (waveform), one after another at fixed intervals.

4) Run the command ./constant_source. An oscillator tone should play whose volume rises and falls as the offset is ramped.


References

https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API

https://webaudio.github.io/web-audio-api/