CSC/ECE 517 Spring 2023 - NTNX-2. Support provisioning mySQL databases via NDB Kubernetes Operator

Introduction

Nutanix Database Service

Nutanix Database Service is a unique hybrid cloud-based database solution that offers support for several popular database management systems including Microsoft SQL Server, Oracle Database, PostgreSQL, MongoDB, and MySQL. With Nutanix Database Service, you can manage hundreds to thousands of databases with ease. It simplifies tasks like provisioning new databases, automating routine administrative tasks such as backups and patches, and selecting the right operating systems, database versions, and extensions to meet your specific application and compliance requirements.

Nutanix Database Service Operator

The Nutanix Database Service (NDB) Operator is a tool that automates and simplifies database administration, provisioning, and life-cycle management on Kubernetes. With the NDB Operator, developers can provision popular database management systems such as PostgreSQL, MySQL, and MongoDB directly from their K8s cluster. This saves significant time, since the same work could take days to weeks if done manually. The NDB Operator is open source, can be used from your preferred Kubernetes platform, and gives you access to the full database life-cycle management that NDB provides.

Problem Statement

Our team's first task was to become familiar with the operator's codebase, which is written in Go. We also had to learn basic cluster administration with Kubernetes and the Go Operator SDK, which is used to develop custom Kubernetes operators. Our goal was to enhance the Nutanix Database Service (NDB) Operator, which at the time supported provisioning only PostgreSQL databases, so that it could also provision and de-provision MySQL databases. This required modifying the existing provisioning logic and refactoring the operator's codebase to support the new database type. We also had to make sure that the operator's code was tested and that the provisioning and de-provisioning steps were tested end-to-end.

Tasks Implemented in Project 3

We set up our own Kubernetes cluster using Docker Desktop and cloned the ndb-operator repository to make changes to the code.

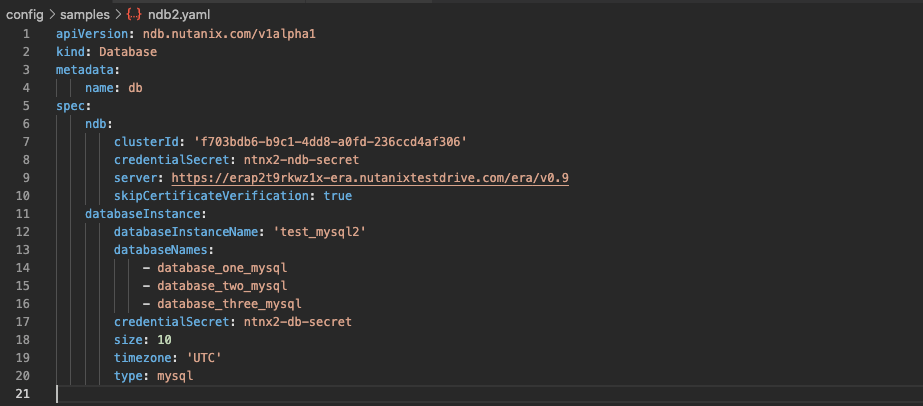

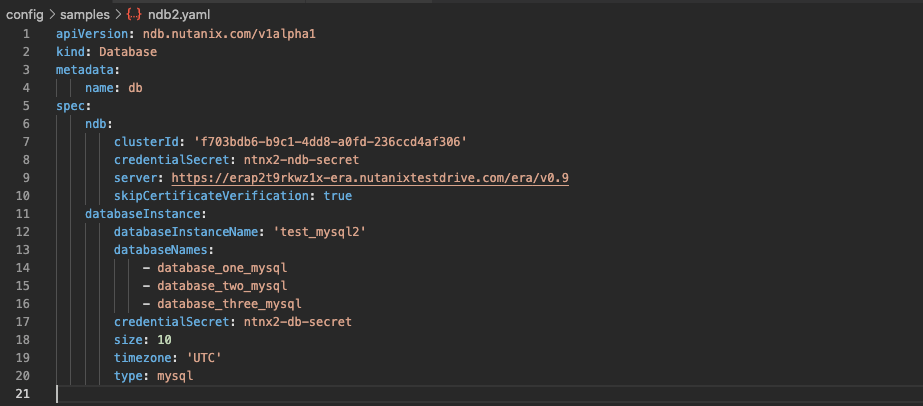

We set up the manifest file used to generate the provisioning request. It specifies the Nutanix Database Service server to use, along with parameters such as the database instance name, database names, credential secret (which is applied using a separate manifest file), size, time zone, and the type of database to provision.

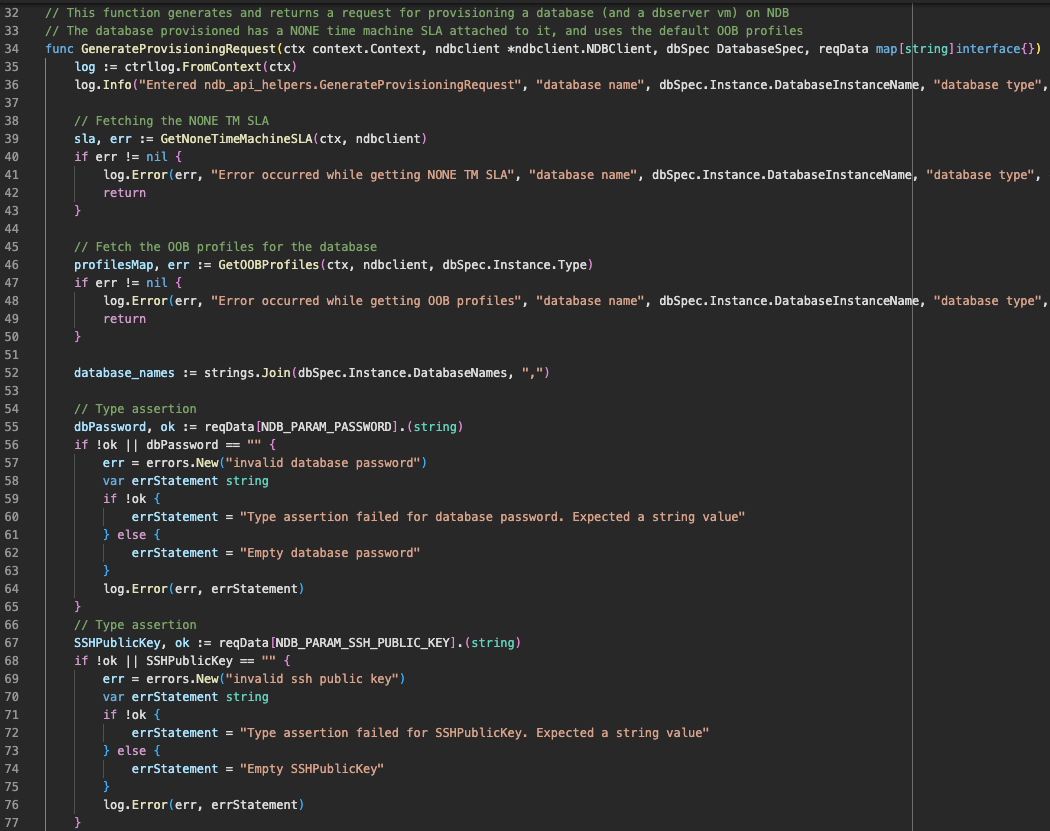

The GenerateProvisioningRequest function takes the following arguments: a context, an instance of a struct called NDBClient, an instance of a struct called DatabaseSpec, and a map with string keys and interface{} values. The function returns a pointer to an instance of a struct called DatabaseProvisionRequest and an error.

Within the function, the Time Machine Service Level Agreement (SLA) and the OOB profiles are fetched from NDB through the client. If either fetch fails, the function logs the error and returns.

The function then joins the database instance names into a single string and performs type assertions on the password and SSH public key contained in the map argument. If either assertion fails (i.e., the value is not a string, or it is empty), the function returns an error.

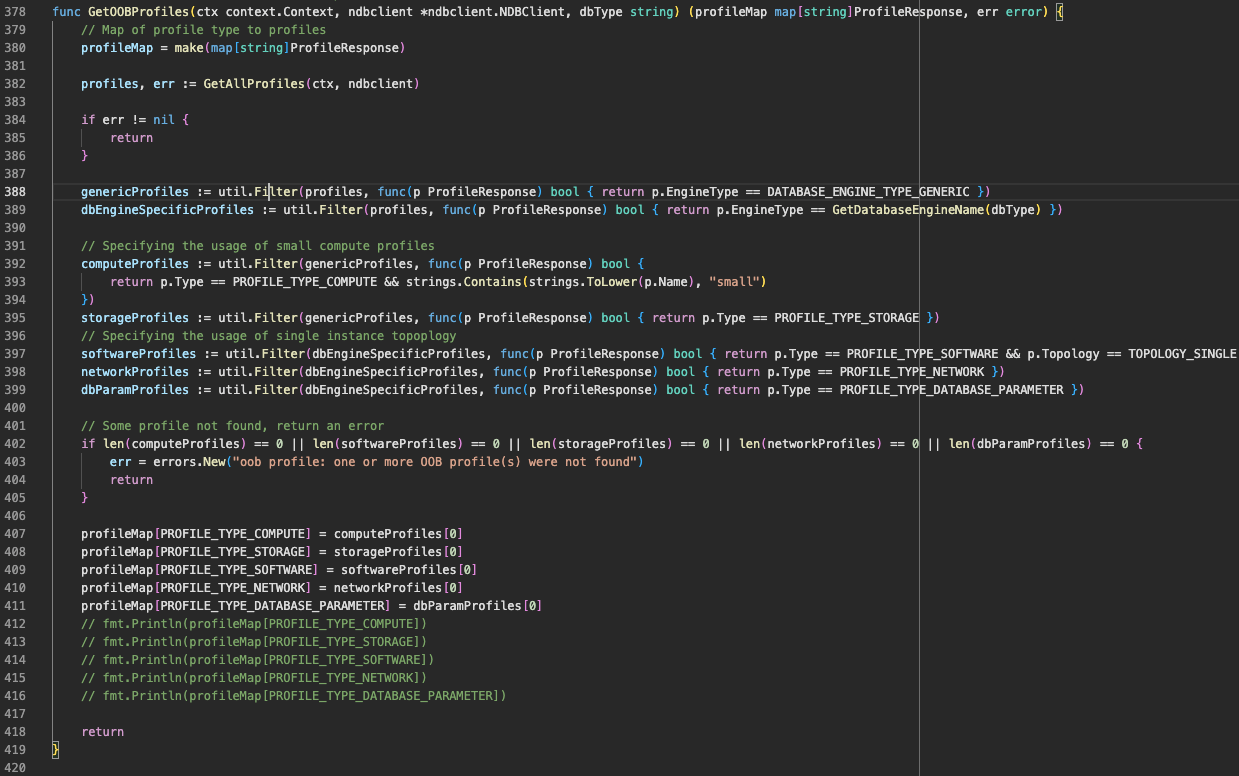
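The validation step described above can be sketched in isolation. This is a minimal illustration, not the operator's code: the `validateRequest` helper, the simplified `DatabaseSpec`, and the map keys `password` and `ssh_public_key` are assumptions made for the example.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// DatabaseSpec is a simplified, hypothetical stand-in for the operator's spec type.
type DatabaseSpec struct {
	Type          string
	DatabaseNames []string
}

// validateRequest joins the database names and checks that the password and
// SSH public key in reqData are non-empty strings. The map keys are assumed.
func validateRequest(spec DatabaseSpec, reqData map[string]interface{}) (string, string, string, error) {
	names := strings.Join(spec.DatabaseNames, ",")

	// A failed type assertion sets ok to false; empty strings are rejected too.
	password, ok := reqData["password"].(string)
	if !ok || password == "" {
		return "", "", "", errors.New("invalid database password")
	}
	sshKey, ok := reqData["ssh_public_key"].(string)
	if !ok || sshKey == "" {
		return "", "", "", errors.New("invalid ssh public key")
	}
	return names, password, sshKey, nil
}

func main() {
	spec := DatabaseSpec{Type: "mysql", DatabaseNames: []string{"db1", "db2"}}

	names, _, _, err := validateRequest(spec, map[string]interface{}{
		"password":       "secret",
		"ssh_public_key": "ssh-rsa AAAA",
	})
	fmt.Println(names, err) // db1,db2 <nil>

	// A non-string password fails the type assertion.
	_, _, _, err = validateRequest(spec, map[string]interface{}{"password": 42})
	fmt.Println(err) // invalid database password
}
```

Using the two-value form of the type assertion avoids a panic when the map holds a value of an unexpected type, so the caller always gets an ordinary error back.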

The GetOOBProfiles function takes three parameters (ctx, ndbclient, and dbType) and returns a map of profiles and an error. Inside the function, the map of profiles is initialized and the GetAllProfiles function is called.

The function then uses the "util.Filter" function to keep only the profiles whose engine type is either generic or specific to the requested database engine. It filters these profiles again by profile type (COMPUTE, STORAGE, SOFTWARE, NETWORK, and DATABASE_PARAMETER) using the same "util.Filter" function.

The function checks whether all the required profiles are present. If any required profile is missing, it returns an error. Otherwise, it stores the profiles in the map and returns it.

In short, this function fetches the OOB profiles for the given database engine type and populates a map of profiles.

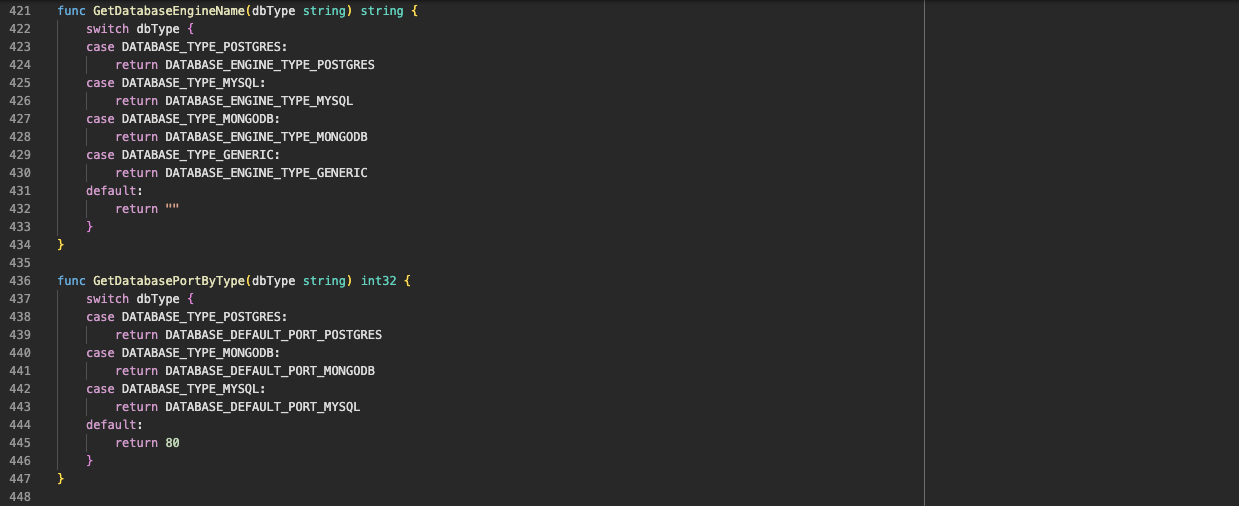
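The two-stage filtering can be illustrated with a small self-contained sketch. The `Profile` fields, the `GENERIC` engine label, the engine name `mysql_database`, and the `pickProfiles` helper are assumptions for illustration; the generic `Filter` here only mimics what a helper like util.Filter would do and requires Go 1.18 or later.

```go
package main

import "fmt"

// Profile is a simplified, hypothetical version of an NDB profile record.
type Profile struct {
	Name       string
	Type       string // e.g. "COMPUTE", "SOFTWARE"
	EngineType string // e.g. "GENERIC", "mysql_database" (assumed labels)
}

// Filter returns the elements of items for which keep reports true.
func Filter[T any](items []T, keep func(T) bool) []T {
	var out []T
	for _, it := range items {
		if keep(it) {
			out = append(out, it)
		}
	}
	return out
}

var requiredTypes = []string{"COMPUTE", "STORAGE", "SOFTWARE", "NETWORK", "DATABASE_PARAMETER"}

// pickProfiles keeps generic and engine-specific profiles, then selects one
// profile per required type, failing if any required type is missing.
func pickProfiles(all []Profile, engine string) (map[string]Profile, error) {
	candidates := Filter(all, func(p Profile) bool {
		return p.EngineType == "GENERIC" || p.EngineType == engine
	})

	profiles := make(map[string]Profile)
	for _, t := range requiredTypes {
		matches := Filter(candidates, func(p Profile) bool { return p.Type == t })
		if len(matches) == 0 {
			return nil, fmt.Errorf("no %s profile found for engine %s", t, engine)
		}
		profiles[t] = matches[0]
	}
	return profiles, nil
}

func main() {
	all := []Profile{
		{"small", "COMPUTE", "GENERIC"},
		{"default-storage", "STORAGE", "GENERIC"},
		{"mysql-8", "SOFTWARE", "mysql_database"},
		{"default-net", "NETWORK", "GENERIC"},
		{"mysql-params", "DATABASE_PARAMETER", "mysql_database"},
	}
	profiles, err := pickProfiles(all, "mysql_database")
	fmt.Println(len(profiles), err)

	// No SOFTWARE profile exists for this engine, so an error is returned.
	_, err = pickProfiles(all, "postgres_database")
	fmt.Println(err)
}
```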

The GetDatabaseEngineName and GetDatabasePortByType functions return the database engine name and the database port, respectively, based on the database type defined in the manifest file.

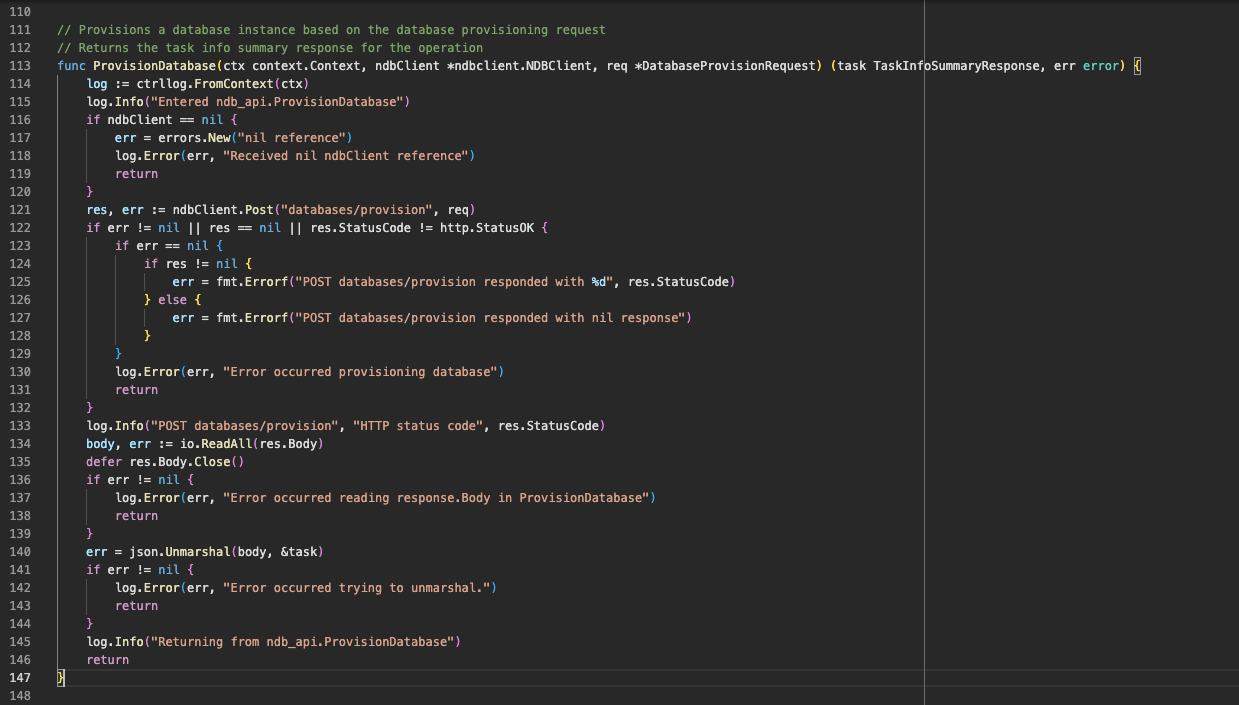

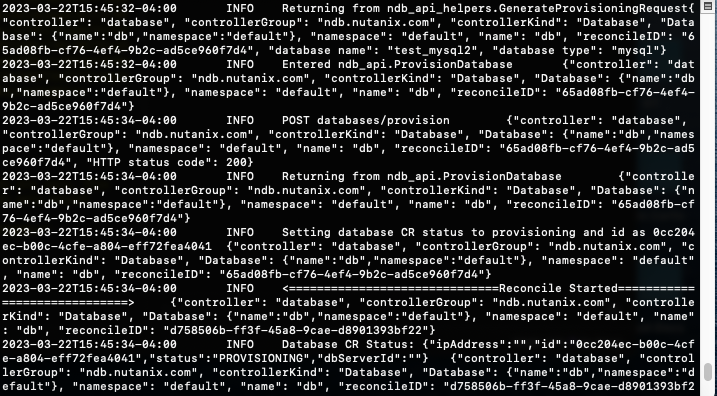
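A lookup of this kind might look like the sketch below. The function names `engineNameFor` and `defaultPortFor`, the manifest type strings, and the engine name strings are hypothetical stand-ins; only the port numbers are the well-known defaults for each database.

```go
package main

import "fmt"

// engineNameFor maps a manifest database type to an engine name.
// The engine name strings are assumptions for illustration.
func engineNameFor(dbType string) string {
	switch dbType {
	case "postgres":
		return "postgres_database"
	case "mysql":
		return "mysql_database"
	case "mongodb":
		return "mongodb_database"
	default:
		return ""
	}
}

// defaultPortFor returns the conventional default port for a database type,
// or -1 when the type is not recognized.
func defaultPortFor(dbType string) int {
	switch dbType {
	case "postgres":
		return 5432
	case "mysql":
		return 3306
	case "mongodb":
		return 27017
	default:
		return -1
	}
}

func main() {
	fmt.Println(engineNameFor("mysql"), defaultPortFor("mysql")) // mysql_database 3306
}
```

Keeping both lookups keyed on the same type string means that supporting a new database only requires adding one case to each switch.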

The ProvisionDatabase function sends a POST request to the "databases/provision" endpoint of the Nutanix Database Service using the ndbClient's Post method. It checks for and logs any error that occurs during the POST request. If the request succeeds with a status code of 200 (http.StatusOK), the HTTP response body is read and unmarshaled into the task object.

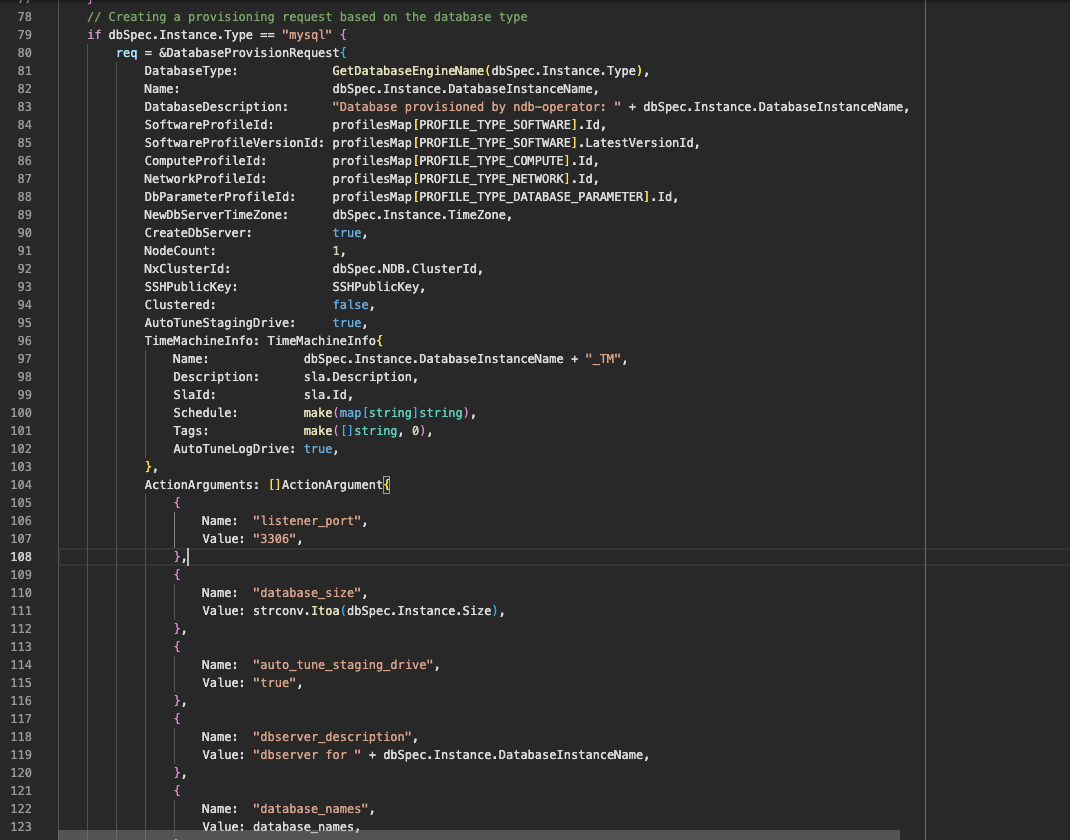

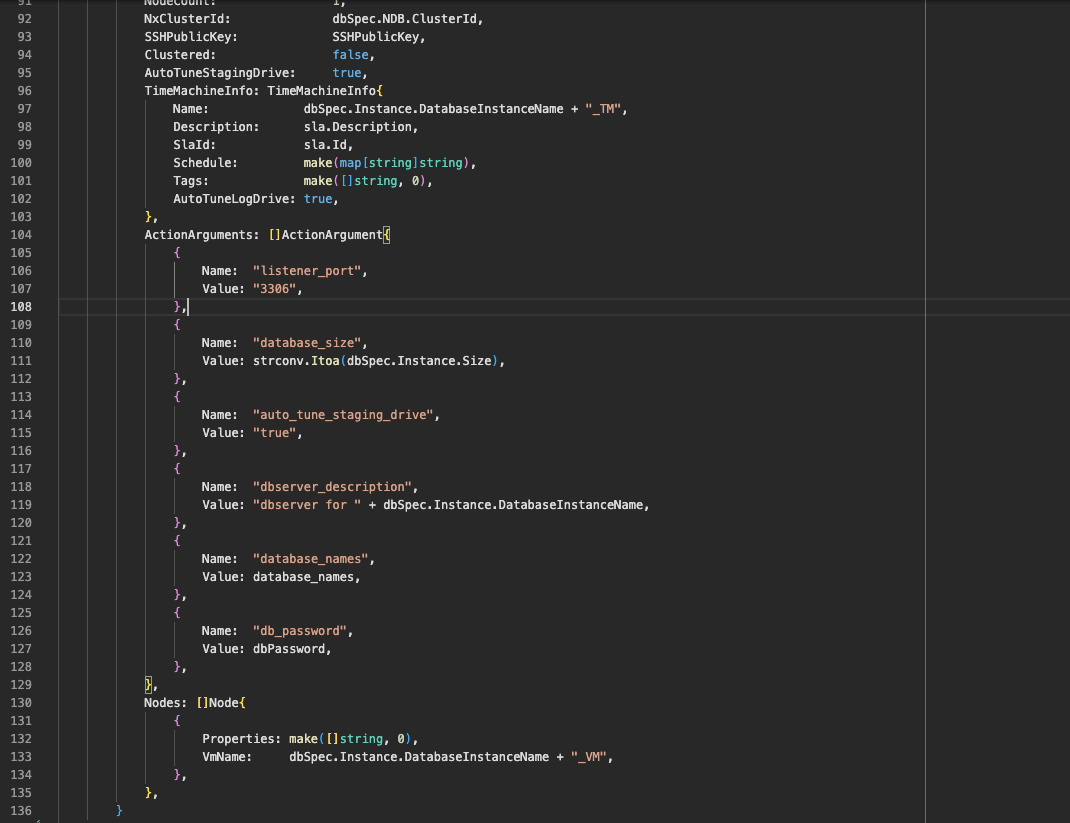

To provision a MySQL database, the port had to be changed.

The ActionArguments were changed as well.

Design Patterns

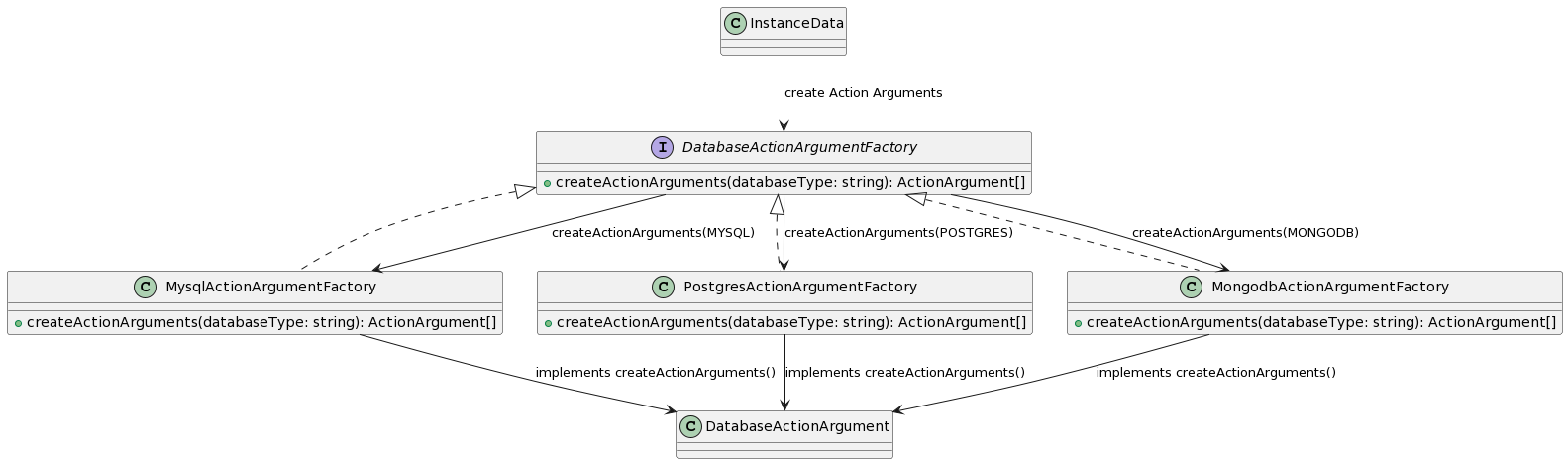

One design pattern that can be used to improve this code is the Factory design pattern, which defines an interface for creating an object but lets subclasses decide which class to instantiate. Here, a factory interface can be defined so that the database provision request is generated based on the instance type. In addition, the DRY principle has been followed: profile maps and helper functions keyed on the database type give us a single method to provision databases, instead of separate control statements that generate provisioning requests for each database instance type.

Design Plan for Final Project

We plan to implement the factory design pattern to further refine and DRY out our code. We will introduce a DatabaseActionArgumentFactory interface that handles the creation of the different database action arguments based on the database type.

The DatabaseActionArgumentFactory interface will have a single method, CreateActionArguments, which creates the action arguments. This method will take a string representing the database type and return the action arguments for that type.

The interface will be implemented by a separate factory struct for each database type. These factory structs will be used to create the specific database action arguments for each database type.

By using this approach, the code will be more modular and easier to maintain in the future as new database types can be added by simply adding a new factory struct and implementing the CreateActionArguments method for that type. Additionally, the use of an interface will allow for easier testing and mocking of the database action argument creation logic.
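One possible shape for this design is sketched below. It is a reading of the plan, not the final implementation: the `ActionArgument` fields, the `listener_port` argument, and the `NewActionArgumentFactory` selector are illustrative assumptions. In this variant the database type string is consumed by the selector, and each factory struct then creates its own arguments.

```go
package main

import "fmt"

// ActionArgument is a simplified, hypothetical name/value pair.
type ActionArgument struct {
	Name  string
	Value string
}

// DatabaseActionArgumentFactory creates the action arguments for one database type.
type DatabaseActionArgumentFactory interface {
	CreateActionArguments() []ActionArgument
}

// MySQLActionArgumentFactory builds arguments for MySQL (illustrative values).
type MySQLActionArgumentFactory struct{}

func (MySQLActionArgumentFactory) CreateActionArguments() []ActionArgument {
	return []ActionArgument{{Name: "listener_port", Value: "3306"}}
}

// PostgresActionArgumentFactory builds arguments for PostgreSQL (illustrative values).
type PostgresActionArgumentFactory struct{}

func (PostgresActionArgumentFactory) CreateActionArguments() []ActionArgument {
	return []ActionArgument{{Name: "listener_port", Value: "5432"}}
}

// NewActionArgumentFactory selects the factory for the given database type.
func NewActionArgumentFactory(dbType string) (DatabaseActionArgumentFactory, error) {
	switch dbType {
	case "mysql":
		return MySQLActionArgumentFactory{}, nil
	case "postgres":
		return PostgresActionArgumentFactory{}, nil
	default:
		return nil, fmt.Errorf("unsupported database type: %q", dbType)
	}
}

func main() {
	factory, err := NewActionArgumentFactory("mysql")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(factory.CreateActionArguments()) // [{listener_port 3306}]
}
```

Adding a new database type then means adding one struct with a CreateActionArguments method and one case in the selector, without touching the request-building code.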

Class diagram:

Testing Plan for Final Project

To test the implementation of the DatabaseActionArgumentFactory, we will be writing unit tests that verify that the factory is correctly creating the appropriate action arguments for each database type.

The steps we will follow for testing are as follows:

- Create test cases for each of the supported database types (MySQL, Postgres, MongoDB, etc.)

- For each test case, create a mock of the database instance with the appropriate type.

- Call the CreateActionArguments method on the DatabaseActionArgumentFactory with the mock database instance as input.

- Verify that the output is a list of the correct action arguments for the corresponding database type.

- Repeat the above steps for all the supported database types.

By following these steps, we will be making sure that the DatabaseActionArgumentFactory is correct and that it is creating the correct action arguments for each supported database type.

We will also test that the factory handles unsupported database types by passing one in and verifying that the output is nil or an empty list.
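The test cases outlined above lend themselves to a table-driven style. The sketch below is self-contained and uses a hypothetical `createActionArguments` stand-in for the factory under test, with a plain `checkActionArguments` function instead of the `testing` package; in the repository, the same table would more likely sit in a `_test.go` file and be run with `go test`.

```go
package main

import "fmt"

// ActionArgument is a simplified, hypothetical name/value pair.
type ActionArgument struct{ Name, Value string }

// createActionArguments is a hypothetical stand-in for the factory under test.
// It returns nil for unsupported database types.
func createActionArguments(dbType string) []ActionArgument {
	ports := map[string]string{"mysql": "3306", "postgres": "5432", "mongodb": "27017"}
	port, ok := ports[dbType]
	if !ok {
		return nil
	}
	return []ActionArgument{{Name: "listener_port", Value: port}}
}

// checkActionArguments runs the table of cases and returns the first mismatch.
func checkActionArguments() error {
	cases := []struct {
		dbType    string
		wantPort  string
		wantEmpty bool
	}{
		{dbType: "mysql", wantPort: "3306"},
		{dbType: "postgres", wantPort: "5432"},
		{dbType: "mongodb", wantPort: "27017"},
		{dbType: "unsupported", wantEmpty: true},
	}
	for _, tc := range cases {
		got := createActionArguments(tc.dbType)
		if tc.wantEmpty {
			if len(got) != 0 {
				return fmt.Errorf("%s: expected no arguments, got %v", tc.dbType, got)
			}
			continue
		}
		if len(got) != 1 || got[0].Value != tc.wantPort {
			return fmt.Errorf("%s: expected port %s, got %v", tc.dbType, tc.wantPort, got)
		}
	}
	return nil
}

func main() {
	if err := checkActionArguments(); err != nil {
		fmt.Println("FAIL:", err)
		return
	}
	fmt.Println("all cases passed")
}
```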

The system will then be tested by running the operator on a local Kubernetes cluster and then making provisioning requests to the Nutanix Database Service test drive cluster that was assigned.

This is similar to what we had done previously to provision a database using the steps given below:

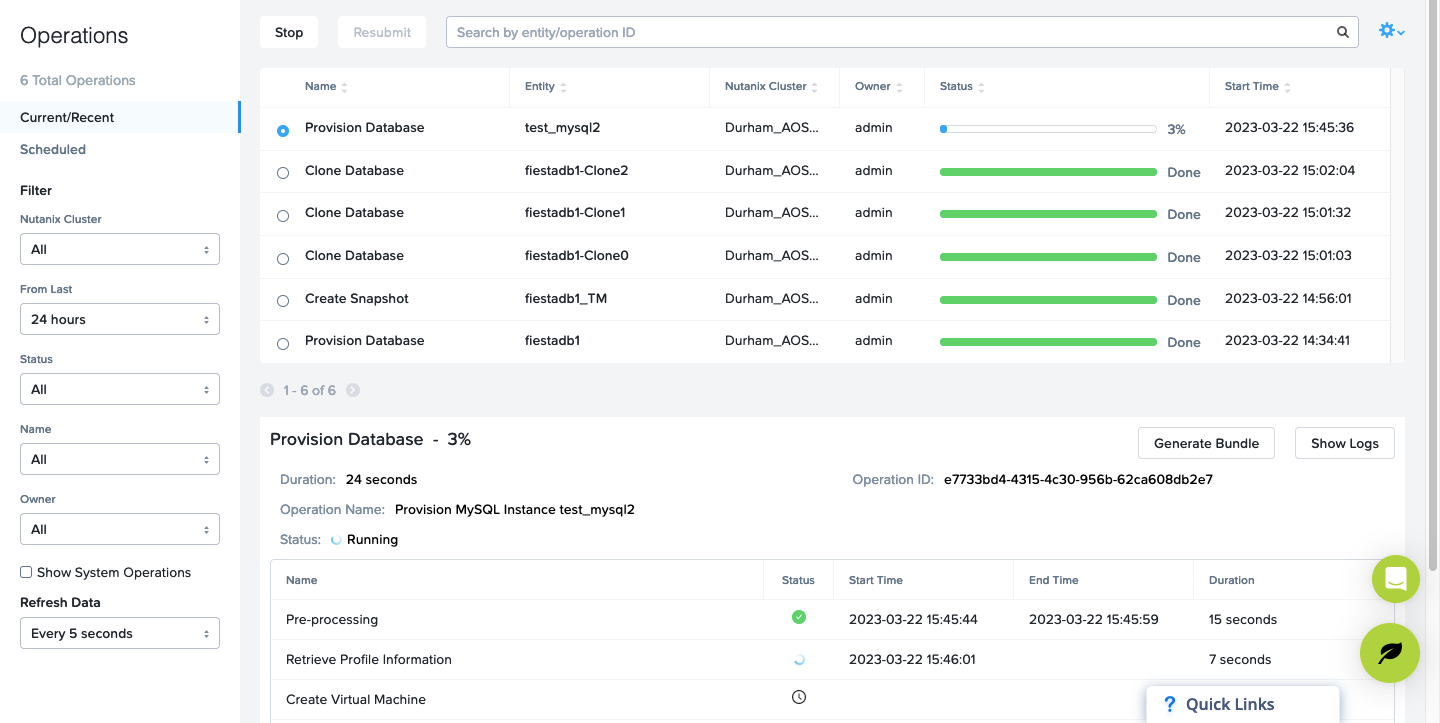

Step 1: First, the manifest for provisioning a MySQL database was configured.

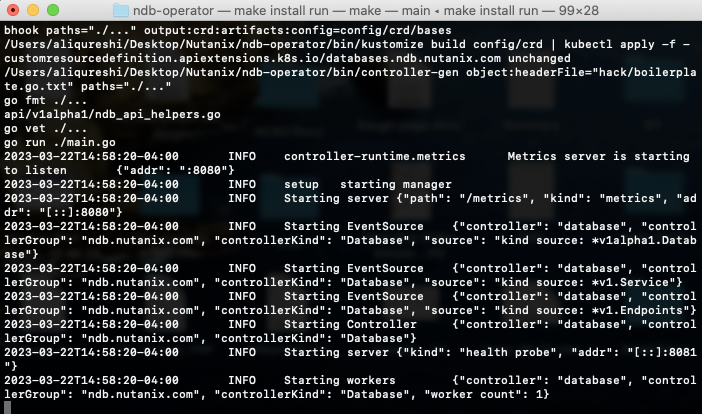

Step 2: The NDB operator was then run on a local cluster to make sure that the provisioning request was being completed.

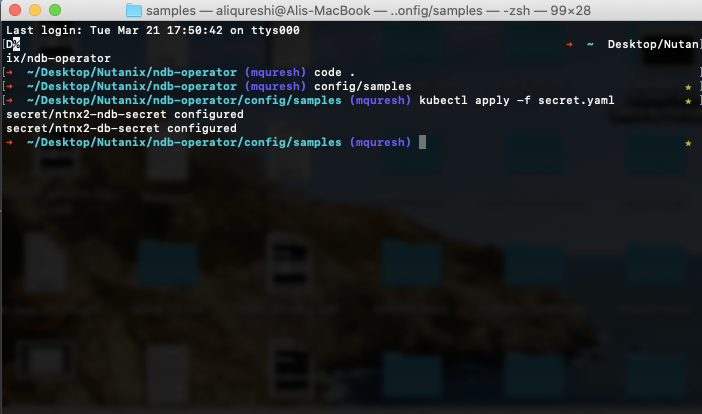

Step 3: The secrets were configured.

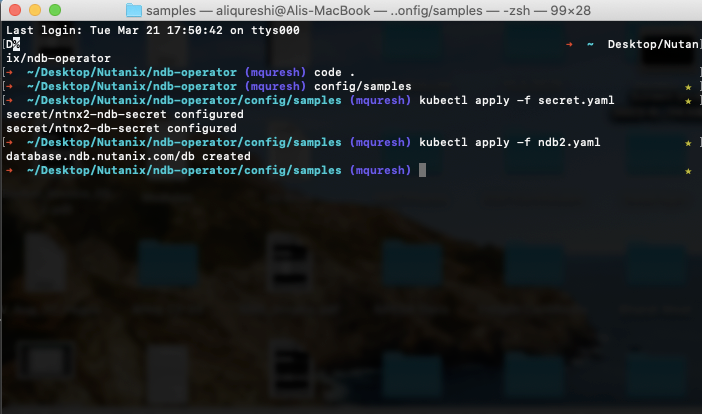

Step 4: The configuration for the database was applied.

The operator received a successful response.

We then checked the test drive cluster for the status of the provisioning operation.

References

- NDB Operator Document - https://docs.google.com/document/d/1-VykKyIeky3n4JciIIrNgirk-Cn4pDT1behc9Yl8Nxk/

- NDB Operator GitHub Repository - https://github.com/nutanix-cloud-native/ndb-operator

- Go Documentation - https://go.dev/doc/

- Go Operator SDK Document - https://sdk.operatorframework.io/docs/building-operators/golang/tutorial/

- Overview of Kubernetes - https://kubernetes.io/docs/concepts/overview/

GitHub

Repo (Public): https://github.com/qureshi-ali/ndb-operator

Pull Request: https://github.com/nutanix-cloud-native/ndb-operator/pull/75

Mentors

- Prof. Edward F. Gehringer

- Krunal Jhaveri

- Manav Rajvanshi

- Krishna Saurabh Vankadaru

Contributors

- Muhammad Ali Qureshi (mquresh)

- Prasad Vithal Kamath (pkamath3)

- Boscosylvester John Chittilapilly (bchitti)