CSC/ECE 517 Spring 2023 - NTNX-4. Extend NDB operator to provision PostgreSQL on AWS

NTNX-4. Extend Nutanix Database Service Kubernetes operator to provision Postgres Single Instance databases on AWS EC2

Note to the reviewer

Design Doc Link: https://docs.google.com/document/d/1Drglvj0b487qIACeIW1jYG83uRtJtl7fIchM6B1H9Bk/edit?usp=sharing This is the document that we are actively using. All the details in the design document are also written here, more clearly.

A major part of the logic can only be written once we have the keys of an EC2 instance that we can connect to the Nutanix Database Service. This differs from the other NTNX teams because we need to provision the database on AWS, so we cannot use the Test Nutanix endpoint to create the clusterID or the Secret's name. We have added a Note in the Implementation section to identify the parts that fall under this category, and have also collected this information under the Future Implementation section.

UPDATE: We have now received access and have added as much code as possible within the stipulated time. We have added a 'Resubmission progress' section describing the changes made since then. We have also updated the Future Implementation section accordingly.

Resubmission progress

- We have now received access and have added some code since then. We analyzed the JSON request that must be sent to provision a database and created a matching struct in database_types.go.

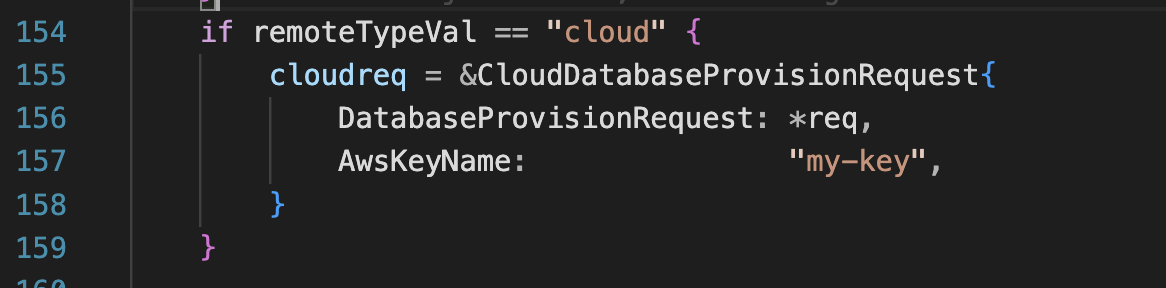

- We followed the DRY principle by reusing the fields of an existing type instead of redeclaring them: CloudDatabaseProvisionRequest embeds DatabaseProvisionRequest (Go struct embedding, which plays the role of inheritance here).

- Added logic to create a CloudDatabaseProvisionRequest object when remoteType is 'cloud', because the cloud provisioning request requires extra fields.

- The remoteType field is now passed as a variable so that it is available in the controller helper logic.

- We fixed the test cases after adding this logic.

- We have added a Google Drive link to a video of the tests running.

- Updated the implementation section with what is left.

Problem Statement

Nutanix Database Service is the only hybrid cloud database-as-a-service for Microsoft SQL Server, Oracle Database, PostgreSQL, MongoDB, and MySQL. Currently, the NDB operator can only provision a database on the Nutanix Cloud Infrastructure (on-prem). This project aims to extend the Nutanix Database Service Kubernetes operator to provision Postgres Single Instance databases in the cloud, specifically on AWS EC2. We aim to do this in a scalable way, so that changing the cloud provider to, say, Azure or GCP would require changes only to the configuration file (CRD manifest).

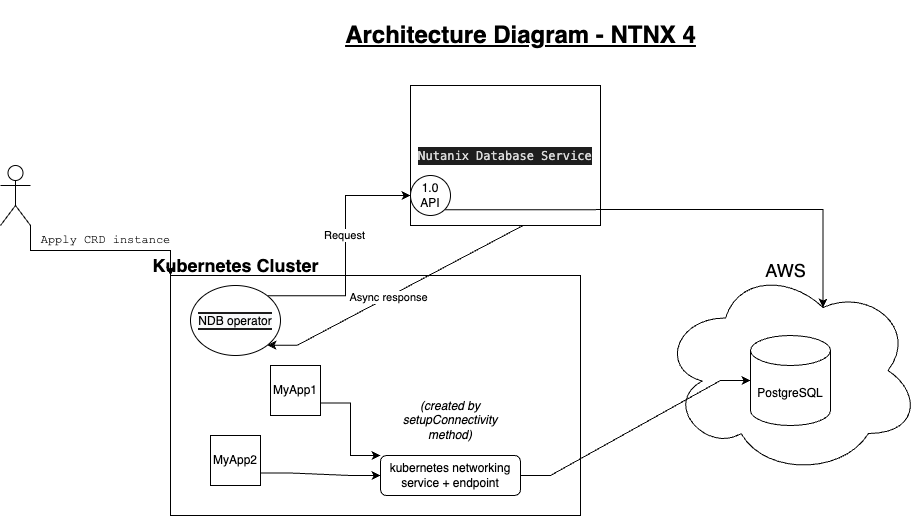

Architecture

The important components here are:

- Kubernetes Cluster

- Kubernetes Service that acts as a gateway to the provisioned database - The application pods that want to communicate with the database do so through the K8 service and endpoint created in the last step of provisioning. The workflow is explained in detail in the next section.

- Nutanix Database Service - The operator pod running in the K8 cluster communicates with this service to provision and de-provision the database, obtain its status, and so on.

- Cloud platform (AWS in our case)

Workflow

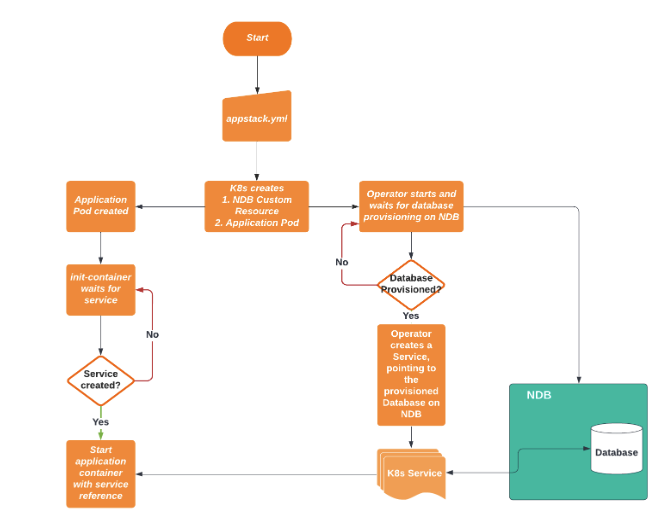

- Apply the instance CRD manifest file to create an instance of the NDB resource in the K8 cluster.

- The operator controller runs its logic to build a provisioning request and sends it to the Nutanix Database Service endpoint for provisioning in the cloud (AWS in our case).

- The reconcile function loops and takes action depending on the status of provisioning: Empty, Provisioning or Ready.

- Once the database is provisioned in AWS and the status is Ready, we set up a Kubernetes networking service and an endpoint with the same name in the cluster. Any application pod in this cluster communicates with this endpoint rather than directly with the database.
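The status-driven loop in the workflow above can be pictured as a small dispatch on the provisioning status. The following is a minimal sketch, not the operator's actual reconcile code: the status string values and the nextAction helper are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// Hypothetical status values for the three states named in the workflow.
const (
	StatusEmpty        = ""
	StatusProvisioning = "PROVISIONING"
	StatusReady        = "READY"
)

// nextAction describes the step a reconcile pass would take for a given
// status. It is an illustrative helper, not part of the operator's API.
func nextAction(status string) string {
	switch status {
	case StatusEmpty:
		return "build and send the provisioning request"
	case StatusProvisioning:
		return "poll the database status and requeue"
	case StatusReady:
		return "set up the service and endpoint"
	default:
		return "unknown status, requeue"
	}
}

func main() {
	for _, s := range []string{StatusEmpty, StatusProvisioning, StatusReady} {
		fmt.Printf("%q -> %s\n", s, nextAction(s))
	}
}
```

Each pass handles exactly one state and then returns, which is what lets the loop resume safely if the operator pod restarts midway through provisioning.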

Design

Single Responsibility Principle (SRP)

SRP is adopted here to separate the business logic of the operator from the reconciliation loop.

- The logic for remoteType: cloud is handled separately from the client (ndbClient) through helper methods. This ensures that the client is responsible only for making GET/POST requests to the specified endpoint, without worrying about whether the request is for on-prem or cloud.

- The reconciliation loop is responsible for ensuring that the state of the system matches the desired state declared by the operator. It listens to events from the Kubernetes API server and performs the necessary actions to reconcile the system to the desired state. The business logic of the operator, on the other hand, is responsible for implementing the higher-level functionality of the operator, such as managing the deployment of a complex application or managing a database cluster.

- SRP is also followed within the reconciliation logic. A separate file, utils/secret.go, implements GetDataFromSecret and GetAllDataFromSecret, the logic to get data from the resource identified by a name/namespace combination.

Operator Pattern

The operator pattern is based on the idea of declarative configuration management. Rather than writing imperative code to perform a series of steps to reach a desired state, an operator declares the desired state of the system and uses the Kubernetes API to perform the necessary actions to reconcile the system to that desired state.

We continue to follow this pattern in our implementation. We have a manifest file for an NDB custom resource responsible for provisioning and managing PostgreSQL in AWS, and the logic for it is handled in the controller code (controllers/database_controllers.go).

EXAMPLE MANIFEST FILE

...

apiVersion: ndb.nutanix.com/v1alpha1
kind: Database
metadata:
  name: db
spec:
  ndb:
    remoteType: "cloud"
    clusterId: "Nutanix Cluster Id"
    credentialSecret: ndb-secret-name
    server: https://[NDB IP]:8443/era/v1.0
    skipCertificateVerification: true
  databaseInstance:
    databaseInstanceName: "Database Instance Name"
    databaseNames:
      - database_one
      - database_two
      - database_three
    credentialSecret: db-instance-secret-name
    size: 10
    timezone: "UTC"
    type: postgres

...

The controller logic and related helper code use the newly created 'remoteType' field to select the NDS endpoint and construct the provisionRequest appropriately. This keeps the configuration (manifest file) separate from the logic (controller code).

Implementation

This section explains the changes that have been made, or are still to be made, as part of this project.

NOTE: We have now received access to the instance and have made as many changes as possible in the time available. See the Resubmission progress section for the update. Changes that have not yet been made are called out below and will be made in the future.

Generate Secrets manifest and set name field + credentials in it

This secrets.yaml manifest file creates a Secret resource instance that holds the NDB instance name and the database credentials.

Modify database_types.go to allow remote types

We have added a remoteType field to the NDB struct along with validation markers ...

// +kubebuilder:validation:Required
// +kubebuilder:validation:Enum=cloud;on-prem
RemoteType string `json:"remoteType"`

Add helper method to figure out endpoint depending on remote type

This logic currently lives in the ndbClient and should be moved to a separate helper method. The purpose of the helper is to determine whether the provisioning is for on-prem or cloud and to pick the endpoint accordingly.

...

type NDBClient struct {
	username   string
	password   string
	url        string
	remoteType string
	client     *http.Client
}

...
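A minimal sketch of what such a helper could look like is given here. The function name apiBaseURL, the example host and the "/era/v0.9" and "/era/v1.0" path layout are assumptions for illustration, inferred from the server URL in the example manifest and from the test plan's statement that on-prem uses the 0.9 API and cloud uses the 1.0 API. This is not the operator's actual code.

```go
package main

import (
	"fmt"
	"strings"
)

// The two values allowed by the Enum validation marker on remoteType.
const (
	remoteTypeOnPrem = "on-prem"
	remoteTypeCloud  = "cloud"
)

// apiBaseURL returns the API base URL for a host depending on remoteType.
// Hypothetical helper: the version segments are assumptions, see the lead-in.
func apiBaseURL(host, remoteType string) (string, error) {
	host = strings.TrimRight(host, "/")
	switch remoteType {
	case remoteTypeOnPrem:
		return host + "/era/v0.9", nil
	case remoteTypeCloud:
		return host + "/era/v1.0", nil
	default:
		return "", fmt.Errorf("unsupported remoteType %q", remoteType)
	}
}

func main() {
	for _, rt := range []string{"on-prem", "cloud", "azure"} {
		url, err := apiBaseURL("https://ndb.example.com:8443/", rt)
		fmt.Printf("%s: url=%q err=%v\n", rt, url, err)
	}
}
```

With the choice isolated in one function, supporting another remote type would mean adding one case here rather than touching the client.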

Modify helper methods to generate request, provision database and setup connectivity

Below are the functions that are core to the provisioning logic. They were written for provisioning a database in the Nutanix cloud infrastructure (on-prem) and constitute the primary part of the provisioning workflow.

GenerateProvisioningRequest (database_reconciler_helpers.go)

The request data structure built by this function should be extended to include the fields required in the JSON request for the NDS endpoint that provisions on cloud. Each added field needs an appropriate data type so that requests serialize and deserialize properly.

UPDATE: This has now been implemented

The new request structure is as follows:

type CloudDatabaseProvisionRequest struct {
	DatabaseProvisionRequest
	AwsKeyName string `json:"awsKeyName"`
}

The logic to build the appropriate request structure depending on the remoteType:

ProvisionDatabase (database_reconciler_helpers.go)

This method posts a request to the NDS endpoint to provision a database, and should be modified to allow the same on cloud. It should rely on a helper method to determine the correct request data structure and endpoint to use. Part of this logic is currently implemented in the ndbClient. Note: We have now received access and have implemented the previous steps. This is the next step to do.

SetupConnectivity (database_reconciler_helpers.go)

This function creates a new service (without label selectors) if one does not already exist and sets the database as the owner of the created service. It also creates an endpoints object for the service if one does not already exist.

Note: This is the last of the sequential steps. The previous steps must be completed before this logic can be written.

Test plan

Running tests

Pull the code, cd into the directory and run:

make test

This runs all the required tests as specified in the Makefile.

Test Scenarios

- Testing construction of provision request - All test cases for this that are currently written for on-prem must work for cloud remoteType too.

- Additional tests for fields that are mandatory for requests constructed for provisioning in cloud

- Verify that the correct API endpoints are being hit based on the remote type specified - On-Prem should hit 0.9 API and cloud should hit 1.0 API

- Test the connectivity between the K8 service and the deployed DB on EC2.

Actions to be taken before manual testing

Manual testing requires access to the Nutanix Database Service.

- Obtain access to the Nutanix Database Service SaaS and access keys for the AWS EC2 instance.

- Add the appropriate credentials to the secrets.yaml file.

- Apply your manifest instance using:

kubectl apply -f <your manifest instance file>

You can verify the status of provisioning in your NDB dashboard.

Future Implementation

- Obtain EC2 keys and connect NDS to the EC2 instance (DONE)

- Construct provision request for provisioning DB in cloud (DONE)

- Add logic to select proper endpoint and request based on the remoteType - We are currently passing this value as function parameter. This will be refactored into a helper method later.

- Add logic to de-provision the database.

GitHub

- Repo (Public): https://github.com/arvindsrinivas1/ndb-operator

- Pull Request: https://github.com/nutanix-cloud-native/ndb-operator/pull/73

- Test video link: https://drive.google.com/file/d/1eRMvXjzatbz9W8xYPcg3V72pZyZBfVmi/view?usp=sharing

Mentors

- Prof. Edward F. Gehringer

- Krunal Jhaveri

- Manav Rajvanshi

- Krishna Saurabh Vankadaru

Contributors

- Arvind Srinivas Subramanian (asubram9)

- Dhanya Sri Dasari (ddasari)

- Zhihao Wang (zwang238@ncsu.edu)