CSC/ECE 517 Spring 2022 - E2229: Track the time students look at other submissions

E2229. Track the time students look at other submissions

This is the wiki page of project E2229 of the final project in Spring 2022 for CSC/ECE 517.

For Peer Reviewers

When testing our deployed app, use the login credentials below:

Username: instructor6 , Password: password

Then follow along with the methods outlined in the testing section to help you get started!

Project Description

Background

Currently in Expertiza, students complete peer review questionnaires during the many rounds of an assignment. This is a great way to gather information about submissions and complete lots of testing while also exposing the reviewing student to practices and skills used in industry.

The instructor can view these reviews on the "Review Reports" screen. To reach it, open the "Management" dropdown and choose "Assignments" to list all assignments for that instructor. On the far right of each row are buttons for the possible actions; select "Review Report". Once the page has loaded, clicking the "View" button while "Review Reports" is selected in the dropdown will open the reports. The report lists the students who have completed reviews, the teams they reviewed, the amount of written text they submitted in comments, and other metrics.


The issue with this system is that there is no metric currently for how long a user spends on reviews.

Because this project involves logging data in real time, interactions with the database also need to be kept to a minimum.

Motivations

This project was created to give the instructors more information about how students complete reviews, specifically the time they spend on a review. This will give the instructor insight into each review and allow them to improve the teaching experience when it comes to peer reviews.

This "review time" metric should be broken down by each link in a submission.

Previous Implementations

The previous project teams and their pull requests and wikis are listed here:

| Group | Pull Request | Wiki |
|---|---|---|
| E1791 | PR | Wiki |
| E1872 | PR | Wiki |
| E1989 | PR | Wiki |
| E2080 | PR | Wiki |

Requested Changes

With the general functionality outlined by previous groups, our team was asked to:

- Design a database schema for logging time a reviewer spends on a submission

- Modify the Review Report to show the time spent on each submission

- Add tests for the code written

Our Implementation

Overview and Pattern

Overall, our implementation works using a proxy pattern for data storage and the façade pattern to get the requested data and statistics.

When the reviewer navigates to a page from the Expertiza review page, a submission_viewing_event is created for that URL and a start time is saved. Once the reviewer closes this page, an end time is saved in that same submission_viewing_event, a total time is calculated, and the event is saved to local storage using pstore. As the reviewer navigates to different links from the review page, a submission_viewing_event is created for each link accessed, and each accumulates the total time spent with that link open. For all external links, this is done using polling. Once the reviewer saves or submits their review, these events are written to the database from local storage and then deleted from local storage. This minimizes the number of times the application interacts with the database. If the user is returning to finish a saved review, these rows are pulled from the database, added to as described above, and kept in local storage again until the review is saved or submitted.

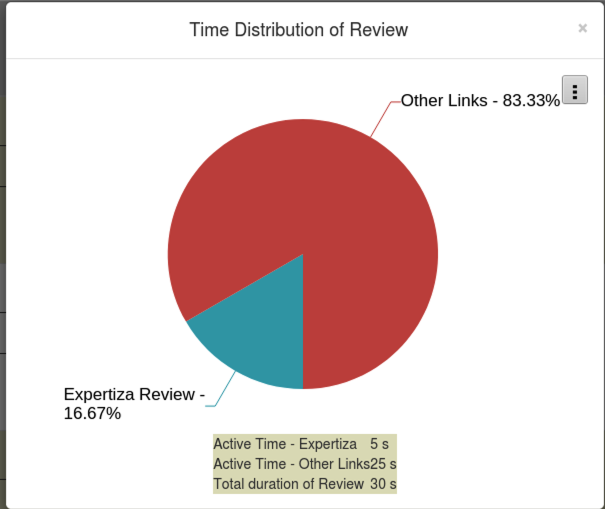
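The buffering flow described above can be sketched roughly as follows. This is a minimal illustration using Ruby's standard `PStore`, not the project's actual code: the `ViewingEventBuffer` class, its `record` and `flush` methods, and the file name are all hypothetical, and the block passed to `flush` merely stands in for the database write.

```ruby
require 'pstore'
require 'tmpdir'

# Hypothetical buffer (not the project's API): accumulates per-link viewing
# time in a PStore file and hands everything over in a single flush.
class ViewingEventBuffer
  def initialize(path)
    @store = PStore.new(path)
  end

  # Add the seconds between start_time and end_time to the link's running total.
  def record(link, start_time, end_time)
    @store.transaction do
      totals = (@store[:totals] ||= {})
      totals[link] = totals.fetch(link, 0.0) + (end_time - start_time)
    end
  end

  # Yield every buffered (link, total_seconds) pair, then clear the file.
  # The caller's block is where a real application would write to the database.
  def flush
    @store.transaction do
      (@store[:totals] || {}).each { |link, seconds| yield link, seconds }
      @store.delete(:totals)
    end
  end
end

# Usage: two visits to the same link are buffered locally, then saved once.
path = File.join(Dir.mktmpdir, 'review_timing.pstore') # assumed file name
buffer = ViewingEventBuffer.new(path)
opened = Time.at(0)
buffer.record('https://example.com/repo', opened, opened + 90)
buffer.record('https://example.com/repo', opened + 200, opened + 230)

saved = {}
buffer.flush { |link, seconds| saved[link] = seconds }
```

Only the `flush` call touches the (simulated) database, which is the point of buffering: however many links the reviewer opens and closes, the write happens once per save or submit.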

When the instructor goes to view the "Review Report", each row from that student's review is pulled for display in a pop-up.

The pop-up shows:

- Percentage of total review time for the review page and other links in a pie chart

- The time the reviewer spent on the review page and other links

- The total time spent on the review
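The figures listed above amount to a simple share-of-total calculation. The sketch below shows one way to compute them; `review_time_breakdown` and the shape of its input and output are assumptions made for illustration, not the helper the project actually uses.

```ruby
# Hypothetical helper: takes { label => seconds } and returns the total
# plus each label's time and its percentage of the total.
def review_time_breakdown(seconds_by_link)
  total = seconds_by_link.values.sum
  rows = seconds_by_link.map do |label, seconds|
    # Guard against division by zero when no time was recorded at all.
    percent = total.zero? ? 0.0 : (100.0 * seconds / total).round(1)
    { label: label, seconds: seconds, percent: percent }
  end
  { total_seconds: total, rows: rows }
end

# Usage: time on the review page itself plus one submitted link.
breakdown = review_time_breakdown(
  'Review page' => 300,
  'https://example.com/repo' => 100
)
```

The `percent` values are what a pie chart would plot, while `seconds` and `total_seconds` correspond to the per-link and overall times.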

Development

*Links are provided because the scale of development is very large.

Github Info

Our Pull Request can be found here

Tasks Identified

Along with the tasks above, upon reviewing the existing code and discussing it with our mentor, we realized there was more to be done. The previous team's code needed a refactor, as well as proper comments to document how the feature functioned. This extended the scope of our project significantly.

Our list of tasks included:

- Review and understand the previous teams' code

- Refactor and comment usable code from previous groups (Added after reviewing previous code)

- Create new UI for viewing data

- Improve current database schema to hold the total time spent on a review

- Add tests

Review Previous Code

Upon reviewing all the previous groups' code, it seemed that E2080 (the group before ours) had the best starting point for our project, having improved on the code of the groups before them. They had a way of tracking the time spent on a review, storing it in a local file, saving this file to the database, and displaying the data. We did, however, find the code hard to follow, and it needed to be refactored if this feature was to be merged into the main Expertiza repository.

Refactor and Comment

Using E2080's code as a base, we refactored it to add comments, apply proper formatting, and move most functionality out of client-side JavaScript and into the Rails controller.

- Most of the client-side JavaScript is now located in app/assets/javascripts/review_time.js and app/assets/javascripts/window_manager.js

- Functionality such as calculating the time between entries is now done in app/controllers/submission_viewing_events_controller.rb, using functions from app/helpers/submission_viewing_event_helper.rb to assist with any math.

Test Plan

When conducting live testing, you need to create a new review so the system can gather the timing data. (This is why most existing reviews show 0: they were made before our implementation.)

General Test

NOTE: This is our process. We have listed it here as getting to this feature and functionality can be confusing without knowledge of the Expertiza site. This is a guideline to help our peers. Please feel free to test the functionality beyond this.

To do this, here is our method:

- As instructor6, find assignment "Final project (and design doc)". This is a previously created assignment that functions correctly.

- Add a new participant to the assignment using the options to the right of the row. (We found student2150 and up to be easiest)

- Impersonate the added student. "Management" dropdown -> impersonate user

- You should now see a pending review for "Final project (and design doc)". Select this to get to the review page.

- Select the available submission and begin the review

- Now you should be taken to the questionnaire for that assignment. Conduct the review however you like. Your activity will be timed.

- Note: You must open the team's submitted links and files from the review page for them to be timed

- Note: If you have a link open, you will not be able to open it again

- Once complete, submit the review

- You can now "Revert" back to your instructor account using the button in the Expertiza site heading

- Navigate to the "Review Report" page as outlined in the Background section of this site

- Find the student account you used and there will be an R1 or R2 next to your review. Click on these to see the breakdown of your review times for that respective round.

Edge Cases

Edge cases that we tested using the UI include:

- Not opening a few or all of the links submitted

  - This will result in those links not being displayed in final breakdown

- User closes browser mid-review

  - pstore contains data

- User spends 0 seconds on a review

  - Displayed as "No Data" on report page

Automated Tests

Our automated testing spans our controller and our helper functions.

Submission Viewing Events Controller Tests

Rspec file: spec/controllers/submission_viewing_events_controller_spec.rb

Ensure:

1. action_allowed returns true
2. start_timing sets start_time and clears end time for link
3. start_timing sets start_time and clears end time for all links in round
4. end_timing sets end_time and updates total_time for link
5. end_timing sets end_time and updates total_time for all links
6. reset_timing sets end_time to current time, updates total_time, and restarts timing for link
7. reset_timing sets end_time to current time, updates total_time, and restarts timing for all links
8. hard_save saves storage proxy file to database and clears file
9. end_round_and_save stops all timing and saves to database
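The start, end, and reset behaviour these tests check can be pictured with a small in-memory model. This is only a sketch of the semantics stated in the list above: `TimedEvent` is a hypothetical stand-in for a single submission_viewing_event, and the real controller actions operate on stored records rather than on a Struct.

```ruby
# Hypothetical stand-in for one submission_viewing_event (not the real model).
TimedEvent = Struct.new(:link, :start_time, :end_time, :total_time) do
  # Begin timing: set the start time and clear any previous end time.
  def start_timing(now = Time.now)
    self.start_time = now
    self.end_time = nil
  end

  # Stop timing: set the end time and add the elapsed seconds to the total.
  # Does nothing if timing was never started or has already been stopped.
  def end_timing(now = Time.now)
    return if start_time.nil? || end_time

    self.end_time = now
    self.total_time = (total_time || 0) + (now - start_time)
  end

  # Reset: close the current interval at `now`, then immediately restart.
  def reset_timing(now = Time.now)
    end_timing(now)
    start_timing(now)
  end
end

# Usage: a link is opened, timing is reset once, and the link is closed.
opened_at = Time.at(0)
event = TimedEvent.new('https://example.com/repo')
event.start_timing(opened_at)
event.reset_timing(opened_at + 10)
event.end_timing(opened_at + 25)
```

Passing the clock value in as an argument, rather than calling `Time.now` inside each method, is a design choice of this sketch that keeps the behaviour easy to check with fixed timestamps.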

Local Storage Tests

Rspec file: spec/helpers/local_storage_spec.rb

Ensure:

1. save records to pstore file
2. sync fetches registry from pstore file
3. where retrieves single matching instance from pstore file
4. read saves instance to database
5. hard_save saves timing instance to database
6. hard_save_all saves all registry instances to database
7. remove removes instance from pstore file
8. remove_all removes all instances from pstore file

Local Submitted Content Tests

Rspec file: spec/models/local_submitted_content_spec.rb

Ensure:

1. initialize successfully creates object with params
2. to_h successfully converts object to hash
3. == successfully compares the content of two objects
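For readers unfamiliar with this kind of object, the three behaviours tested above (construction from params, conversion to a hash, and content-based equality) might look something like the sketch below. The class name `LocalSubmittedContentSketch` and every attribute name are assumptions chosen for illustration; the actual model's fields may differ.

```ruby
# Hypothetical sketch of a plain value object; attribute names are assumed.
class LocalSubmittedContentSketch
  ATTRIBUTES = %i[map_id round link start_at end_at total_time].freeze
  attr_accessor(*ATTRIBUTES)

  # Build the object from keyword params; attributes not supplied stay nil.
  def initialize(**params)
    ATTRIBUTES.each { |name| public_send("#{name}=", params[name]) }
  end

  # Convert the object to a plain hash of its attributes.
  def to_h
    ATTRIBUTES.to_h { |name| [name, public_send(name)] }
  end

  # Two objects are equal when all of their attribute values match.
  def ==(other)
    other.is_a?(self.class) && to_h == other.to_h
  end
end

# Usage: equality depends on content, not on object identity.
first = LocalSubmittedContentSketch.new(map_id: 1, round: 1, link: 'https://example.com')
second = LocalSubmittedContentSketch.new(map_id: 1, round: 1, link: 'https://example.com')
same_content = (first == second)
```

Comparing by content rather than identity is what lets a record read back from the pstore file be matched against one already held in memory.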

Added / Changed Files

Due to the size of our feature, our PR contains many additions. We have limited these to new files as much as possible in order to maintain the current Expertiza files and avoid unnecessary conflicts.

Team Information

Leo Hsiang

Jacob Anderson