CSC/ECE 517 Spring 2021 - E2109. Completion/Progress view

Problem Statement

Expertiza allows users to complete peer reviews on fellow students' work. However, not all peer reviews are equally helpful. The application therefore lets a project's authors give feedback on each peer review they receive; this is called "author feedback." Instructors currently have no easy way to see author feedback while grading peer reviews. That would be a useful feature, since author feedback shows how helpful a review actually was to the group that received it. The aim of this project is to make author feedback more accessible. A group in 2018 was given the same task, and most of the functionality appears to have been implemented already, but against an older version of Expertiza. Our primary task, then, is to follow their implementation, refactor code where needed, make the improvements suggested in their project feedback, and make the feature compatible with the latest beta branch of Expertiza. Secondary tasks are to make the author feedback column toggleable and to refactor the function that averages author feedback so that it reuses code that already exists elsewhere in Expertiza.

Goal

- Our primary goal is to take the author feedback column functionality from the 2018 branch and update it so that it merges successfully with the current beta branch.

- The 2018 branch adds an author feedback column to the review report, and the previous team's submission included new code to calculate the average author feedback score. However, this calculation most likely already exists elsewhere in the system. We will identify where that functionality lives and refactor the code to reuse it, following the DRY principle.

- Part of the 2018 group's feedback noted that the review report UI had begun to look crowded. Since there is often no author feedback to show, the column should be toggleable. It will be dynamic and only present when there is author feedback to display, and users can show or hide it according to their own preference.
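One way to decide whether the column should render at all is to check whether any author feedback score exists. The sketch below assumes the scores are held in the nested hash shape built by `compute_author_feedback_scores` (reviewer_id → round → reviewee_id → score). The method name `author_feedback_present?` is hypothetical and not part of Expertiza.

```ruby
# Hypothetical helper: returns true when at least one author feedback score
# exists in a nested hash of the form {reviewer_id => {round => {reviewee_id => score}}}.
def author_feedback_present?(author_feedback_scores)
  return false if author_feedback_scores.nil?

  author_feedback_scores.each_value.any? do |rounds|
    rounds.each_value.any? do |by_reviewee|
      by_reviewee.each_value.any? { |score| !score.nil? }
    end
  end
end

scores = { 7 => { 1 => { 3 => 85.0 } } }
puts author_feedback_present?(scores) # prints true
puts author_feedback_present?({})     # prints false
```

A view could wrap the column header and the partial call in a check like this, and a client-side show/hide toggle could then be layered on top for users who want to hide the column anyway.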

Design

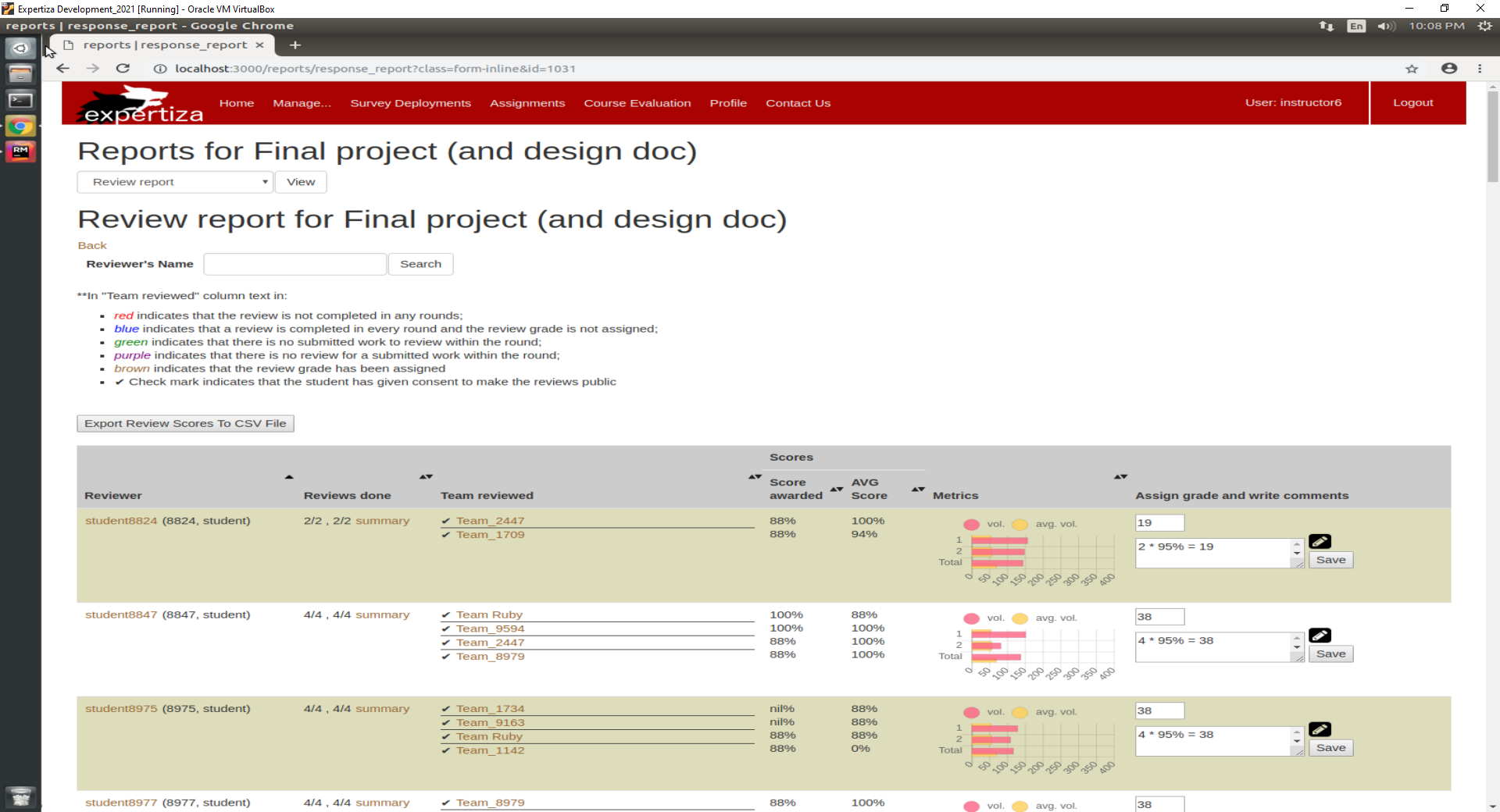

The image above shows the current implementation, on the beta branch, of the page we are going to edit. The "author feedback" column is not present in this view at all.

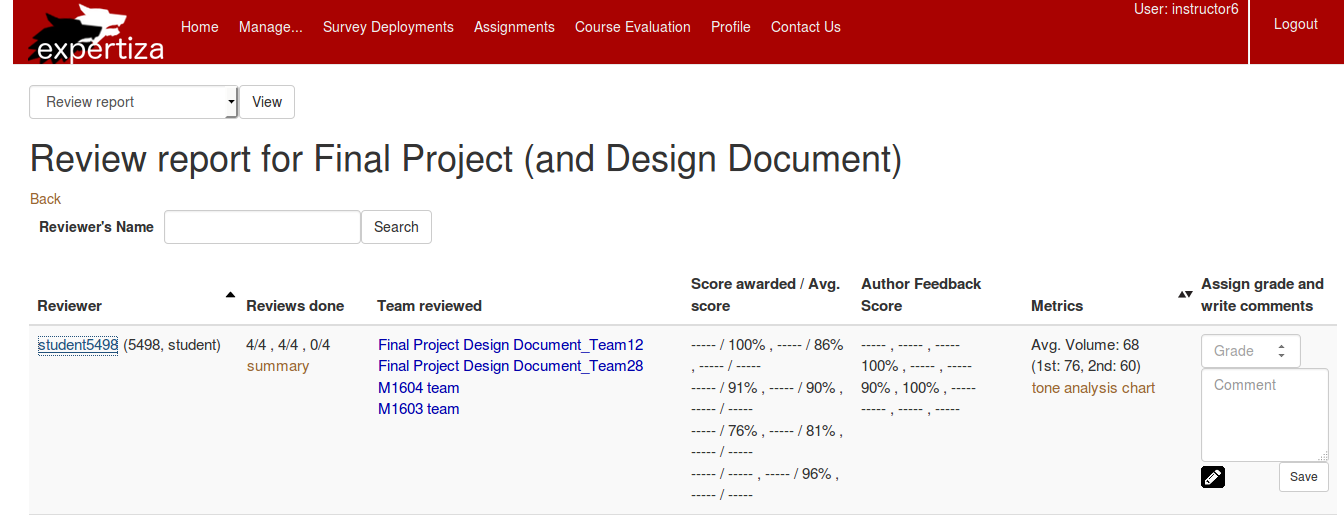

Here is the 2018 group's version of the page we are editing. It has the "author feedback" column along with the necessary functionality. However, the user interface has clearly changed since 2018, so there will likely be merge conflicts between their branch and the current beta branch. Part of our task is therefore to resolve those merge conflicts and port the functionality over to the most recent beta branch. (Sourced from this link)

Currently, many entries in the "Score awarded/Avg. score" and "Author Feedback Score" columns of the 2018 group's branch are missing. This does not appear to be the case in the most recent beta branch available in 2021. Therefore, we will carry the beta branch's filter (i.e., the code that removes the missing entries represented by dashes) over into the 2018 group's code.

In addition, the 2018 group's branch places the author feedback in its own column between "AVG score" and "Metrics". Instead, we will move it under the same "Scores" header as in the current beta branch, just to the right of the "AVG Score" column. To accommodate this additional column, the "Team reviewed" column will be narrowed slightly so that the "Scores" column renders at approximately the same location on the page.

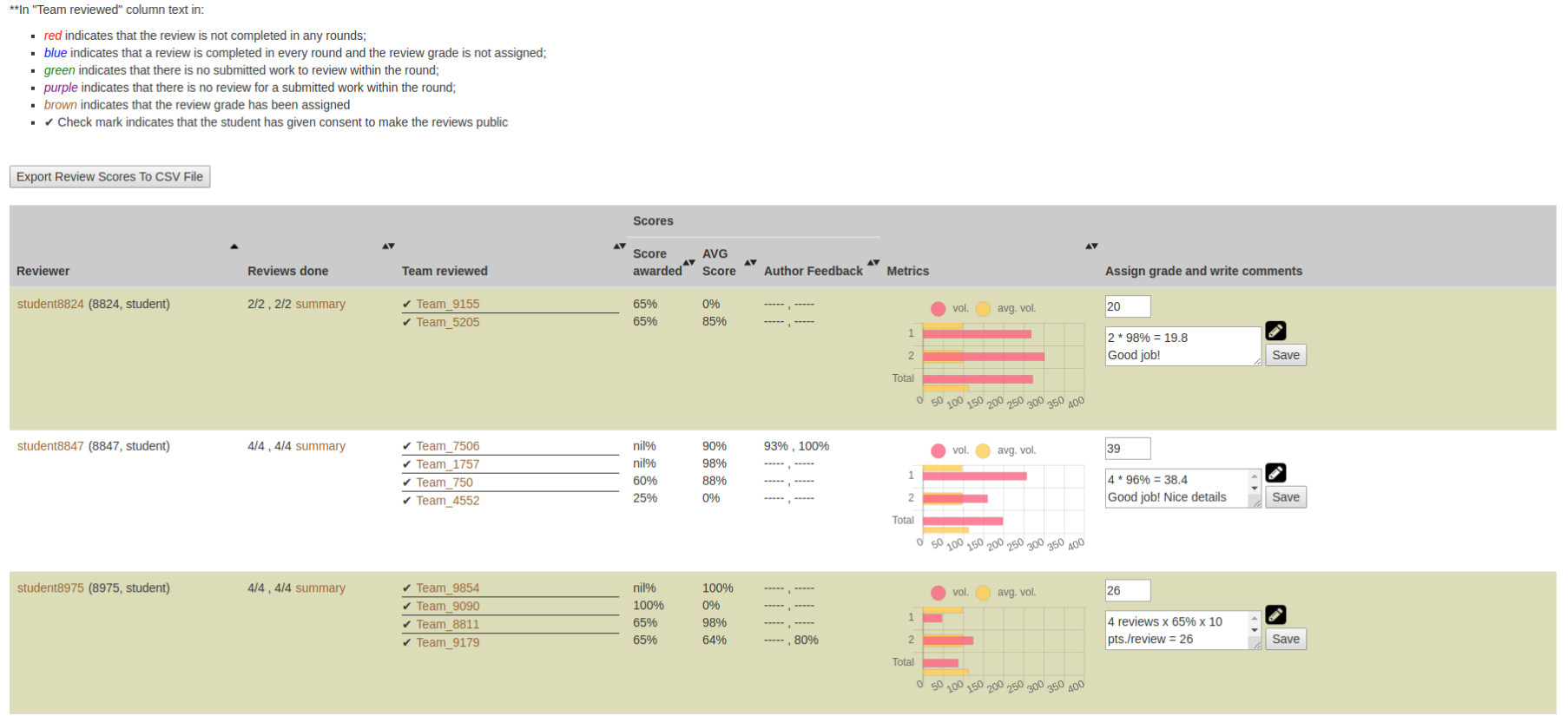

The image above shows the final product of our implementation. Some columns that had been given unnecessarily wide space, such as "Reviewer" and "Team reviewed", have been narrowed to accommodate the new author feedback column. There is still ample room for the "Assign grade" and "Write comments" columns to expand.

Files Changed

We expected to edit the following files to complete this project:

- app/views/reports/_review_report.html.erb

- app/controllers/review_mapping_controller.rb

- app/models/on_the_fly_calc.rb

- app/views/reports/response_report.html.haml

- app/views/reports/_team_score.html.erb

In practice, however, the following files had to be edited (or added) to implement the author feedback column:

- app/helpers/report_formatter_helper.rb

- app/helpers/review_mapping_helper.rb

- app/models/on_the_fly_calc.rb

- app/views/reports/_review_report.html.erb

- app/views/reports/_team_score_author_feedback.html.erb

In report_formatter_helper.rb, an attribute had to be added to the helper, which is later mixed into the reports_controller. This attribute gives the controller access to the author feedback scores calculated by the model.
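In Ruby, a module can declare an attribute with `attr_reader`, and any class that includes the module gains that reader. The sketch below illustrates the idea with hypothetical names (`AuthorFeedbackReport`, `load_author_feedback_scores`, `FakeAssignment`, `FakeController`). It is not the actual `report_formatter_helper.rb` code; the stand-in assignment simply returns precomputed scores from `compute_author_feedback_scores`.

```ruby
# Hypothetical helper module: exposes the author feedback scores computed by the model.
module AuthorFeedbackReport
  attr_reader :author_feedback_scores

  # Stores the model's computed scores so the including class (and its views) can read them.
  def load_author_feedback_scores(assignment)
    @author_feedback_scores = assignment.compute_author_feedback_scores
  end
end

# Stand-in for the Assignment model (assumption): returns precomputed scores.
FakeAssignment = Struct.new(:scores) do
  def compute_author_feedback_scores
    scores
  end
end

# Stand-in for the reports controller that mixes the helper in.
class FakeController
  include AuthorFeedbackReport
end

controller = FakeController.new
controller.load_author_feedback_scores(FakeAssignment.new({ 2 => { 1 => { 5 => 90.0 } } }))
p controller.author_feedback_scores
```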

The review_mapping_helper.rb file required reintroducing an older method, "get_each_round_score_awarded_for_review_report". It is needed to read and display the computed author feedback scores from the hash in which they are stored. A similar method, "get_awarded_review_score", could not be used because it throws an exception. It does not handle the case where the hash lacks the key it is trying to access; specifically, it fails when the "reviewer_id" is not among the hash's keys.

The on_the_fly_calc.rb file only required the changes described in our implementation plan, with one addition. A method called "feedback_questionnaire_id" had to be added for the "calc_feedback_scores_sum" method in the same file to work. This method used to exist elsewhere in an older version of Expertiza, in the assignment.rb model. Since nothing outside "calc_feedback_scores_sum" needs it, it is now a private method in on_the_fly_calc.rb.

The _review_report.html.erb file was modified. The other columns were narrowed to make room for the author feedback column, the column header was added, and the call to the author feedback column partial was introduced.

Finally, a new file, _team_score_author_feedback.html.erb, was added to the repository. This partial renders the author feedback column and is a reintroduction of the 2018 group's _team_feedback_score.html.erb file.
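The failure described above is a general Ruby behavior. Chaining `[]` on a nested hash raises `NoMethodError` as soon as an intermediate key is missing, whereas `Hash#dig` returns `nil`. The sketch below is a hypothetical illustration of a nil-safe lookup; the method name `score_for` and the `'-'` placeholder are assumptions. It is not the code of either Expertiza method.

```ruby
# Nested scores: {reviewer_id => {round => {reviewee_id => score}}}
scores = { 1 => { 1 => { 10 => 80.0 } } }

# Unsafe: scores[2] is nil, so the next [] call raises NoMethodError.
begin
  scores[2][1][10]
rescue NoMethodError => e
  puts "lookup failed: #{e.class}"
end

# Hypothetical nil-safe lookup: Hash#dig stops at the first missing key and returns nil,
# so the view can show a placeholder instead of raising.
def score_for(scores, reviewer_id, round, reviewee_id, placeholder = '-')
  value = scores.dig(reviewer_id, round, reviewee_id)
  value.nil? ? placeholder : value
end

puts score_for(scores, 1, 1, 10) # prints 80.0
puts score_for(scores, 2, 1, 10) # prints -
```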

Implementation Plan

Like the 2018 group, we plan to add three methods to the on_the_fly_calc.rb file in Models. These methods are:

- compute_author_feedback_scores

- calc_avg_feedback_score(response)

- calc_feedback_scores_sum

In the 'compute_author_feedback_scores' method, the review response maps for the assignment are queried from the ResponseMaps table. For each round of review, the method collects the responses (reviews) belonging to each map. It then calls the 'calc_avg_feedback_score' method with those responses as the "response" argument; a response here is the review that a reviewer wrote on the authors' work. Finally, the average author feedback score for a particular review (response) is computed by summing the feedback scores given by each of the authors (team members) and dividing by the number of feedbacks received. These methods were implemented by the 2018 group and are included here again for the reader's convenience.

```ruby
# Assumes self is the assignment (OnTheFlyCalc is mixed into the Assignment model).
# Builds @author_feedback_scores[reviewer_id][round][reviewee_id] = average author feedback score.
def compute_author_feedback_scores
  @author_feedback_scores = {}
  @response_maps = ResponseMap.where(reviewed_object_id: id, type: 'ReviewResponseMap')
  rounds = rounds_of_reviews
  (1..rounds).each do |round|
    @response_maps.each do |response_map|
      # Reviews submitted under this response map in the current round.
      response = Response.where(map_id: response_map.id).select { |r| r.round == round }
      @round = round
      @response_map = response_map
      calc_avg_feedback_score(response) unless response.empty?
    end
  end
  @author_feedback_scores
end

def calc_avg_feedback_score(response)
  # Retrieve the author feedback response maps for the teammates reviewing the review of their work.
  author_feedback_response_maps = ResponseMap.where(reviewed_object_id: response.first.id,
                                                    type: 'FeedbackResponseMap')
  feedback_count = 0
  author_feedback_response_maps.each do |author_feedback_response_map|
    @corresponding_response = Response.where(map_id: author_feedback_response_map.id)
    next if @corresponding_response.empty?

    calc_feedback_scores_sum
    feedback_count += 1
  end

  # Divide the sum of the author feedback scores for this review by the number of
  # feedbacks actually summed (maps without a response were skipped above).
  reviewer_id = @response_map.reviewer_id
  reviewee_id = @response_map.reviewee_id
  return if feedback_count.zero? || @author_feedback_scores.dig(reviewer_id, @round, reviewee_id).nil?

  @author_feedback_scores[reviewer_id][@round][reviewee_id] /= feedback_count.to_f
end

def calc_feedback_scores_sum
  reviewer_id = @response_map.reviewer_id
  reviewee_id = @response_map.reviewee_id
  # Scores already accumulated for this reviewer in this round, keyed by reviewee.
  @respective_scores = @author_feedback_scores.dig(reviewer_id, @round) || {}

  author_feedback_questionnaire_id = feedback_questionnaire_id(@corresponding_response)
  @questions = Question.where(questionnaire_id: author_feedback_questionnaire_id)

  # Calculate the score of the author feedback review (expected to set @this_review_score).
  calc_review_score

  # Compute the sum of the author feedback scores for this review.
  @respective_scores[reviewee_id] ||= 0
  @respective_scores[reviewee_id] += @this_review_score

  # The reviewer is the metareviewee whose review the authors or teammates are reviewing.
  @author_feedback_scores[reviewer_id] ||= {}
  @author_feedback_scores[reviewer_id][@round] = @respective_scores
end
```
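The sum-then-divide logic above can be checked without a database. The sketch below replays it on plain Ruby structs. The `FeedbackRecord` struct and the method `average_author_feedback` are hypothetical stand-ins for the `ResponseMap`/`Response` records and the three methods above. Each record's `score` is assumed to be the value `calc_review_score` would produce, with `nil` meaning the feedback map has no submitted response.

```ruby
# Hypothetical stand-in for one author feedback on one review.
FeedbackRecord = Struct.new(:reviewer_id, :reviewee_id, :round, :score, keyword_init: true)

# Averages author feedback per (reviewer, round, reviewee), producing the same nested
# shape as compute_author_feedback_scores: {reviewer_id => {round => {reviewee_id => avg}}}.
def average_author_feedback(records)
  result = {}
  records.reject { |rec| rec.score.nil? } # skip feedback maps with no submitted response
         .group_by { |rec| [rec.reviewer_id, rec.round, rec.reviewee_id] }
         .each do |(reviewer_id, round, reviewee_id), group|
    total = group.sum(&:score)
    result[reviewer_id] ||= {}
    result[reviewer_id][round] ||= {}
    # Divide by the number of feedbacks that actually contributed to the sum.
    result[reviewer_id][round][reviewee_id] = total.to_f / group.size
  end
  result
end

records = [
  FeedbackRecord.new(reviewer_id: 1, reviewee_id: 10, round: 1, score: 80),
  FeedbackRecord.new(reviewer_id: 1, reviewee_id: 10, round: 1, score: 90),
  FeedbackRecord.new(reviewer_id: 1, reviewee_id: 10, round: 1, score: nil)
]
puts average_author_feedback(records).dig(1, 1, 10) # prints 85.0
```

Dividing by the number of feedbacks that were summed, rather than by the total number of feedback maps, keeps an unsubmitted feedback from pulling the average down (85.0 here rather than 170 / 3).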

However, as the instructors suggested, the average is calculated with a custom implementation even though equivalent functionality most likely already exists elsewhere in Expertiza. As part of our project, we will identify where that functionality exists and either reuse it or extend it so that it can be reused here. We will first implement the functionality as the previous group did. We will then refactor incrementally so that the refactoring process does not break functionality.

Since we need to modify the user interface to introduce the author feedback column, we expect to add a column to the .erb files in the views folder identified above. These files were selected because the previous group needed them to implement their UI changes.

The previous group also added a 'calculate_avg_score_by_feedback' method to app/controllers/review_mapping_controller.rb. The following method, written by the previous team, calculates the average score of the feedback that authors give on the reviews of their work.

```ruby
def calculate_avg_score_by_feedback(question_answers, q_max_score)
  # Get the score and summary of answers for each question.
  # Only valid (non-nil) answers are counted; their sum is divided by their number.
  valid_answer_counter = 0
  question_score = 0.0
  question_answers.each do |ans|
    # Accumulate the score for this question.
    next if ans.answer.nil?

    question_score += ans.answer
    valid_answer_counter += 1
  end
  if valid_answer_counter > 0 && q_max_score > 0
    # Convert the score to a percentage.
    question_score /= (valid_answer_counter * q_max_score)
    question_score = question_score.round(2) * 100
  end
  question_score
end
```

Unfortunately, this method lives in the controller even though it contains no controller-specific logic. It would be better placed in the controller's corresponding model, and we will move it there during our project so that the code follows MVC principles.
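Here is a minimal sketch of that move. It assumes the logic is placed in a model-level module that both the controller and `OnTheFlyCalc` could call. The module name `FeedbackScoreCalculator` and the `AnswerStub` struct are hypothetical; the struct replaces `Answer` so the example runs outside Rails. The arithmetic mirrors the controller method above, including its edge case of returning the unnormalized sum when `q_max_score` is not positive.

```ruby
# Hypothetical stand-in for an Answer record; only #answer is used.
AnswerStub = Struct.new(:answer)

# Hypothetical model-level home for the averaging logic formerly in the controller.
module FeedbackScoreCalculator
  module_function

  # Returns the average of the non-nil answers as a percentage of q_max_score.
  # Like the original, it returns the raw sum (0.0 if there are no valid answers)
  # when it cannot normalize.
  def avg_score_percentage(question_answers, q_max_score)
    valid = question_answers.map(&:answer).compact
    sum = valid.sum.to_f
    return sum if valid.empty? || q_max_score <= 0

    (sum / (valid.size * q_max_score)).round(2) * 100
  end
end

answers = [AnswerStub.new(3), AnswerStub.new(nil), AnswerStub.new(2)]
puts FeedbackScoreCalculator.avg_score_percentage(answers, 5) # prints 50.0
```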

Test Plan

The 2018 group wrote an RSpec test alongside their function to calculate the author feedback scores. It was located in the on_the_fly_calc_spec.rb file and exercised the 'compute_author_feedback_scores' method. Below is the RSpec test they wrote:

```ruby
describe '#compute_author_feedback_scores' do
  # `assignment` is assumed to be defined by a `let` elsewhere in on_the_fly_calc_spec.rb.
  let(:reviewer) { build(:participant, id: 1) }
  let(:feedback) { Answer.new(answer: 2, response_id: 1, comments: 'Feedback Text', question_id: 2) }
  let(:feedback_question) { build(:question, questionnaire: questionnaire2, weight: 1, id: 2) }
  let(:questionnaire2) { build(:questionnaire, name: 'feedback', private: 0, min_question_score: 0, max_question_score: 10, instructor_id: 1234) }
  let(:reviewer1) { build(:participant, id: 2) }
  let(:score) { {} }
  let(:team_user) { build(:team_user, team: 2, user: 2) }
  let(:feedback_response) { build(:response, id: 2, map_id: 2, scores: [feedback]) }
  let(:feedback_response_map) { build(:response_map, id: 2, reviewed_object_id: 1, reviewer_id: 2, reviewee_id: 1) }

  before(:each) do
    # Stub the object under test, since compute_author_feedback_scores calls these on self.
    allow(assignment).to receive(:rounds_of_reviews).and_return(1)
    allow(assignment).to receive(:review_questionnaire_id).and_return(1)
  end

  context 'verifies feedback score' do
    it 'computes feedback score based on reviews' do
      # With no ReviewResponseMap records saved for the assignment, the result is an empty hash.
      expect(assignment.compute_author_feedback_scores).to eq(score)
    end
  end
end
```

UI Testing

UI testing does not exercise new functionality so much as verify the change to the report page that the instructor sees. To test the UI, follow these steps:

- First, log in to the Expertiza website with the username instructor6 and the password password.

- Next, click the Manage tab at the top left of the screen and click Anonymized View.

- Once in the anonymized view, click Assignments.

- After the assignment list loads, pick any assignment (e.g., Final Project Documentation). On the right side of its row, look for the icon with a person on it labeled Review report.

- Click that icon. This takes you to another page with a Report View button; click it.

- This takes you to the report page shown above, where you can see the Author Feedback column and the score obtained for every student.