CSC/ECE 517 Spring 2021 - E2100. Tagging report for students


Revision as of 02:56, 19 March 2021

This page documents the Spring 2021 project "E2100 Tagging report for students", which aims to help students find and complete unfinished "review tags" on an assignment using a dynamically generated heatgrid.

Introduction to Expertiza

Expertiza is an open-source Rails application used by instructors and students for creating assignments and submitting peer reviews. Expertiza allows the instructor to create and customize assignments, create a list of topics the students can sign up for, have students work in teams, and then have them review each other's assignments at the end. The source code of the application can be cloned from GitHub.

About Review Tagging

Review "tags" are a form of feedback on Expertiza where students "tag" (classify) text from peer reviews based on parameters specific to each tag deployment. The parameters can include helpfulness, positivity, suggestions, whether a review offered mitigation, and other parameters depending on the Answer Tag Deployment. These labeled data are then made available to Expertiza researchers for use in developing Natural Language Processing (NLP) / Machine Learning (ML) algorithms. Tagging is only collected for reviews where the text is a sufficient length to be useful as labeled data for NLP research.

Problem Statement

It can be difficult for students to find a tag they missed on the Team View page. In addition, other teams are working with ML algorithms to "pre-tag" as many reviews as possible, which leaves a scattered mix of completed and incomplete tags. For example, an assignment with two rounds of reviewing, ten questions per review, twelve reviews, and a 5-parameter tag deployment could contain as few as zero or as many as 1,200 tag prompts for a single student to complete.
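The upper bound in this example is the product of the four quantities. A minimal sketch of the arithmetic follows; the function and parameter names are illustrative and do not appear in Expertiza.

```javascript
// Illustrative only: upper bound on the tag prompts a single student could see,
// assuming every answer in every review is long enough to receive tag prompts.
function maxTagPrompts(rounds, questionsPerReview, reviews, tagsPerAnswer) {
  return rounds * questionsPerReview * reviews * tagsPerAnswer;
}

console.log(maxTagPrompts(2, 10, 12, 5)); // 1200
```

The lower bound of zero occurs when no review text meets the length requirement for tagging.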

At this time, the only tagging feedback students see is the tag prompts themselves and a JavaScript counter showing how many tags have not been completed. To find a missed tag, students have to scroll the page and manually search for tags that aren't done.

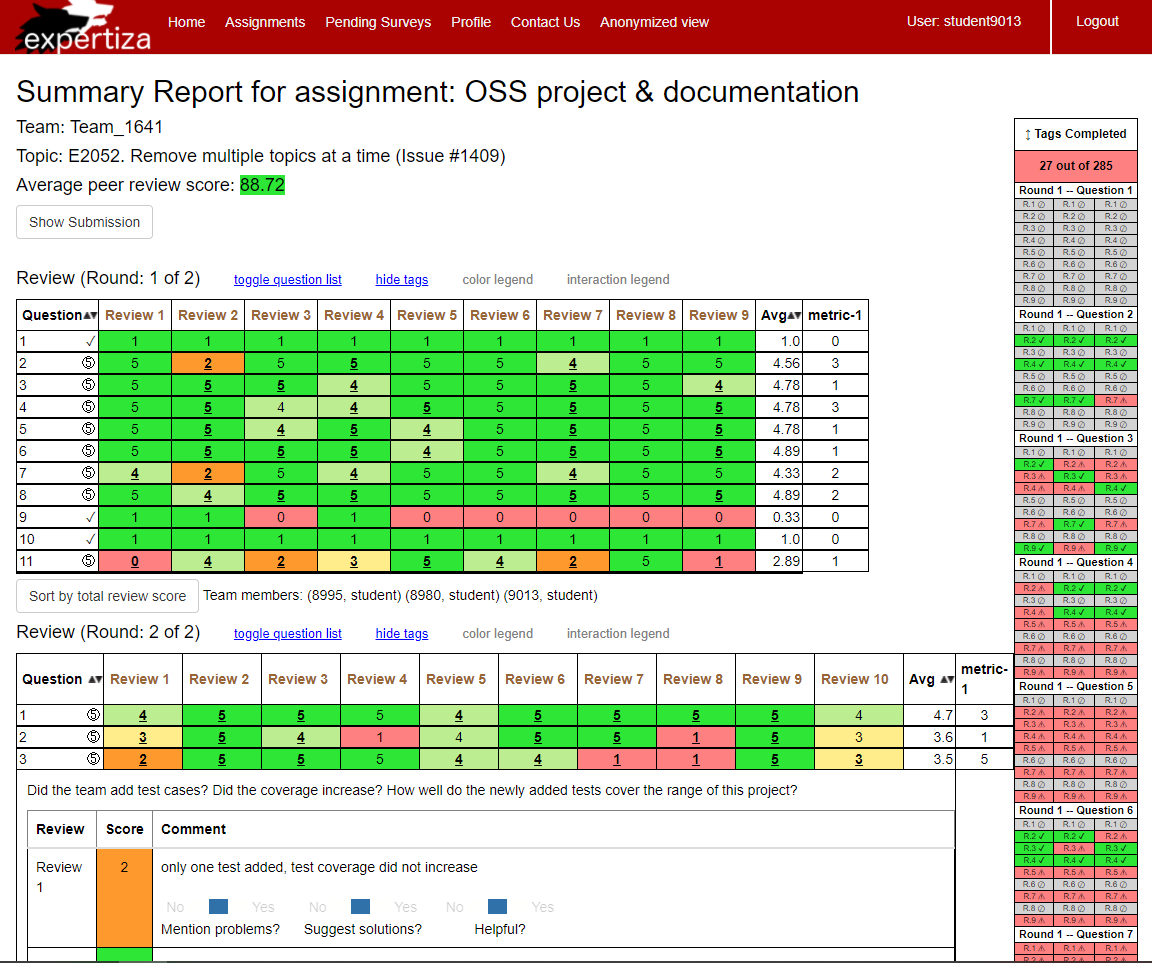

In order to help students complete all the tags for an assignment, we propose a new, dynamically generated heatgrid on the "Your Scores" view that breaks down reviews and tags by round, question, and review, which uses visual cues to help students find incomplete tags. The heatgrid shows both a total count of "complete" out of "available" tags, and individual tags with color-scaled feedback for completeness.

The new heatgrid must handle these requirements, and display visual feedback accordingly:

- Single and multiple round reviews

- Reviews with and without tag prompts

- Dynamically update as tags are completed

- Tag deployments that use different numbers of tags per review

- Use of existing review row-sort functionality

- Not rely on the database for tag data, as AnswerTag database rows may not exist until tags are clicked.

- Give usable feedback for all users (characters as well as color-coding)

- Only be shown when tags have been deployed to an assignment

- Show the total progress of tagging in the format, "249 out of 315"

Implementation

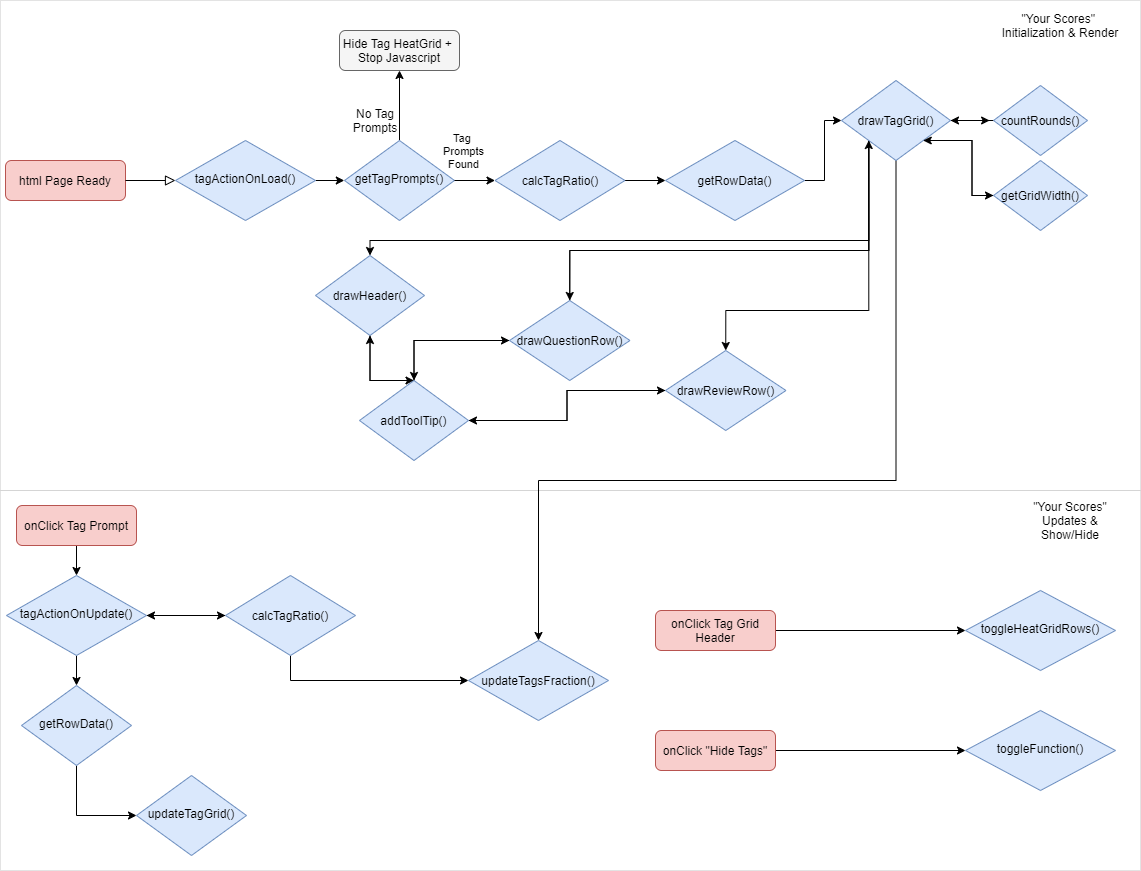

Our proposed solution is a visual feedback aid which exists purely for student users. Tagging data, when input, is stored to the database dynamically using jQuery. For these reasons, and to facilitate dynamic updates, we have chosen to implement this functionality entirely on the client side of the application using JavaScript and jQuery. The presence or absence of tag prompts is detected at page load, and the heatgrid is rendered as appropriate. The flowchart below details the new JavaScript functions which were implemented to accomplish this goal, and the associated program flow.

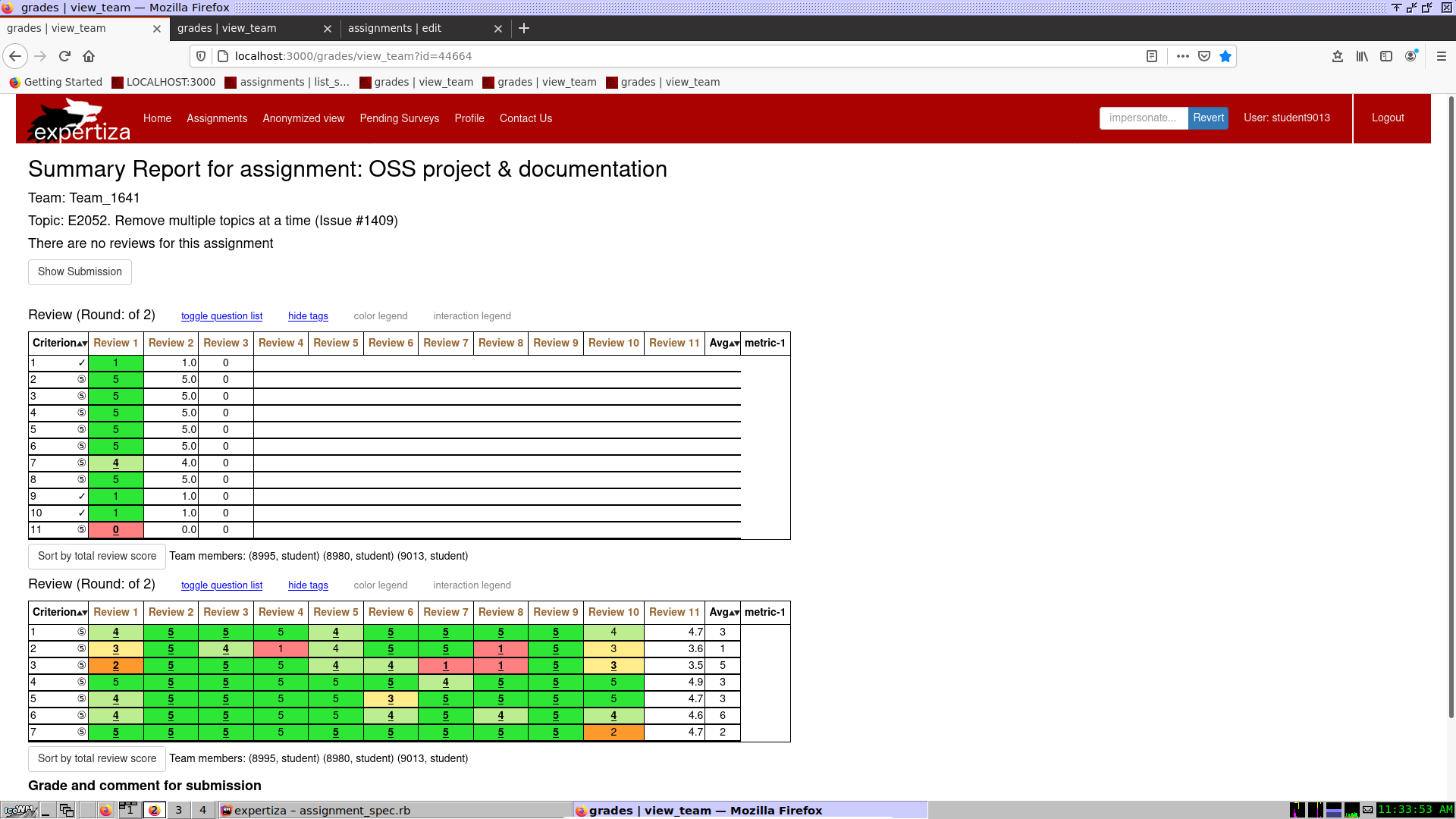

Heat grid for a two-round review

Code Style

A note on code style: Because this project utilizes so much Javascript, we tried to keep the code style consistent and true to best practices for both Ruby and Javascript, depending on which language we were working in. Therefore, any code or variables from the Ruby section should use snake_case, and code in the Javascript section should use lowerCamelCase.

Server-Side Implementation: "Your Scores" and "Alternate View"

Because most of the logic is implemented on the client-side, the server-side implementation is fairly simple.

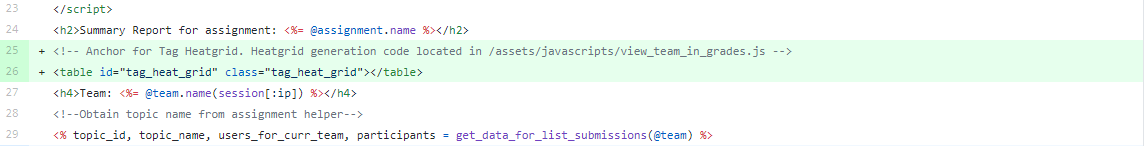

"Your Scores" view (/views/grades/view_team.html.erb)

We added an empty HTML <table> tag with id and tag information that is used to generate the heatgrid with Javascript.

In order to simplify the jQuery which parses the rendered page for tags, we added four data- attributes (three numeric, one boolean) that jQuery can query, plus a new id attribute, to the td container tag which holds each review text (and the associated tags, if present). We used existing Rails variables to populate these data and id fields. The id is set using "rr" for reviewrow (shortened for brevity) plus the question and review number (j and index). The data attributes are data-round (round number), data-question_num (question number), data-review_num (review number), and data-has_tag (boolean, whether tags exist).

A word on style choices: the "data-" is the key queried by jQuery, so a dash is used. The string after the dash is free for us to choose the style, so we used Ruby style to match the convention on the rest of the application.

Finally, we added a call inside the document.Ready() event handler to tagActionOnLoad(), which starts the rendering code for the client-side JavaScript once the page has loaded and tags (if available) are present for counting. We also added a jQuery selector for our new heatgrid table within the onClick for the "Hide Tags" link to ensure our heatgrid is hidden when tag prompts are hidden.

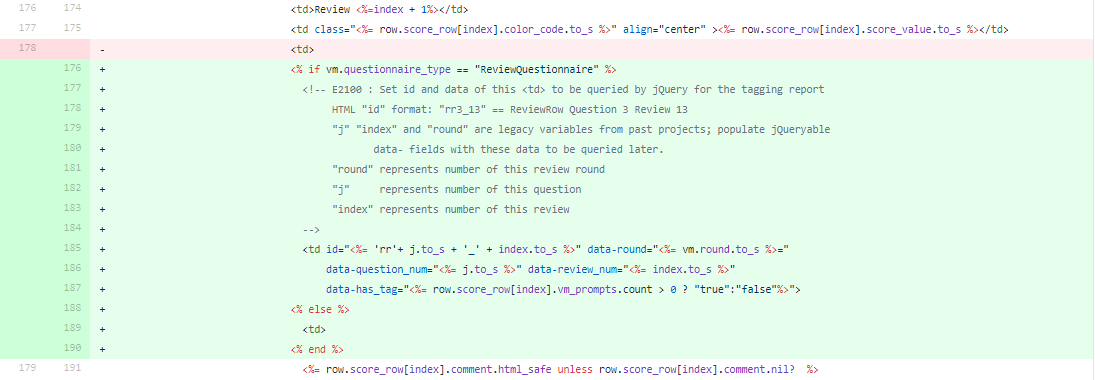

"Alternate View" (/views/grades/view_my_scores.html.erb)

We added function calls to countTotalTags() and countTaggedTags() inside the document.Ready() event handler to calculate the total number of tags and the number of completed tags on the page that is currently being rendered. We also added a div HTML element with id tag_stats and render this element with the string "Tag Finished: tagged_tags/total_tags". For example, if there are 370 tags in total on this page and 10 of them have already been tagged, then 10/370 will be displayed. This element is also updated dynamically when users make tag changes on this page.
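The counting and formatting described above can be sketched without a browser as follows. This is not the project's code: the plain objects stand in for the tag inputs that would normally come from a DOM query, the function names carry a "Sketch" suffix to mark them as hypothetical, and the rule that a value of "0" means "not yet tagged" is an assumption.

```javascript
// Hypothetical stand-ins for the tag inputs on the page.
// Assumption: a value of "0" means the tag has not been set yet.
function countTotalTagsSketch(tagInputs) {
  return tagInputs.length;
}

function countTaggedTagsSketch(tagInputs) {
  return tagInputs.filter((input) => String(input.value) !== "0").length;
}

// Builds the string shown in the tag_stats element.
function formatTagStats(tagInputs) {
  return (
    "Tag Finished: " +
    countTaggedTagsSketch(tagInputs) +
    "/" +
    countTotalTagsSketch(tagInputs)
  );
}

const sampleInputs = [{ value: "1" }, { value: "0" }, { value: "-1" }, { value: "0" }];
console.log(formatTagStats(sampleInputs)); // Tag Finished: 2/4
```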

Client-Side Implementation: "Your Scores" (view_team.html.erb)

The majority of the logic is implemented on the client side. After the page is loaded and the rest of the ReactJS interface is rendered, the heatgrid is generated dynamically on the "Your Scores" view. A series of functions in the JavaScript asset files view_team_in_grades.js and answer_tags.js is called to render the appropriate element for each view. These functions are described below, organized in calling order.

Initialization and Rendering - view_team_in_grades.js

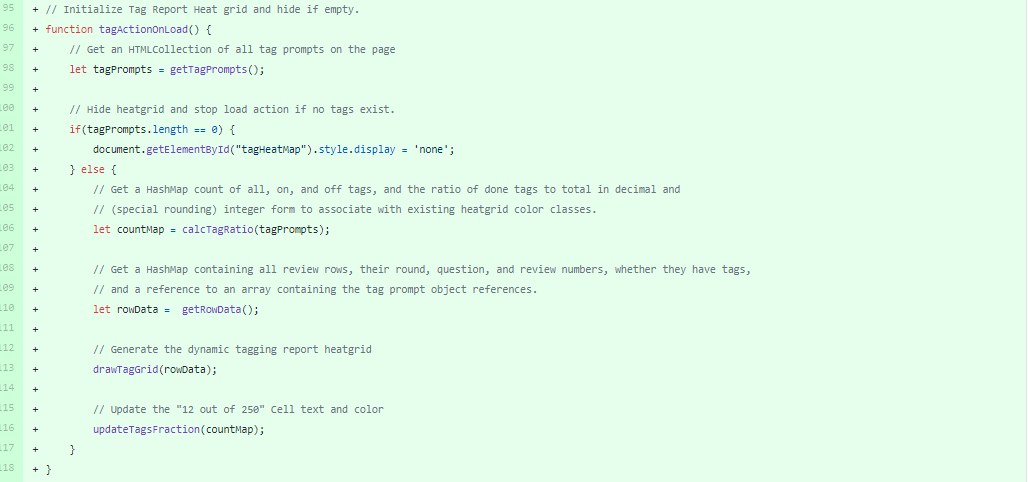

tagActionOnLoad()

Called by document.Ready() event handler, this function first calls getTagPrompts(), determines if zero exist, and exits if so. If tag prompts exist, it continues to call other functions which query the page for information and render the tag heatgrid.

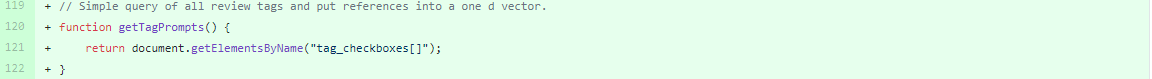

getTagPrompts()

A simple JavaScript query that collects all the tag prompts as an HTMLCollection for use with other functions.

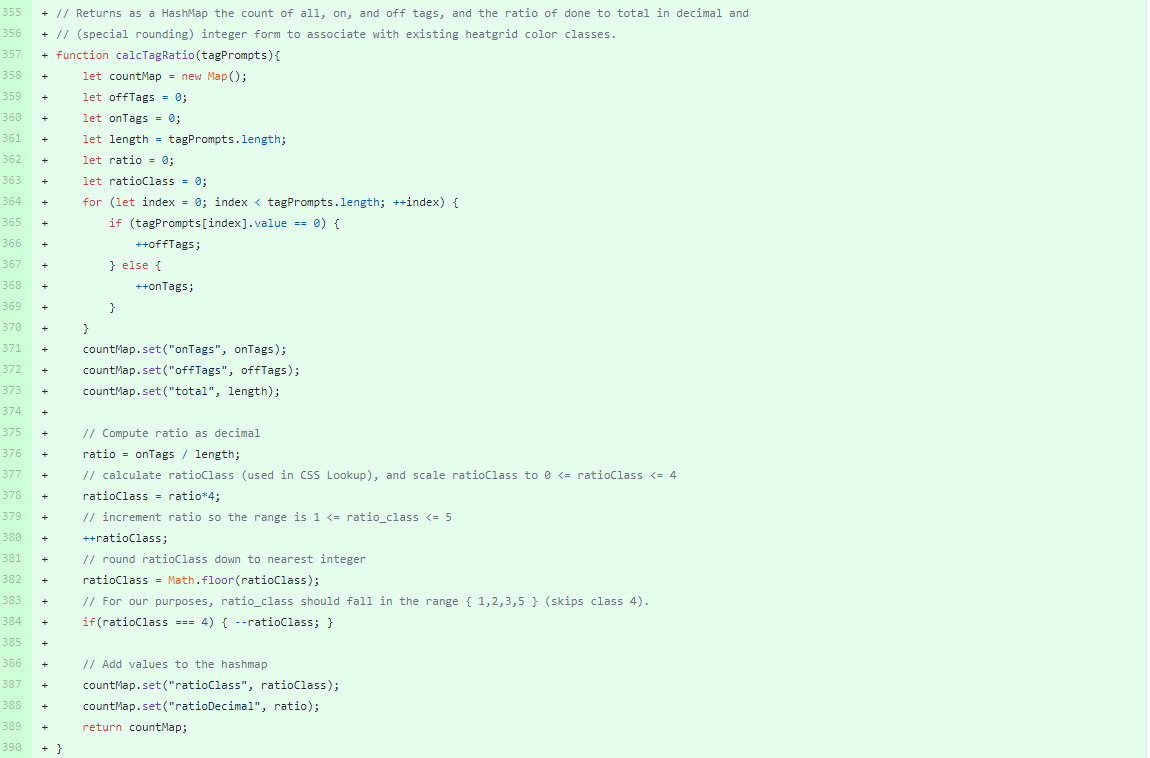

calcTagRatio()

A mathematics helper function that uses the tag prompt collection to gather the data for the tag heatgrid header (the "tag fraction"), which reads, for example, "25 out of 350". The function collects the numeric counts, calculates the completion ratio as a decimal, and maps that decimal to a value used to reference the heatgrid color classes (c0 grey, c1 red, c2 orange, c3 yellow, c4 light green, c5 dark green). Note that we avoided the light green class because it is too easily confused with dark green, which means "all done", so logic in this function skips straight from c3 yellow at 0.9999 completion to c5 dark green at 1.0000 completion.
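One way to express this mapping is sketched below. It is an illustration only: the equal-thirds thresholds for c1 to c3, the use of c0 when no prompts exist, and the shape of the returned object are assumptions, not the project's calcTagRatio().

```javascript
// Sketch only: thresholds and return shape are assumptions, not the project's code.
function calcTagRatioSketch(tagged, total) {
  if (total === 0) {
    // No tag prompts at all: fall back to the grey class.
    return { tagged: 0, total: 0, ratio: 0, colorClass: "c0", text: "0 out of 0" };
  }
  const ratio = tagged / total;
  let colorClass;
  if (tagged === total) {
    // Only a fully tagged page is shown in dark green.
    colorClass = "c5";
  } else {
    // Incomplete work maps onto c1..c3 in equal thirds; c4 is never produced.
    colorClass = "c" + Math.min(3, Math.floor(ratio * 3) + 1);
  }
  return { tagged, total, ratio, colorClass, text: tagged + " out of " + total };
}

console.log(calcTagRatioSketch(25, 350).text);           // 25 out of 350
console.log(calcTagRatioSketch(9999, 10000).colorClass); // c3
console.log(calcTagRatioSketch(350, 350).colorClass);    // c5
```

Comparing the integer counts for the "all done" case, rather than testing the ratio against 1.0, avoids relying on floating-point equality.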

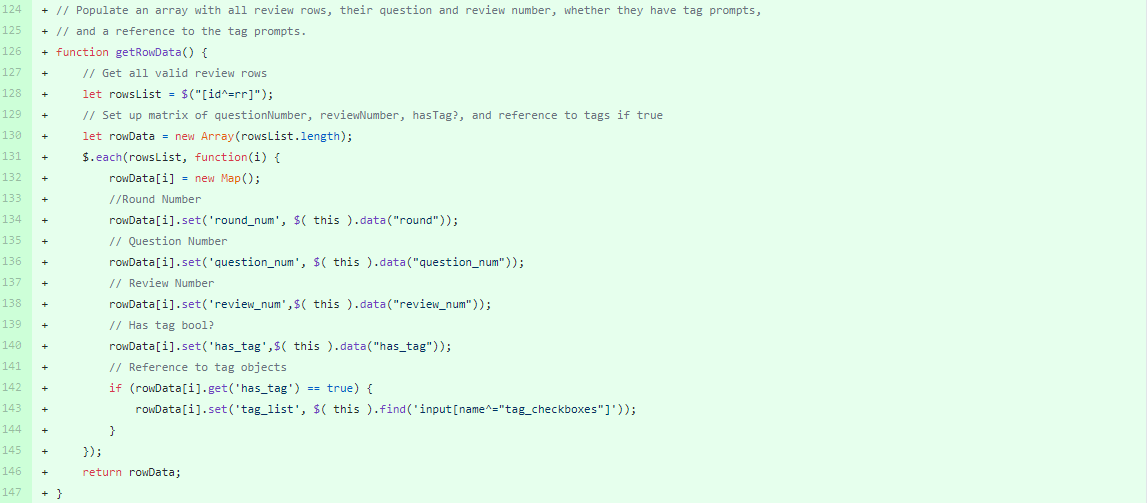

getRowData()

This method uses jQuery to retrieve the row containers for all questions in all rounds of this review, whether they contain tag prompts or not. This allows us to populate the heatgrid with 'grey - universal No-symbol' for reviews whose length metric is too low to have a tag prompt associated.

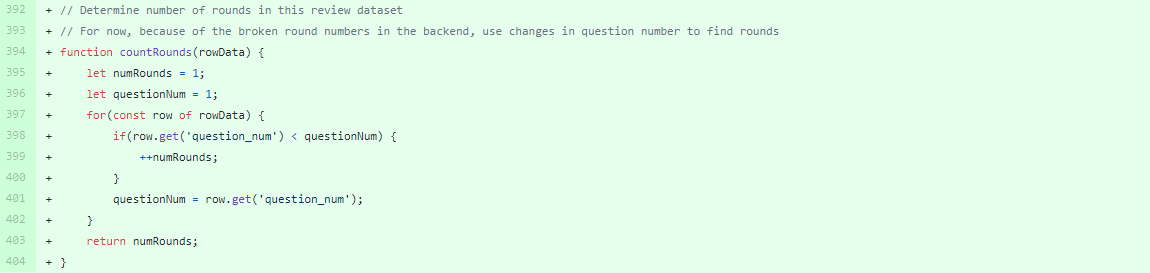

countRounds()

This function parses the rows of the review to determine how many rounds of reviews exist in the assignment. This allows us to intelligently print "Round 2 -- Question 3" for multi round reviews, or "Question 3" for single round reviews.
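A minimal sketch of this idea follows, assuming each row is represented by a plain object whose round property mirrors the data-round attribute. The function names and the row shape are hypothetical, and the real countRounds() may derive the count differently.

```javascript
// rows: plain objects standing in for the review-row containers.
// Assumption: each carries the round number stored in data-round.
function countRoundsSketch(rows) {
  return new Set(rows.map((row) => row.round)).size;
}

// Builds the question-row label, adding the round prefix only when needed.
function questionLabelSketch(roundCount, round, questionNum) {
  const label = "Question " + questionNum;
  return roundCount > 1 ? "Round " + round + " -- " + label : label;
}

const twoRoundRows = [{ round: 1 }, { round: 1 }, { round: 2 }];
const rounds = countRoundsSketch(twoRoundRows);
console.log(questionLabelSketch(rounds, 2, 3)); // Round 2 -- Question 3
console.log(questionLabelSketch(1, 1, 3));      // Question 3
```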

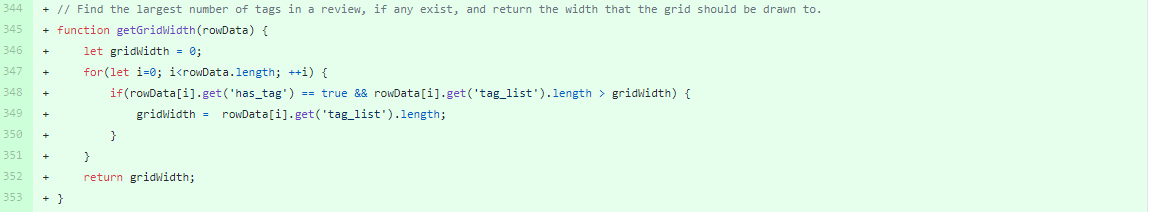

getGridWidth()

This function parses the row data to determine how many tag prompts were used in this deployment using a simple selection search for the largest number of tags in a review. Since different tag deployments use different numbers of prompts, the rendering of the heatgrid needs to address this flexibility. Furthermore, the design of this function will allow for future flexibility of tag deployments, i.e., where different reviews in the same assignment or round may have different numbers of tags.
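The search for the widest row can be sketched as a single linear scan. The tagCount property is a hypothetical stand-in for however the real function counts the tag prompts inside a row.

```javascript
// rows: plain objects standing in for review rows.
// Assumption: tagCount is the number of tag prompts found in that row.
function getGridWidthSketch(rows) {
  let width = 0;
  for (const row of rows) {
    if (row.tagCount > width) {
      width = row.tagCount; // remember the largest count seen so far
    }
  }
  return width;
}

console.log(getGridWidthSketch([{ tagCount: 0 }, { tagCount: 5 }, { tagCount: 3 }])); // 5
```

Because the width is taken from the row with the most tags, rows with fewer tags (or none) can still be laid out on the same grid.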

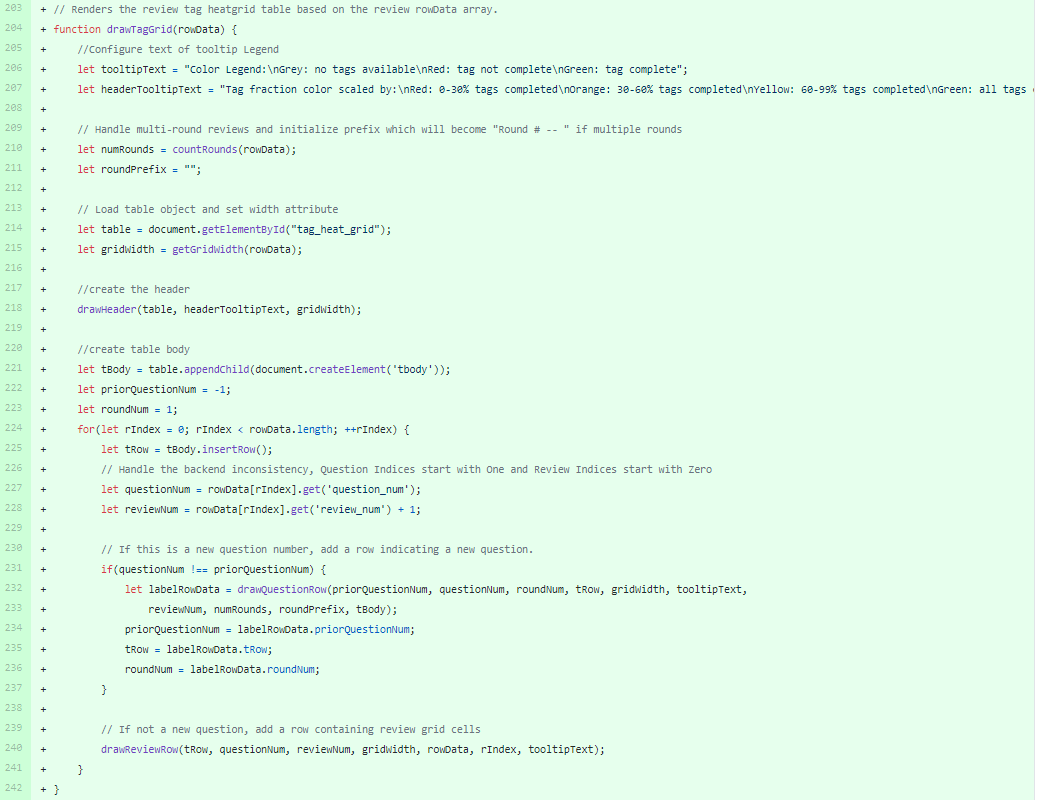

drawTagGrid()

This is the master function for the rendering of the tag grid. This function sets up tooltip text, calls countRounds() and getGridWidth() and passes control to the three sub-functions, drawHeader(), drawQuestionRow(), and drawReviewRow(), depending on what type of item needs to be rendered next.

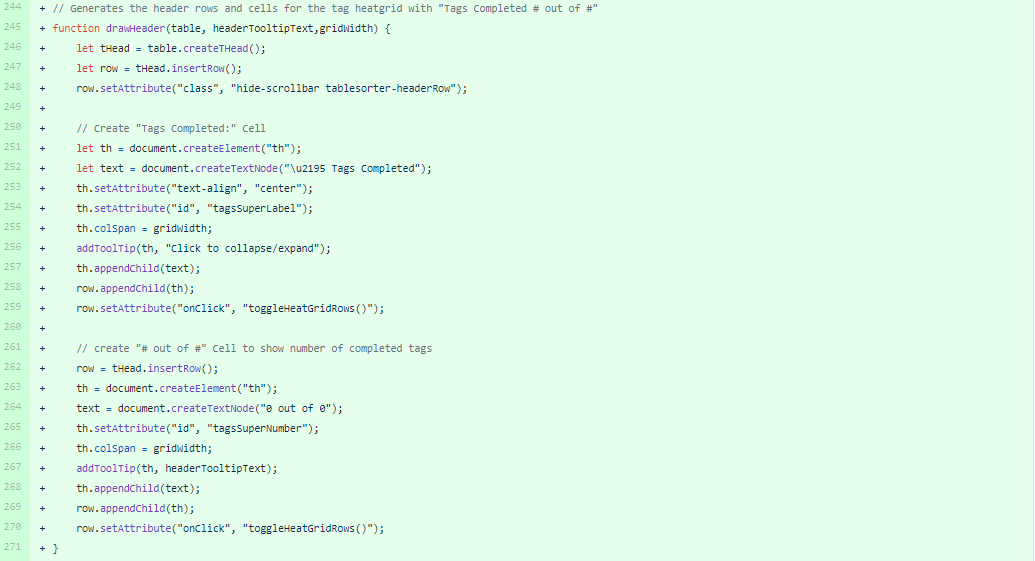

drawHeader()

This function draws the two-row header of the tag heatgrid which will contain "Tags Completed" and "15 out of 425". It also calls addToolTip() to add the tooltips for expand/contract, and the color legend for the tag fraction row.

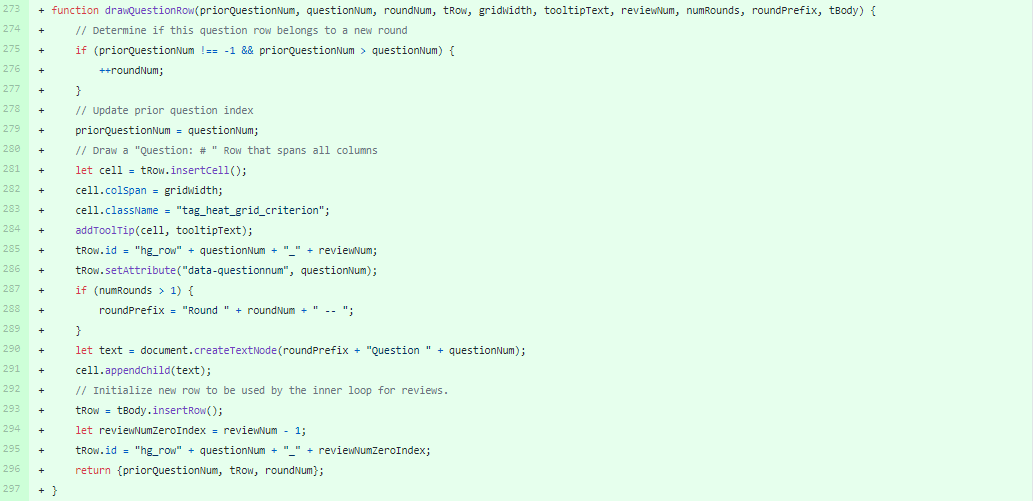

drawQuestionRow()

This function is called once per question during the rendering process, and draws the row which will read, "Question 3" or "Round 2 -- Question 3". This also calls addToolTip() to add a color legend tooltip for the body of the heatgrid.

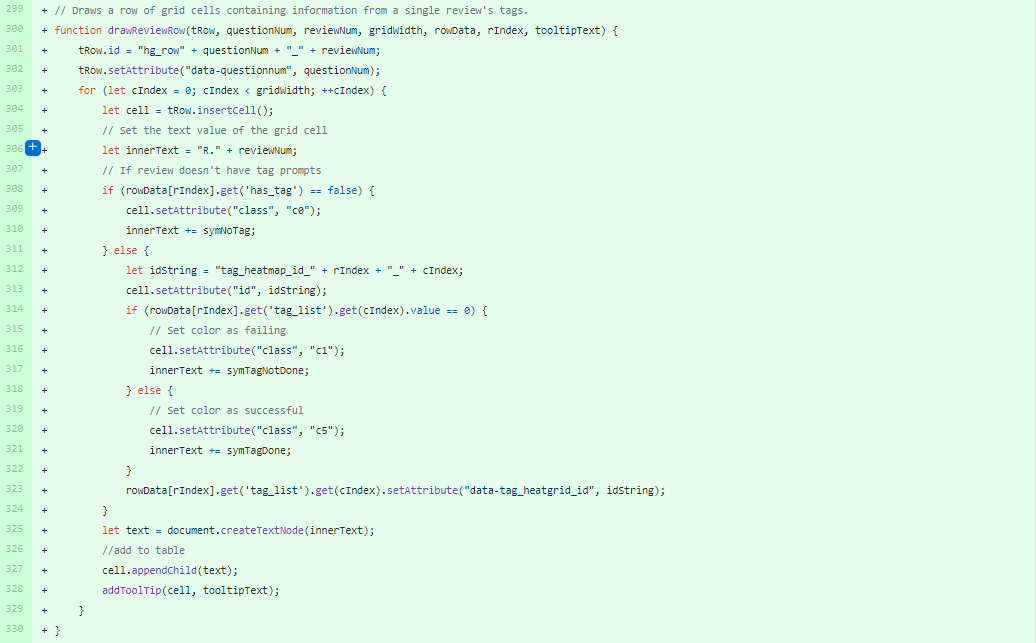

drawReviewRow()

This function draws each row showing the presence or absence of tag prompts for each review. Each row contains R.# (Review.number) and a Unicode symbol (set in the global variables). The Unicode symbol provides accessibility for users who cannot see the red/green spectrum clearly, and is populated with a Universal No to mean no tags available, a Warning symbol to mean tags not done, or a Check-Mark to indicate tags done.
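The choice of symbol can be sketched as a small pure function. The specific code points below are assumptions chosen for illustration, as are the function and constant names; the project sets its own symbols in global variables.

```javascript
// Assumed code points, for illustration only.
const SYMBOLS_SKETCH = {
  noTag: "\u{1F6AB}", // "no entry" sign: no tag prompt available
  notDone: "\u26A0",  // warning sign: tag prompt not completed
  done: "\u2714",     // check mark: tag prompt completed
};

// Picks the symbol for one heatgrid cell.
function cellSymbolSketch(hasTag, isDone) {
  if (!hasTag) {
    return SYMBOLS_SKETCH.noTag;
  }
  return isDone ? SYMBOLS_SKETCH.done : SYMBOLS_SKETCH.notDone;
}

// Builds the "R.#" label for a review row.
function reviewLabelSketch(reviewNum) {
  return "R." + reviewNum;
}

console.log(reviewLabelSketch(4) + " " + cellSymbolSketch(true, false));
```

Pairing each color with a distinct character means the grid stays readable even when the colors themselves cannot be distinguished.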

Lastly, a call is made to updateTagsFraction() as part of the initial render to update the "12 out of 230" header row in the tag grid.

Updating and Interaction Functions - view_team_in_grades.js

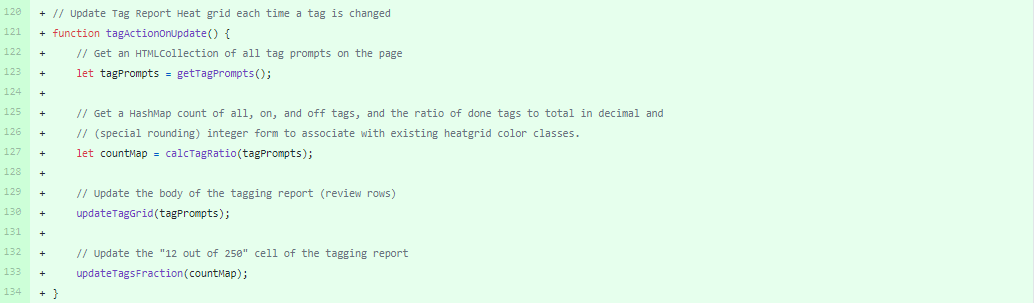

tagActionOnUpdate()

Each time a tag prompt is changed, this function is called to update the heatgrid. This function calls several other functions, some of which have been used previously in the rendering step.

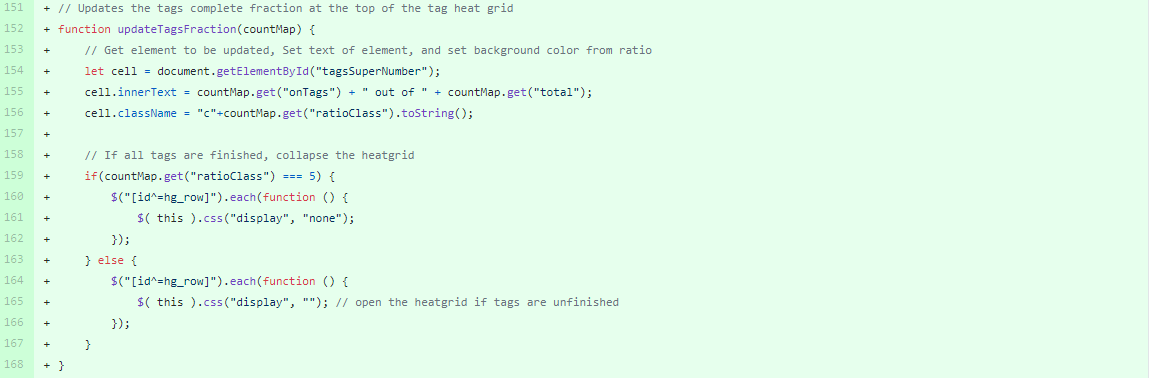

updateTagsFraction()

This function is used once in the rendering step, and again each time a tag prompt is changed. It takes the data from calcTagRatio() and updates the contents and color of the header row in the tag heatgrid ("12 out of 220").

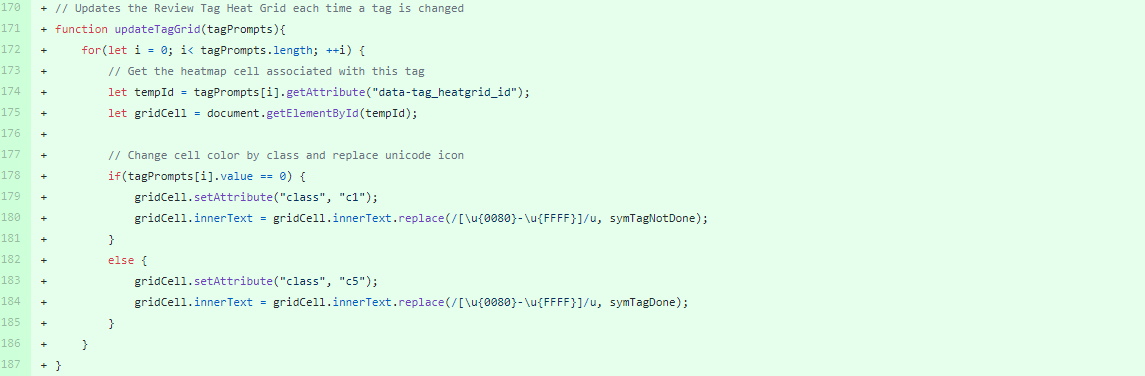

updateTagGrid()

This function queries the tag prompts on the page, and uses these data to update the tag heatgrid class and text.

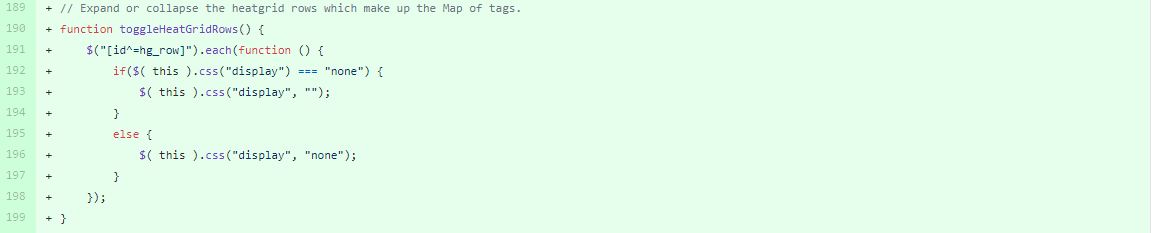

toggleHeatGridRows()

This function collapses the heatgrid down to only the two header rows. Note that it is called both from the onClick of the heatgrid header, so a user can collapse the grid manually, and automatically when the tag fraction is updated. This ensures that a user visiting the page with all tags complete will see a collapsed heatgrid by default.

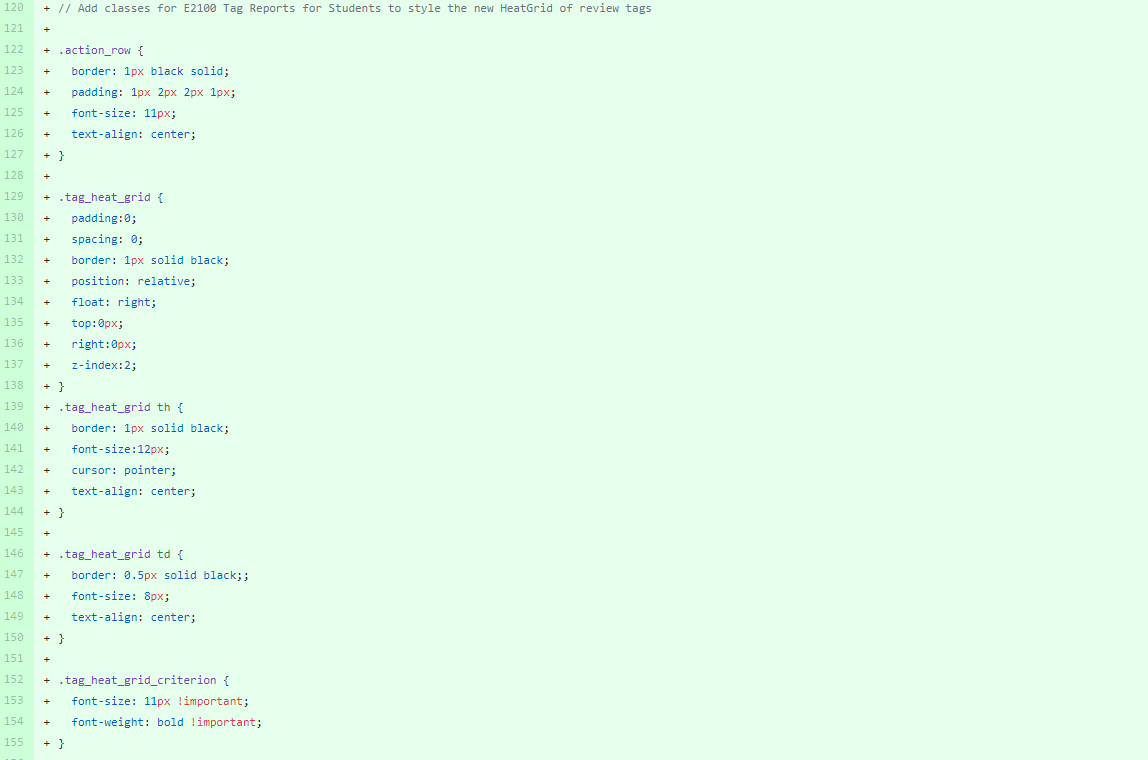

Stylesheets - grades.scss

We augmented the stylesheets used in grades so that the new heatgrid could be styled as small as possible to save page real estate. To do so, we added new classes in grades.scss.

Client-Side Implementation: "Alternate View" (view_my_scores.html.erb)

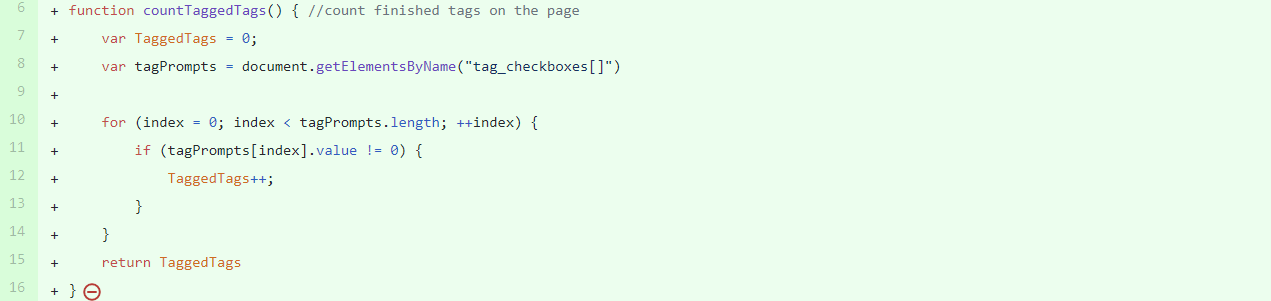

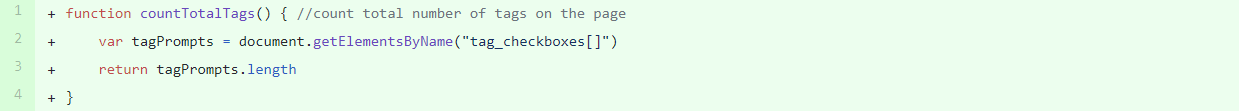

countTotalTags()

A simple JS function to count all the tag elements on current page.

countTaggedTags()

Testing

(Needs Completion) (Also need a note about no changes to models/controllers, only HTML views and JS)

(Needs Completion)

Testing HTML changes using Capybara

(Testing Information)

Additional Modifications -- Bugfix in Assignment Model and Grades Controller

In developing the tagging report, the team noticed a significant, pre-existing view bug on view_team in the beta branch of Expertiza. This bug was causing rows of the review heatgrid to not be populated when the page was rendered. The team is still in communication with the main Expertiza development team to review and verify the validity of these modifications. At the time of this document, all build tests are passing.

Screenshot with patch applied:

Origin of Bug

We discovered a twofold cause of this bug. In part, in the grades controller, calls were being made to an ActiveRecord query, @assignment.vary_by_round, which was always returning zero. Upon changing this query to use the helper method, varying_rubrics_by_round? from the Assignment model, we discovered that a recent change to the logic of varying_rubrics_by_round? was also causing unexpected behavior. We have included four modifications to our pull request that patch this bug.

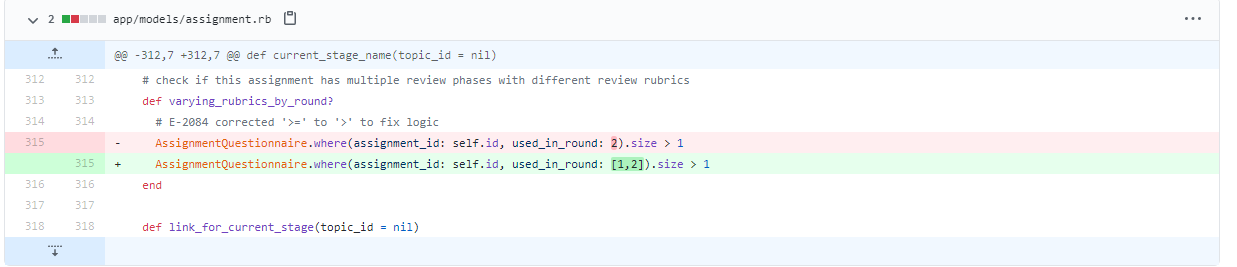

Patches to Assignment model

In the varying_rubrics_by_round? method in the Assignment model, we discovered a recent change to the logic that was causing this method to test whether there were 2 or more AssignmentQuestionnaires assigned to Round 2 of reviews. Instead, this method should test whether there are 2 or more AssignmentQuestionnaires used on the assignment. We changed this logic to query using used_in_round [1,2] instead of used_in_round 2, which should evaluate .size > 1 TRUE if each round has a different AssignmentQuestionnaire.
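The difference between the two versions of the check can be modeled as follows. This is a JavaScript model of the counting logic only: the real method is Ruby and queries the database, and the object shape, property names, and function names here are hypothetical.

```javascript
// Each object stands in for one AssignmentQuestionnaire row.
// usedInRound mirrors the used_in_round column (null when no round is set).

// Old logic: counts only the rows assigned to round 2.
function variesByRoundOldSketch(assignmentQuestionnaires) {
  return assignmentQuestionnaires.filter((aq) => aq.usedInRound === 2).length > 1;
}

// Patched logic: counts the rows assigned to round 1 or round 2.
function variesByRoundPatchedSketch(assignmentQuestionnaires) {
  return assignmentQuestionnaires.filter((aq) => [1, 2].includes(aq.usedInRound)).length > 1;
}

const oneRubricPerRound = [
  { questionnaireId: 1, usedInRound: 1 },
  { questionnaireId: 2, usedInRound: 2 },
];

console.log(variesByRoundOldSketch(oneRubricPerRound));     // false
console.log(variesByRoundPatchedSketch(oneRubricPerRound)); // true
```

With one rubric per round, the old check finds only a single round-2 row and reports false, while the patched check sees both rows and reports true.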

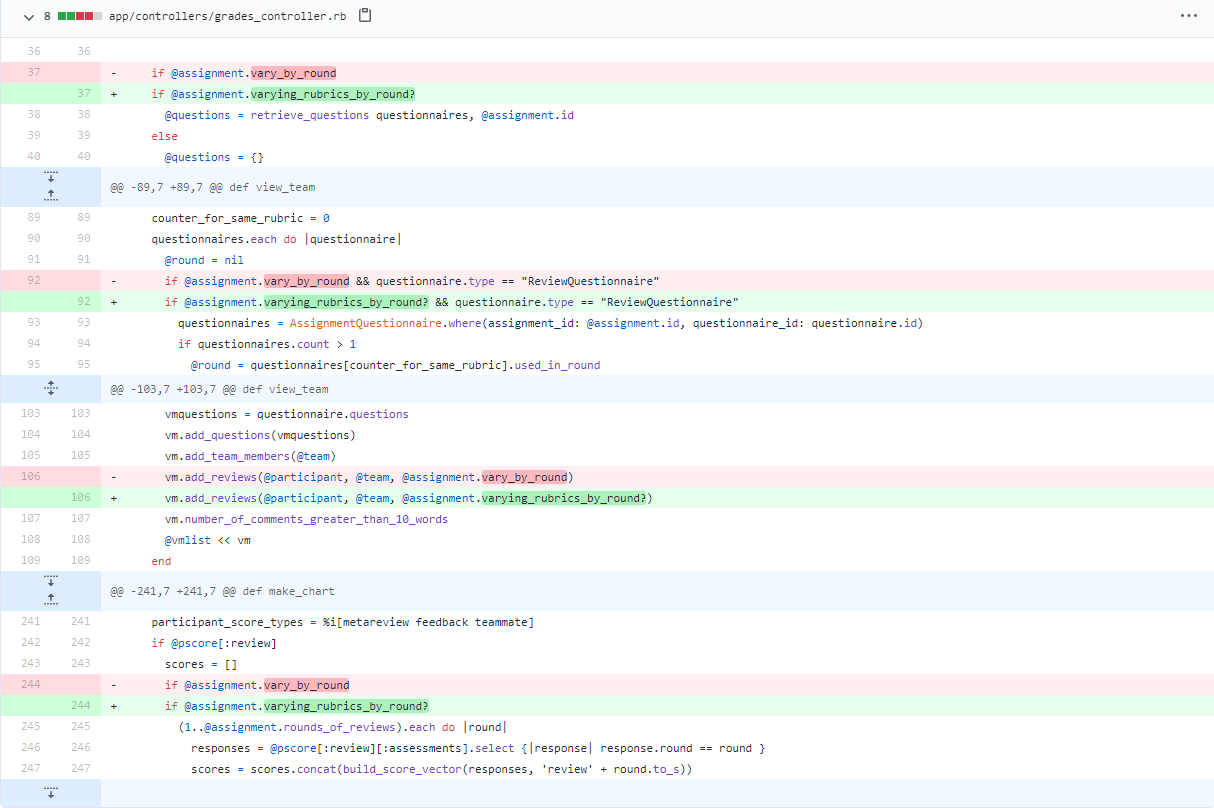

Patches to Grades Controller

In the grades controller, queries were being made to @assignment.vary_by_round instead of the helper method, @assignment.varying_rubrics_by_round?. These queries were always returning 0, because that DB field in our DB appears to be 0 for all table rows. We changed the 4 calls from this query to method calls.

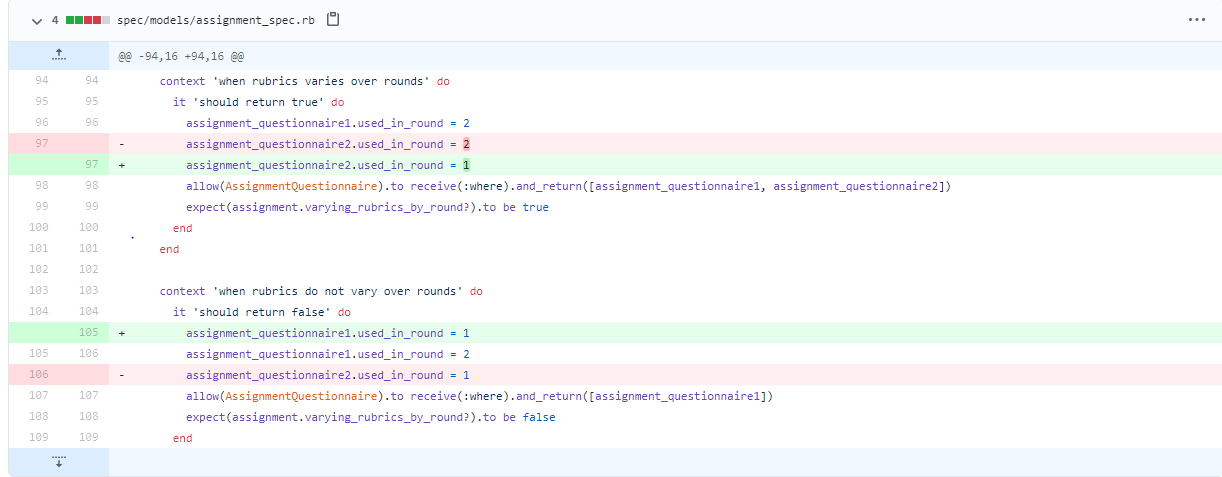

Updates to Assignment Tests

We also discovered an issue with how varying_rubrics_by_round? is tested. It seems, in the unmodified beta branch version, the test that should expect TRUE when rubrics are different for round 1 and 2, is actually testing whether Round 2 has more than 1 rubric assigned to it. We would expect this test to pass when using the old logic in varying_rubrics_by_round?. We modified the TRUE case to assign AssignmentQuestionnaire 1 to round 2, and AssignmentQuestionnaire 2 to round 1.

Also, it seemed that the test for FALSE was testing using one questionnaire in round 1, and a different questionnaire in round 2, which seems logically incorrect for this method. We believe the false case should test for the same rubric across two rounds, in which case the rubrics would not be varying by round. We modified the FALSE case to assign AssignmentQuestionnaire 1 to both round 1 and round 2.

Updates to Grades Controller Tests

Lastly, the changes to how varying_rubrics_by_round? is implemented meant that the two tests for the grades controller which rely on this method needed to be updated to expect the new logic. We implemented these updates to these two tests: