CSC/ECE 517 Spring 2020 - E2015. Conflict notification

Introduction

Expertiza Background

Expertiza is an open-source project written using the Ruby on Rails framework. The Expertiza platform aims to improve student learning by using active and cooperative learning, allowing distance education students to participate in active learning, and by discouraging plagiarism through the use of multiple deadlines. Expertiza helps to improve teaching and resource utilization by having students contribute to the creation of course materials and through peer-graded assignments. Expertiza manages objects related to a course such as instructors, TAs, students, and other users. It also manages assignments, teams, signup topics, rubrics, peer grading, and peer evaluations.

Reports Background

Students can form teams in Expertiza to work on an assignment in a group. Submissions made on Expertiza can be peer-reviewed by students within the course based on a rubric. Results of the peer review can be accessed by instructors and respective student teams in the form of statistical reports. The students have access to view their feedback in the form of a Summary Report. The student Summary Report shows the scores submitted by the reviewers for each question of the rubric for that assignment. If there is more than one round, it shows the scores separated by round number. The instructors have more options to view student-submitted feedback: Reviewees Summary Reports, which show the average review scores for an assignment for each round along with each reviewer's comments, and Review Reports, which show which teams' assignments were reviewed by each student.

Review Conflict Background

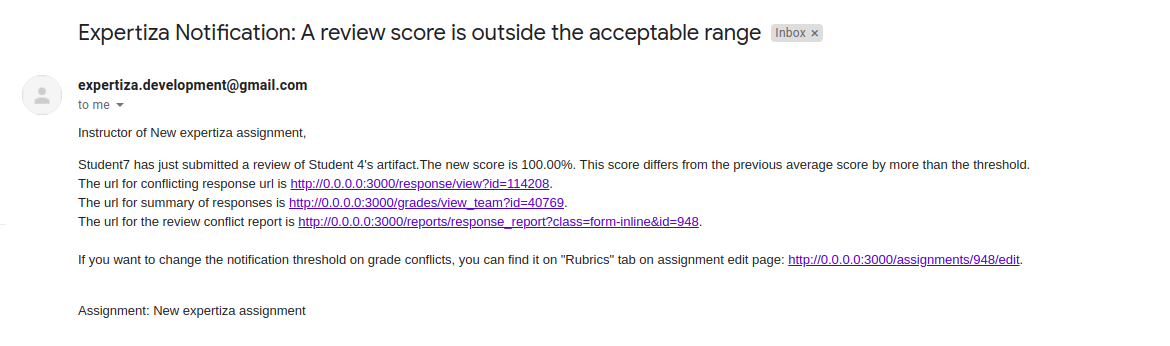

In the current implementation, during the peer review phase of an assignment, email notifications are sent out to the instructor whenever a submitted review score differs “significantly” from the average score of other submitted reviews for that submission. The threshold to trigger a notification is specified in the “Notification limit” on the Rubrics tab of assignment creation. The email that gets sent to instructors contains three links: a link to the conflicting review, a link to the summary page of grades for the reviewee, and a link to edit the assignment.

Problem Statement

When a review is submitted that triggers a conflict, an email is sent to instructors that contains three links: a link to the conflicting review, a link to the summary page of grades for the reviewee, and a link to edit the assignment. Although these links are helpful for reference, examining a conflicting review would be more efficient with an easy-to-understand report of the conflicts. The email should be updated to contain a link to a report page with more details about the newest conflict as well as information on previous review conflicts for the assignment. The conflict report page should have an easy-to-understand visualization showing the assignment's reviews with scores that cause a conflict.

Existing Implementation

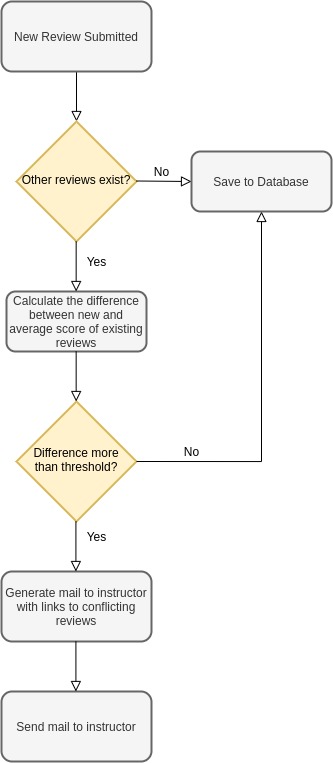

The existing system implements conflict notification as follows. When a new review is submitted for a particular artifact, the submitted score is compared to the average score of the previously submitted reviews. If the difference between the average score and the newly submitted score exceeds the notification threshold specified for the assignment, an email is sent to the instructor. The email contains the names of the reviewer and reviewee, a link to the conflicting review, a link to the summary of review responses, and a link to edit the assignment. The flowchart above delineates the entire process.
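The check described above can be sketched in plain Ruby. This is a minimal illustration, not the code in app/models/response.rb: the method name conflict? is hypothetical, and it assumes the difference is measured as a percentage of the maximum score and compared against a notification limit given in percent (for example 15 for 15%).

```ruby
# Hypothetical sketch of the conflict check; not Expertiza's actual code.
# Assumption: the gap between the new score and the average of earlier
# scores is expressed as a percentage of the maximum possible score and
# compared against a notification limit given in percent.
def conflict?(new_score, previous_scores, max_score, notification_limit)
  # With no earlier reviews there is no average to compare against.
  return false if previous_scores.empty?

  average = previous_scores.sum.to_f / previous_scores.size
  difference_percent = (new_score - average).abs * 100.0 / max_score
  difference_percent > notification_limit
end

# An earlier review gave 100/100; a new review gives 50/100 or 95/100.
puts conflict?(50, [100], 100, 15)  # prints true
puts conflict?(95, [100], 100, 15)  # prints false
```

Comparing against the average of the earlier reviews means the first review of a submission can never trigger a notification, which matches the description of comparing against "previously submitted reviews".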

Problems with Existing Implementation

The existing implementation has the following flaws:

No dedicated report for analyzing the conflict

The current implementation does not provide any dedicated view or report containing a detailed analysis of the conflicts that occur during the entire review period. The link to the conflicting review is helpful, but doesn't provide any context for why that review was in conflict. The link to the summary of review responses provides more insight into how the assignment is being scored overall, but it doesn't give a clear picture of which reviews are in conflict and have scores that are outliers.

Incorrect email URLs

When a review is in conflict and an email is sent to the instructor, the body of the email contains links to the review, the review summary page, and the page for editing the assignment. See below a copy of the email message as it is sent by the current implementation.

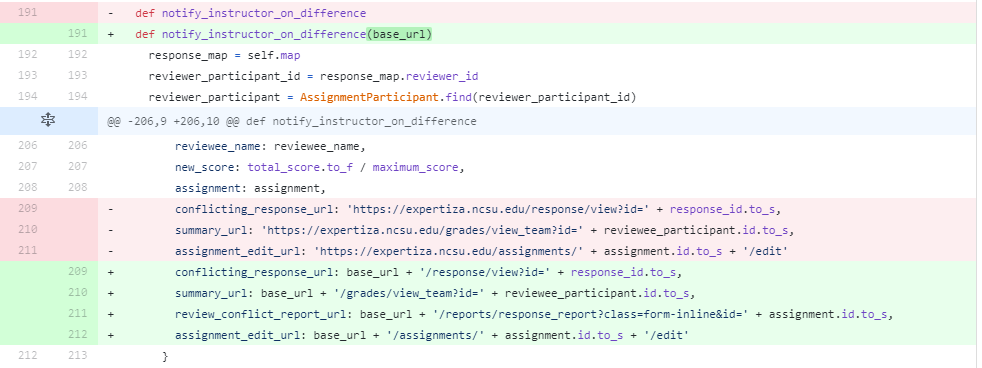

The current implementation uses hardcoded URLs mentioned in the notify_instructor_on_difference method of the models/response.rb file. See the code:

```ruby
conflicting_response_url: 'https://expertiza.ncsu.edu/response/view?id=' + response_id.to_s,
summary_url: 'https://expertiza.ncsu.edu/grades/view_team?id=' + reviewee_participant.id.to_s,
assignment_edit_url: 'https://expertiza.ncsu.edu/assignments/' + assignment.id.to_s + '/edit'
```

Because they are hardcoded, these links would not work on other servers where Expertiza is running. For example, the links are not valid when Expertiza is running on localhost.

Previous Work

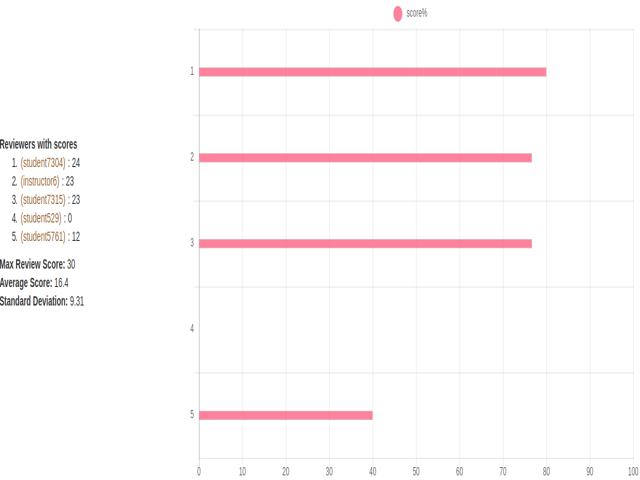

The previous work resolved the email-related issues and also created a simple UI report page for analyzing conflicts. However, the implementation had several issues that prevented it from being merged. The screenshot above shows part of the information on the conflict report page from the previous implementation.

Issues with Previous Work

1. The UI for the conflict report included a bar graph that showed the grades of reviews that were in conflict. The information in the bar graph was showing percentages while the scores shown beside it were in raw points. This is an inconsistency; they should display the data in the same units.

2. The bar graph contained too much white space and took up too much area on the screen.

3. It was also not obvious from the bar graph which review scores caused a conflict as they were not highlighted or distinguished in any way.

4. The bars in the chart were also not sorted in any meaningful way (e.g., ascending or descending).

5. The list of students who reviewed a submission extended vertically down the page, which made each row of the table take up considerable screen space, especially for submissions with many reviews.

6. The metrics listed in the screenshot above don't specify the threshold for conflict. Therefore, you can't tell what review scores would actually cause a conflict.

7. The code for the conflict report view contained a lot of logic that belonged outside the view.

8. There was a bug in the helper method team_members, which gets the team members of an assignment based on their team name. The previous implementation fetched these team members based solely on the team name parameter. Since team names are not unique, this posed a problem.

9. Little effort was spent on refactoring test cases, and the test cases added in the pull request were shallow: they simply asserted that the expected value was not empty rather than asserting that it matched a specific value.

Design of New Implementation

Proposed Solution

As the mailing functionality was implemented correctly in the previous implementation, we plan to use it as our starting point. Building on top of it, we plan to address the highlighted issues with the previous implementation and refactor the conflict report so that it provides better insight into the review conflicts. Once the existing code determines that a conflict has occurred, the code will create a report view for the conflict. The report view will contain all the relevant statistics (mean, max, standard deviation, etc.) of all the reviews received for the submission. Next, a URL for the report page will be added to the email body. All URLs in the email will be built from the base URL of the current request instead of a hardcoded host, so they will be valid on any server. The following files need to be updated to implement these changes:

- Related to email:

  - app/mailers/mailer.rb: modify the method notify_grade_conflict_message to add the link to the conflict report to the email body.

  - app/models/response.rb: modify the method notify_instructor_on_difference to build the links included in the email body from the request's base URL rather than a hardcoded host.

  - app/views/mailer/notify_grade_conflict_message.html.erb: modify the HTML to add a link through which the conflict report can be viewed.

  - app/controllers/response_controller.rb: modify the update and create methods to pass request.base_url as a parameter to the notify_instructor_on_difference method.

- Related to the conflict report view:

  - app/views/reports/_searchbox.html.erb: modify the HTML to add the option to view a conflict report.

  - app/views/reports/response_report.html.haml: modify the template to render the conflict report partial if the option to view a conflict report was selected.

What we need to create:

- Related to the conflict report view:

  - app/views/reports/_conflict_report.html.erb: create this entire file to hold the view for a conflict report.

  - app/helpers/report_formatter_helper.rb: add a method conflict_reponse_map to make specific instance variables available to the conflict report view. This method will be called from the ReportsController.

  - app/helpers/summary_helper.rb: add a method to get the maximum possible score of an assignment in each round.

  - app/models/answer.rb: add a method to get the answers from a review for an assignment submitted by a specific reviewer.

  - app/helpers/review_mapping_helper.rb: add methods to get the review scores for a team in each round, calculate the average review score for a round, calculate the standard deviation of review scores for each round, and get the members of each team. The code that builds the formatted bar chart will also be placed in a helper method in this file, along with any other methods that find and calculate data shown in the report.
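As an illustration of the kind of calculation these helpers perform, the sketch below totals one round's answers per reviewer. It is modeled on the input and expected output of the review_score_helper_for_team spec in the Testing section; the method name total_scores_by_reviewer and its implementation are assumptions, not the helper's actual code.

```ruby
# Hypothetical sketch: total each reviewer's answers for one round.
# Input rows mirror the spec fixtures: hashes with :reviewer_id and :answer.
def total_scores_by_reviewer(review_answers)
  # Hash.new(0) lets us add to a reviewer's total without initializing it.
  review_answers.each_with_object(Hash.new(0)) do |row, totals|
    totals[row[:reviewer_id]] += row[:answer]
  end
end

answers = [
  { reviewer_id: '2', answer: 54 },
  { reviewer_id: '1', answer: 24 },
  { reviewer_id: '2', answer: 25 }
]
totals = total_scores_by_reviewer(answers)
puts totals['2']  # prints 79
puts totals['1']  # prints 24
```

Each reviewer's total is the score of one review, which is the value the report lists under each reviewer and plots as one bar.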

The conflict report view should:

- For each team that had a submission with a review that was in conflict:

  - List the team name and team members.

  - Provide a graphical view of the statistics displayed in the report.

  - For each round of reviews:

    - List the students who reviewed the submission and the score each of them gave.

    - Plot the review scores on a horizontal bar chart, highlighting those that caused a conflict.

    - List the conflict threshold, maximum review score, average review score, and standard deviation.

In order to resolve the issues with the previous team's pull request, we will:

1. Modify the bar chart code to show the point values as raw scores instead of percentages.

2. Modify the bar chart code to eliminate unnecessary white space.

3. Modify the bar chart code to highlight bars representing scores that cause conflict.

4. Modify the bar chart code so that the bars are sorted by ascending score.

5. List the students who reviewed the submission in multiple columns rather than a single long column.

6. Add additional metrics specifying threshold for conflict to the conflict report so you can see what scores would cause a conflict.

7. Put the necessary logic into helper methods in helper files to ensure logic stays out of our views.

8. Modify the team_members method to find the team members based on both the team name and the assignment id.

9. Refactor the necessary affected test cases and write new RSpec tests in accordance with our proposed test plan to ensure adequate coverage.
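Item 8 can be illustrated with a small in-memory sketch. The Team struct, its assignment_id attribute, and both lookup methods are hypothetical stand-ins for what is a database query in Expertiza; the point is only that a team name identifies a team uniquely within an assignment, not across assignments.

```ruby
# Hypothetical in-memory model; attribute and method names are assumptions.
Team = Struct.new(:name, :assignment_id, :members)

TEAMS = [
  Team.new('Team 1', 10, %w[alice bob]),
  Team.new('Team 1', 20, %w[carol dave]) # same name, different assignment
].freeze

# Ambiguous lookup (the old behavior): returns whichever "Team 1" comes first.
def team_members_by_name(name)
  team = TEAMS.find { |t| t.name == name }
  team ? team.members : []
end

# Scoped lookup: match on both the team name and the assignment id.
def team_members(name, assignment_id)
  team = TEAMS.find { |t| t.name == name && t.assignment_id == assignment_id }
  team ? team.members : []
end

puts team_members('Team 1', 20).join(', ')  # prints carol, dave
```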

UML Diagrams

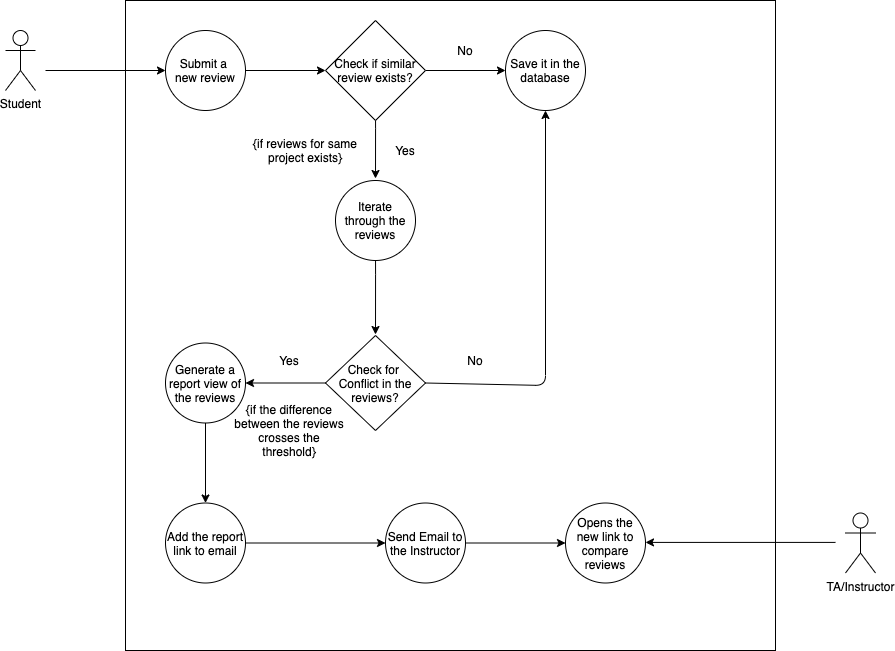

Use Case Diagram

The use case diagram highlights the simple workflow of the proposed system when triggered by the user.

Actors participating:

- Student: In this use case, fills out the reviews for different projects submitted by their peers.

- TA/Instructor: In this use case, goes over the conflicting review submitted by the students for the same project.

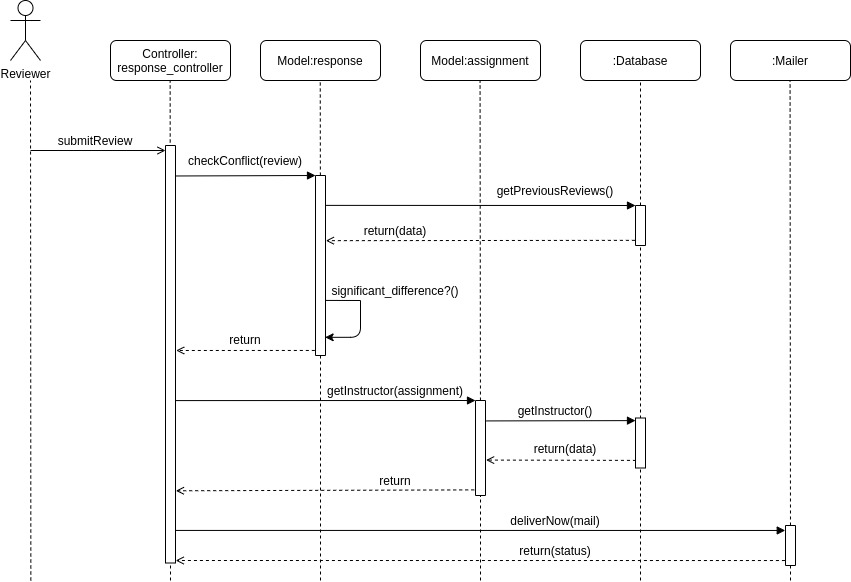

Sequence Diagram

The sequence diagram models the interactions that will take place between various components of the system for the above use-case.

New Implementation

Addressing Problems with Conflict Emails and Mailer

To address the problem of hardcoded URLs being sent in the email to instructors, we modified the notify_instructor_on_difference method in app/models/response.rb to accept a base_url parameter, which is set from request.base_url. This keeps the links correct on any server, including one running on localhost.
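As a rough illustration of this change, the sketch below builds the three email links from a base URL. The method name conflict_email_urls and its keyword arguments are hypothetical, and the paths are copied from the previously hardcoded URLs; this is not the code in app/models/response.rb.

```ruby
# Hypothetical sketch: build the email links from the request's base URL
# instead of a hardcoded host. Paths come from the old hardcoded URLs.
def conflict_email_urls(base_url, response_id:, reviewee_participant_id:, assignment_id:)
  base = base_url.chomp('/') # tolerate a trailing slash on the base URL
  {
    conflicting_response_url: "#{base}/response/view?id=#{response_id}",
    summary_url: "#{base}/grades/view_team?id=#{reviewee_participant_id}",
    assignment_edit_url: "#{base}/assignments/#{assignment_id}/edit"
  }
end

urls = conflict_email_urls('http://localhost:3000',
                           response_id: 42, reviewee_participant_id: 7, assignment_id: 3)
puts urls[:assignment_edit_url]  # prints http://localhost:3000/assignments/3/edit
```

Passing the base URL in from the controller keeps the model free of any knowledge about the host it is deployed on.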

Addressing Problems with Conflict Report and Previous Implementation

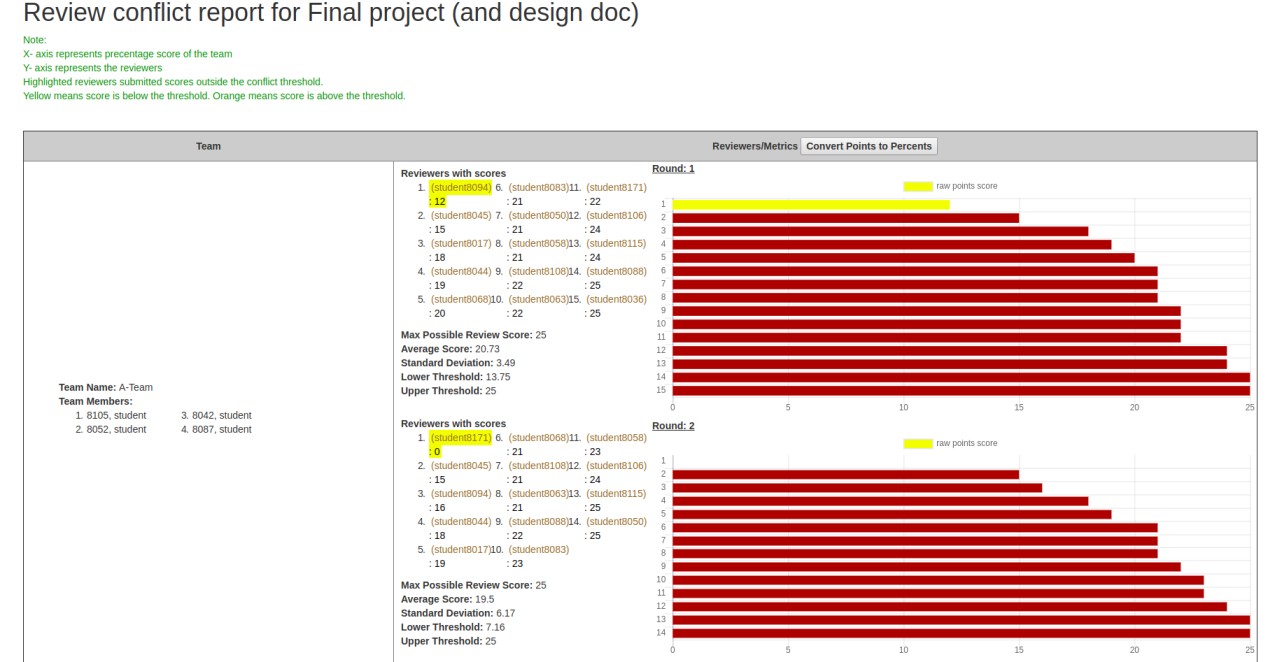

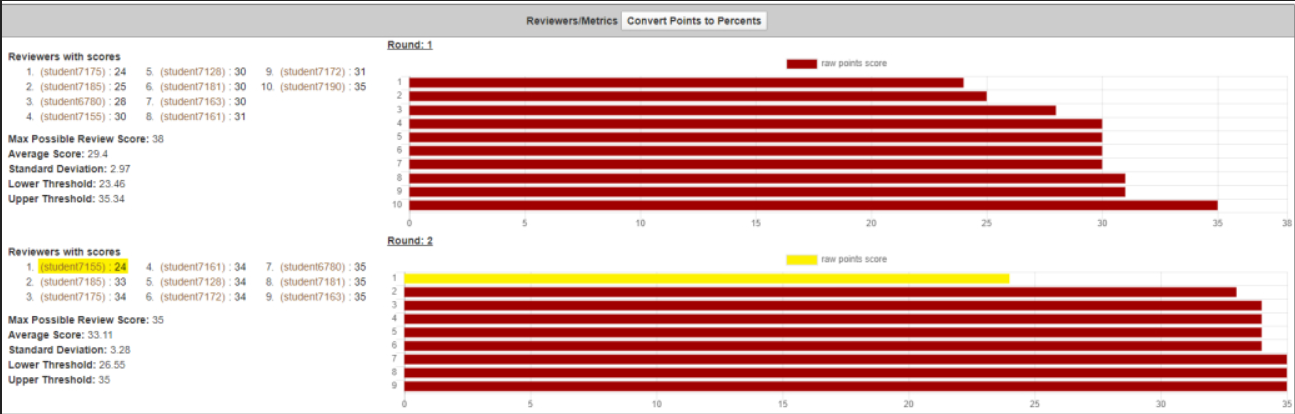

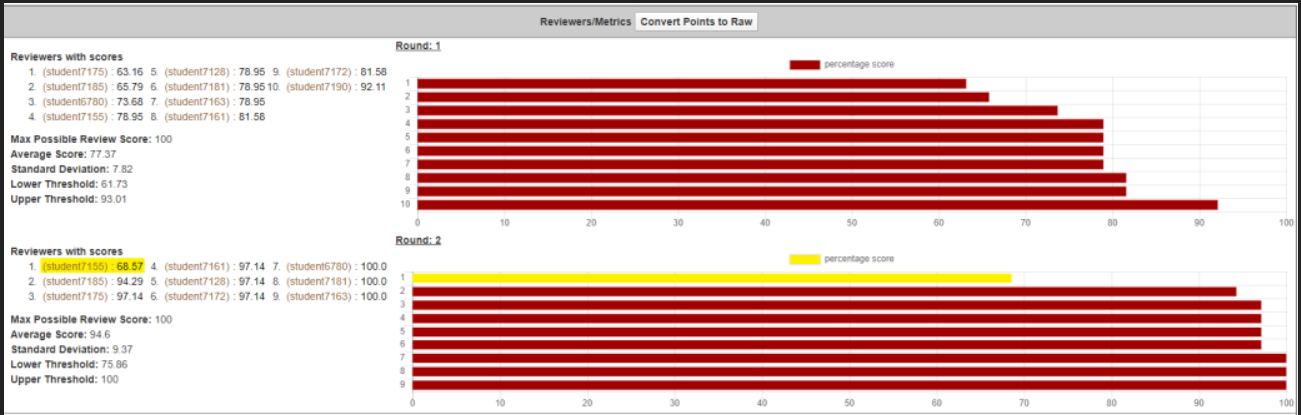

In our new implementation of the Review Conflict Report view, the graphs for each submission look like:

In the picture above you can see that issues with the previous implementation have been fixed by our code changes.

1. The UI for the conflict report included a bar graph that showed the grades of reviews that were in conflict. The information in the bar graph was showing percentages while the scores shown beside it were in raw points. This is an inconsistency; they should display the data in the same units.

Solution: We changed the code of the bar chart to initially show the scores as raw point values, matching the raw point values shown under "Reviewers with scores." We also added a button that converts the bar chart, review scores, and all metrics to percentages and back to raw points. This makes it easy for an instructor to switch between analyzing conflicts using raw point values or percentages.
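The arithmetic behind the points-to-percentages toggle is a simple rescaling by the maximum score. This document does not show how the button is implemented, so the Ruby sketch below only illustrates the math; the method name to_percent and the two-decimal rounding are assumptions.

```ruby
# Hypothetical sketch of the points-to-percent conversion.
# Assumption: percentages are rounded to two decimal places for display.
def to_percent(points, max_score)
  (points * 100.0 / max_score).round(2)
end

scores = [40, 50, 60]
max_score = 80
puts scores.map { |s| to_percent(s, max_score) }.inspect  # prints [50.0, 62.5, 75.0]
```

Converting back to raw points is the inverse, percent * max_score / 100, so the metrics (average, standard deviation, limits) can be rescaled the same way as the individual scores.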

2. The bar graph contained too much white space and took up too much area on the screen.

Solution: We reduced the size of the graph by making it proportional to the number of review scores. The code looks like: chartSize = 5 + 2*scores.length, where scores is the array that contains the review scores for a specific round.

3. It was also not obvious from the bar graph which review scores caused a conflict as they were not highlighted or distinguished in any way.

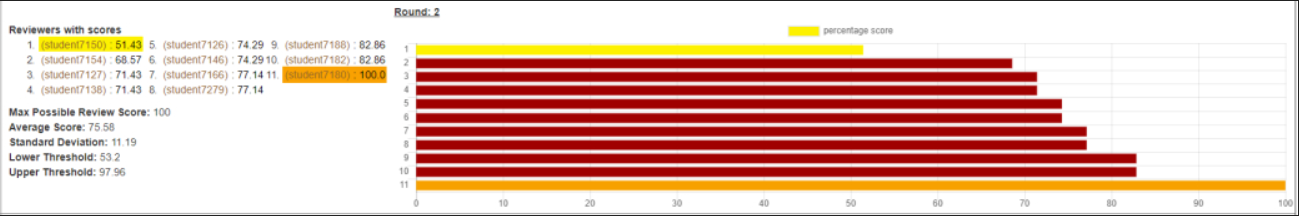

Solution: We highlighted the review scores that fell below the lower threshold in yellow and the review scores that fell above the upper threshold in orange. This can be seen in the screenshot above.

4. The bars in the chart were also not sorted in any meaningful way (i.e., ascending or descending)

Solution: We sorted the score data to be ascending before building the bar chart.
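The three chart fixes (size, highlighting, and sorting) can be sketched together as a data-preparation step. The colors and the chartSize formula come from the solutions above; the Bar struct, the 'default' color for in-range bars, and the method names are assumptions, and the real chart is drawn by the report's view code rather than by this Ruby.

```ruby
# Hypothetical sketch of preparing one round's bar-chart data.
Bar = Struct.new(:reviewer, :score, :color)

# Sort scores ascending and tag each bar: yellow below the lower limit,
# orange above the upper limit, 'default' (an assumption) otherwise.
def chart_bars(review_scores, lower_limit, upper_limit)
  review_scores.sort_by { |_reviewer, score| score }.map do |reviewer, score|
    color =
      if score < lower_limit then 'yellow'
      elsif score > upper_limit then 'orange'
      else 'default'
      end
    Bar.new(reviewer, score, color)
  end
end

# Chart size grows with the number of bars: chartSize = 5 + 2*scores.length.
def chart_size(scores)
  5 + 2 * scores.length
end

bars = chart_bars({ 'r1' => 90, 'r2' => 40, 'r3' => 75 }, 50, 85)
bars.each { |b| puts "#{b.reviewer}: #{b.score} (#{b.color})" }
puts chart_size(bars)  # prints 11
```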

5. The list of students who reviewed a submission extended vertically down the page, which made each row of the table take up considerable screen space, especially for submissions with many reviews.

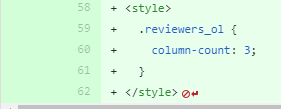

Solution: We added CSS styling to arrange reviewer names and scores in three columns.

6. The metrics listed in the screenshot above don't specify the threshold for conflict. Therefore, you can't tell what review scores would actually cause a conflict.

Solution: We added the lower and upper threshold metrics to the report page so users could understand why certain review scores caused a conflict.
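This document does not spell out how the lower and upper limits are computed. The sketch below is one formula that reproduces the expected values of the get_score_metrics spec in the Testing section (average 50.0, standard deviation 8.16, limits 50 and 33.68 for scores 50, 40, 60 with a maximum of 50). It assumes a population standard deviation rounded to two decimals and limits of average plus or minus two standard deviations, clamped to the range from 0 to the maximum score; treat it as an inferred sketch, not the helper's actual code.

```ruby
# Hypothetical sketch of the conflict-report metrics. Assumptions:
# population standard deviation rounded to 2 decimals, and tolerance limits
# of average +/- 2 standard deviations clamped to [0, max_score].
def score_metrics(review_scores, max_score)
  scores = review_scores.values
  average = scores.sum.to_f / scores.size
  variance = scores.sum { |s| (s - average)**2 } / scores.size
  std = Math.sqrt(variance).round(2)
  {
    average: average.round(2),
    std: std,
    upper_tolerance_limit: [(average + 2 * std).round(2), max_score].min,
    lower_tolerance_limit: [(average - 2 * std).round(2), 0].max
  }
end

metrics = score_metrics({ '1' => 50, '2' => 40, '3' => 60 }, 50)
puts metrics[:std]                    # prints 8.16
puts metrics[:lower_tolerance_limit]  # prints 33.68
```

Under these assumptions a review is highlighted when its score falls outside the two limits; clamping keeps a limit from pointing at a score no reviewer could give.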

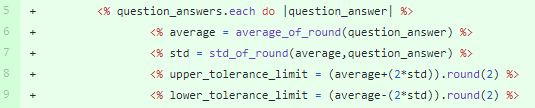

7. The code for the conflict report view included a lot of logic.

The screenshot above, taken from a .html.erb view file, shows one example of logic that the previous team embedded in the view.

Solution: We moved the logic from the view to a helper method in app/helpers/review_mapping_helper.rb

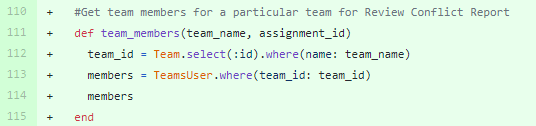

8. There was a bug in the helper method team_members, which gets the team members of an assignment based on their team name. The previous implementation fetched these team members based solely on the team name parameter. Since team names are not unique, this posed a problem.

Their code looked like:

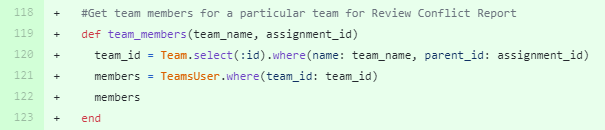

Solution: We fetch the team members using the team name but also the assignment id.

Our implementation looks like:

9. Little effort was spent on refactoring test cases, and the test cases added in the pull request were shallow: they simply asserted that the expected value was not empty rather than asserting that it matched a specific value.

Solution: We added new RSpec tests to check that the reports_controller renders the correct page, added tests for the functionality of our helper methods, and added an RSpec test to answer_spec.rb for the method answers_by_round_for_reviewee, which we added to help produce the conflict report. For more information about our RSpec tests and test coverage, see the Testing section.

Testing

Both manual testing and RSpec testing were used to test the new functionalities.

RSpec Testing

To test the changes implemented in the model, controller, and helper files, new RSpec tests have been added.

1. Add a new test to spec/controllers/reports_controller_spec.rb

The test checks whether the new review conflict report is routed and rendered properly by the reports controller.

```ruby
describe '#review_conflict_response_map' do
  context 'when type is ReviewConflictResponseMap' do
    it 'renders response_report page with corresponding data' do
      allow(TeammateReviewResponseMap).to receive(:teammate_response_report)
        .with('1')
        .and_return([participant, participant2])
      params = {
        id: 1,
        report: {type: 'ReviewConflictResponseMap'},
        user: 'no one'
      }
      get :response_report, params
      expect(response).to render_template(:response_report)
    end
  end
end
```

2. Add a new test to spec/models/answer_spec.rb

This tests if the query in the newly added method 'answers_by_round_for_reviewee' works properly and fetches a valid result.

```ruby
it "returns answers by reviewer for reviewee in round from db which is not empty" do
  question_answers = Answer.answers_by_round_for_reviewee(@assignment_id, @reviewee_id, @round)
  expect(question_answers).to_not be_empty
  expect(question_answers.first.attributes).to include('answer' => 1, 'reviewer_id' => 1)
end
```

3. Create spec/helpers/review_mapping_helper_spec.rb and add new tests to it

This file tests various calculations performed by the newly added helper methods. The results of these calculations are not only used to display on the report but also used to generate the graph.

```ruby
describe "ReviewMappingHelper" do
  describe "#review_score_helper_for_team" do
    before(:each) do
      @review_answers = [
        {'reviewer_id': '2', 'answer': 54},
        {'reviewer_id': '1', 'answer': 24},
        {'reviewer_id': '2', 'answer': 25}
      ]
    end

    it "The review_score_helper_for_team method calculates the total review scores for each review" do
      question_answers = helper.review_score_helper_for_team(@review_answers)
      expect(question_answers).to include('1' => 24, '2' => 79)
    end
  end

  describe "#get_score_metrics" do
    before(:each) do
      @review_scores = {'1' => 50, '2' => 40, '3' => 60}
    end

    it "The get_score_metrics method calculates various metrics used in the conflict report" do
      metric = helper.get_score_metrics(@review_scores, 50)
      expect(metric[:average]).to eq 50.0
      expect(metric[:std]).to eq 8.16
      expect(metric[:upper_tolerance_limit]).to eq 50
      expect(metric[:lower_tolerance_limit]).to eq 33.68
    end
  end
end
```

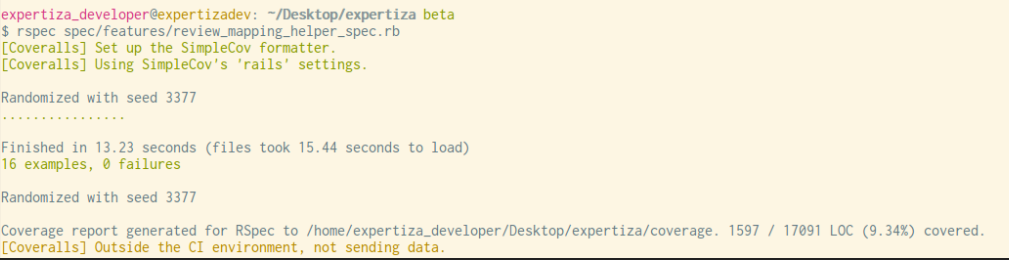

4. Add a new test to spec/features/review_mapping_helper_spec.rb

This UI test navigates to the conflict report page and tests if the report is rendered properly.

```ruby
describe "Test Review conflict report" do
  before(:each) do
    create(:instructor)
    create(:assignment, course: nil, name: 'Test Assignment')
    assignment_id = Assignment.where(name: 'Test Assignment')[0].id
    login_as 'instructor6'
    visit "/reports/response_report?id=#{assignment_id}"
    page.select("Review conflict report", :from => "report[type]")
    click_button "View"
  end

  # Check if the page renders
  it "has a conflicting review table" do
    expect(page).to have_css('table')
  end

  it "can display teams" do
    expect(page).to have_content('Team')
  end

  it "can display author reviewers and metrics" do
    expect(page).to have_selector(:link_or_button, 'Convert Points to Percents')
  end
end
```

All RSpec tests pass for the current implementation, as shown by the passing Travis CI build.

Manual Testing

To test the functionality manually, we performed the following steps:

1. Log in to the instructor account.

2. Create a new assignment with all the required details (name, rubric, submission deadlines) and set the conflict notification threshold to 15%.

3. Add at least 4 participants to the assignment and divide them into two teams of two students each using the 'create teams' action.

4. Impersonate one person from each team and add a submission.

5. In the review phase, log in as each student on the first team and give the second team's submission scores of 100% and 50%, respectively. Submit the reviews.

6. Since the scores differ by more than 15%, an email will be sent to the instructor.

7. Access the email by logging in to the instructor email account and check if the email contains the following details:

- Names of the reviewer and reviewee

- Link to the conflicting review

- Link to the newly created report view corresponding to the conflict

- Link to edit the assignment notification limit

8. The conflict report can be accessed in 2 ways:

i. Access the report page using the link mentioned in the email.

ii. Log in to the instructor account and, in the reports section, select 'Review conflict report'.

The screenshots below display the conflict email and the conflict report.

Demo

The video [1] demonstrates the functionalities implemented in this project.

Suggestions for Future Teams

We would like to see the bar graphs for each round combined into one double horizontal bar graph. This would save a lot of space on the page and make for a cleaner UI. Our team ran out of time to implement this change, as it required refactoring the code in the views, the helper methods, and the RSpec tests.

Files Changed

- Conflict Report

- Response Controller

- Report Format Helper

- Review Mapping Helper

- Summary Helper

- Mailer

- Answer

- Response

- Notify Grade Conflict Message

- Review Conflict Metric

- Review Conflict Report

- Searchbox

- Response Report

- Reports Controller Spec

- Review Mapping Helper Spec (spec/helpers)

- Review Mapping Helper Spec (spec/features)

- Answer Spec

Useful Links

Github: https://github.com/sid189/expertiza/tree/beta

Pull Request: https://github.com/expertiza/expertiza/pull/1714

Team Information

Project Mentor:

Pratik Abhyankar

Project Members:

Sahil Papalkar

Sahil Mehta

Siddharth Deshpande

Carl Klier