CSC/ECE 517 Fall 2023 - NTX-4 Extend NDB Operator capabilities to support Postgres HA

Kubernetes

Kubernetes, often referred to as K8s, is an open-source platform for automating the deployment, scaling, and administration of containerized applications. Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes provides a versatile way to manage complex, distributed applications in modern cloud-native systems.

At the heart of Kubernetes lies the concept of containers: lightweight, isolated environments that run an application together with its dependencies. Docker has long been a popular tool for building and running containers. Current Kubernetes versions run containers through runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O.

To comprehend Kubernetes, one must first understand the role of nodes. These nodes, whether physical or virtual machines, serve as the execution environment for containerized applications. They manage and provide computational resources within a cluster.

Zooming in further, pods are the basic building block of deployment and the smallest deployable units in Kubernetes. A pod holds one or more containers that share a network namespace and storage volumes. Because of this co-location, containers in the same pod can reach each other over localhost.

Next come orchestration and control. A ReplicaSet keeps a specified number of identical pod replicas running, and a Deployment manages ReplicaSets declaratively. Together they are indispensable for scaling applications smoothly and rolling out updates.

Services become important as applications grow and are spread across many pods. A Kubernetes Service gives a set of pods a stable network identity (a virtual IP address and a DNS name), so applications running in pods stay consistently reachable even as individual pods are replaced. Services also load-balance traffic across the pods behind them.

Ingress controls external network access, typically HTTP and HTTPS, to the Services in a cluster. An Ingress resource declares routing rules, and an Ingress controller implements them, giving the cluster an organized way to manage external access.

ConfigMaps and Secrets keep configuration details and sensitive data separate from application code, which makes the application environment safer and easier to administer.

Namespaces provide logical separation and isolation of resources inside a cluster. They make it easier to organize resources and to implement multi-tenancy.

The kubelet, an agent installed on each cluster node, manages the containers inside pods. The control plane, which is central to Kubernetes, manages and coordinates the activities of the entire cluster. Its crucial components are the API server, the scheduler, the controller manager, and etcd (a key-value store for cluster data).

Lastly, the kubectl command-line tool makes interacting with a Kubernetes cluster easier. This application offers an extensive interface for Kubernetes operations, enabling users to add, remove, and manage different cluster resources with ease.

Because it is highly adaptable and integrates with a wide variety of tools and services, Kubernetes is a popular choice for managing containerized apps, microservices, and cloud-native workloads. It abstracts many of the challenges of managing containers and offers a uniform platform for automating deployment, scaling, and operations in contemporary cloud-native systems.

Secret

A Secret is an object that holds a small amount of sensitive information, such as a password, a token, or a key. Without Secrets, this information might have to be placed in a Pod specification or a container image. Secrets let confidential data stay out of application code.

Because Secrets can be created independently of the Pods that use them, there is less risk of a Secret (and its data) being exposed while Pods are created, viewed, and edited. Kubernetes, and applications running in the cluster, can also take additional precautions with Secrets, such as avoiding writing sensitive data to nonvolatile storage.

Secrets and ConfigMaps are similar, but Secrets are made especially for storing private information.
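As a concrete illustration of how Secret data is represented: in a Secret manifest, each value under `data` must be base64-encoded, while values under `stringData` may be given as plain text. The Go sketch below, using only the standard library, builds such an object and prints it as JSON. The secret name `db-instance-secret` and the key `password` are hypothetical. Note that base64 is an encoding, not encryption, so the encoded value is not protected by itself.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// secret mirrors only the Secret fields needed for this sketch.
type secret struct {
	APIVersion string            `json:"apiVersion"`
	Kind       string            `json:"kind"`
	Metadata   map[string]string `json:"metadata"`
	Type       string            `json:"type"`
	Data       map[string]string `json:"data"`
}

// newOpaqueSecret base64-encodes every value, as the data field requires.
// base64 is an encoding, not encryption.
func newOpaqueSecret(name string, values map[string]string) secret {
	data := make(map[string]string, len(values))
	for k, v := range values {
		data[k] = base64.StdEncoding.EncodeToString([]byte(v))
	}
	return secret{
		APIVersion: "v1",
		Kind:       "Secret",
		Metadata:   map[string]string{"name": name},
		Type:       "Opaque",
		Data:       data,
	}
}

func main() {
	// Hypothetical secret name and key, for illustration only.
	s := newOpaqueSecret("db-instance-secret", map[string]string{"password": "s3cret"})
	out, err := json.MarshalIndent(s, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```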

Custom Resource Definition

A custom resource is an extension of the Kubernetes API that lets us add our own object types to a project or cluster. These object types are defined in a custom resource definition (CRD). Once the CRD is registered, the API server manages the whole lifecycle of objects of that type.

Kubernetes Operator

A Kubernetes operator is a specialized way to package, deploy, and manage Kubernetes applications. It uses the Kubernetes API and tooling to create, configure, and automate complex application instances on behalf of users. Operators extend Kubernetes controllers with domain-specific knowledge so they can manage the whole application lifecycle. Besides continuously monitoring and maintaining the application, they can scale it, upgrade it, and manage its different parts, including kernel modules.

Operators manage components and applications through custom resources (CRs) defined by custom resource definitions (CRDs). They watch for CRs of the kinds they own and apply logic that embeds best practices to translate high-level user instructions into low-level actions. These custom resources can be managed with kubectl and governed by role-based access control policies. Operators go beyond Kubernetes' built-in automation features, making it possible to automate operations in line with site reliability engineering (SRE) and DevOps practices. They are usually written by people familiar with the business logic of the particular application, and they encode that human operational knowledge in software, reducing manual work.
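The core idea behind an operator is a control loop: compare the desired state declared in a custom resource with the state observed in the cluster, then take the actions that close the gap. The Go sketch below shows only that comparison step. The `DesiredState` and `ObservedState` types and the create/delete action strings are hypothetical. Real operators, for example those built with controller-runtime, implement a `Reconcile` method and carry out such actions through the Kubernetes API.

```go
package main

import "fmt"

// DesiredState is what the user declared in a custom resource (hypothetical fields).
type DesiredState struct {
	Name     string
	Replicas int
}

// ObservedState is what the operator currently sees in the cluster.
type ObservedState struct {
	Replicas int
}

// reconcile compares desired and observed state and returns the actions
// needed to converge them. A real operator would perform these actions
// through the Kubernetes API and be invoked again on the next change.
func reconcile(desired DesiredState, observed ObservedState) []string {
	var actions []string
	switch {
	case observed.Replicas < desired.Replicas:
		for i := observed.Replicas; i < desired.Replicas; i++ {
			actions = append(actions, fmt.Sprintf("create %s-%d", desired.Name, i))
		}
	case observed.Replicas > desired.Replicas:
		for i := observed.Replicas - 1; i >= desired.Replicas; i-- {
			actions = append(actions, fmt.Sprintf("delete %s-%d", desired.Name, i))
		}
	}
	return actions // empty when the cluster already matches the spec
}

func main() {
	fmt.Println(reconcile(DesiredState{Name: "db", Replicas: 3}, ObservedState{Replicas: 1}))
}
```

Because the function only looks at the current difference, running it again after the actions succeed returns nothing, which is what makes this style of loop safe to repeat.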

The Operator Framework is a collection of open-source tools that speeds up operator development. It provides the Operator SDK, which lets developers build operators without mastering the complexities of the Kubernetes API; the Operator Lifecycle Manager (OLM), which handles installing and managing operators; and Operator Metering, for usage reporting in specialized services.

Nutanix Database Service

Database-as-a-Service Across On-Premises and Public Clouds

Simplify database management and accelerate software development across multiple cloud platforms. Streamline essential yet mundane database administrative tasks while maintaining control and adaptability, ensuring effortless, rapid, and secure database provisioning to bolster application development.

- Secure, consistent database operations: Implement automation for database administration tasks, ensuring the consistent application of operational and security best practices across your entire database infrastructure.

- Accelerate software development: Empower developers to effortlessly deploy databases from their development environments with a few simple clicks or commands, facilitating agile software development.

- Free up DBAs to focus on higher value activities: By automating routine administrative tasks, database administrators (DBAs) can allocate more of their time to valuable endeavors, such as enhancing database performance and delivering fresh features to developers.

- Retain control and maintain database standards: Select the appropriate operating systems, database versions, and database extensions to align with the needs of your applications and compliance standards.

- Database Lifecycle Management: Efficiently oversee the complete lifecycle of your databases, covering provisioning, scaling, patching, and cloning for SQL Server, Oracle, PostgreSQL, MySQL, and MongoDB databases.

- Scalable Database Management: Effectively handle databases at scale, spanning from hundreds to thousands, regardless of whether they are located on-premises, in one or multiple public cloud environments, or within colocation facilities. All of this can be managed from a unified API and console.

- Self-Service Database Provisioning: Facilitate self-service database provisioning for both development/testing and production purposes by seamlessly integrating with popular infrastructure management and development tools like Kubernetes and ServiceNow.

- Database Security: Rapidly deploy security updates across your databases and enforce access restrictions through role-based access controls to ensure compliance, whether for some or all of your database instances.

NDB Kubernetes Operator

NDB Operator is a Kubernetes operator whose goal is to make it easier to set up and maintain databases from within Kubernetes clusters. A Kubernetes operator is an application with operational knowledge of another application: once deployed in a Kubernetes cluster, it watches the resources it is responsible for and modifies the application it manages accordingly. With the NDB Operator, databases can be deployed, managed, and modified through NDB with minimal human intervention.

Using their K8s cluster, developers can now provision PostgreSQL, MySQL, and MongoDB databases directly, saving days or even weeks of work. They can take advantage of NDB's complete database lifecycle management while running the open-source NDB Operator on their preferred K8s platform.

Problem Statement

The problem statement requires us to extend the NDB Operator's capabilities to support Postgres HA (High Availability). NDB already supports Postgres High Availability databases, but the NDB Operator cannot manage them. Our task is to identify what additions the project needs to support Postgres HA and to implement them. We will also perform end-to-end testing of the provisioning and deprovisioning processes to ensure they work smoothly.

Postgres HA involves implementation of measures that ensure that a PostgreSQL database system remains operational and accessible even in the face of hardware failures, software issues, or other types of disruptions. This includes measures like replication, failover, load balancing and more.
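To make the failover idea concrete, here is a minimal, generic Go sketch of primary selection in a replicated cluster: if the primary is unhealthy, a healthy secondary is promoted. It illustrates the concept only and does not describe how NDB or any particular Postgres HA tool performs failover. The `Node` type and its fields are assumptions.

```go
package main

import (
	"errors"
	"fmt"
)

// Node is a simplified database node in an HA cluster (hypothetical type).
type Node struct {
	Name    string
	Primary bool
	Healthy bool
}

// failover keeps the current primary if it is healthy; otherwise it promotes
// the first healthy secondary. It returns the name of the resulting primary.
func failover(nodes []Node) (string, error) {
	for i := range nodes {
		if nodes[i].Primary && nodes[i].Healthy {
			return nodes[i].Name, nil
		}
	}
	for i := range nodes {
		if !nodes[i].Primary && nodes[i].Healthy {
			// Demote the failed primary, then promote this secondary.
			for j := range nodes {
				nodes[j].Primary = false
			}
			nodes[i].Primary = true
			return nodes[i].Name, nil
		}
	}
	return "", errors.New("no healthy node available for promotion")
}

func main() {
	nodes := []Node{
		{Name: "pg-0", Primary: true, Healthy: false},
		{Name: "pg-1", Healthy: true},
		{Name: "pg-2", Healthy: true},
	}
	primary, err := failover(nodes)
	fmt.Println(primary, err)
}
```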

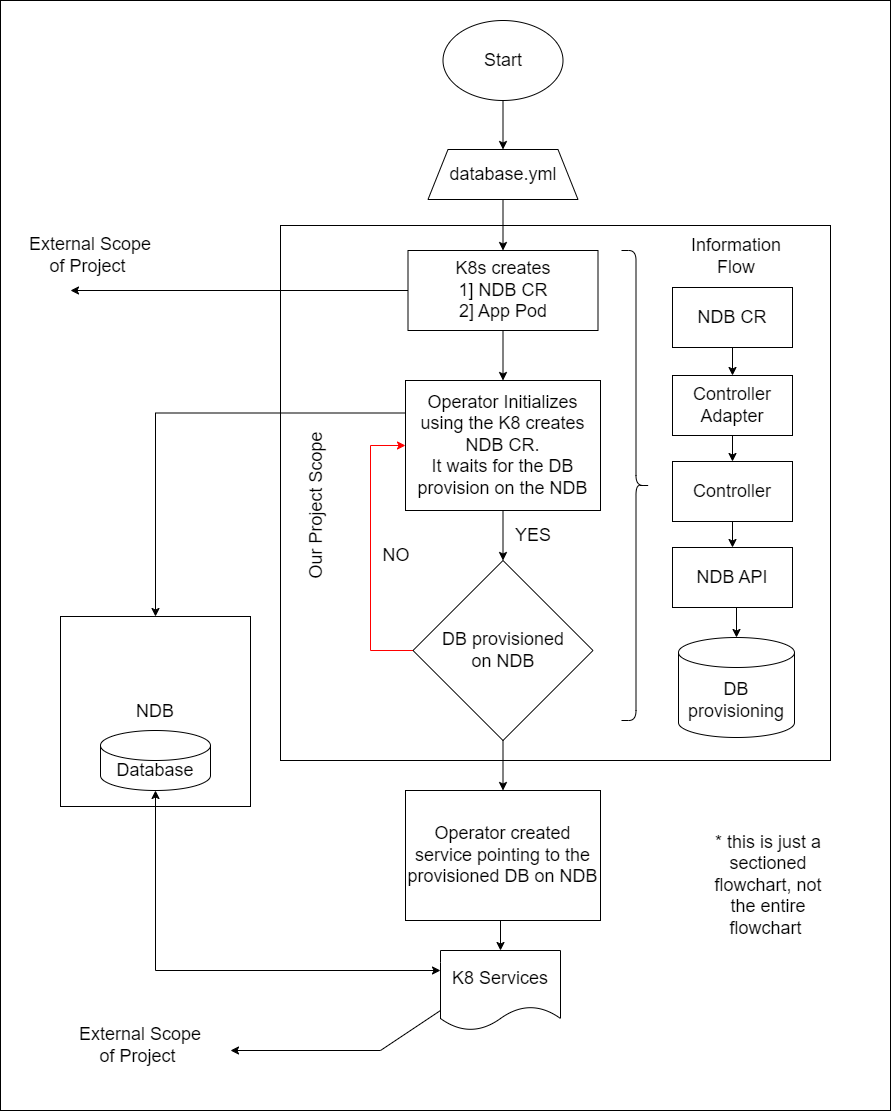

Workflow

Design Principles and Design Patterns

Single Responsibility Principle (SRP): Each method in the Database struct focuses on a specific aspect of functionality, adhering to the SRP. For example, GetInstanceIsHighAvailability is responsible for retrieving the high availability status.

Don't Repeat Yourself (DRY): Abstractions built from interfaces and methods provide a consistent way to access high availability information while accommodating the differences between primary and clone instances. Constants are used instead of variables where appropriate.

Open/Closed Principle and Interface Segregation Principle are applied in the code by providing an interface (DatabaseInterface) that can be extended for different types of databases, and by implementing that interface in the Database struct. Methods like GetInstanceIsHighAvailability() are specific to the Database type, allowing for extension without modification of the existing code.
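The Open/Closed and Interface Segregation ideas can be sketched in Go as follows. Only `DatabaseInterface`, `Database`, and `GetInstanceIsHighAvailability` are named in the text. The `GetInstanceType` method, the `MockDatabase` type, and the `describe` helper are hypothetical. Code that depends only on the small interface accepts any new implementation, such as a test mock, without being modified.

```go
package main

import "fmt"

// DatabaseInterface is a trimmed-down, hypothetical version of the interface
// the controller consumes. Keeping it small follows interface segregation.
type DatabaseInterface interface {
	GetInstanceType() string
	GetInstanceIsHighAvailability() bool
}

// Database is the concrete implementation backed by the custom resource.
type Database struct {
	instanceType       string
	isHighAvailability bool
}

func (d Database) GetInstanceType() string { return d.instanceType }

func (d Database) GetInstanceIsHighAvailability() bool { return d.isHighAvailability }

// MockDatabase satisfies the same interface, for example in unit tests.
// Adding it required no change to describe (open for extension, closed for modification).
type MockDatabase struct{ HA bool }

func (MockDatabase) GetInstanceType() string { return "postgres" }

func (m MockDatabase) GetInstanceIsHighAvailability() bool { return m.HA }

// describe depends only on the interface, never on a concrete type.
func describe(db DatabaseInterface) string {
	if db.GetInstanceIsHighAvailability() {
		return db.GetInstanceType() + " (HA)"
	}
	return db.GetInstanceType() + " (single instance)"
}

func main() {
	fmt.Println(describe(Database{instanceType: "postgres", isHighAvailability: true}))
	fmt.Println(describe(MockDatabase{HA: false}))
}
```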

Builder: Rather than writing a separate constructor for every object that is produced, objects are instantiated step by step using method chaining.
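A minimal Go sketch of this builder style, assuming a hypothetical `ProvisioningRequest` type: each `With` method sets one field and returns the builder, so a request is assembled in a single chained expression. The key `failover_mode` and its value are illustrative, not NDB's actual schema.

```go
package main

import "fmt"

// ProvisioningRequest is a hypothetical, simplified request object.
type ProvisioningRequest struct {
	DatabaseType string
	Name         string
	ActionArgs   map[string]string
}

// RequestBuilder assembles a ProvisioningRequest one field at a time.
type RequestBuilder struct {
	req ProvisioningRequest
}

// NewRequestBuilder starts a builder with an empty argument map.
func NewRequestBuilder() *RequestBuilder {
	return &RequestBuilder{req: ProvisioningRequest{ActionArgs: map[string]string{}}}
}

// Each With method sets one field and returns the builder so calls can be chained.
func (b *RequestBuilder) WithType(t string) *RequestBuilder {
	b.req.DatabaseType = t
	return b
}

func (b *RequestBuilder) WithName(n string) *RequestBuilder {
	b.req.Name = n
	return b
}

func (b *RequestBuilder) WithActionArg(key, value string) *RequestBuilder {
	b.req.ActionArgs[key] = value
	return b
}

// Build returns the finished request with its own copy of the argument map.
func (b *RequestBuilder) Build() ProvisioningRequest {
	out := b.req
	out.ActionArgs = make(map[string]string, len(b.req.ActionArgs))
	for k, v := range b.req.ActionArgs {
		out.ActionArgs[k] = v
	}
	return out
}

func main() {
	req := NewRequestBuilder().
		WithType("postgres").
		WithName("demo-db").
		WithActionArg("failover_mode", "Automatic").
		Build()
	fmt.Printf("%+v\n", req)
}
```

`Build` copies the argument map so that later calls on the same builder cannot alter a request that has already been built.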

Adapter Pattern: controller_adapters acts as a bridge between v1alpha1 and the controllers, allowing them to communicate despite their incompatible types.

Implementation

To understand what changes are needed, we first have to determine what data NDB needs to provision an HA database. We obtained this from the API equivalent in Nutanix Test Drive, which contains the configuration of the database to be provisioned on NDB as a JSON object of key-value parameters.
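Because an API equivalent is a JSON object, comparing two configurations can also be scripted. The Go sketch below decodes two JSON objects and lists the top-level keys present in the second but not the first. The `newKeys` helper and the sample payloads are hypothetical and heavily abbreviated.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// newKeys decodes two JSON objects and returns the top-level keys that appear
// in b but not in a, sorted for stable output.
func newKeys(a, b []byte) ([]string, error) {
	var ma, mb map[string]any
	if err := json.Unmarshal(a, &ma); err != nil {
		return nil, err
	}
	if err := json.Unmarshal(b, &mb); err != nil {
		return nil, err
	}
	var added []string
	for k := range mb {
		if _, ok := ma[k]; !ok {
			added = append(added, k)
		}
	}
	sort.Strings(added)
	return added, nil
}

func main() {
	// Abbreviated, hypothetical payloads; real API equivalents carry many more fields.
	single := []byte(`{"databaseType": "postgres", "actionArguments": []}`)
	ha := []byte(`{"databaseType": "postgres", "actionArguments": [], "nodes": []}`)
	added, err := newKeys(single, ha)
	if err != nil {
		panic(err)
	}
	fmt.Println(added) // [nodes]
}
```

This compares top-level keys only; extra entries inside `actionArguments` would need a recursive comparison.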

To identify the new parameters, the API equivalents for a normal Postgres instance and a Postgres HA instance were compared. The differences noted are as follows:

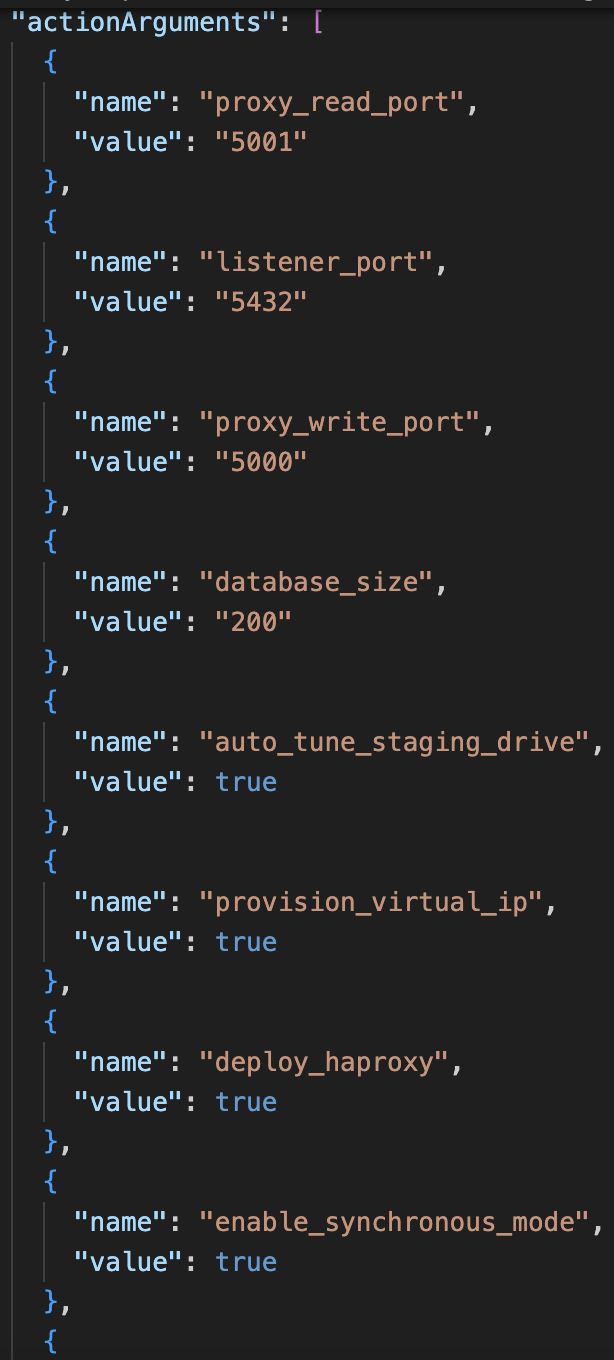

The actionArguments key-value pair contains more parameters for Postgres HA. Properties of the HA instance, such as failover modes and the backup policy, are defined here.

The HA API equivalent also contains a new key-value pair for nodes. It specifies which database node holds the primary role, making it the target for all read-write operations; the other nodes are secondaries, which replicate data from the primary. Failover strategies are also recorded here. With the new parameters identified, the next step is to determine what changes the operator needs. First, users need a way to specify whether a database is a high availability database.

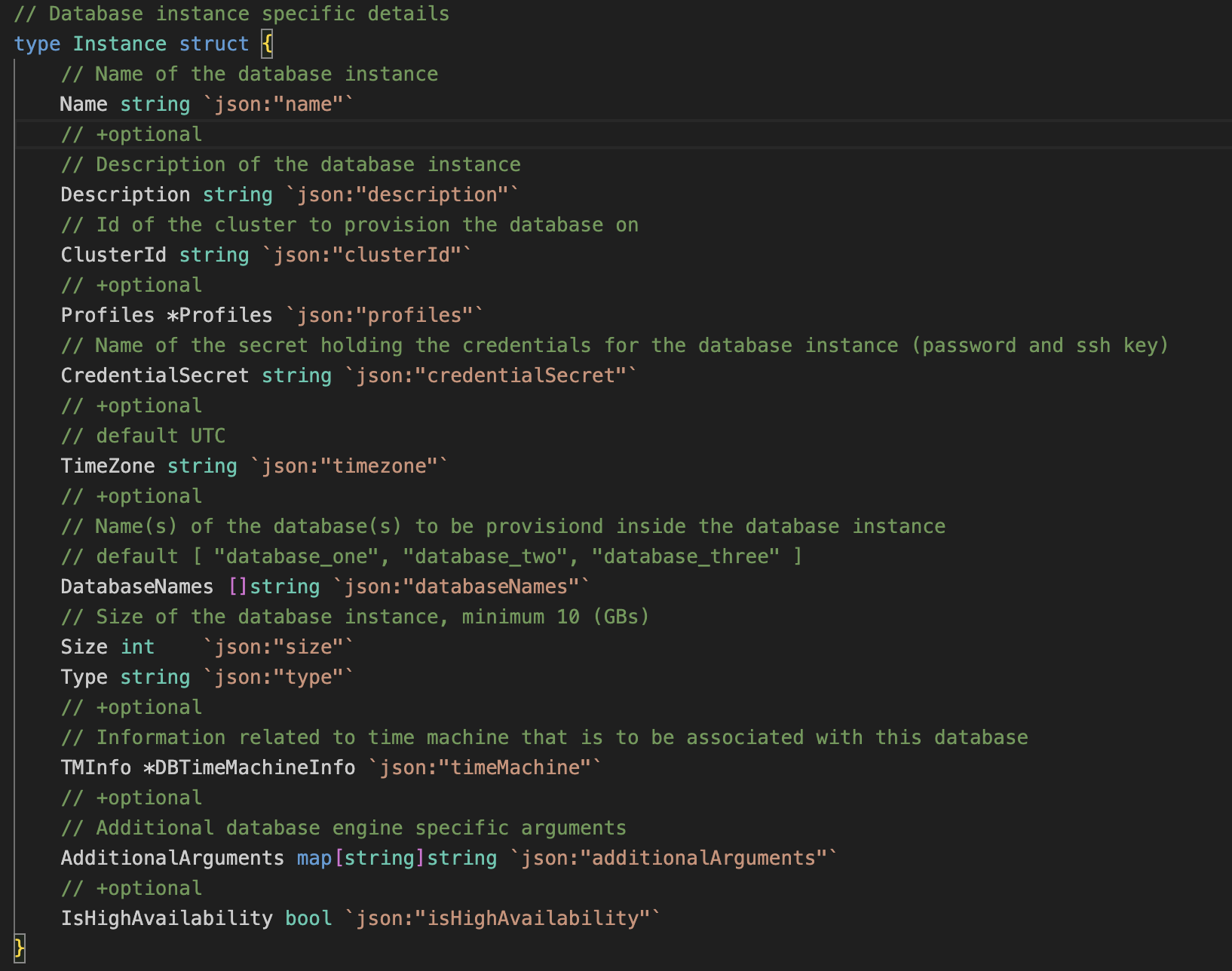

For this purpose, a boolean called isHighAvailability is added to the Custom Resource Definition and to the Instance struct in ndb-operator/api/v1alpha1/database_types.go, so a value supplied by the user in the YAML file is read by the operator. This property then needs to be propagated through the application flow, which brings us to the ndb-operator/controller_adapters directory.
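The field addition can be sketched as follows. Only the `isHighAvailability` name comes from the text; the other fields and the `parseInstance` helper are hypothetical. Kubernetes handles manifests as JSON internally, so a YAML value such as `isHighAvailability: true` reaches the operator's Go types through their `json` tags; the sketch decodes JSON directly to stay within the standard library.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Instance is a trimmed, hypothetical stand-in for the Instance struct in
// api/v1alpha1/database_types.go. Only isHighAvailability comes from the
// text; the other fields are illustrative.
type Instance struct {
	DatabaseInstanceName string `json:"databaseInstanceName"`
	Type                 string `json:"type"`
	// IsHighAvailability decodes to false when the field is absent, so
	// existing single-instance manifests keep their behavior.
	IsHighAvailability bool `json:"isHighAvailability"`
}

// parseInstance decodes an instance spec from JSON.
func parseInstance(spec []byte) (Instance, error) {
	var inst Instance
	err := json.Unmarshal(spec, &inst)
	return inst, err
}

func main() {
	inst, err := parseInstance([]byte(`{"databaseInstanceName": "demo", "type": "postgres", "isHighAvailability": true}`))
	if err != nil {
		panic(err)
	}
	fmt.Println(inst.IsHighAvailability) // true
}
```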

As mentioned earlier, controller_adapters is the bridge between v1alpha1 and the controllers. The database.go file in the controller_adapters directory contains getter methods for all the properties specified in the custom resource, and a getter for isHighAvailability (GetInstanceIsHighAvailability) has been added. The controller uses these methods.
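A minimal Go sketch of this adapter idea: a wrapper type embeds the API type and exposes flat getters, so the controller calls `GetInstanceIsHighAvailability()` instead of navigating the nested spec. The `v1alpha1Database` type and its field layout are hypothetical simplifications of the real API type.

```go
package main

import "fmt"

// v1alpha1Database stands in for the API type from api/v1alpha1
// (hypothetical, heavily simplified).
type v1alpha1Database struct {
	Spec struct {
		Instance struct {
			Type               string
			IsHighAvailability bool
		}
	}
}

// Database is the adapter: it wraps the API type and exposes the getters the
// controller needs, so the controller never touches the nested spec directly.
type Database struct {
	v1alpha1Database
}

func (d *Database) GetInstanceType() string {
	return d.Spec.Instance.Type
}

func (d *Database) GetInstanceIsHighAvailability() bool {
	return d.Spec.Instance.IsHighAvailability
}

func main() {
	var raw v1alpha1Database
	raw.Spec.Instance.Type = "postgres"
	raw.Spec.Instance.IsHighAvailability = true
	db := &Database{v1alpha1Database: raw}
	fmt.Println(db.GetInstanceType(), db.GetInstanceIsHighAvailability())
}
```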

The controller is the core of the operator. It contains the logic for provisioning, deprovisioning, connectivity checks, and more. The Reconcile method in ndb-operator/controllers/database_controller.go runs to check the status of the database. To perform any of its functions, the controller needs to communicate with NDB, which brings in the ndb-operator/ndb_api directory containing the methods for performing all actions on NDB.

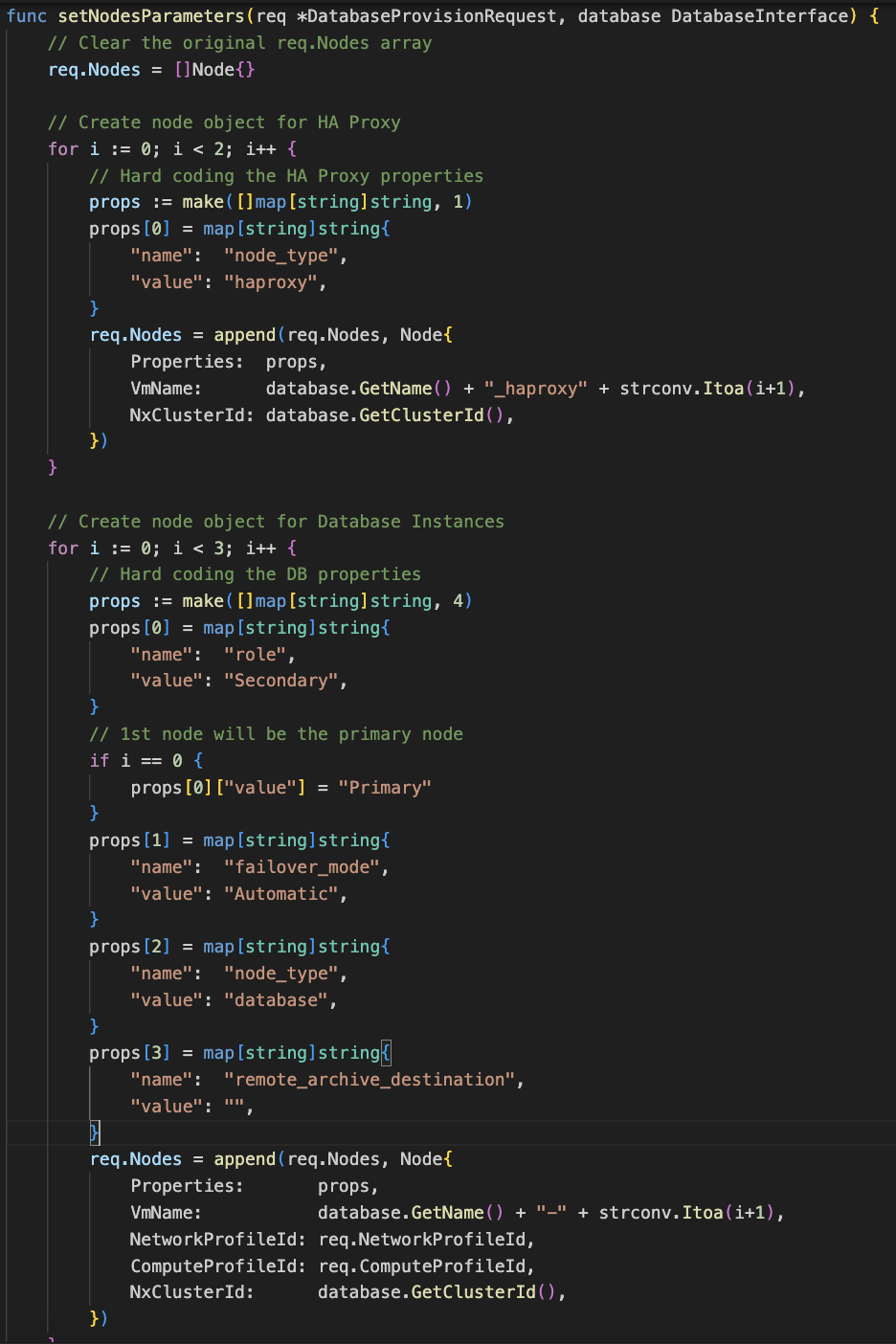

The controller uses the GenerateProvisioningRequest method in ndb-operator/ndb_api/db_helpers.go to generate the provisioning request that is passed to NDB. The same file contains an appendRequest method for each DB type that adds actionArguments to the provisioning request. A new request appender has been created for Postgres HA databases: it adds the action arguments specific to Postgres HA databases as well as the properties of the nodes that belong to the HA cluster.
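The selection logic can be sketched in Go as follows. `GetRequestAppender`, `PostgresRequestAppender`, and `PostgresHARequestAppender` are names used in this document, but the signatures, the `ProvisionRequest` type, and the argument keys (`listener_port`, `failover_mode`, `backup_policy`) are hypothetical and do not reflect NDB's actual request schema. The HA appender reuses the single-instance appender and then adds HA-specific arguments, which keeps the common arguments in one place.

```go
package main

import (
	"errors"
	"fmt"
)

// ProvisionRequest is a hypothetical, heavily simplified provisioning request.
type ProvisionRequest struct {
	DatabaseType    string
	ActionArguments map[string]string
}

// RequestAppender adds database-specific action arguments to a request.
type RequestAppender interface {
	AppendProvisioningRequest(req *ProvisionRequest)
}

type PostgresRequestAppender struct{}

type PostgresHARequestAppender struct{}

// Common Postgres arguments (illustrative key).
func (PostgresRequestAppender) AppendProvisioningRequest(req *ProvisionRequest) {
	req.ActionArguments["listener_port"] = "5432"
}

// The HA appender adds HA-specific arguments on top of the common ones.
// Keys and values are illustrative, not NDB's actual schema.
func (PostgresHARequestAppender) AppendProvisioningRequest(req *ProvisionRequest) {
	PostgresRequestAppender{}.AppendProvisioningRequest(req)
	req.ActionArguments["failover_mode"] = "Automatic"
	req.ActionArguments["backup_policy"] = "primary_only"
}

// GetRequestAppender chooses the appender from the database type and the
// isHighAvailability flag.
func GetRequestAppender(dbType string, isHighAvailability bool) (RequestAppender, error) {
	switch dbType {
	case "postgres":
		if isHighAvailability {
			return PostgresHARequestAppender{}, nil
		}
		return PostgresRequestAppender{}, nil
	default:
		return nil, errors.New("unsupported database type: " + dbType)
	}
}

func main() {
	appender, err := GetRequestAppender("postgres", true)
	if err != nil {
		panic(err)
	}
	req := &ProvisionRequest{DatabaseType: "postgres", ActionArguments: map[string]string{}}
	appender.AppendProvisioningRequest(req)
	fmt.Println(len(req.ActionArguments)) // 3
}
```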

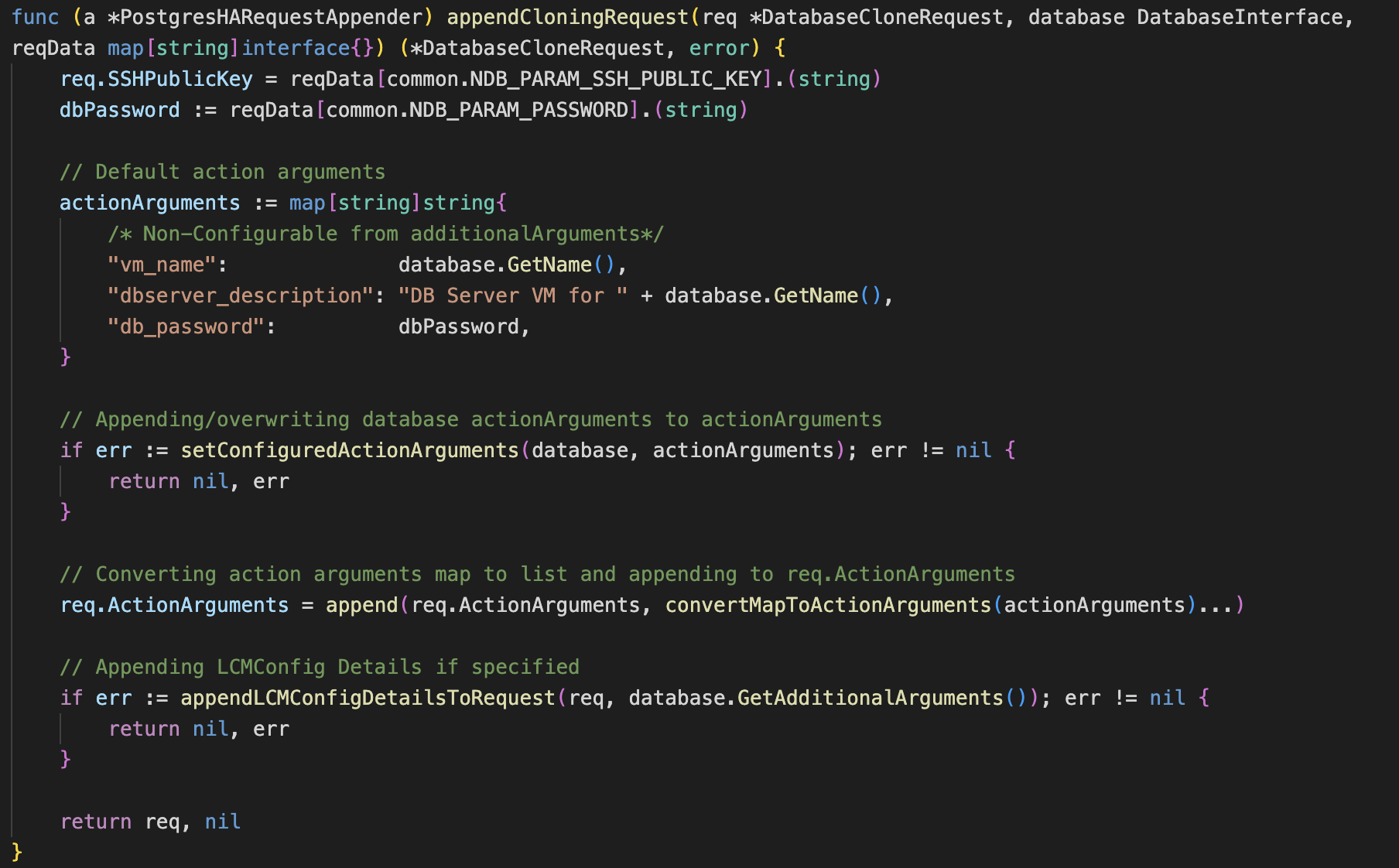

The screenshot above shows the new appendProvisioningRequest method created in the db_helpers.go file. The actionArguments hashmap holds the properties of the HA instance that must be supplied to NDB to provision the database. Next, the nodes of the high availability database need to be configured.

As its name suggests, SetNodesParameters sets the parameters of the nodes that must be created to achieve high availability. HAProxy nodes are configured first, followed by the Postgres nodes. The generated request is returned to the controller, which passes it to NDB for database provisioning.
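The ordering performed by SetNodesParameters can be sketched as below. The `NodeSpec` type, the VM naming scheme, and the choice of the first Postgres node as primary are assumptions made for illustration; the actual node properties sent to NDB are not reproduced here.

```go
package main

import "fmt"

// NodeSpec is a hypothetical, simplified description of one VM in the HA cluster.
type NodeSpec struct {
	VMName string
	Role   string // "HAProxy", "Primary" or "Secondary" (illustrative values)
}

// setNodesParameters builds the node list in the order described in the text:
// HAProxy nodes first, then the Postgres nodes. Treating the first Postgres
// node as primary and the naming scheme are assumptions.
func setNodesParameters(clusterName string, haproxyCount, postgresCount int) []NodeSpec {
	nodes := make([]NodeSpec, 0, haproxyCount+postgresCount)
	for i := 1; i <= haproxyCount; i++ {
		nodes = append(nodes, NodeSpec{VMName: fmt.Sprintf("%s_haproxy%d", clusterName, i), Role: "HAProxy"})
	}
	for i := 1; i <= postgresCount; i++ {
		role := "Secondary"
		if i == 1 {
			role = "Primary"
		}
		nodes = append(nodes, NodeSpec{VMName: fmt.Sprintf("%s-%d", clusterName, i), Role: role})
	}
	return nodes
}

func main() {
	for _, n := range setNodesParameters("demo", 1, 3) {
		fmt.Println(n.VMName, n.Role)
	}
}
```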

Similarly, an appendCloningRequest method has been added to create requests that clone an existing database by copying all of its properties.

Tests

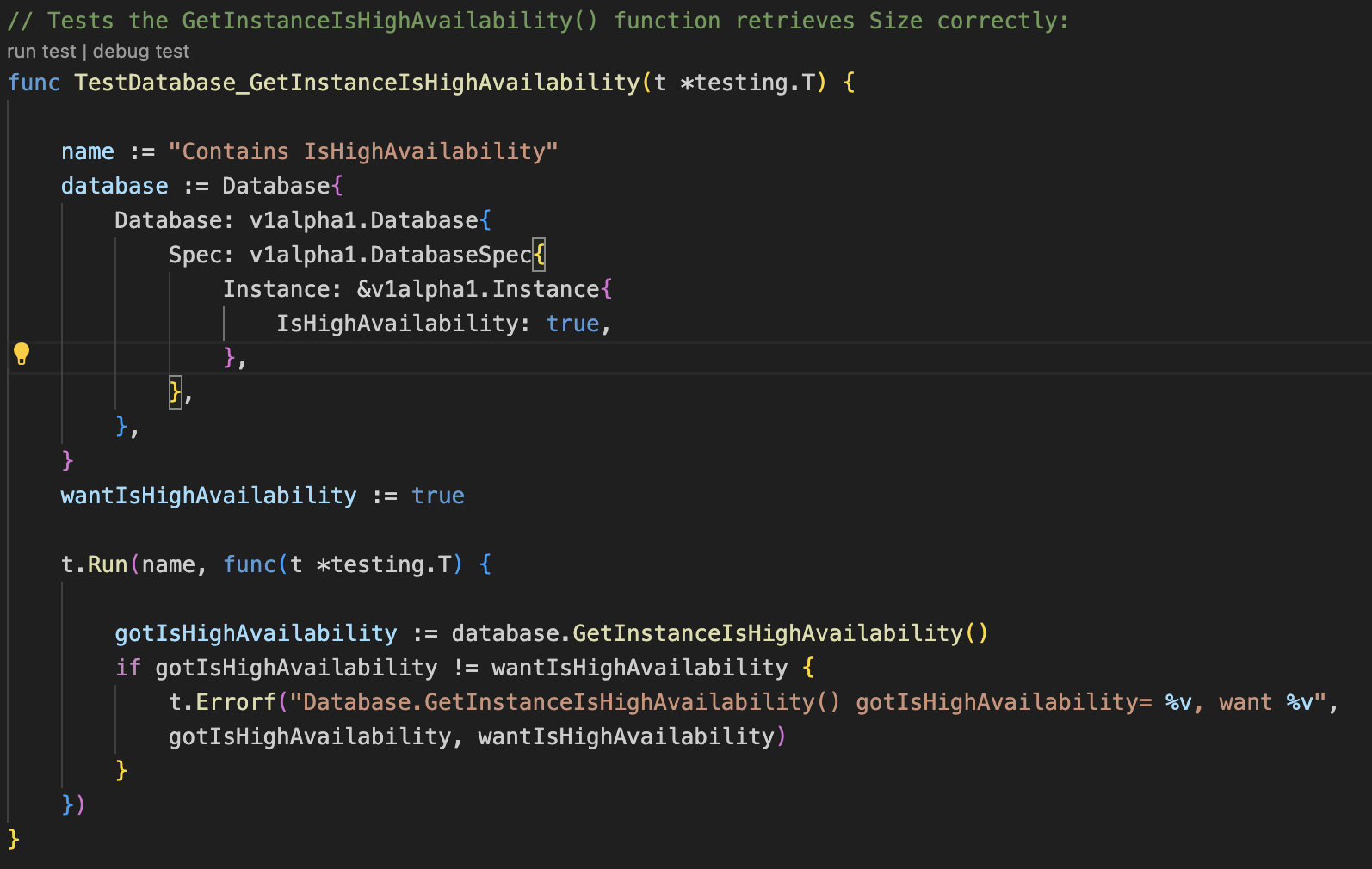

To test this functionality, test scripts have been added to the ndb-operator/ndb_api/db_helpers_test.go file. First, we need to verify that the getter for the isHighAvailability boolean works correctly. For this, the test function TestDatabase_GetInstanceIsHighAvailibility checks whether the GetInstanceIsHighAvailability method of the Database struct returns the expected value (true in this case). If it does not, t.Errorf reports the failure.

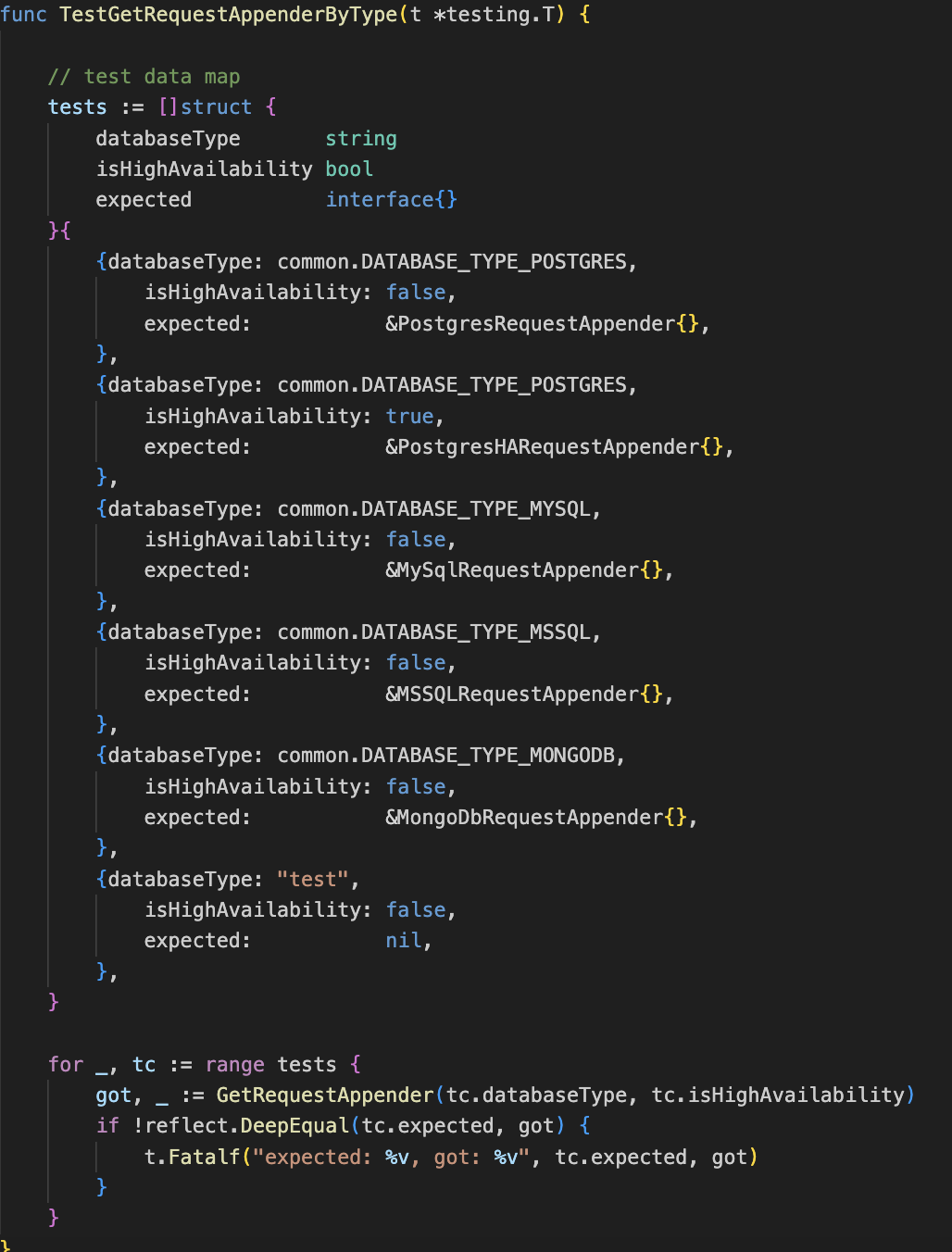

Next, we need to make sure that the correct request appender is returned for the database. To accomplish this, an extra block has been added to the TestGetRequestAppendersByType method.

Postgres HA databases are distinguished from single-instance Postgres databases by the isHighAvailability boolean: if it is true, PostgresHARequestAppender should be returned; otherwise, PostgresRequestAppender should be returned. The TestGetRequestAppenderByType method loops through test cases to verify that the correct appender is returned. Each test case specifies a database type, an isHighAvailability value, and the request appender expected for that combination. These inputs are passed to the GetRequestAppender method, and the returned request appender is compared with the expected one.

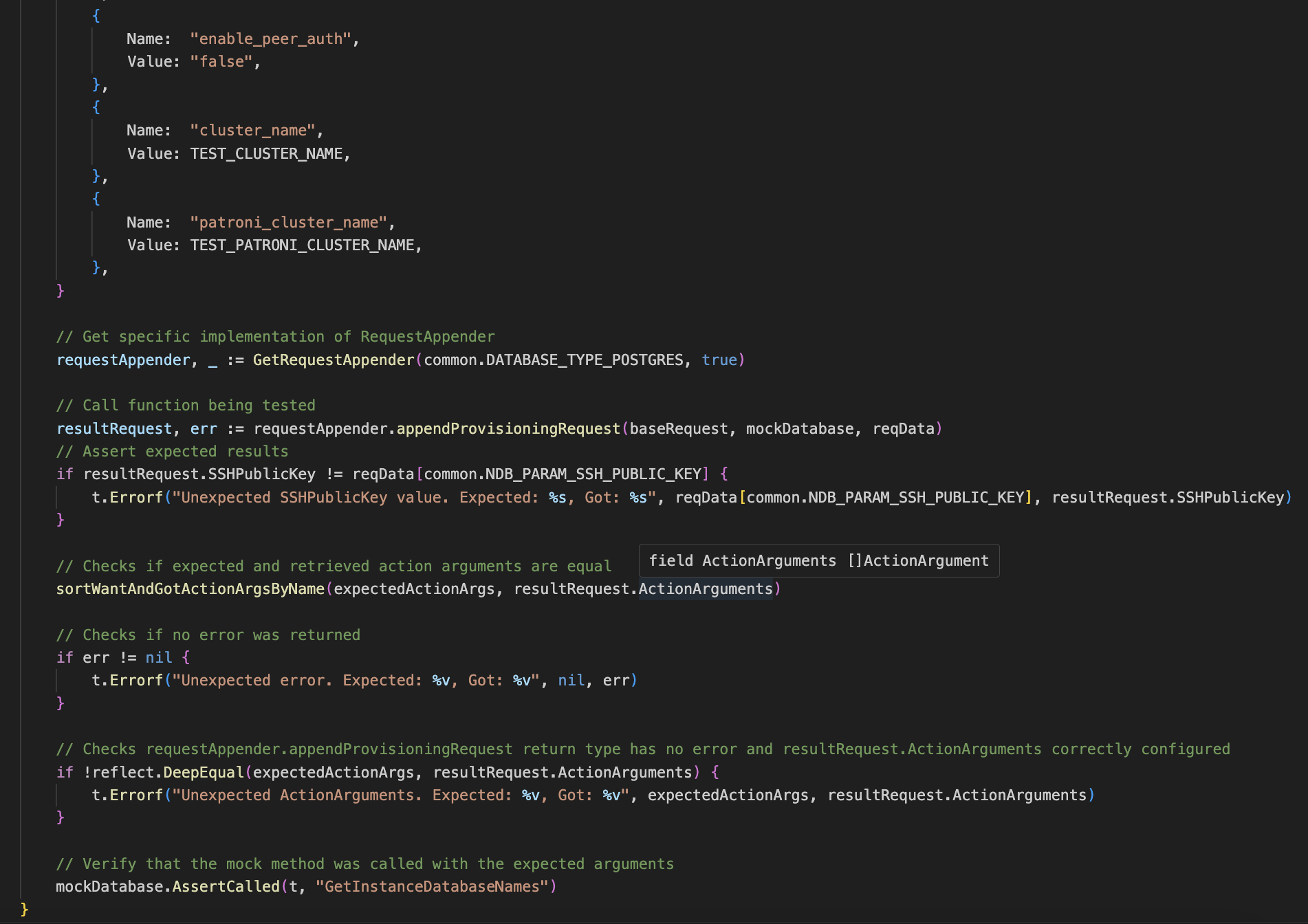

Furthermore, the newly added appendProvisioningRequest for Postgres HA needs to be tested; more precisely, the generated request must contain all the parameters required by NDB. For this purpose, TestPostgresHAProvisionRequestAppender methods have been added to the test script.

The TestPostgresHAProvisionRequestAppender method uses a mock Database object to generate a provisioning request. The test first creates a base request and a mock database object that mimics the behavior of the database. It then specifies the action arguments, obtains the request appender, and calls it. Finally, assertions are applied to the resulting request: the database type is checked, and SoftwareProfileId, SoftwareProfileVersionId, ComputeProfileId, NetworkProfileId, and DbParameterProfileId are asserted to be non-empty. If any assertion fails, t.Errorf reports which part of the request is incorrect.

Tests have also been created for cases where custom arguments are specified and for cases where action arguments or other required data are missing. In these negative cases, the test creates the mock database and base request but omits parameters such as the DB password. It then creates an NDB client instance and calls GenerateProvisioningRequest; if GenerateProvisioningRequest does not return an error, the test fails with an error message.
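The negative tests rely on request generation refusing incomplete input. The Go sketch below shows such a check, assuming a hypothetical `provisioningInput` type and `validate` function; the profile ID field names follow the ones asserted in the tests described above. Reporting every missing field at once makes a failing test easier to diagnose.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// provisioningInput is a hypothetical subset of what a provisioning request needs.
type provisioningInput struct {
	DatabaseType             string
	DBPassword               string
	SoftwareProfileId        string
	SoftwareProfileVersionId string
	ComputeProfileId         string
	NetworkProfileId         string
	DbParameterProfileId     string
}

// validate returns an error naming every missing field, so a negative test can
// assert that request generation fails instead of sending an incomplete request.
func validate(in provisioningInput) error {
	fields := []struct{ name, value string }{
		{"DatabaseType", in.DatabaseType},
		{"DBPassword", in.DBPassword},
		{"SoftwareProfileId", in.SoftwareProfileId},
		{"SoftwareProfileVersionId", in.SoftwareProfileVersionId},
		{"ComputeProfileId", in.ComputeProfileId},
		{"NetworkProfileId", in.NetworkProfileId},
		{"DbParameterProfileId", in.DbParameterProfileId},
	}
	var missing []string
	for _, f := range fields {
		if f.value == "" {
			missing = append(missing, f.name)
		}
	}
	if len(missing) > 0 {
		return errors.New("missing required fields: " + strings.Join(missing, ", "))
	}
	return nil
}

func main() {
	complete := provisioningInput{
		DatabaseType: "postgres", DBPassword: "example-password",
		SoftwareProfileId: "sp", SoftwareProfileVersionId: "spv",
		ComputeProfileId: "cp", NetworkProfileId: "np", DbParameterProfileId: "dpp",
	}
	noPassword := complete
	noPassword.DBPassword = ""
	fmt.Println(validate(complete))   // <nil>
	fmt.Println(validate(noPassword)) // missing required fields: DBPassword
}
```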

Video Demo

References

Relevant Links

Link to GitHub repository: https://github.com/rithvik2607/ndb-operator

Team

Mentor

Nandini Mundra

Student Team

Sai Rithvik Ayithapu (sayitha@ncsu.edu)

Sreehith Yachamaneni (syacham@ncsu.edu)

Rushil Patel (rdpate24@ncsu.edu)