CSC/ECE 517 Fall 2021 - E2150. Integrate suggestion detection algorithm

Problem Definition

Peer-review systems like Expertiza rely heavily on students' input to assess one another's work. At the same time, students learn from the reviews they receive and use them to improve their own work. For this to work well, every reviewer should give high-quality, specific reviews instead of generic ones. Currently, Expertiza has a few classifiers that detect useful features of review comments, such as whether a comment contains a suggestion. The suggestion-detection algorithm is implemented as a web service, and other detection algorithms, such as problem detection and sentiment analysis, exist as newer web services. This project has two goals: make the UI more intuitive by letting users view the feedback on specific review comments, and refactor the code to remove redundancy in keeping with the DRY principle.

Previous Implementation

Overview

The previous implementation added the following features:

- Set up a config file 'review_metric.yml' where the instructor can select which review metrics to display for the current assignment.

- Made the API calls (sentiment, problem, suggestion) and rendered a table below the review, displaying the feedback on each review comment.

- Displayed the total time taken by the API calls.
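The last item above mentions that the total time of the API calls was displayed. The sketch below shows one way such a measurement could work, assuming each analysis call is wrapped in a function that returns a Promise. `timeAllRequests` and `fakeRequest` are hypothetical names, not code from the project.

```javascript
// Hypothetical helper: runs every request function in parallel and returns
// their results together with the total elapsed wall-clock time in ms.
async function timeAllRequests(requestFns) {
  const start = Date.now();
  // Promise.all starts all requests before waiting on any of them, so the
  // total time is roughly that of the slowest request, not the sum.
  const results = await Promise.all(requestFns.map((fn) => fn()));
  return { results, elapsedMs: Date.now() - start };
}

// Stand-in for a real API call: resolves with `value` after `delayMs` ms.
function fakeRequest(value, delayMs) {
  return () => new Promise((resolve) => setTimeout(() => resolve(value), delayMs));
}

timeAllRequests([fakeRequest("sentiment", 30), fakeRequest("suggestion", 50)])
  .then(({ results, elapsedMs }) => {
    console.log(results, `total: ${elapsedMs} ms`);
  });
```

If the calls were awaited one after another instead, the measured total would be close to the sum of the individual call times.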

UI Screenshots

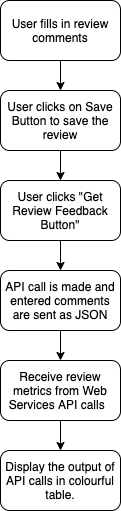

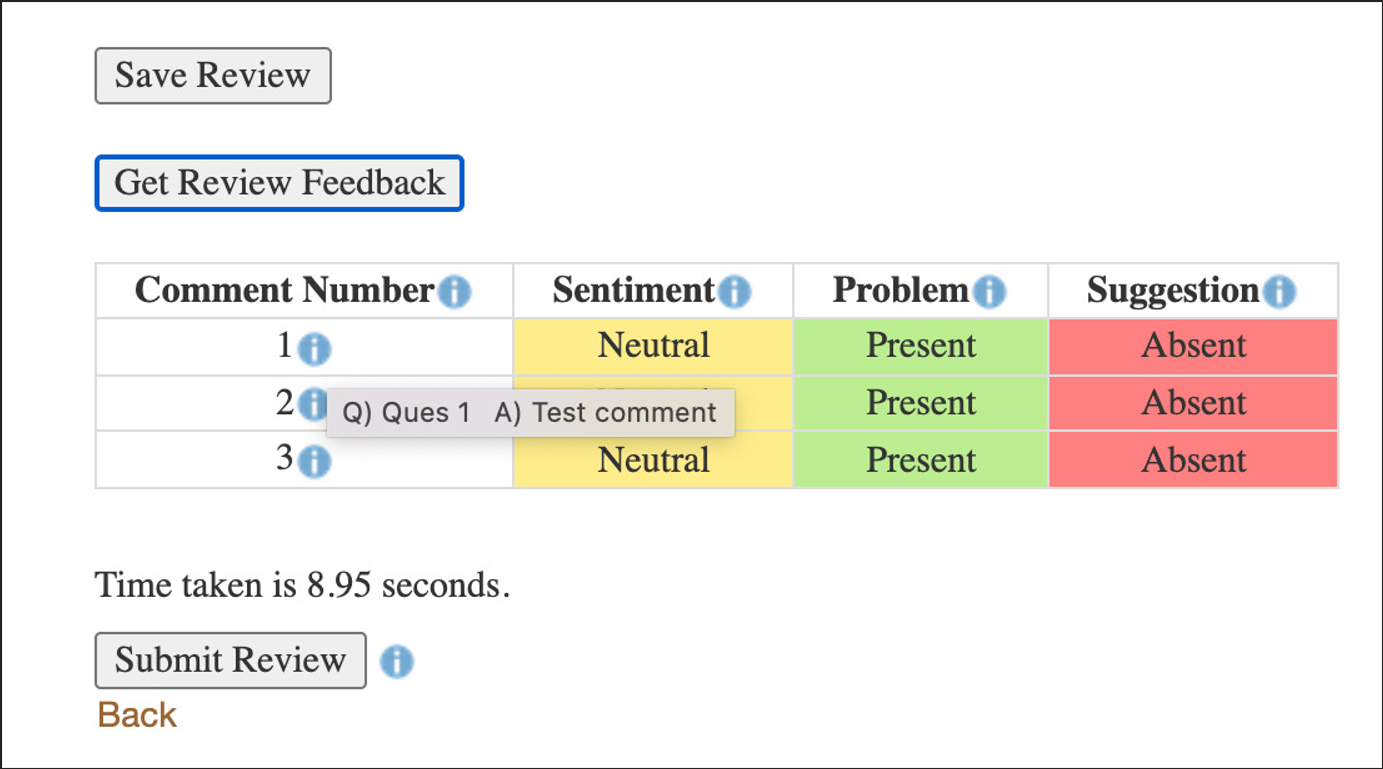

The following image shows how a reviewer interacts with the system to get feedback on the review comments.

Control Flow

Issues with Previous Work

With the previous implementation of this project, students can write comments and request feedback on them. However, several issues with that implementation need to be addressed.

- The criteria are numbered in the view, but those numbers do not correspond to anything on the rubric form. If the rubric is long, it is difficult for the reviewer to tell which comment a given piece of automated feedback refers to.

- There are too many hardcoded values present in the previously implemented code. We plan to move these hardcoded values to a configuration file and access them when needed.

- The code contains many repetitive blocks. For example, in getReviewFeedback() in _response_analysis.html.erb, the API call for each type of tag (sentiment, suggestion, etc.) is repeated, even though only the API link differs.

- The previous implementation reads the review comment from the text area field in the view, but that value is not updated dynamically as the user types. The user has to save the form, go back to the edit view, and then request feedback.

Proposed Solution

- We need to improve the UI so that, even when there are many rubric items, users can easily check the feedback on the comment given for each item. We propose the following:

- Hovering over a comment in the feedback table shows the rubric question it belongs to and the reviewer's comment on it, so the feedback is easy to trace back to its source.

- When the Get Review Feedback button is clicked, we loop through the dynamically created review question form and store a mapping from each question to the reviewer's comment; this mapping is what the hover popup displays.


- Another issue with the current implementation is that the code for making the different API calls (sentiment, suggestion, etc.) is quite repetitive. We propose storing each API link in the config file and referring to that variable in getReviewFeedback().

- We also plan to remove the global variable response_general, which stores the response of the API calls, and refactor the makeRequest function to return the response directly so that it can be used in various places. This will remove implicit coupling in the code and make it easier to extend.

- The previous implementation hardcodes configuration information such as the help-button text. We propose moving this information into config files, where it can be easily modified, and removing it from the code.

Implementation

UI Changes

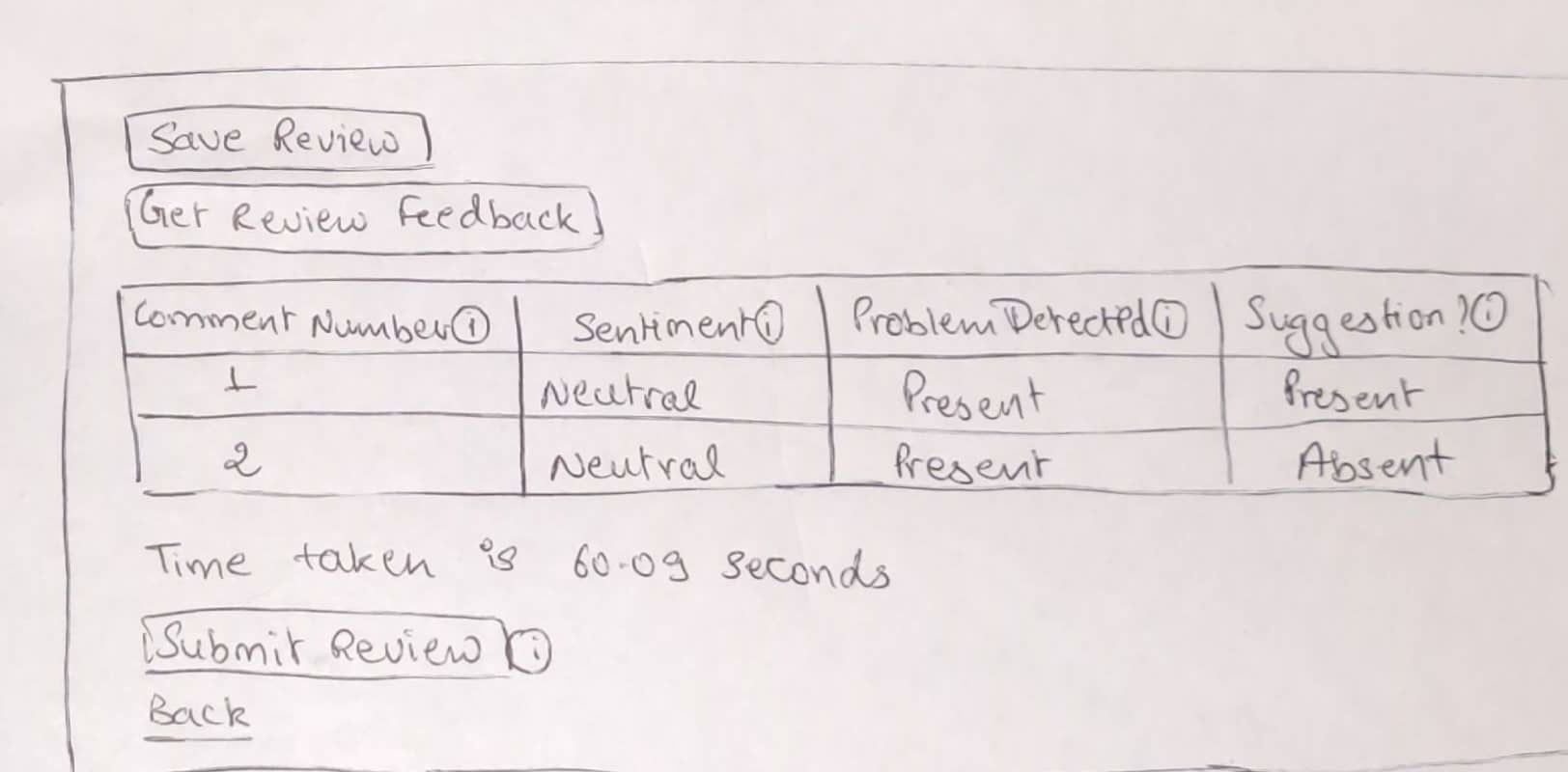

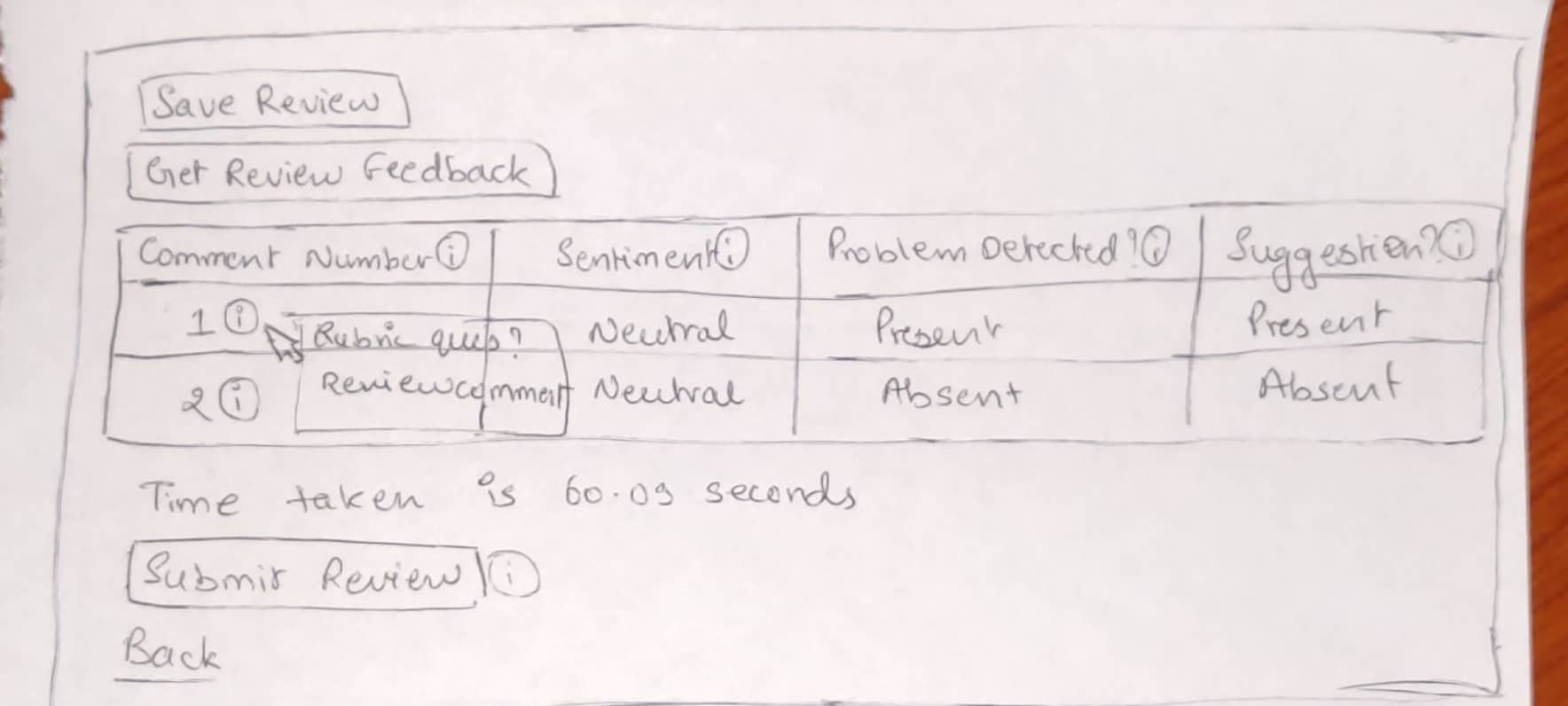

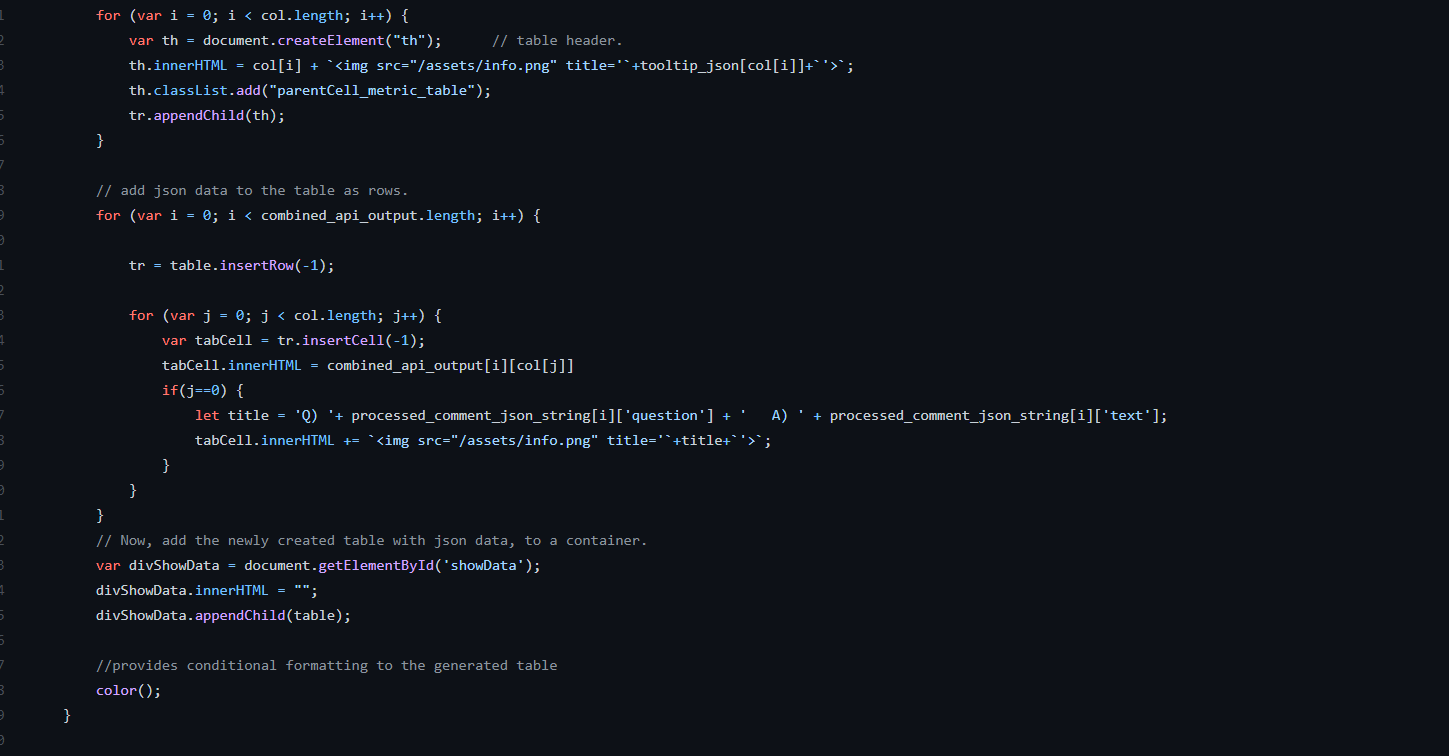

We refactored the UI of the generated table that shows the problem, suggestion, and sentiment of each review comment by adding a tooltip that displays the question and its corresponding review comment in the table itself.

UI screenshots

JavaScript implementation

- Description - We added a tooltip to every comment number. Hovering over it shows the question for which the feedback is given, along with the review comment for that question.

- File changed - app/views/response/_response_analysis.html.erb
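A minimal sketch of how the tooltip text for a comment number could be assembled, assuming a tooltip widget that renders HTML content. Because reviewers type the comments themselves, the text is escaped before it is inserted. `escapeHtml` and `buildTooltip` are illustrative names, not functions from the project.

```javascript
// Hypothetical helper: escapes characters that are special in HTML so that
// user-written comments cannot inject markup into the tooltip.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;") // must run first so later entities are not re-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical helper: builds the tooltip body for one comment number from
// the rubric question and the reviewer's comment on it.
function buildTooltip(question, comment) {
  return `<strong>Question:</strong> ${escapeHtml(question)}<br>` +
         `<strong>Comment:</strong> ${escapeHtml(comment)}`;
}

console.log(buildTooltip("Is the design <clear>?", "Mostly & well done"));
```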

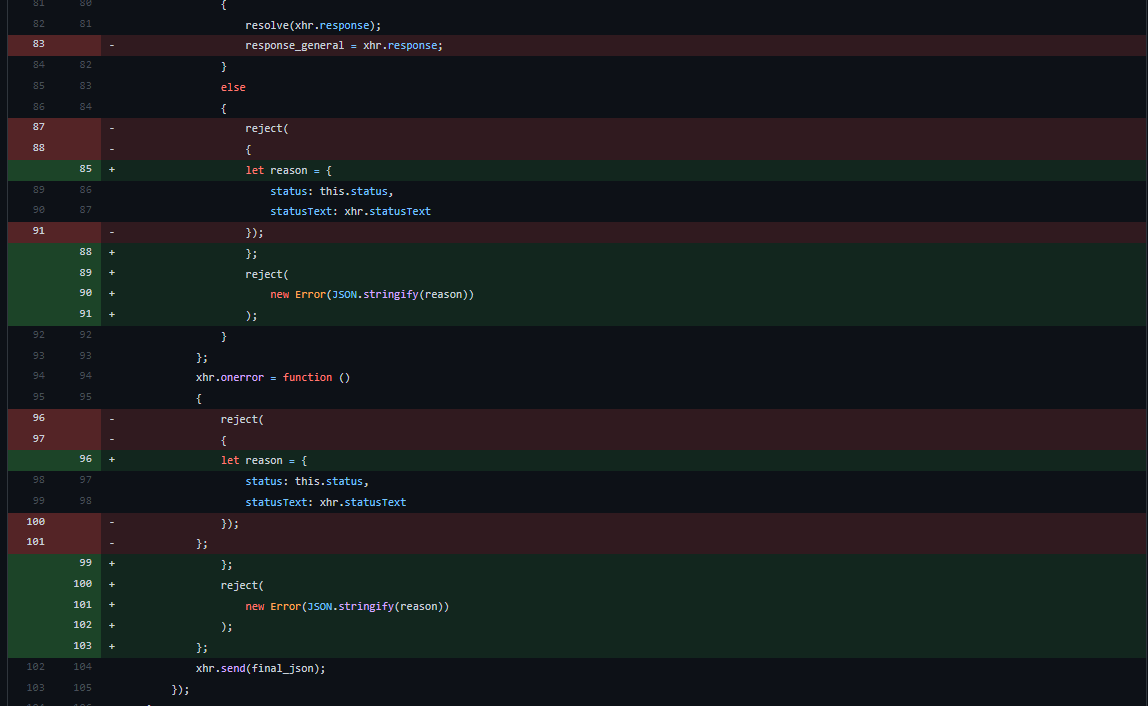

Bug Fixes (Dynamic Feedback)

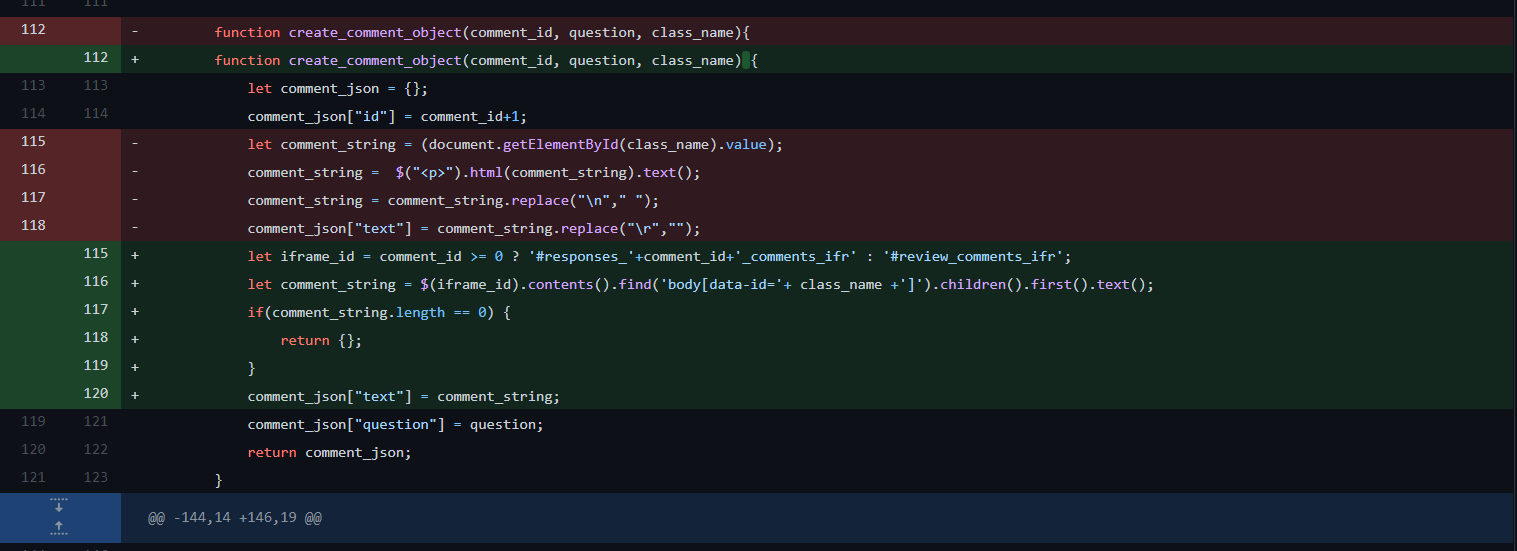

Previously, the implementation read the saved value of the text area field, so the user had to save the form before requesting feedback. We changed the implementation so that users can type a review comment and get feedback on it dynamically, before saving.

JavaScript implementation

- Description - We removed the global variable response_general, which was used to store the response. It is not needed because the Promise returns the response; in case of rejection, an error is thrown and handled in the makeRequest method.

- File changed - app/views/response/_response_analysis.html.erb
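The sketch below illustrates the Promise-based pattern described above: the function resolves with the parsed response instead of storing it in a global variable, and a failure surfaces as a rejected Promise. It assumes each analysis endpoint accepts a POST with the `{"text": ...}` body shown in the Sample API Input/Output section. `requestAnalysis` and its injectable `fetchImpl` parameter are hypothetical; they only play the role of makeRequest here.

```javascript
// Hypothetical Promise-based request helper (in the role of makeRequest).
// fetchImpl defaults to the global fetch; it is a parameter only so the
// function can be exercised without a network.
async function requestAnalysis(url, text, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ text }),
  });
  if (!response.ok) {
    // Rejects the returned Promise so the caller can show an error message.
    throw new Error(`Request to ${url} failed with status ${response.status}`);
  }
  // Resolves with the parsed JSON body; no global variable is involved.
  return response.json();
}

// In the browser, the comment would be read at click time, for example:
//   requestAnalysis(suggestionUrl, textarea.value).then(renderFeedback);
// where suggestionUrl, textarea and renderFeedback are placeholders.
```

Reading the comment from the text area's current value when the button is clicked, rather than from the saved form, is what allows unsaved edits to be analyzed.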

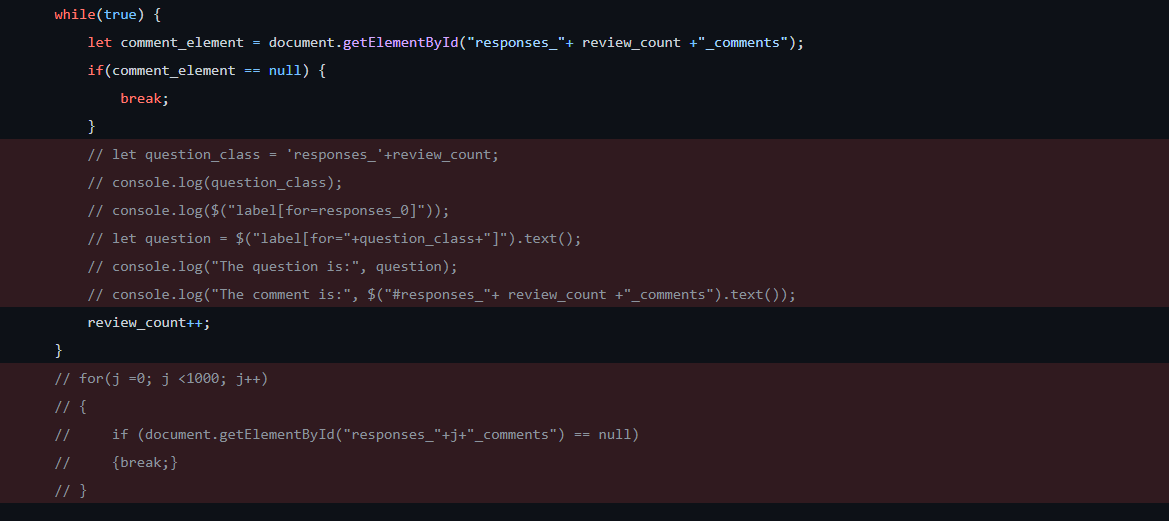

Refactoring (removing code duplication)

In the previous implementation, code was duplicated to handle the different scenarios of fetching the review comment, even though the duplicated blocks were mostly the same apart from a few conditions. We abstracted this functionality into a single method that is reused wherever it is needed.

The previous implementation also counted the rubric questions by parsing the DOM structure; we refactored that logic as well.

JavaScript implementation

- Description - The internal function create_comment_object generates a comment object from a question and the class_name of its DOM element. This comment JSON is then passed to the API to fetch the predictions.

- File changed - app/views/response/_response_analysis.html.erb
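The idea behind create_comment_object can be sketched without the DOM. The version below assumes the question/comment pairs have already been read from the form, so the question count is simply the array length rather than something parsed out of the DOM. `createCommentObjects` and `toApiPayload` are hypothetical names, and skipping blank comments is an assumption, not documented behavior.

```javascript
// Hypothetical sketch: turns { question, comment } pairs (already read from
// the review form) into numbered comment objects for the feedback table.
function createCommentObjects(pairs) {
  return pairs
    .map(({ question, comment }, index) => ({
      number: index + 1, // 1-based position of the rubric question
      question: question.trim(),
      text: (comment || "").trim(),
    }))
    // Assumption: questions the reviewer left blank are not sent to the API,
    // but the remaining entries keep their original question numbers.
    .filter((entry) => entry.text.length > 0);
}

// Builds the request body in the { "text": ... } format the API expects.
function toApiPayload(entry) {
  return { text: entry.text };
}

const comments = createCommentObjects([
  { question: "Are there tests?", comment: "   " },
  { question: "Is the code readable?", comment: "Yes, but add comments." },
]);
console.log(comments.map(toApiPayload));
```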

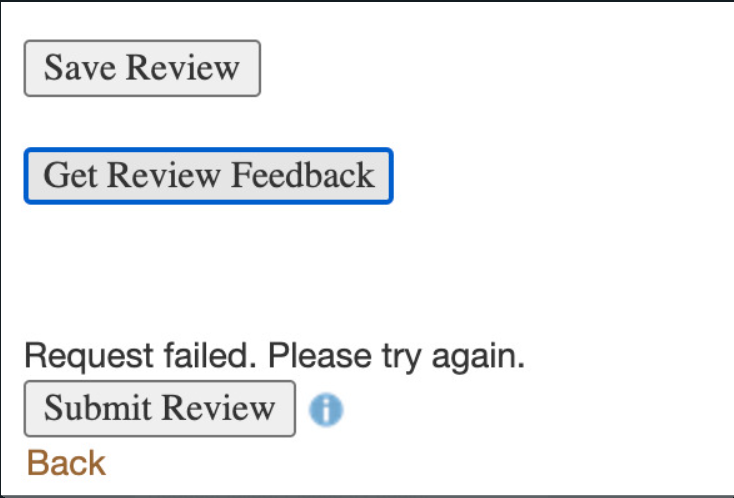

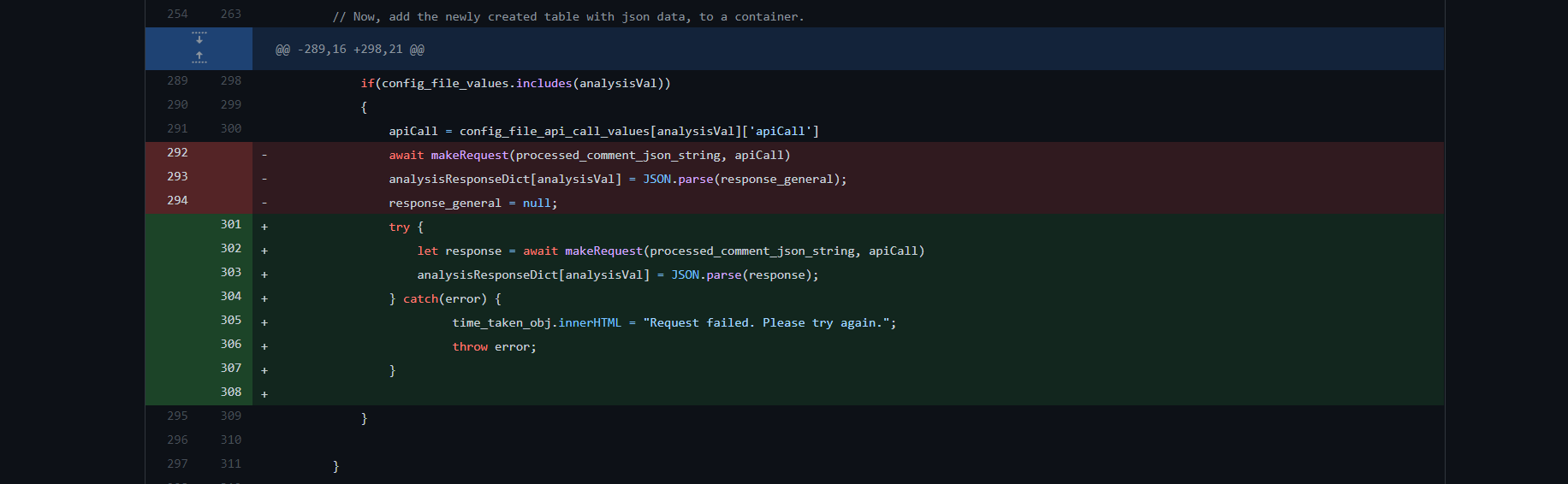

Error handling improvements

We introduced error handling: if the API for generating feedback fails, the “Loading…” text now changes to “Request failed. Please try again.” Previously, this case was not handled.

JavaScript implementation

- Description - We added try-catch blocks around the API calls. If the promise is rejected, we catch the error and change the “Loading…” text at the bottom to “Request failed. Please try again.”

- Files changed - app/views/response/_response_analysis.html.erb
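A minimal sketch of the try-catch pattern described above. It assumes the status line is any object with a `textContent` property (a DOM element in the browser) and that clearing the text on success is acceptable; `runWithStatus` is a hypothetical name.

```javascript
const LOADING_TEXT = "Loading…";
const FAILURE_TEXT = "Request failed. Please try again.";

// Hypothetical wrapper: shows the loading text while requestFn runs and
// replaces it with the failure message if the Promise is rejected.
async function runWithStatus(requestFn, statusElement) {
  statusElement.textContent = LOADING_TEXT;
  try {
    const result = await requestFn();
    statusElement.textContent = ""; // assumption: clear the status on success
    return result;
  } catch (error) {
    console.error(error); // keep the cause visible in the browser console
    statusElement.textContent = FAILURE_TEXT;
    return null;
  }
}
```

Because the loading text is set before the first `await`, it appears immediately when the button is clicked.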

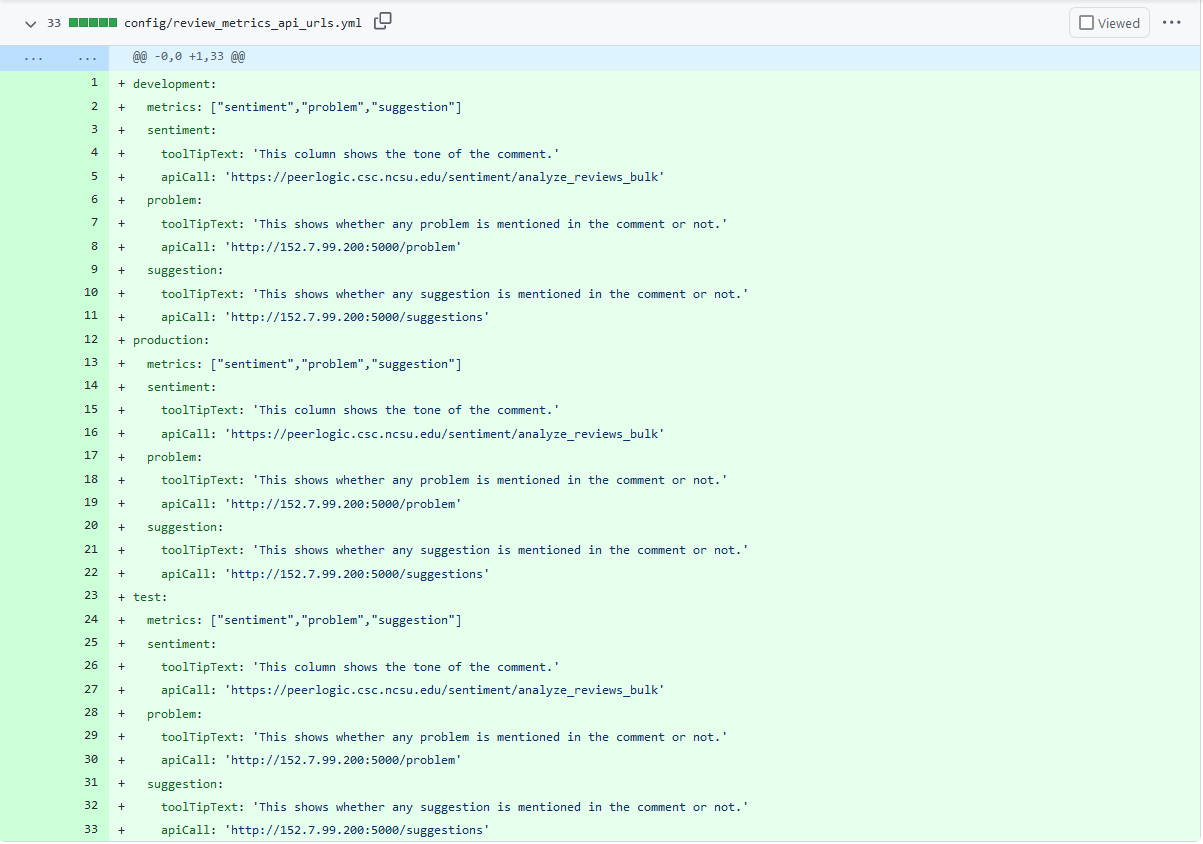

Refactoring (Removing Hardcoded Values)

In the previous implementation, the API URLs for each analysis and the tooltip descriptions for the analyses were hardcoded into the files. We moved these values into the config file below, and the values for each analysis can be accessed anywhere in _response_analysis.html.erb, the file that handles the processing for the analyses. As a result, every hardcoded analysis value is found in one location, and it is easier to add new values for each analysis.
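A sketch of how the centralized values might be consumed on the client side. It assumes the config has been made available to the page as a plain object keyed by analysis name; the keys, tooltip texts, and `example.test` URLs are placeholders rather than the contents of the real config file, and `analysisSetting` is a hypothetical helper.

```javascript
// Placeholder copy of per-analysis settings; in the real view these values
// would come from the config file rather than being written here.
const ANALYSIS_CONFIG = {
  sentiment: { url: "https://example.test/sentiment", tooltip: "Tone of the comment" },
  suggestion: { url: "https://example.test/suggestion", tooltip: "Whether the comment suggests an improvement" },
  problem: { url: "https://example.test/problem", tooltip: "Whether the comment points out a problem" },
};

// Looks up one setting for one analysis and fails loudly when it is missing,
// so a typo in the config is caught instead of yielding an undefined URL.
function analysisSetting(config, analysis, key) {
  const has = (obj, prop) => Object.prototype.hasOwnProperty.call(obj, prop);
  if (!has(config, analysis) || !has(config[analysis], key)) {
    throw new Error(`Missing config value "${key}" for analysis "${analysis}"`);
  }
  return config[analysis][key];
}

console.log(analysisSetting(ANALYSIS_CONFIG, "suggestion", "url"));
```

Adding another per-analysis value, such as a display label, would then only mean adding one key to each entry.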

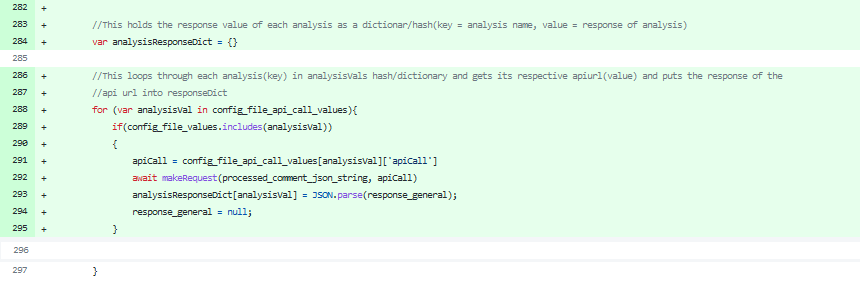

Refactoring (removing duplicate API code)

In the previous implementation, each analysis in _response_analysis.html.erb had its own block of code for getting the response message, processing the values, and displaying them in the table. Most of these blocks were duplicates; only a few analyses needed different methods for getting, processing, or displaying data. In the new implementation, a for loop iterates over the analyses and runs a default block of code for getting, processing, and displaying data. If an analysis has its own method for any of these steps, an if statement in the loop runs that method instead.
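The default-unless-overridden loop described above can be sketched as follows. The handler names (`process`, `display`), the shape of the analysis list, and the use of fields from the sample API output are assumptions for illustration; the project's actual loop may be structured differently.

```javascript
// Default steps used for every analysis that does not override them.
const defaultHandlers = {
  // Read the value for this analysis straight from the API response.
  process: (response, name) => response[name],
  // Put the value into the table row under the analysis name.
  display: (row, name, value) => { row[name] = value; },
};

// Builds one table row, running each analysis through its own handlers
// when present and through the defaults otherwise.
function renderRow(response, analyses) {
  const row = {};
  for (const analysis of analyses) {
    const processFn = analysis.process ? analysis.process : defaultHandlers.process;
    const displayFn = analysis.display ? analysis.display : defaultHandlers.display;
    displayFn(row, analysis.name, processFn(response, analysis.name));
  }
  return row;
}

const sampleResponse = { sentiment_score: 0.9, sentiment_tone: "Positive", suggestions: "absent" };
const analyses = [
  { name: "sentiment_tone" }, // default handling
  { name: "sentiment_score", process: (r) => r.sentiment_score.toFixed(2) }, // custom step
  { name: "suggestions" },
];
console.log(renderRow(sampleResponse, analyses));
```

Keeping the overrides as optional properties means a new analysis with standard behavior needs no code of its own, only an entry in the list.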

Files edited

- review_metrics.yml

- response.html.erb

- _response_analysis.html.erb

- response_controller.rb

- response_controller_spec.rb

Sample API Input/Output

- The input text is passed in the following JSON format:

```json
{
  "text": "This is an excellent project. Keep up the great work"
}
```

- Output is returned in the following JSON format:

```json
{
  "sentiment_score": 0.9,
  "sentiment_tone": "Positive",
  "suggestions": "absent",
  "suggestions_chances": 10.17,
  "text": "This is an excellent project. Keep up the great work",
  "total_volume": 10,
  "volume_without_stopwords": 6
}
```
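To show how a client might consume this output, here is a small sketch that parses a response body and builds a one-line summary from the fields above. It assumes `total_volume` and `volume_without_stopwords` are word counts (the 10-word sample input is consistent with that) and that all of these fields are present; `summarizeFeedback` is a hypothetical name.

```javascript
// Fields the summary relies on; taken from the sample output above.
const REQUIRED_FIELDS = [
  "sentiment_score", "sentiment_tone", "suggestions",
  "total_volume", "volume_without_stopwords",
];

// Parses a JSON response body and returns a short human-readable summary.
// Throws if any field the summary relies on is missing.
function summarizeFeedback(body) {
  const data = JSON.parse(body);
  const missing = REQUIRED_FIELDS.filter((field) => !(field in data));
  if (missing.length > 0) {
    throw new Error(`Response is missing: ${missing.join(", ")}`);
  }
  return `${data.sentiment_tone} (score ${data.sentiment_score}); ` +
         `suggestions: ${data.suggestions}; ` +
         `${data.volume_without_stopwords} of ${data.total_volume} words remain after removing stopwords`;
}

const sampleBody = JSON.stringify({
  sentiment_score: 0.9,
  sentiment_tone: "Positive",
  suggestions: "absent",
  suggestions_chances: 10.17,
  text: "This is an excellent project. Keep up the great work",
  total_volume: 10,
  volume_without_stopwords: 6,
});
console.log(summarizeFeedback(sampleBody));
```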

Testing Plan

Automated Test

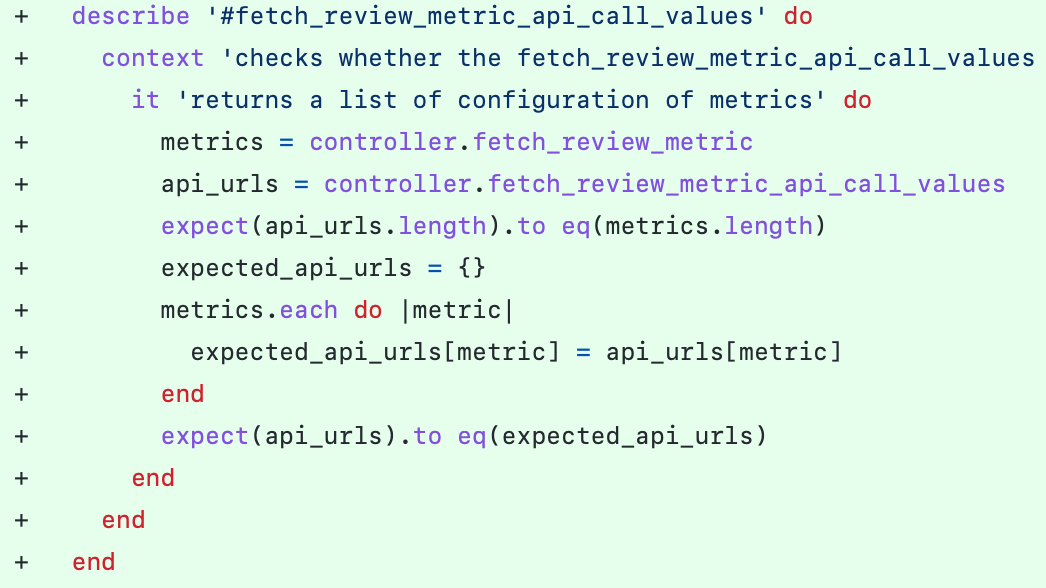

spec/controllers/response_controller_spec.rb

Added a test that confirms the correct API URLs are pulled from the config file.

Manual Test

- The functionality is written client-side in JavaScript, entirely in _response_analysis.html.erb.

- To test this view, log in as a student who has access to a review (any type of review works).

- There is a button at the bottom of the review called 'Get Review Feedback'.

- When the button is pressed, API calls are issued and the metrics appear in the table (a sample is shown in the screenshots above).

- Because the API calls take time, the text 'Loading…' appears until they are complete.

- All the review feedback for the comments is displayed in a colorful table.

- In the feedback table, hovering over a comment number shows the rubric item and the review comment associated with that rubric item.

Important Links

- https://docs.google.com/document/d/1slx4HPIbgTH-psIKMSCF-HDF9brxf-FuYhzVT9ZiIrM/edit#heading=h.fxfungdw1d5r

- https://github.com/expertiza/expertiza/pull/2159

- https://youtu.be/oN7qCNTFBE8

Team

- Prashan Sengo (psengo)

- Griffin Brookshire (glbrook2)

- Divyang Doshi (ddoshi2)

- Srujan Ponnur (sponnur)