CSC/ECE 517 Fall 2019 - M1950. Support Asynchronous Web Assembly Compilation

Servo is a prototype web browser engine written in the Rust language. It supports synchronously compiling and executing WebAssembly code but does not yet support asynchronous compilation. As a result, the entire WebAssembly program must be fetched before compilation can begin, so pages that run WebAssembly programs take longer to load in Servo than in other web browsers. The goal of the project is to support compiling WebAssembly programs asynchronously, so that compilation can begin while the program is still being fetched from the network.

Introduction

Servo

Servo is an open-source prototype web browser layout engine developed by Mozilla and written in Rust. The main idea is to create a highly parallel environment in which different components, such as rendering and HTML parsing, are handled by fine-grained, isolated tasks.

Rust

Rust is an open-source systems programming language developed by Mozilla, and it is the language Servo is written in. Rust is designed primarily for thread safety and concurrency, with an emphasis on speed, safety, and control over memory layout.

Web Assembly

WebAssembly is a new type of code that can be run in modern web browsers. It is a low-level, assembly-like language with a compact binary format that runs with near-native performance and provides languages such as C/C++ and Rust with a compilation target so that they can run on the web. It is also designed to run alongside JavaScript, allowing both to work together.

Scope

Major browsers support the WebAssembly standard, which can be used to implement performance-sensitive sandboxed applications. Servo is a new, experimental browser that supports synchronously compiling and executing WebAssembly code but does not yet support asynchronous compilation. This means that the entire WebAssembly program must be fetched before compilation can begin, which makes pages that run WebAssembly programs load more slowly than in other web browsers. The goal of this work is to support compiling WebAssembly programs asynchronously, so that compilation can begin while the program is still being fetched from the network.

The scope of the OSS project milestone was to complete the initial steps listed below; the subsequent steps were to be completed for the final project.

The steps for the OSS project are as follows:

- The first requirement was to build and compile Servo, using the following steps:

Servo is built with Cargo, the Rust package manager. Mozilla's Mach tools are used to orchestrate the build and other tasks.

git clone https://github.com/servo/servo

cd servo

./mach build --dev

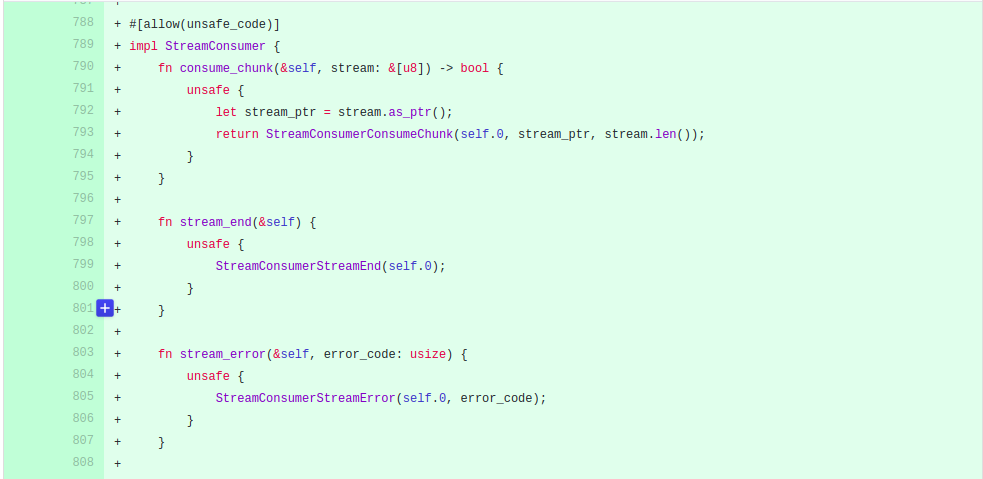

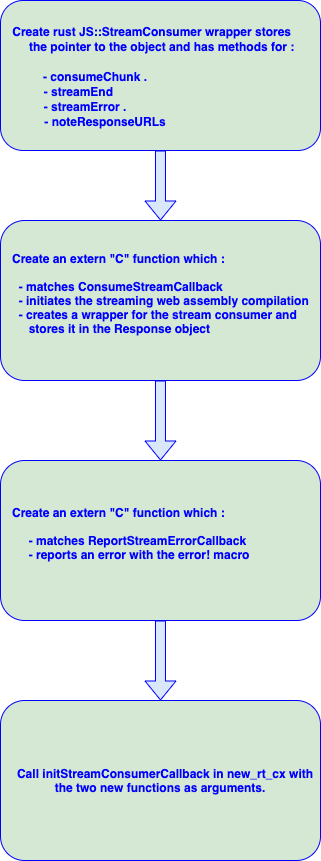

- Create a Rust JS::StreamConsumer wrapper that stores a pointer to the object and has methods for consumeChunk, streamEnd, streamError, and noteResponseURLs.

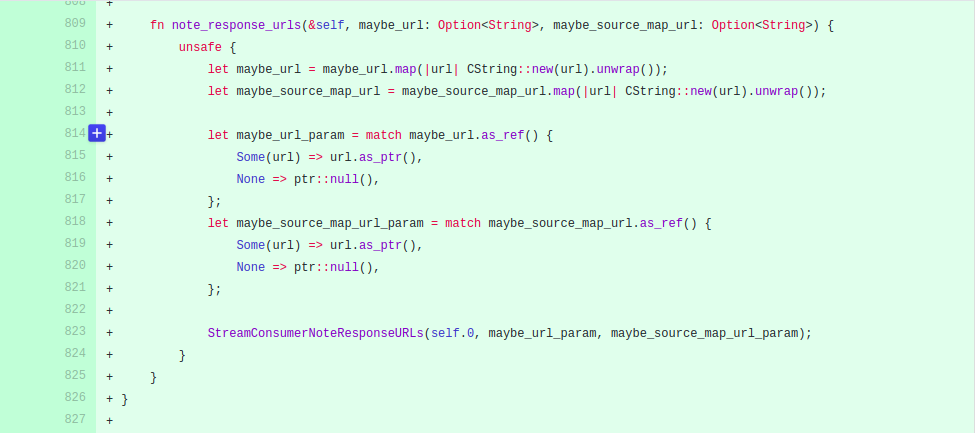

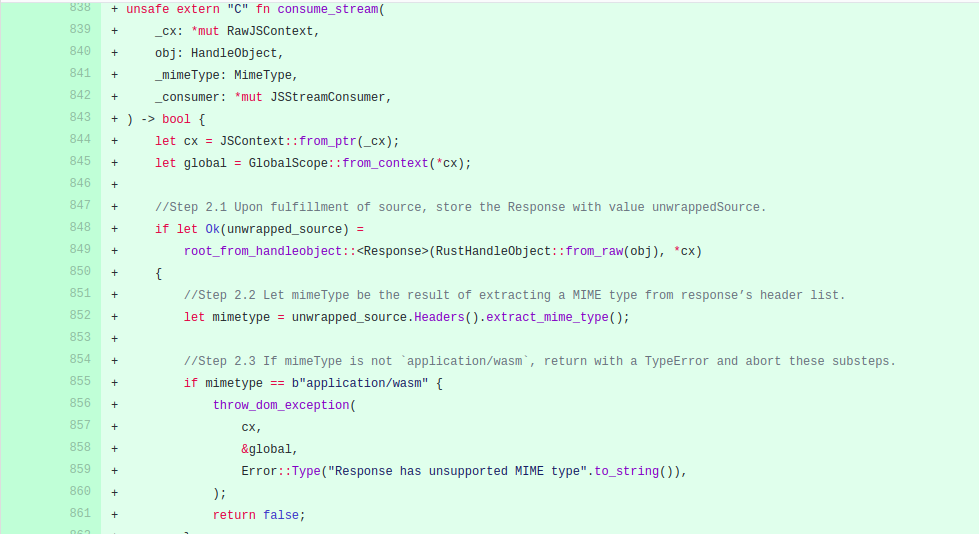

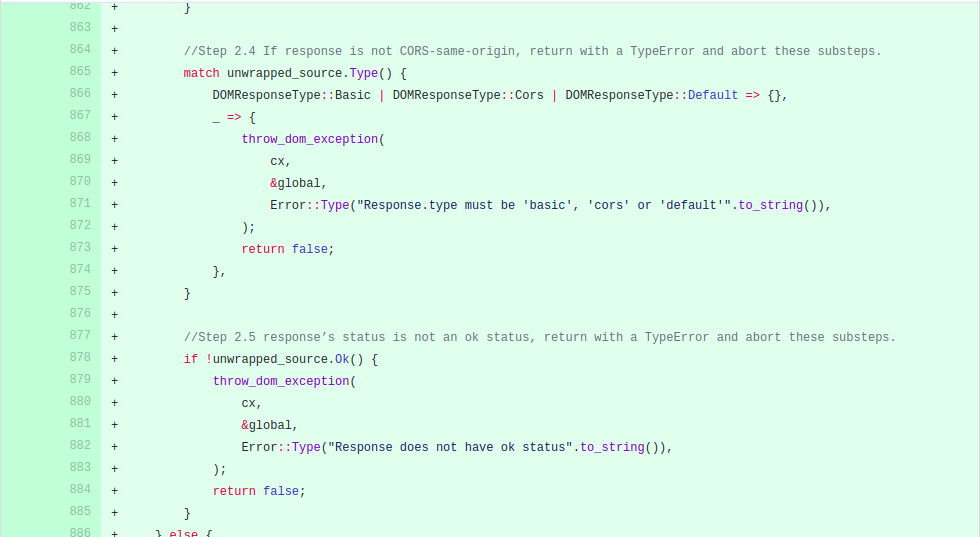

- Implement an extern "C" function in script_runtime.rs that matches ConsumeStreamCallback, initiates the streaming WebAssembly compilation, then creates a wrapper for the stream consumer and stores it in the Response object.

- Implement an extern "C" function in script_runtime.rs that matches ReportStreamErrorCallback and reports an error with the error! macro.

- Lastly, call InitStreamConsumerCallback in new_rt_and_cx with the two new functions as arguments, as described in the specification.

The steps for the final project are as follows:

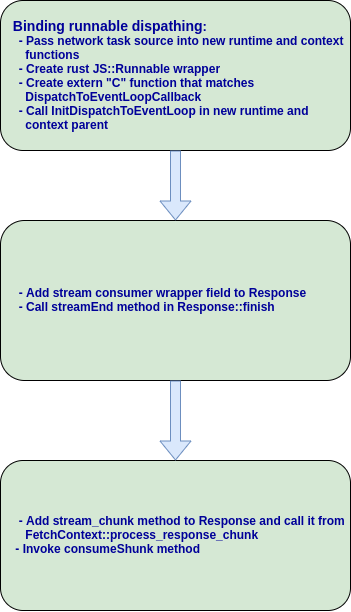

- Bind runnable dispatching:

- Pass the network task source into the new_rt_and_cx functions

- Create a Rust JS::Runnable wrapper that stores a pointer to the object and has a run method that calls the new C function, passing the stored pointer

- Create an extern "C" function in script_runtime.rs that matches DispatchToEventLoopCallback, casts the closure argument to a boxed network task source, wraps the runnable pointer in the new struct, and uses the network task source to queue a task that calls the run method of the wrapper

- Call InitDispatchToEventLoop (declared in mozjs/js/src/jsapi.h) in new_rt_and_cx_with_parent, passing the new function and the boxed network task source as the closure argument

- Add a stream consumer wrapper field to Response, and call the streamEnd method in Response::finish

- Add a stream_chunk method to Response and call it from FetchContext::process_response_chunk; this should invoke the consumeChunk method of the stream consumer wrapper if it exists.

Design Pattern

Design patterns are not applicable, as our task involved only implementing methods. However, the Implementation section below explains why each step was implemented and how it was implemented.

Implementation

The following steps were followed to meet the project requirements, as described on the project's GitHub wiki page.

OSS Project (Initial Steps)

Step 1

We implemented a StreamConsumer structure with methods for consumeChunk, streamEnd, streamError, and noteResponseURLs, based on the StreamConsumer class in Mozilla's SpiderMonkey engine.

The note_response_urls function takes optional arguments as input; if a URL is not provided, it calls the underlying JS function with a null pointer in place of that URL.
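The wrapper described above can be sketched as follows. This is a minimal, self-contained illustration rather than the Servo code: `MockConsumer` and `mock_note_response_urls` are hypothetical stand-ins for SpiderMonkey's C++ `JS::StreamConsumer` and its C glue, so the example runs without mozjs. It shows the two ideas from the text: the wrapper only stores a raw pointer, and a `None` URL is passed on as a null pointer.

```rust
use std::ffi::{CStr, CString};
use std::os::raw::c_char;
use std::ptr;

/// Hypothetical stand-in for SpiderMonkey's `JS::StreamConsumer`:
/// it only records what it receives so the wrapper can be exercised.
#[derive(Default)]
pub struct MockConsumer {
    pub bytes: Vec<u8>,
    pub ended: bool,
    pub error_code: Option<usize>,
    pub url: Option<String>,
    pub source_map_url: Option<String>,
}

/// Reads a nullable C string; a null pointer means "not provided".
unsafe fn read_c_str(p: *const c_char) -> Option<String> {
    if p.is_null() {
        None
    } else {
        Some(unsafe { CStr::from_ptr(p) }.to_string_lossy().into_owned())
    }
}

/// Hypothetical C-style glue that, like noteResponseURLs, takes nullable strings.
unsafe fn mock_note_response_urls(c: *mut MockConsumer, url: *const c_char, map: *const c_char) {
    unsafe {
        (*c).url = read_c_str(url);
        (*c).source_map_url = read_c_str(map);
    }
}

/// The wrapper stores only a raw pointer; the engine owns the consumer.
pub struct StreamConsumer(*mut MockConsumer);

impl StreamConsumer {
    pub fn new(ptr: *mut MockConsumer) -> Self {
        StreamConsumer(ptr)
    }

    /// The real consumeChunk may return false to abort; the mock always accepts.
    pub fn consume_chunk(&self, chunk: &[u8]) -> bool {
        unsafe { (*self.0).bytes.extend_from_slice(chunk) };
        true
    }

    pub fn stream_end(&self) {
        unsafe { (*self.0).ended = true };
    }

    pub fn stream_error(&self, error_code: usize) {
        unsafe { (*self.0).error_code = Some(error_code) };
    }

    /// Passes a null pointer for each URL that is `None`.
    pub fn note_response_urls(&self, maybe_url: Option<String>, maybe_source_map_url: Option<String>) {
        // The CStrings must stay alive until the call returns.
        let url = maybe_url.map(|u| CString::new(u).expect("URL contains a NUL byte"));
        let map = maybe_source_map_url.map(|u| CString::new(u).expect("URL contains a NUL byte"));
        let as_ptr = |s: &Option<CString>| s.as_ref().map_or(ptr::null(), |c| c.as_ptr());
        unsafe { mock_note_response_urls(self.0, as_ptr(&url), as_ptr(&map)) }
    }
}
```

In the real wrapper the pointer comes from the engine and must stay valid for as long as the wrapper is used; in this sketch it simply points at a local `MockConsumer`.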

Step 2

We implemented the consume stream function, which checks whether the response extracted from the source is valid and returns an appropriate boolean value. The function checks that the response has a valid MIME type, is CORS-same-origin, and has an OK status. If the response cannot be extracted from the source, we throw a DOM exception and return false.

This step checks whether the response type is "default", "cors", or "basic". The next check examines the status code of the response and throws an error if the response is not OK, i.e. if the status is not between 200 and 299.

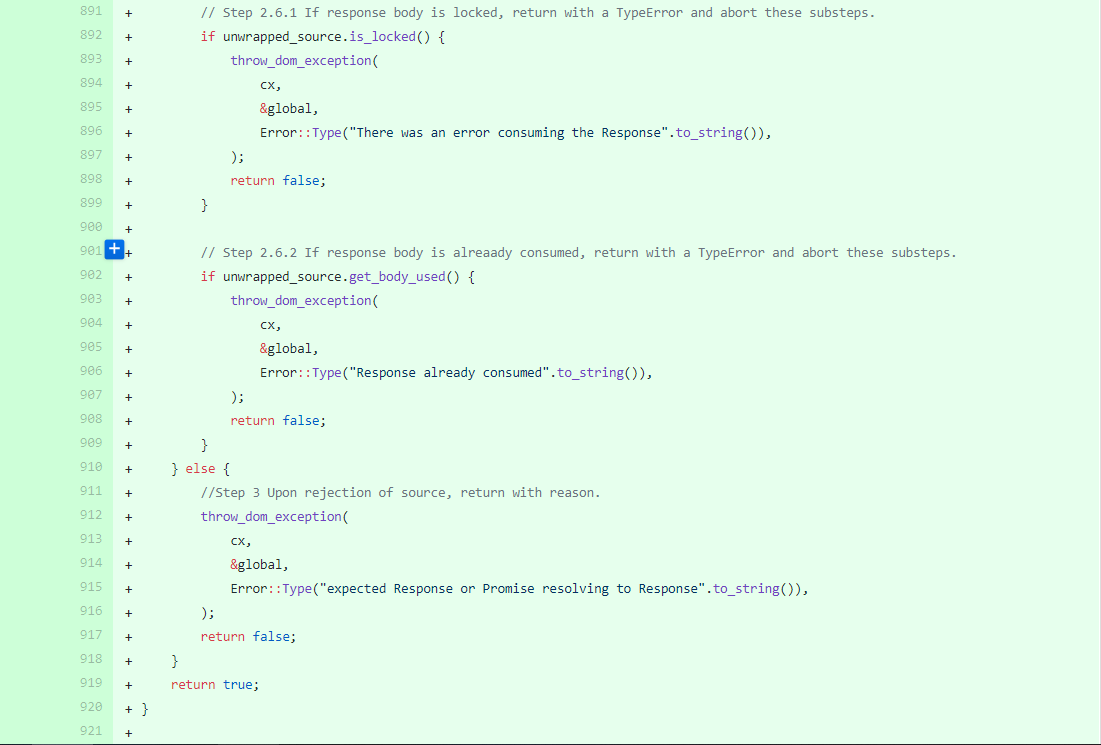

These steps check whether the response is locked. If the response is locked, it cannot be accessed, so a TypeError is thrown. If the response's body has already been consumed, it cannot be processed again, and the function likewise throws a TypeError.
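The checks in Step 2 can be summarized in plain Rust. This sketch assumes a simplified, hypothetical `Response` struct and `StreamError` enum in place of Servo's DOM types and JS exceptions, and it assumes the valid MIME type is `application/wasm`, as required by the WebAssembly Web API specification. Only the MIME essence is compared, so parameters such as `; charset=utf-8` are ignored.

```rust
/// Response types from the Fetch standard (simplified).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum ResponseType {
    Basic,
    Cors,
    Default,
    Error,
    Opaque,
    OpaqueRedirect,
}

/// Hypothetical, simplified view of the fields the checks need.
pub struct Response {
    pub mime_type: Option<String>,
    pub response_type: ResponseType,
    pub status: u16,
    pub locked: bool,
    pub body_used: bool,
}

/// Hypothetical error type standing in for the thrown JS TypeError.
#[derive(Debug, PartialEq)]
pub enum StreamError {
    BadMimeType,
    NotCorsSameOrigin,
    NotOk(u16),
    Locked,
    BodyUsed,
}

pub fn check_response(r: &Response) -> Result<(), StreamError> {
    // Compare only the MIME essence, ignoring parameters such as "; charset=...".
    let essence = r
        .mime_type
        .as_deref()
        .map(|m| m.split(';').next().unwrap_or("").trim().to_ascii_lowercase());
    if essence.as_deref() != Some("application/wasm") {
        return Err(StreamError::BadMimeType);
    }
    // CORS-same-origin means the type is "basic", "cors" or "default".
    match r.response_type {
        ResponseType::Basic | ResponseType::Cors | ResponseType::Default => {}
        _ => return Err(StreamError::NotCorsSameOrigin),
    }
    if !(200..=299).contains(&r.status) {
        return Err(StreamError::NotOk(r.status));
    }
    if r.locked {
        return Err(StreamError::Locked);
    }
    if r.body_used {
        return Err(StreamError::BodyUsed);
    }
    Ok(())
}

/// Mirrors the callback's boolean contract: false means an error was reported.
pub fn consume_stream(r: &Response) -> bool {
    match check_response(r) {
        // Real code would start compilation and store the consumer wrapper here.
        Ok(()) => true,
        Err(e) => {
            eprintln!("TypeError: {:?}", e);
            false
        }
    }
}
```

The order of the checks follows the description above, and each `Err` variant corresponds to one of the TypeErrors the real callback throws.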

Step 3

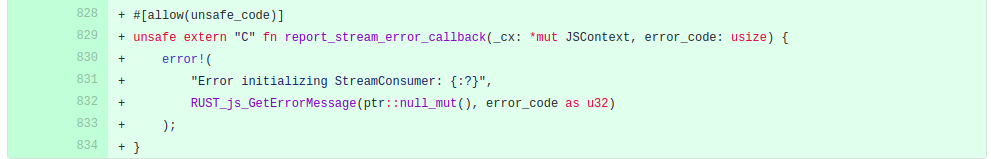

We implemented the ReportStreamErrorCallback function to retrieve and report errors raised during streaming compilation, such as a CompileError, or a TypeError in the case of an invalid MIME type or an unprocessable stream.
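A function with the same shape as ReportStreamErrorCallback (a context pointer plus a numeric error code) can be sketched as below. The opaque `JSContext`, the error-code table, and `format_stream_error` are hypothetical; the actual callback obtains the message from the engine and logs it with the `error!` macro from the `log` crate, which is replaced here by `eprintln!` to keep the example dependency-free.

```rust
use std::ptr;

/// Opaque, hypothetical stand-in for SpiderMonkey's `JSContext`.
#[repr(C)]
pub struct JSContext {
    _private: [u8; 0],
}

/// Hypothetical table; the real callback asks the engine for the message.
pub fn format_stream_error(error_code: usize) -> String {
    let message = match error_code {
        0 => "CompileError: invalid WebAssembly module",
        1 => "TypeError: invalid MIME type",
        2 => "TypeError: response stream could not be processed",
        _ => "unknown streaming error",
    };
    format!("WebAssembly streaming error (code {}): {}", error_code, message)
}

/// Same shape as ReportStreamErrorCallback: a context pointer and an error code.
pub unsafe extern "C" fn report_stream_error(_cx: *mut JSContext, error_code: usize) {
    // Servo logs with the `log` crate's `error!` macro; eprintln! avoids the dependency.
    eprintln!("{}", format_stream_error(error_code));
}

/// The function-pointer type the engine would store.
pub type ReportStreamErrorCallback = unsafe extern "C" fn(*mut JSContext, usize);

/// Invokes the callback through a function pointer, as the engine would.
pub fn report_via_pointer(cb: ReportStreamErrorCallback, code: usize) {
    unsafe { cb(ptr::null_mut(), code) }
}
```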

Step 4

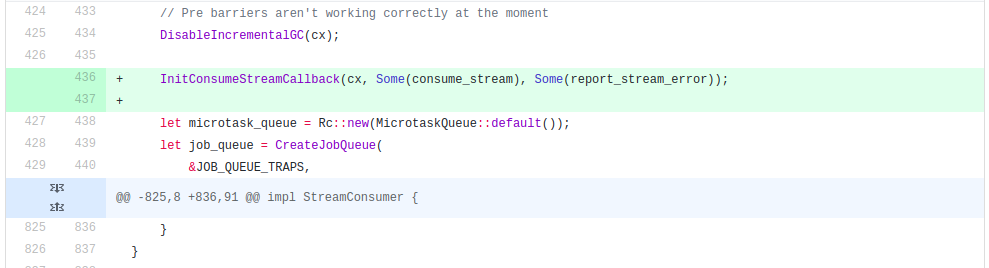

We call InitStreamConsumerCallback in the new_rt_and_cx_with_parent function, registering the consume stream callback added in Step 2 and the report stream error callback added in Step 3 with the new runtime.
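The registration in Step 4 can be sketched with plain function pointers. Everything below is hypothetical and simplified so that it runs without mozjs: `Runtime` just records the callbacks, `init_stream_consumer_callback` stands in for InitStreamConsumerCallback, and the callback types omit arguments (such as the MIME type) that the engine's own ConsumeStreamCallback is assumed to receive.

```rust
use std::os::raw::c_void;
use std::ptr;

/// Opaque, hypothetical stand-in for SpiderMonkey's `JSContext`.
#[repr(C)]
pub struct JSContext {
    _private: [u8; 0],
}

// Simplified, hypothetical callback types.
pub type ConsumeStreamCallback =
    unsafe extern "C" fn(*mut JSContext, *mut c_void, *mut c_void) -> bool;
pub type ReportStreamErrorCallback = unsafe extern "C" fn(*mut JSContext, usize);

/// Hypothetical runtime that remembers which callbacks were registered.
pub struct Runtime {
    pub cx: *mut JSContext,
    pub consume_stream: Option<ConsumeStreamCallback>,
    pub report_stream_error: Option<ReportStreamErrorCallback>,
}

/// Stand-in for InitStreamConsumerCallback.
pub fn init_stream_consumer_callback(
    rt: &mut Runtime,
    consume: ConsumeStreamCallback,
    report: ReportStreamErrorCallback,
) {
    rt.consume_stream = Some(consume);
    rt.report_stream_error = Some(report);
}

unsafe extern "C" fn consume_stream(_cx: *mut JSContext, obj: *mut c_void, _consumer: *mut c_void) -> bool {
    // Placeholder for the Step 2 checks: reject a missing source object.
    !obj.is_null()
}

unsafe extern "C" fn report_stream_error(_cx: *mut JSContext, error_code: usize) {
    eprintln!("WebAssembly streaming error, code {}", error_code);
}

/// Sketch of the registration performed while the runtime is created.
pub fn new_rt_and_cx_with_parent() -> Runtime {
    let mut rt = Runtime {
        cx: ptr::null_mut(),
        consume_stream: None,
        report_stream_error: None,
    };
    init_stream_consumer_callback(&mut rt, consume_stream, report_stream_error);
    rt
}
```

Registering the callbacks once, when the runtime is built, means every later `WebAssembly.compileStreaming` call on that runtime can reach them without further setup.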

Final Project (Subsequent Steps)

Step 1

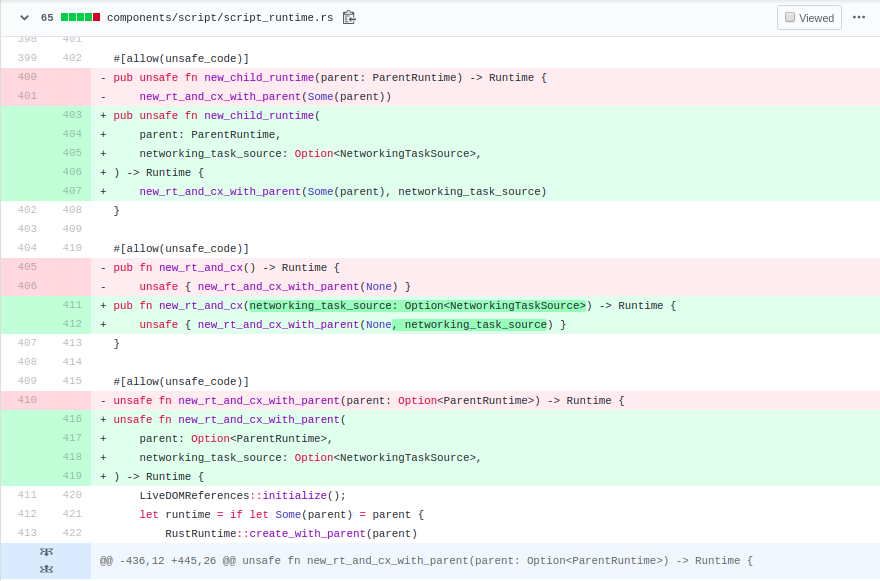

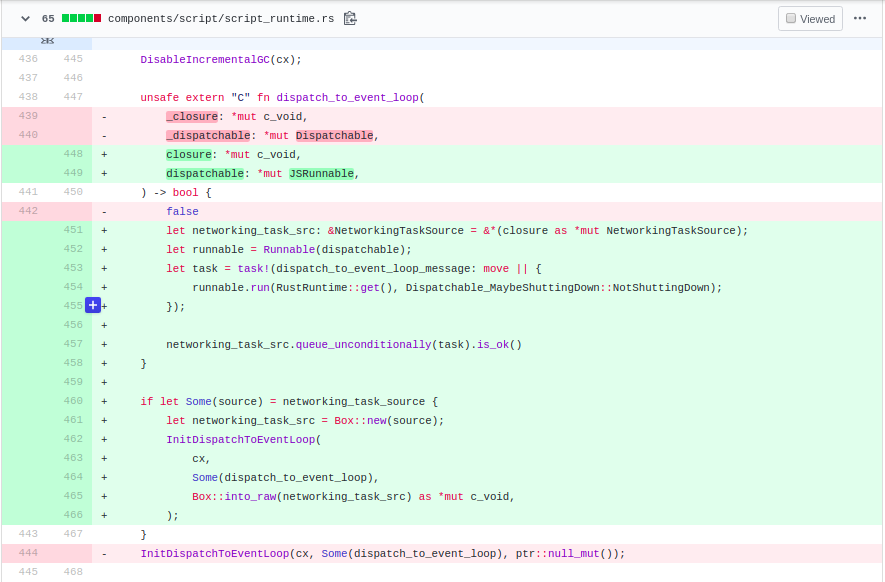

- To enable asynchronous compilation, we had to integrate event loop support and bind runnable dispatching in the Servo runtime. For this, we needed to pass a NetworkingTaskSource object into the new_rt_and_cx and new_rt_and_cx_with_parent functions so that tasks could be added to the job queue for execution.

- Implemented an extern "C" function, dispatch_to_event_loop, in script_runtime.rs that matches DispatchToEventLoopCallback. It casts the closure argument to a boxed network task source, wraps the runnable pointer in the new struct, and uses the network task source to queue a task that calls the run method of the wrapper. Additionally, we called the InitDispatchToEventLoop function in new_rt_and_cx_with_parent, passing the new function and the boxed network task source as the closure argument.

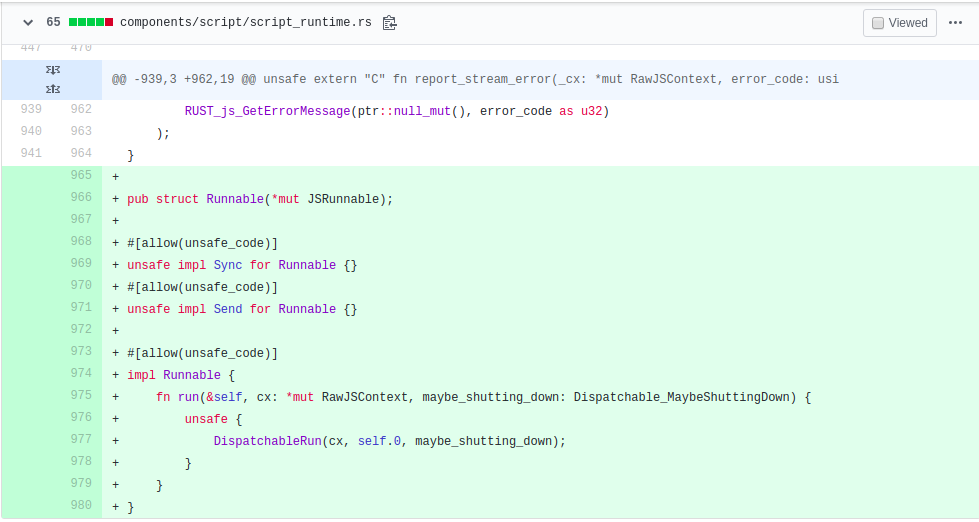

- Added a Rust JS::Runnable wrapper in script_runtime.rs that stores a pointer to the object and has a run method that calls the added C function, DispatchableRun, passing the stored pointer.
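The dispatch pattern from this step can be illustrated with a self-contained sketch. `Dispatchable`, `dispatchable_run` and the `NetworkingTaskSource` queue below are hypothetical stand-ins (Servo's real task source queues tasks on the script thread's event loop). The sketch shows the sequence described above: the `closure` pointer is cast back to the boxed task source, the engine's pointer is wrapped in a `Runnable`, and a task that calls `run` is queued rather than executed immediately.

```rust
use std::cell::RefCell;
use std::collections::VecDeque;
use std::os::raw::c_void;

/// Hypothetical stand-in for SpiderMonkey's `JS::Dispatchable`.
#[repr(C)]
pub struct Dispatchable {
    pub runs: u32,
}

/// Stand-in for the C glue function DispatchableRun.
unsafe fn dispatchable_run(ptr: *mut Dispatchable) {
    unsafe { (*ptr).runs += 1 };
}

/// Wrapper that stores the raw pointer handed over by the engine.
pub struct Runnable(*mut Dispatchable);

impl Runnable {
    pub fn run(&self) {
        unsafe { dispatchable_run(self.0) }
    }
}

/// Hypothetical task source: queues closures for a later event loop turn.
#[derive(Default)]
pub struct NetworkingTaskSource {
    queue: RefCell<VecDeque<Box<dyn FnOnce()>>>,
}

impl NetworkingTaskSource {
    pub fn queue_task(&self, task: Box<dyn FnOnce()>) {
        self.queue.borrow_mut().push_back(task);
    }

    /// Runs queued tasks in FIFO order and returns how many ran.
    pub fn run_pending(&self) -> usize {
        let mut ran = 0;
        loop {
            // The RefCell borrow ends with this statement, so tasks may queue more tasks.
            let next = self.queue.borrow_mut().pop_front();
            match next {
                Some(task) => {
                    task();
                    ran += 1;
                }
                None => return ran,
            }
        }
    }
}

/// Shaped like DispatchToEventLoopCallback: `closure` is the boxed task source
/// that was registered through InitDispatchToEventLoop.
pub unsafe extern "C" fn dispatch_to_event_loop(closure: *mut c_void, dispatchable: *mut Dispatchable) -> bool {
    let task_source = unsafe { &*(closure as *const NetworkingTaskSource) };
    let runnable = Runnable(dispatchable);
    task_source.queue_task(Box::new(move || runnable.run()));
    true
}
```

When registering, the task source would be boxed and leaked into a raw pointer with `Box::into_raw`, and reclaimed with `Box::from_raw` when the runtime is torn down; the work itself only runs when the event loop drains the queue.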

Step 2

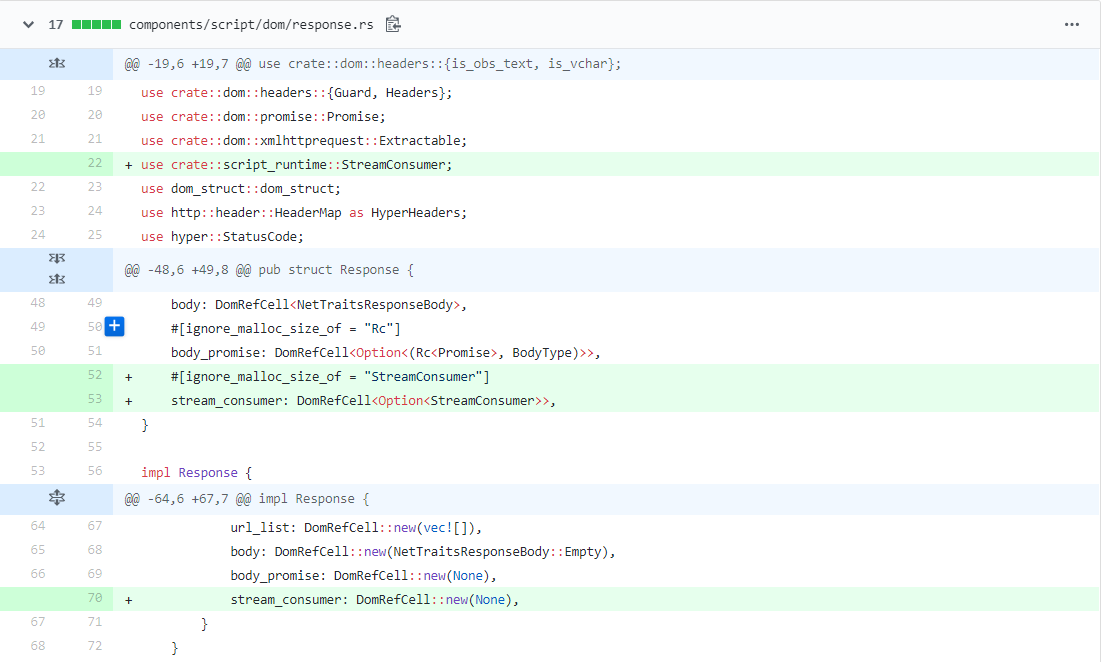

We added the StreamConsumer wrapper defined in the initial steps as a field of the Response structure.

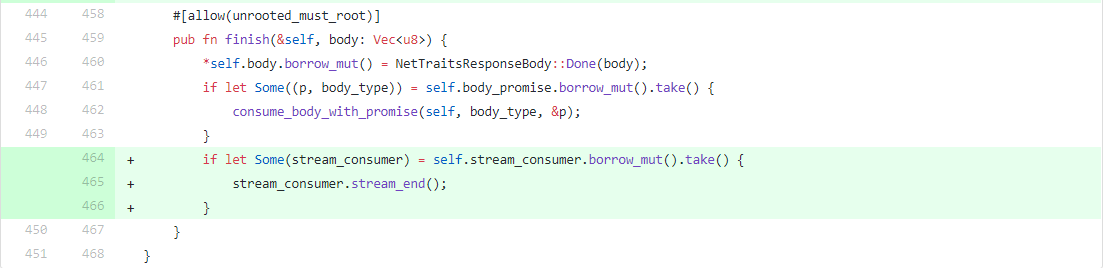

In the Response::finish() method, we call the stream_end() function on the newly added StreamConsumer field.
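A minimal sketch of this Response change, assuming a simplified, hypothetical `StreamConsumer` that only records whether the stream ended. The field is an `Option` because only a response handed to WebAssembly streaming compilation has a consumer attached; for every other response, `finish` behaves as before.

```rust
use std::cell::Cell;

/// Hypothetical, simplified stream consumer that records the end of the stream.
#[derive(Default)]
pub struct StreamConsumer {
    pub ended: Cell<bool>,
}

impl StreamConsumer {
    pub fn stream_end(&self) {
        self.ended.set(true);
    }
}

/// Sketch of the Response change: an optional consumer field.
#[derive(Default)]
pub struct Response {
    pub stream_consumer: Option<StreamConsumer>,
    pub finished: bool,
}

impl Response {
    pub fn finish(&mut self) {
        self.finished = true;
        // Signal that no more bytes will arrive so compilation can complete.
        if let Some(consumer) = &self.stream_consumer {
            consumer.stream_end();
        }
    }
}
```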

Step 3

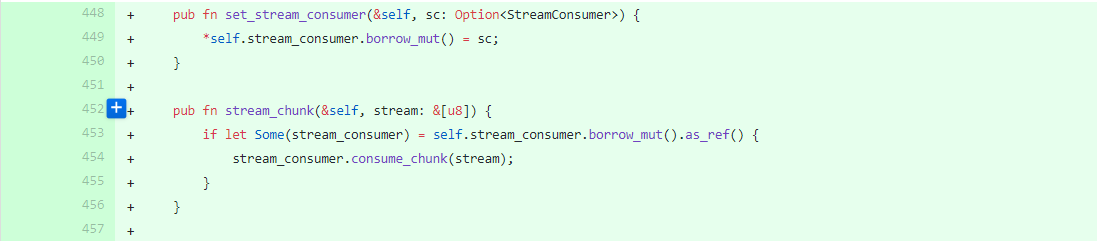

We added a stream_chunk method to the Response class, which invokes the consume_chunk() method of the StreamConsumer object. The consume_chunk() method passes each chunk of bytes to the engine so that the WebAssembly module is compiled asynchronously as data arrives, and it returns a boolean value indicating whether the chunk was accepted.
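The chunk forwarding can be sketched in the same simplified style. `StreamConsumer`, `Response` and `FetchContext` below are hypothetical, trimmed-down types; in Servo the consumer hands bytes to SpiderMonkey instead of collecting them in a vector. In this sketch, `stream_chunk` treats a response without a consumer as a no-op that reports success.

```rust
use std::cell::RefCell;

/// Hypothetical consumer; the real consume_chunk forwards bytes to the engine
/// and may return false to abort the stream.
#[derive(Default)]
pub struct StreamConsumer {
    pub received: RefCell<Vec<u8>>,
}

impl StreamConsumer {
    pub fn consume_chunk(&self, chunk: &[u8]) -> bool {
        self.received.borrow_mut().extend_from_slice(chunk);
        true
    }
}

#[derive(Default)]
pub struct Response {
    pub stream_consumer: Option<StreamConsumer>,
}

impl Response {
    /// Forwards a chunk only when a consumer is attached.
    pub fn stream_chunk(&self, chunk: &[u8]) -> bool {
        match &self.stream_consumer {
            Some(consumer) => consumer.consume_chunk(chunk),
            None => true,
        }
    }
}

/// Hypothetical fetch context holding the response being filled in.
pub struct FetchContext {
    pub response: Response,
}

impl FetchContext {
    /// Called for every network chunk as it arrives.
    pub fn process_response_chunk(&mut self, chunk: Vec<u8>) {
        // Other body handling would also happen here.
        self.response.stream_chunk(&chunk);
    }
}
```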

Testing

Our changes allow WebAssembly modules to be compiled asynchronously. With them, Servo can load a website that uses the promise-based WebAssembly APIs. Here is the link to our demo.

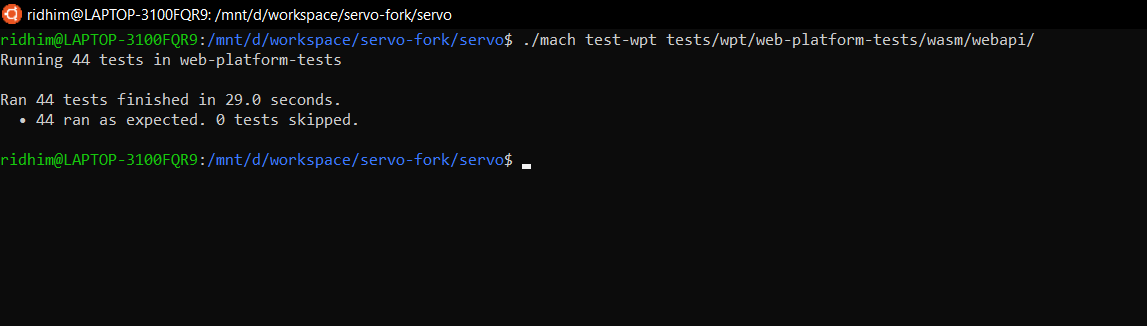

In addition, we tested our implementation against Servo's automated test suite using the `./mach test-wpt tests/wpt/web-platform-tests/wasm/webapi` command. The following image shows the output of this command:

To compile the implementation and check for broken code, follow these steps:

- Install the prerequisites required for Servo, as described in Servo's build instructions

- Run the following commands

cd

git clone https://github.com/Akash-Pateria/servo

cd servo

git checkout -b async-wasm-compilation-initial origin/async-wasm-compilation-initial

./mach fmt

./mach test-tidy

./mach build -d

The Servo build should complete successfully, with no errors reported.

Pull Request

OSS Project (Initial Steps)

Final Project (Subsequent Steps)

References

1. https://doc.rust-lang.org/book

2. https://en.wikipedia.org/wiki/Rust_(programming_language)

3. https://en.wikipedia.org/wiki/Servo_(layout_engine)

4. https://github.com/servo/servo/wiki/Asynchronous-WebAssembly-compilation-project

5. http://rustbyexample.com/

6. https://webassembly.org/

7. https://developer.mozilla.org/en-US/docs/WebAssembly