CSC/ECE 517 Fall 2009/wiki3 4 dt

Terminology

- DRY - Don't repeat yourself

- SCM - Software configuration management

- SDLC - Software development life cycle

- REST - Representational state transfer

- URI - Uniform Resource Identifier

- UI - User Interface

Introduction

The DRY principle states that every piece of knowledge must have a single, unambiguous, authoritative representation within a system (definition taken from The Pragmatic Programmer: From Journeyman to Master). It is a software engineering principle that supports efficient development, build, test, deployment and documentation, and it can be applied at every level of the SDLC. It was formulated by Andy Hunt and Dave Thomas with the intention that a change to the data or code in a single element should not affect other, unrelated elements. Following the principle brings advantages such as easier maintenance and code that is easier to understand. The DRY principle is not confined to coding practice; it is much broader and extends to any duplication of data. This article looks at several real-world instances where data is duplicated and discusses each of them in detail.

Examples

Scenario where DRY principle is effective

Database

In a database it is desirable to keep a single copy of each piece of data. A database, especially one that satisfies the third normal form, stores a single piece of information only once. This uses storage efficiently, because less space is needed to hold the data. The database also becomes easier to maintain and update, because a change needs to be made in only one place. It is less prone to errors as well: if multiple copies of the same data existed they could easily drift out of sync, whereas a single copy leaves no room for a mismatch.
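The point about updating in only one place can be illustrated with a minimal sketch using Python's built-in `sqlite3` module. The `customer` and `purchase` tables and their contents are hypothetical, chosen only for illustration: the customer's city is stored once, and every purchase refers to it by key instead of holding its own copy.

```python
import sqlite3

# Hypothetical schema: the city lives only in `customer`;
# `purchase` rows reference the customer instead of copying the city.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        city TEXT NOT NULL
    );
    CREATE TABLE purchase (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(id),
        item        TEXT NOT NULL
    );
""")
conn.execute("INSERT INTO customer (id, name, city) VALUES (1, 'Alice', 'Raleigh')")
conn.executemany(
    "INSERT INTO purchase (customer_id, item) VALUES (?, ?)",
    [(1, "book"), (1, "lamp")],
)

# A single UPDATE changes the one authoritative copy of the city.
conn.execute("UPDATE customer SET city = 'Durham' WHERE id = 1")

# Every purchase sees the new value through the join.
rows = conn.execute("""
    SELECT p.item, c.city
    FROM purchase AS p
    JOIN customer AS c ON c.id = p.customer_id
    ORDER BY p.id
""").fetchall()
print(rows)
```

Had the city been copied into every purchase row, the same change would have required updating each row, and a missed row would leave the copies in disagreement.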

Scenarios where DRY principle is violated

Specific Project requirements

There are certain situations in which duplication is hard to avoid. Some projects are required to run on several platforms and environments. Even though the basic functionality remains the same, different documents need to be written for the different target platforms, as each has its own libraries and development environment. Hence there will be separate documentation even though the documents share definitions and procedures.

The platforms may also differ only in the version of the operating system or in the processor. In this case duplication of both the code and the documentation cannot be avoided. For example, the code changes needed for a project to support both the Fedora and Red Hat versions of Linux would be minimal, but they are inevitable if both are required. This happens often in the software industry.

There are workarounds that minimize the duplication. Consider documentation in the code, for instance. Programmers are taught to comment their code. The DRY principle says that low-level knowledge belongs in the code and that comments should be reserved for higher-level explanations. Otherwise the same knowledge is duplicated, and any change in the code also requires a change in the comments, which is undesirable.
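The difference between a comment that repeats the code and one that adds higher-level knowledge can be shown with a small sketch. The function names, the one-day session length and the stated reason for it are all hypothetical, used only to make the contrast visible.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # the only place this value is defined


def session_expiry_repetitive(created_at):
    # Add 86400 seconds to created_at and return the result.
    # (This comment restates the code, so it must change whenever the number does.)
    return created_at + 86400


def session_expiry(created_at):
    # Sessions last one day so that users on shared machines are logged out overnight.
    # (This comment explains the reason, which the code itself cannot express.)
    return created_at + SECONDS_PER_DAY


print(session_expiry(0))
```

In the first function the value 86400 appears in both the code and the comment, so the two can disagree after an edit. In the second, the low-level detail lives only in the code and the comment carries the intent.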

Caching

A cache is a collection of duplicate data that is already stored elsewhere. When retrieval from the original location is expensive, the cache is used to improve performance and lower latency. Caching is applied in many places, such as the web, networking and computer architecture. It clearly violates the DRY principle to a large extent, especially in processors, where several levels of cache are maintained. There, the objective is to reduce the number of processor cycles required to execute an instruction so that those cycles can be used effectively elsewhere. On the web, caching has been shown to improve the speed of data transfer, and bandwidth utilization improves by approximately 40%. Most caching schemes rely on the fact that a user is likely to want data that has already been accessed many times before.

Advantages:

- Enhanced performance and effective utilization of resources

- End user satisfaction

Disadvantages:

- More than one cache may operate at various levels, and any change in the data has to be propagated to all of them. If the caches are not updated periodically or whenever the data changes, the user may receive stale or incorrect data.

- The duplicate data may consume a lot of space and may affect performance.

The trade-off should be made at the design level. Taking all of the above factors into consideration, and depending on the requirements and the emphasis placed on each criterion, data duplication can be introduced or avoided.
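The risk of a cache falling out of step with its source can be sketched in a few lines. The `CachedStore` class below is a hypothetical illustration, not any particular caching library: a plain dictionary plays the role of the expensive, authoritative store, and a second dictionary holds the duplicate copies.

```python
class CachedStore:
    """Hypothetical read-through cache in front of a slower backing store."""

    def __init__(self, backing):
        self._backing = backing  # authoritative copy of the data
        self._cache = {}         # duplicate copy kept for speed
        self.backing_reads = 0   # counts the "expensive" reads

    def get(self, key):
        if key not in self._cache:
            self.backing_reads += 1
            self._cache[key] = self._backing[key]
        return self._cache[key]

    def put(self, key, value):
        self._backing[key] = value
        self._cache.pop(key, None)  # invalidate the duplicate on every write


backing = {"price": 10}
store = CachedStore(backing)

first = store.get("price")   # miss: read from the backing store
second = store.get("price")  # hit: served from the cache

backing["price"] = 12        # a write that bypasses the cache
stale = store.get("price")   # the cache still returns the old value

store.put("price", 12)       # a write that goes through the cache
fresh = store.get("price")   # the entry was invalidated, so it is re-read

print(first, second, stale, fresh, store.backing_reads)
```

The cache saves a read on the second access, but the write that bypasses it leaves the duplicate out of date until something invalidates it. This is the cost side of the trade-off described above.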

Configuration management

Software configuration management has steadily grown in importance over the past decade and is now treated as mandatory for any software application. It is considered one of the best ways to handle changes to code and documents. The most common SCM tools include IBM Rational ClearCase, SVN and WinCVS. From the requirements-gathering phase through design and development to the testing phase, many elements are chosen as configuration items. The SCM software keeps multiple copies of these items (both code and documents), maintains the history of each from its creation, and has to be updated periodically with changes. This is clearly a violation of the DRY principle, but there is a purpose behind keeping multiple copies of data and allowing multiple checkouts of the same file.

Advantages:

- More than one user can work on the same code, each with their own copy, and merge back into the development branch.

- The client may sometimes require previous versions of the working code for various reasons. Although most of the functionality in the newer version may be common, it makes sense to maintain a complete version of each previous release.

- A bug related to an older version may come to light only after many releases.

Disadvantages:

- A newer version should contain a sufficient amount of change from the previous one; otherwise the data duplication is futile.

Scenario where DRY principle may be useful

Web Architecture

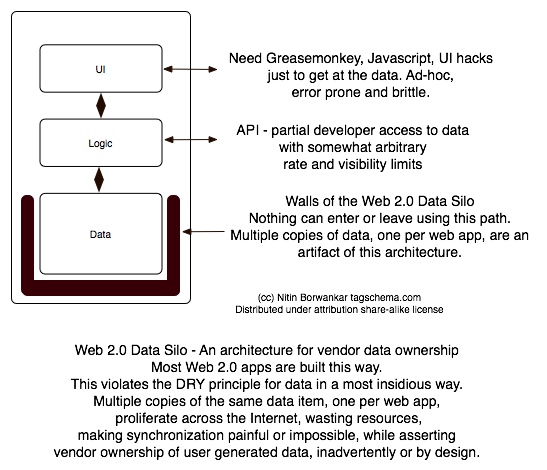

In most Web 2.0 applications users cannot freely access their data, and hence cannot reuse it in other web applications. The current architecture of most Web 2.0 applications is shown in the figure above.

Take "Flickr", for example. It is popular because of the interesting features it provides, such as tagging. Its users are not concerned with the massive data storage facilities behind it. Hence the application would function in the same way even if the data layer shown in the first figure were not owned by "Flickr". "Flickr" also allows users to add their "Flickr" photos to other applications.

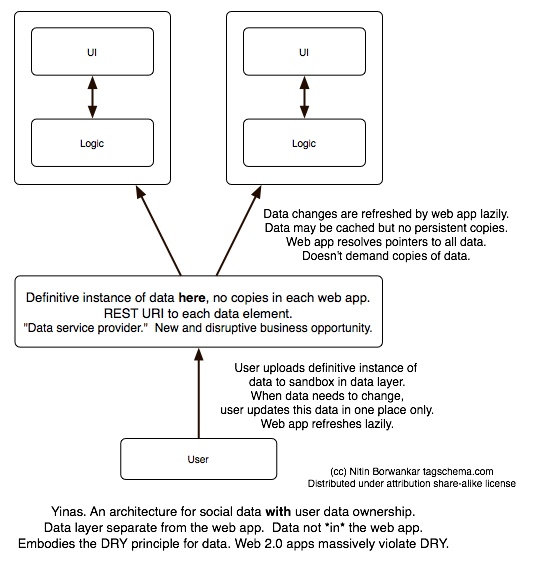

This leads to the possibility of a general-purpose data layer which is not part of any one particular web application. Web applications would only point to the data in this layer, and users would have full access to and control of their data. The figure above shows this architecture for future web applications, parts of which have already been put in place.

Since most applications in the Internet community share data (social networking websites, for example), such an architecture would be very useful, as it would greatly reduce the duplication of data.
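The idea of applications that only point to data in a shared layer can be sketched as follows. This is a toy, in-memory illustration under stated assumptions: the `DataLayer` and `WebApp` classes, the application names and the `https://data.example/...` URI are all hypothetical and do not describe any real service.

```python
class DataLayer:
    """Hypothetical shared data layer: one copy of each item, keyed by URI."""

    def __init__(self):
        self._items = {}

    def put(self, uri, content):
        self._items[uri] = content

    def get(self, uri):
        return self._items[uri]


class WebApp:
    """Hypothetical application that stores only references to user data."""

    def __init__(self, name, data_layer):
        self.name = name
        self._data = data_layer
        self._refs = []  # URIs only, never the content itself

    def link(self, uri):
        self._refs.append(uri)

    def render(self):
        # Content is fetched from the shared layer each time it is shown.
        return [self._data.get(uri) for uri in self._refs]


layer = DataLayer()
photo_uri = "https://data.example/alice/photos/1"  # hypothetical URI
layer.put(photo_uri, "sunset.jpg (v1)")

photo_app = WebApp("photo-sharing", layer)
blog_app = WebApp("blog", layer)
photo_app.link(photo_uri)
blog_app.link(photo_uri)

# The user edits the photo once, in the data layer.
layer.put(photo_uri, "sunset.jpg (v2)")

print(photo_app.render(), blog_app.render())
```

Because neither application holds its own copy, a single change in the data layer is visible in both, which is the reduction in duplication that such an architecture aims for.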

(Images have been taken from http://wolfbyte-net.blogspot.com/2009/01/ccd-red-degree-principle-staying-dry.html)

Conclusion

We have seen several scenarios above in which the DRY principle is violated, and in some of them the duplication is very useful. In all of the instances where the DRY principle is not followed, the designers have sound reasons for duplicating the data. In general it is ideal for a system to hold a single copy of data that serves the purpose, but as the article shows, this is not practical in every scenario.