CSC/ECE 517 Fall 2009/wiki3 22 SN

Clone Detection and Clone Manipulation

Thesis

The DRY principle says that a particular code fragment should not be repeated more than once in a program. But it happens. And when it does, it is good to be able to find the multiple code "clones." A variety of tools have been designed for this, and some of them even allow joint editing of the clones. Survey the techniques for dealing with the problem, and compare the effectiveness with refactoring (e.g., Extract Method).

Code Cloning

Often in computer systems whether small or large, code can become complex. As systems become larger and larger and more and more people work on the system there are often cases when the code becomes duplicated. Sometimes based on system architecture this is unavoidable. However often times replicated code can be avoided and should be as much as possible. It is always best approach to replace, reuse, and refactor code as much as possible.

There are times when code cloning is unavoidable. Factors such as design, security restrictions, rights of code, and class interaction can all be factors on why code cannot be manipulated in a way in which code cloning is not cannot be fixed.

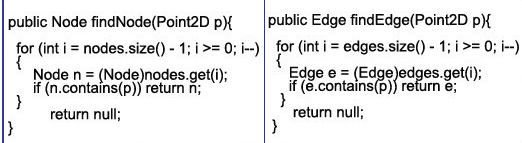

Clone Code Example [Baxter1998]

class Person

{

print 'name:'

print firstname + " " + lastname

}

class Doctor

{

print 'name:'

print firstname + " " + lastname + " M.D."

}

Clone Detection

Clone detection finds code in large software systems that has been replicated and modified by hand. Remarkably, clone detection works because people copy conceptually identifiable blocks of code, and make only a few changes. This means the same syntax is detectably repeated. Each identified clone thus indicates the presence of a useful problem domain concept, and simultaneously provides an example implementation. Differences between the copies identify parameters or points of variation.[Baxter1998]

Clones can enhance a product line development in a number of ways:

- Removal of redundant code

- Lowering maintenance costs

- Identification of domain concepts for use in the present system or the next

- Identification of parametrized reusable implementations

- Sometimes reveal code bugs directly by inspection of parameter bindings with inconsistent actual or conceptual types

There is much hidden knowledge in open source software that can be valuable for improving software quality and productivity. For example, if we want to implement a new feature and know there exists code with similar functionalities in other projects, either reusing the existing code or simply using it for reference will likely shorten development time. Other examples include if a know software fault may occur within a particular context, we can quickly locate all similar defects. To uncover the hidden knowledge, a requisite step is to create an effective code search tool that can scale to the whole open source world, which contains hundreds of billions, even trillions of lines of code. An important aspect of code search is to find copied-and-pasted code or code that looks similar which is code cloning. [Baxter1995]

Taken the approach that there is much to gain from code clone detection there is a need to compare the effectiveness of clone manipulation when a clone is found.

Clone Detection Techniques

All Clone Techniques Cited From Burd2002

String Based

String based techniques use basic string transformation and comparison algorithms which makes them independent of programming languages. Techniques in this category differ in underlying string comparison algorithm. Comparing calculated signatures per line, is one possibility to identify for matching substrings. Line matching, which comes in two variants, is an alternative which is selected as representative for this category because it uses general string manipulations.

Simple Line Matching

Simple Line Matching is the first variant of line matching in which both detection phases are straightforward. Only minor transformations using string manipulation operations, which can operate using no or very limited knowledge about possible language constructs, are applied. Typical transformations are the removal of empty lines and white spaces. During comparison all lines are compared with each other using a string matching algorithm. This results in a large search space which is usually reduced using hashing buckets.

Parameterized Line Matching

Parameterized Line Matching is another variant of line matching which detects both identical as well as similar code fragments. The idea is that since identifier–names and literals are likely to change when cloning a code fragment, they can be considered as changeable parameters. Therefore, similar fragments which differ only in the naming of these parameters, are allowed.

Token Based

Token based techniques use a more sophisticated transformation algorithm by constructing a token stream from the source code. The presence of such tokens makes it possible to use improved comparison algorithms. Next to parameterized matching with suffix trees, which acts as representative, we include in this category because it also transforms the source code in a token-structure which is afterwards matched. The latter tries to remove much more detail by summarizing non interesting code fragments.

Parameterized Matching

Parameterized Matching With Suffix Trees consists of three consecutive steps manipulating a suffix tree as internal representation. In the first step, a lexical analyser passes over the source text transforming identifiers and literals in parameter symbols, while the typographical structure of each line is encoded in a non-parameter symbol. One symbol always refers to the same identifier, literal or structure. The result of this first step is a parameterized string or p-string. Once the p-string is constructed, a criterion to decide whether two sequences in this p-string are a parameterized match or not is necessary. Two strings are a parameterized match if one can be transformed into the other by applying a one-to-one mapping renaming the parameter symbols. An additional encoding prev(S) of the parameter symbols helps us verifying this criterion. In this encoding, each first occurrence of a parameter symbol is replaced by a 0. All later occurrences are replaced by the distance since the previous occurrence of the same symbol. Thus, when two sequences have the same encoding, they are the same except for a systematic renaming of the parameter symbols. After the lexical analysis, a data structure called a parameterized suffix tree (p-suffix tree) is built for the pstring. A p-suffix tree is a generalisation of the suffix tree data structure [16] which contains the prev()-encoding of every suffix of a P-string. Concatenating the labels of the arcs on the path from the root to the leaf yields the prev( )-encoding of one suffix. The use of a suffix tree allows a more efficient detection of maximal, parameterized matches.

Other Techniques

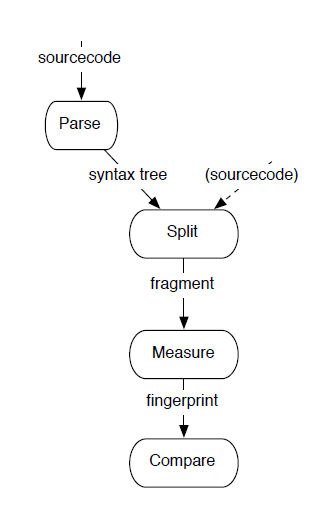

AST-based techniques use parsers to first obtain a syntactical representation of the source code, typically an abstract syntax tree (AST). The clone detection algorithms then search for similar subtrees in this AST.

PDG-based approaches go one step further in obtaining a source code representation of high abstraction. Program dependence graphs (PDGs) contain information of semantically nature, such as control- and data flow of the program.

Metrics-based techniques are related to hashing algorithms. For each fragment of a program the values of a number of metrics is calculated, which are subsequently used to find similar fragments.

Clone Manipulation

So what happens when clone code is? Once the clone detection tools have found code what techniques are available for the tools to solve the issues. The first step is to investigate why the clone code is there.

- Several Questions need to be asked:

- What classes call the code?

- What functionality does the code provide?

- Who “owns” the code?

- How many systems depend on the code?

Once these questions have been answered and it has been decided that this code cloning needs to be resolved then there are different techniques in solving the code clone issue. There are many tools out there to use for code detection and manipulation. Described below are the different tools researched and some information on the techniques used.1

Clone Dr

The CloneDR™ is built with the DMS/Software Reengineering Toolkit. This technology identifies not only exact, but near-miss duplicates in software systems and can be used on a wide variety of languages. Using compiler technology rather than string matching, it will find clones in spite of changed comments, white spaces, line breaks, case changes, different variable names and even different expressions in the clone body. It detects clones in code and/or data declarations. Detected clones can be filtered by size, and automatically or interactively removed. Clones can be removed by replacing them with equivalent preprocessor macros, type declarations, subroutine calls, or inlined subroutine calls (dependent on language and options), without changing the system functionality. The replacement is automatically generated from the detected clones.

SHINOBI

SHINOBI is supported as an Add-In of Microsoft Visual Studio 2005. It automatically detects clones without the programmer's clear intention at the time of opening and editing source code, and constantly displays the detected clones on the view in IDE. SHINOBI displays the detected clones in order of the ranking f the similarity between the source code on the cursor and the detected clones, and the information in the CVS repository, such as message logs and committed dates of the source code that is detected clones.

CCFinderX

CCFinder can be applied to huge source codes. From a source code with approximately a million lines, CCFinder can detect code clones within several minutes to several hours using a PC/AT compatible. By lexical analysis and transformation based on the syntax of the programming languages, CCFinder can extract code clones correctly from source files, even in cases where the names of variables have been changed. CCFinder can run for C/C++, Java, COBOL, Fortran, etc.

- Multi-threading for multi-core CPU

- AST-based preprocessing with an island-parser like parser.

- Search functions

- Users can adapt the tool to another programming languages or dialects.

- Analysis using metrics of code clone

- For interoperability with another tools, the tool can read/write data in tab separated values text format.

- Interactive analysis with multiple views of GUI front-end GemX.

Conclusion

There are many different ways to go about code clone detection. Code clones can be detected on an exact match basis. Unfortunately, using exact match criteria for code clone detection may not be sufficient in all cases. For instance, a programmer may copy a particular piece of code, paste it to another location in the system and proceed to change the pasted code such that it remains syntactically similar to the original code, but not exactly similar. Thus, some form of near-exact clone detection must be employed. Parameterized matching (representative for the token-based approaches) provides a precise picture of a given piece of duplicated code and is robust against rename operations. Therefore it works best in combination with fine-grained refactoring tools that work on the level of statements. This is similar to how the refactoring methods of Extract Method, Move Behaviour, Close to Data, and Transform Conditionals into Polymorphism work. Although there are different techniques use than the refactoring code methods the software tools seem to work nicely and continue to get better. The tools take a variety of techniques and make them work at an efficient level. As the technology advances the tools will become more and more useful to detect code clone issues.

References

- [Baker1992] B.S. Baker, "A Program for Identifying Duplicated Code", Proc. Computing Science and Statistics: 24th Symposium on the Interface, 24, pp. 49-57 Mar. 1992.

- [Baker1995] B.S. Baker, "On finding Duplication and Near-Duplication in Large Software System", Proc. Second IEEE Working Conf. on Reverse Eng., pp. 86-95 Jul. 1995.

- [Balazinska1999] M. Balazinska, E. Merlo, M. Dagenais, B. Lague, and K.A. Kontogiannis, "Measuring Clone Based Reengineering Opportunities", Proc. 6th IEEE Int'l Symposium on Software Metrics (METRICS '99), pp. 292-303, Boca Raton, Florida, Nov. 1999.

- [Baxter1998] I.D. Baxter, A. Yahin, L. Moura, M. Sant'Anna, and L. Bier, "Clone Detection Using Abstract Syntax Trees", Proc. IEEE Int'l Conf. on Software Maintenance (ICSM) '98, pp. 368-377, Bethesda, Maryland, Nov. 1998.

- [Burd2002] Elizabeth Burd, John Bailey, "Evaluating Clone Detection Tools for Use during Preventative Maintenance," Proc. 2nd IEEE International Workshop on Source Code Analysis and Manipulation (SCAM) 2002, pp. 36-43. Montreal, Canada, Oct. 2002.

- [Ducasse1999] S. Ducasse, M. Rieger, and S. Demeyer. "A Language Independent Approach for Detecting Duplicated Code", Proc. IEEE Int'l Conf. on Software Maintenance (ICSM) '99, pp. 109-118. Oxford, England. Aug. 1999.