CSC/ECE 506 Spring 2014/2a

SAS Programming on Distributed-Memory Machines

Abstract

Shared Address Space (SAS) programming on distributed memory machines is a programming abstraction that provides less development effort than that of the traditional method of Message Passing (MP) on distributed memory machines, such as clusters of servers. In distributed memory systems the memory is associated with individual processors and a processor is only able to address its own memory.

This article reviews the underlining concept of Shared Address Space (SAS) programming for Distributed Shared Memory systems. The Partitioned Global address space programming model which is currently popular has been described in detail. This articles also details implementations that employ DSM solutions at the software, hardware and hybrid level. Some of the features of TreadMarks, a DSM implementation, like barrier and lock have been demonstrated using examples. The relevant issues like Performance, Communication requirements and Consistency models have been also covered.

Earlier, distributed systems widely employed message-passing communication. However, this appeared to be much less convenient than the shared-memory programming model because the programmer must be aware of data distribution and explicitly manage data exchange via messages. In addition, such systems introduce severe problems in passing complex data structures, and process migration in multiple address spaces is aggravated. <ref> Distributed shared memory: concepts and systems </ref>

Background

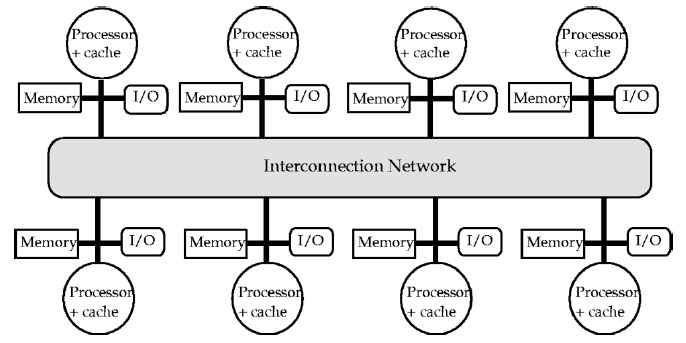

Distributed memory systems are multi-processor systems in which each processor has its own individual memory. Tasks can only operate on a processor's local memory and if non-local data is required, the processor must communicate with one or more remote processors. Distributed memory systems started to flourish in the 1980s. The increasing performance in processors and network connectivity offered the perfect environment for parallel processing over a network of computers. This was a cheap way to put together massive computing power. The main drawback was going from sequential programs made for local memory to parallel programming in shared memory. This was where SAS provided the means to simplify programming by hiding the mechanisms to access distant memory located in other computers of the cluster. Early distributed computer systems relied almost exclusively on message passing in order to communicate with one another, and this technique is still widely used today. In a message passing (MP) model, each processor's local memory can be considered as isolated from that of the rest of the system. Processes or objects can send or receive messages in order to communicate, and this can occur in a synchronous or asynchronous manner. In distributed systems, and particularly with certain types of programs, the message passing model can become overly burdensome to the programmer as tracking data movement and maintaining data integrity can become challenging with many control threads. A shared address or shared-memory system, however, can provide a programming model that simplifies data sharing via uniform mechanisms of data structure reads and writes on common memory. Current distributed systems seek to take advantage both SAS and MP programming model principles in hybrid systems.

Most commonly, a distributed system utilizing SAS will consist of a set of nodes connected by a network. Nodes may be comprised of individual processors or a multiprocessor system (e.g. Symmetric Multiprocessor (SMP)), the latter typically sharing a system bus. Each node itself contains a local memory, which maps partially to the distributed address space. Relevant design elements of early SAS implementations included scalability, coherence, structure and granularity. Most early examples did not structure memory, that is the layout of shared memory was simply a linear array of words. Some, however, structured data as objects or language types. IVY , an early example of a DSM system, implemented shared memory as virtual memory. The granularity, or unit share size, for IVY was in 1-Kbyte pages and the memory was unstructured. A problem when considering optimal page size is the balance between a process likely needing quick access to a large range of the shared address space, which argues for a larger page size, countered by the greater contention for individual pages that the larger page may cause amongst processes and the false sharing it may lead to. Memory coherence is another important design element consideration, and semantics can be instituted that run gradations of strict to weak consistencies. The strictest consistency guarantees that a read returns the most recently written value. Weaker consistencies may use synchronization operations to guarantee sequential consistency.

Partitioned Global Address Space (PGAS) Model

As desktops and high performance computing architectures tend towards distributed collections of multi-core nodes, a new parallel programming paradigm is required to fully exploit the complex distributed and shared memory hierarchies of these evolutionary systems.

Hence PGAS came into picture that exploited the best features of this distributed shared-memory architecture. It brought both performance and data locality features of Message Passing Interface (MPI) and the programmability and data referencing simplicity of a shared-memory model. A PGAS model has the characteristics like one sided communication for improved inter-process performance and local view programming style.

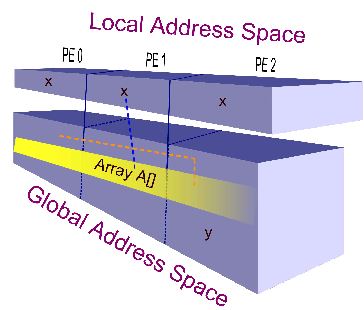

Here local data is kept in private memory and globally shared data kept in shared memory. Here each process shall contribute memory to the shared global memory and any process can directly access any data within the global address space with a single address. In the above figure, every process has been shown with private copies of variable x. Process 2 has declared a shared variable y. A shared array A is distributed across the global address space. Each instance of variable x can directly write and read values to and from the global address space. Also an address can be directly read or written to and from another address within the global address space. <ref> http://cnx.org/content/m20649/latest/ </ref>

Currently three PGA’s programming languages that are being used: Unified Parallel C (UPC) Co-Array Fortran (CAF) Titanium

Cache-Coherent DSM

Early DSM systems implemented a shared address space where the amount of time required to access a piece of data was related to its location. These systems became known as Non-Uniform Memory Access (NUMA), whereas an SMP type system is known as Uniform Memory Access (UMA) architecture. NUMA architectures were difficult to program due to potentially significant differences in data access times. SMP architectures dealt with this problem through caching. Protocols were established that ensured prior to writing a location, all other copies of the location (in other caches) were invalidated. These protocols do not scale to DSM machines and different approaches are necessary. Cache-coherent DSM architectures rely on a directory-based cache coherence protocol where an extra directory structure keeps track of all blocks that have been cached by each processor. A coherence protocol can then establish a consistent view of memory by maintaining state and other information about each cached block. These states usually minimally include Invalid, Shared, and Exclusive. Furthermore, in a cache-coherent DSM machine, the directory is distributed in memory to associate with the cache block it describes in the physical local memory.

Classification of DSMs

From an architectural point of view, DSMs are composed of several nodes connected via a network. Each of the nodes can be an individual machine or a cluster of machines. Each system has local memory modules that are either partially or completely part of the shared memory. There are many characteristics that can be used to classify DSMs. The most common two ways are by the algorithm used by the DSM and the type of implementation.

DSM algorithms

There are three types of DSM algorithm:

- Single Reader/ Single Writer (SRSW)

- central server algorithm - produces long network delays

- migration algorithm - produces thrashing and false sharing

- Multiple Readers/ Single Writer (MRSW) - read replication algorithm. It uses write invalidate. MRSW is the most adopted algorithm.

- Multiple Readers/Multiple Writers (MRMW) - full replication algorithm. It has full concurrency and uses atomic updates.

This historical classification has been extracted from Distributed shared memory: concepts and systems.

| Implementation | Type of Implementation / Cluster configuration | Network | Type of Algorithm | Consistency Model | Granularity Unit | Coherence Policy | SW/HW/Hybrid | Year |

| IVY | User-level library + OS modification | - | MRSW | Sequential | 1 Kbyte | Invalidate | SW | 1984-86 |

| Munin | Runtime system + linker + library + preprocessor + OS modifications | - | Type-specific (SRSW, MRSW, MRMW) | Release | Variable size objects | Type-specific (delayed update, invalidate) | SW | 1989 |

| TreadMarks | User-level | - | MRMW | Lazy release | 4 Kbytes | Update, Invalidate | SW | 1990’s |

| Mirage | OS kernel | - | MRSW | Sequential | 512 bytes | Invalidate | SW | 1987-89 |

| Clouds | OS, out of kernel | - | MRSW | Inconsistent, sequential | 8 Kbytes | Discard segment when unlocked | SW | 1987 |

| Linda | Language | - | MRSW | Sequential | Variable (tuple size) | Implementation- dependent | SW | 1982 |

| Memnet | Single processor, Memnet device | Token ring | MRSW | Sequential | 32 bytes | Invalidate | HW | 1986-88 |

| SCI | Arbitrary | Arbitrary | MRSW | Sequential | 16 bytes | Invalidate | HW | 1992 |

| KSR1 | 64-bit custom PE, I+D caches, 32M local memory | Ring-based | MRSW | Sequential | 128 bytes | Invalidate | HW | 1986 |

| RMS | 1-4 processors, caches, 256M local memory | RM bus | MRMW | Processor | 4 bytes | Update | HW | 1999 |

| Alewife | Sparcle PE, 64K cache, 4M local memory, CMMU | mesh | MRSW | Sequential | 16 Kbytes | Invalidate | Hybrid | 1990's |

| Flash | MIPS T5, I +D caches, Magic controller | mesh | MRSW | Release | 128 Kbytes | Invalidate | Hybrid | 2008 |

| Typhoon | SuperSparc, 2-L caches | NP controller | MRSW | Custom | 32 Kbytes | Invalidate custom | Hybrid | 1994 |

| Shrimp | 16 Pentium PC nodes | Intel Paragon routing network | MRMW | AURC, scope | 4 Kbytes | Update/Invalidate | Hybrid | 1999 |

DSM implementations

Another way of classifying DSMs is the level at which the DSM is implemented. To achieve ease of programming, cost-effectiveness and scalability, DSM systems logically implement the shared memory model on physical distributed memories. <ref> Distributed shared memory: concepts and systems </ref> The normal operations thus include looking-up the data in the local memory, getting the data if it is not present and action on write accesses to maintain the coherence of data. Lookup and action for coherence can be done in Software, hardware or combination of both. Thus DSMs can be classified into software, hardware and hybrid implementations.<ref> Distributed shared memory: concepts and systems </ref>

Software DSMs implementations

Software support for DSMs started being explored in the mid-eighties as they are more flexible than their hardware counter-parts. They are implemented by using user-level software, OS, programming language, or combination of these.

Following are some examples of Software DSMs.

Memo is a user-level DSM. The Mome DSM allows heterogeneous processes running on distributed memory architectures and clusters to share data through the memory mapping of segments into their address space. Each parallel process can freely select the consistency model which must be applied to its own view of the shared data.

IVY (Integrated shared memory) is one of the first proposed software DSM solutions implemented as a set of user-level modules built on top of the modified Aegis operating systems. IVY provides a mechanism for consistency maintainence using an invalidation approach on 1-Kbyte pages. Its most important contribution was in proving the viability of the DSM system with parallel applications in real time systems.

Munin [CARTE91] DSM system which includes two important features: type specific coherence model and release consistency model. However evaluation on Munin programs showed that their performance is less than 10 percent worsecompared to the hand written massage passing counterparts.

Treadmarks is another DSM implementation that counts on significant reduction of data traffic by relaxing consistency semantics according to the release consistency model. This is a user level implementatition that relies on Unix standard libraries to run remote processes and manage memory. Hence it runs smoothly on the kernel level with no modification.

Mirage was introduced in 1989 where coherence maintainence was implemented inside the OS kernel. It main characteristic is that it guarantees page ownership for fixed time called time window. Here performance evaluation has shown that throughput increase is highly sensitive to the proper choice of the parameter delta value.

Clouds is an operating system that has DSM software implementation and implements a set of primitives either on top of Unix or wrt kernel of OS. Cloud has been implemented on SUN-3 workstations connected via Ethernet.

Linda was introduced as an architecture-independent language where the shared memory is put as a ‘tuple space’, tuples mean storage and access units that have data in them. Linda also offers the use of replication as a method for partitioning.

Hardware DSMs implementations

Hardware DSMs though superior in performance, require additional hardware, are complex and expensive. They are mostly used in high-performance, large scale machines. Some examples of hardware DSMs are discussed below.

Memnet The Hardware-Level Distributed Shared Memory implementation called Memnet (Memory Network abstraction) was the earliest. Here the main characteristic is that it avoids costly interprocessor communication and provides abstraction of shared memory to applications from the network.

KSR1 multiprocessor represents one of the early attempts to make DSM systems available on the market. It had a ring based hierarchical organization of clusters each with a local 32 Mbyte cache. Here since there was no main meory the problem of replacement of cache lines arose.

Hybrid DSMs implementations

Hybrid DSM implementations find a balance between the flexibility of software and high performance of the hardware. They are extensively used in mid-range systems. Following are some examples of hybrid DSMs.

Alewife is a specific hybrid system that implements the LimitLESS directory protocol. This protocol represents a hardware-based coherence scheme supported by a software mechanism The main advantage of this is that directory protocol is storage efficient while performing about as well as the full map directory protocol.

Flash multiprocessor the memory coherence protocol is used and also the burden of execution from main processor is shifted to auxiliary protocol processor. Flash also provides message passing with low overhead, owing to hardware support. Since message passing and shared memory machines have been converging recently, Alewife, Flash Typhoon have been instrumental to integrate these two paradigms.

Communication in Modern DSMs

Early SAS programming models in DSM environments suffered from poor performance because protection schemes demanded applications to access the network via system calls, significantly increasing latency. Later software systems and network interfaces arose that were able to ensure safety without incurring the time cost of the system calls. Addressing this and other latency sources on both ends of communication were an important goal for projects such as the Virtual Memory-Mapped Communication (VMMC) model that was developed as part of the Shrimp Project.

Protection is achieved in VMMC because the receiver must grant permission before the sender is allowed to transfer data to a receiver defined area of its address space. In this communication scheme, the receiver process exports areas of its address space that will act as receive buffers and sending processes must import the destinations. There is no explicit receive operation in VMMC. Receivers are able to define which senders can import specific buffers and VMMC ensures only receiver buffer space is overwritten. Imported receive buffers are mapped to a destination proxy space which can be implemented as part of the sender's virtual address space and can be translated by VMMC to a receiver, process and memory address. VMMC supports a deliberate update request and will update data sent previously to an imported receive buffer. This transfer occurs directly without receiver CPU interruption.

Programming Environment

The globally shared memory abstraction provided through virtual memory or some other DSM mechanism allows programmers to focus on algorithms instead of processor communication and data tracking. Many programming environments have been developed for DSM systems including Rice University's TreadMarks in the 1990s. TreadMarks was a user-level library that ran on top of Unix. Programs were written in C, C++ or Fortran and then compiled and linked with the TreadMarks library.

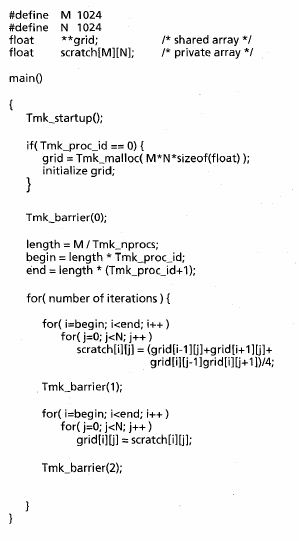

Shown at left is a pseudocode example of using the TreadMarks API to implement the Jacobi method, a type of partial differential equation solver. The code iterates over a 2D array and updates each element to the average of its four nearest neighbors. All processors are assigned an approximately equivalent number of rows and neighboring processes share boundary rows as is necessary for the calculation. This example shows TreadMarks use

of barriers, a technique used for processsynchronization. Barriers prevent race conditions. void Tmk_startup(int argc, char **argv) initializes TreadMarks and starts the remote processes. The void Tmk_barrier(unsigned id) call blocks the calling process until every other process arrives at the barrier. In this example, Tmk_barrier(0) guarantees that process 0 completes initialization before any process proceeds,Tmk_barrier(1) guarantees all previous iteration values are read before any current iteration values are written, and Tmk_barrier(2) guarantees all current iteration values are written before any next iteration computation begins.

To the right is shown a short pseudocode program exemplifying another SAS synchronization technique which uses locks. This program calculates the shortest path in a grouping of nodes that starts at any designated start node, visits each other node once and returns to the origin node. The shortest route identified thus far is stored in the shared Shortest_length and investigated routes are kept in a queue, most promising at the front, and expanded one node at a time. A process compares its resulting shortest partial path with Shortest_length, updating if necessary and returns to the queue to continue its search. Process 0 allocates the shared queue and minimum length. Exclusive access must be established and maintained to ensure correctness and this is achieved through a lock on the queue and Shortest_length. Each process acquires the queue lock to identify a promising partial path and releases it upon finding one. When increasing the Shortest_path a lock is acquired to ensure mutual exclusion to update this shared data as well.

Case Study - 2001 - Shan et al.

In 2001, Shan et al. presented a comparison of the performance and programming effort of MP versus SAS running on clusters of Symmetric Memory Processors (SMPs). They highlighted the "automatic management and coherent replication" of the SAS programming model which facilitates the programming tasks in these types of clusters. This study uses MPI/Pro protocol for the MP programming model and GeNIMA SVM protocol (a page-based shared virtual memory protocol) for SAS on a 32 processors system (using a cluster of 8 machines with 4-way SMPs each). The subset of applications used involves regularly structured applications as FFT, Ocean, and LU contrasting with irregular ones as for example RADIX sort, among others. The complexity of development is represented by the number of code lines per application as shown in the table below this lines. It is observed that SAS complexity is significantly lower than that of MP and this difference increases as applications are more irregular and dynamic in nature (almost doubles for Radix).

| Appl. | FFT | OCEAN | LU | RADIX | SAMPLE | N-BODY |

|---|---|---|---|---|---|---|

| MPI | 222 | 4320 | 470 | 384 | 479 | 1371 |

| SAS | 210 | 2878 | 309 | 201 | 450 | 950 |

The results performance-wise indicated that SAS was only half efficiently dealing with parallelism for most of the applications in this study. The only application that showed similar performance for both methods (MP and SAS) was the LU application. The authors concluded that the overhead of the SVM protocol for maintaining page coherence and synchronization were the handicap of the easier SAS programming model.

Consistency Models

The consistency model plays a fundamental role in DSM systems. Due to the nature of the distributed systems, memory accesses are constrained in the different consistency models. "A memory consistency model defines the legal ordering of memory references issued by some processor, as observed by other processors." The stricter the consistency model, the higher the access times, but programming is more simplified. Some of the consistency models types are:

- Sequential consistency - all processors see the same ordering of memory references, and these are issued in sequences by the individual processors.

- Processor consistency - the order of writes is observed by every individual processor in the system, but the order of reads does not need to be in sequence.

- Weak consistency - consistency is required only on synchronization accesses.

- Release consistency - divides the synchronization accesses into acquire and release. Normal read and writes for a particular node can be done only after all acquires for that node are finished. Similarly, releases can only be done after all writes and reads are finished.

- Lazy Release consistency - extends the Release consistency, by propagating modifications to the shared data only on the acquire, and of those, only the ones related to critical sections.

- Entry consistency - synchronization is performed at variable level. This increases programming labor but helps with lowering latency and traffic exchanges as only the specific variable needs to be synchronized.

Case Study - 2004 - Iosevich and Schuster

In 2004, Iosevich and Schuster performed a study on two memory consistency models in a DSM, the sequential consistency (SC) model and a relaxed consistency model called home-based lazy release consistency (HLRC) protocol. The SC provides a less complex programming model, whereas the HLRC improves on running performance as it allows parallel memory access. Memory consistency models provide a specific set of rules for interfacing with memory. The authors used a multiview (MV) technique to ensure an efficient implementation of SC with fine-grain access to memory. The main advantage of this technique is that by mapping one physical region to several virtual regions, the system avoids fetching the whole content of the physical memory when there is a fault accessing a specific variable located in one of the virtual regions. One step further is the mechanism proposed by Niv and Shuster to dynamically change the granularity during runtime. For this SC (with MV), only all page replicas need to be tracked, whereas for HLRC the tracking needed is much more complex. The advantage of HLRC is that write faults on read only pages are local, resulting in a lower cost for these operations. This table summarizes the characteristics of the benchmark applications used for the measurements. In the Synch column, B represents barriers and L locks.

| Application | Input data set | Shared memory | Sharing granularity | Synch | Allocation pattern |

|---|---|---|---|---|---|

| Water-nsq | 8000 molecules | 5.35MB | a molecule (672B) | B, L | fine |

| Water-sp | 8000 molecules | 10.15MB | a molecule (680B) | B, L | fine |

| LU | 3072 × 3072 | 72.10MB | block (coarse) | B | coarse |

| FFT | 2^20 numbers | 48.25MB | a row segment | B | coarse |

| TSP | A graph of 32 cities | 27.86MB | a tour (276B) | L | fine |

| SOR | 2066 × 10240 | 80.73MB | a row (coarse) | B | coarse |

| Barnes-sp | 32768 bodies | 41.21MB | body fields (4-32B) | B, L | fine |

| Radix | 10240000 keys | 82.73MB | an integer (4B) | B, L | coarse |

| Volrend | a file -head.den- | 29.34MB | a 4 × 4 box (4B) | B, L | fine |

| Ocean | a 514 × 514 grid | 94.75MB | grid point (8B) | B, L | coarse |

| NBody | 32768 bodies | 2.00MB | a body (64B) | B | fine |

| NBodyW | 32768 bodies | 2.00MB | a body (64B) | B | fine |

The authors found that the average speedup of the HLRC protocol is 5.97, and the average speedup of the SC (with MV) protocol is 4.5 if non-optimized allocation is used, and 6.2 if the granularity is changed dynamically.

Performance in SPLASH

There are numerous studies of the performance of shared memory applications in distributed systems. The vast majority of them use a collection of programs named SPLASH and SPLASH-2.. SPLASH is the most commonly used suite for scientific studies of parallel machines with shared memory. Hence Performance in SPLASH has been described.

SPLASH and SPLASH-2

The Stanford ParalleL Applications for SHared memory (SPLASH) is a collection of parallel programs engineered for the evaluation of shared address space machines. These programs have been used by research studies to provide measurements and analysis of different aspects of the emerging DSM architectures at the time. A subsequent suite of programs (SPLASH-2) evolved from the necessity of improving on the SPLASH programs limitations. SPLASH-2 covers a more ample domain of scientific programs, makes use of improved algorithms, and pays more attention to the architecture of the underlying systems. Selected applications in the SPLASH-2 collections include:

- FFT: a Fast Fourier Transform implementation, in which the data is organized in source and destination matrices so that processors have stored in their local memory a contiguous set of rows. In this application all processors involved communicate among them, sending data to each other, to evaluate a matrix transposition.

- Ocean: calculations of large scale ocean movements simulating eddy currents. For the purpose of calculations, it shows nearest-neighbors accessing patterns in multi-grid formation as opposed to using a single grid.

- LU: matrix decomposition in the product of an upper triangular and lower triangular matrices. LU exhibits a "one-to-many non-personalized communication".

- Barnes: simulates the interaction of a group of particles over time steps.

- Radix sort: integer sorting algorithm. This algorithm implementation displays an example of communication among all the processors involved, and the nature of this communication presents irregular patterns.

Communication Requirements

DSM helps provide an easy programming interface i.e. shared memory as well scalability of distributed memory machines. But the communication cost inherited in the underlying network is very expensive, thus limits the scalability of distributed shared memory systems and also create other problems. Page based DSM’s use the virtual memory mechanism in systems to access data in shared memories. Now when a single system is writing data and uses an invalid protocol to get consistency in data, it can cause ‘false sharing’. Two processes in a system can write different data that reside on the same virtual memory page, which can cause it to ping-pong between the two nodes. This shall just cause congesting and excess traffic. Due to this, the granularity of memory unit is restricted in a certain range to prevent false sharing or excessive message passing. Hence there is a need to model the communication with relaxed memory consistency models. This way the shared memory pages shall be consistent in reading data from well-defined points. This traffic can be reduced by sending only the changed data from a modified page.

Case Study - Roy and Chaudhary (2008)

In 2008, Roy and Chaudhary compared the communication requirements of three different page-based DSM systems (CVM, Quarks, and Strings) that use virtual memory mechanisms to trap accesses to shared areas. Their study was also based on the SPLASH-2 suite of programs. In their experiments, Quarks was running tasks on separate processes (it does not support more than one application thread per process) while CVM and Strings were run with multiple application threads.

| Program | CVM | Quarks | Strings |

|---|---|---|---|

| FFT | 1290 | 2419 | 1894 |

| LU-c | 135 | - | 485 |

| LU-n | 385 | 2873 | 407 |

| OCEAN-c | 1955 | 15475 | 6676 |

| WATER-n2 | 2253 | 38438 | 10032 |

| WATER-sp | 905 | 7568 | 1998 |

| MATMULT | 290 | 1307 | 645 |

| SOR | 247 | 7236 | 934 |

The results indicate that Quarks generates a large quantity of messages in comparison with the other two systems. This can be observed in the table above. LU-c (contiguous version of LU) does not have a result for Quarks, as it was not possible to obtain results for the number of tasks (16) used in the experiment. This was due to the excessive number of collisions. In general, due to the lock related traffic, the performance of Quarks is quite low when compared to the other two systems for many of the application programs tested. CVM improves on the lock management by allowing out of order access through a centralized lock manager. This makes the locking times for CVM much smaller than for others. Nevertheless, the best performer is Strings. It wins in overall performance over the two other system compared, but there is room for improvement here too, as it was observed that the locking times in Strings are an elevated percentage of the overall computation. The overall conclusion is that using multiple threads per node and optimized locking mechanisms (CVM-type) provides the best performance.

Current Developments

A more recent version of a distributed shared memory system is vNUMA. Chapman and Heiser, describe vNUMA (where v is for virtual and NUMA is for Non Uniform Memory Access) as "a virtual machine that presents a cluster as a virtual shared-memory multiprocessor." The virtualization in vNUMA is a layer between the real CPUs that form the distributed shared memory system and the OS that runs on top it, usually Linux. The DSM in vNUMA is part of the hypervisor, which is the part of a virtual system that maps guest virtual addresses into real physical ones. The guest virtual addresses get then mapped into virtual memory addresses through the guest OS. The difference with other virtual machines is that vNUMA runs one OS on top of several physical machines, whereas virtual machines often run several guest OS on top of one host OS that runs on one machine. And it uses DSM software techniques to present all the virtualized memory as a whole. The DSM in vNUMA is a single-writer/multiple-reader write-invalidate protocol with sequential coherence. It is based on the IVY DSM, but it introduces several improvements to increase performance. The owner of a page can determine if the page needs to be sent by looking at the copyset (contains information of the set of nodes that maintain a copy of the page), avoiding several page faults and the manager becomes the owner of the copyset as soon as it is part of it. There are a couple other improvements: incremental deterministic merging and write-update-plus (WU+). Incremental deterministic merging uses sequence numbers to ensure that a location gets updated with the latest value and not intermediate, out of order writes. Write-update-plus (WU+) enforces single-writer for pages where atomic operations are done. vNUMA dynamically changes from multiple-writer to single-writer when atomic operations are detected. In vNUMA what it is and why it matters, VMware presents vNUMA as part of vSphere, a virtualization platform oriented to build cloud computing frameworks.

See also

- Performance and Productivity Breakthroughs with Very Large Coherent Shared Memory: The SGI® UV Architecture SGI white paper.

- Versatile SMP (vSMP) Architecture

- Adrian Moga, Michel Dubois, "A comparative evaluation of hybrid distributed shared-memory systems," Journal of Systems Architecture, Volume 55, Issue 1, January 2009, Pages 43-52

- Jinbing Peng; Xiang Long; Limin Xiao; , "DVMM: A Distributed VMM for Supporting Single System Image on Clusters," Young Computer Scientists, 2008. ICYCS 2008. The 9th International Conference for , vol., no., pp.183-188, 18-21 Nov. 2008

References

- Bienia, Christian, Sanjeev Kumar, and Kai Li."PARSEC vs. SPLASH-2: A quantitative comparison of two multithreaded benchmark suites on chip-multiprocessors." Workload Characterization, 2008. IISWC 2008. IEEE International Symposium on. IEEE, 2008.

- Matthew Chapman and Gernot Heiser. 2009. vNUMA: a virtual shared-memory multiprocessor. In Proceedings of the 2009 conference on USENIX Annual technical conference (USENIX'09). USENIX Association, Berkeley, CA, USA, 2-2.

- Shan, H.; Singh, J.P.; Oliker, L.; Biswas, R.; , "Message passing vs. shared address space on a cluster of SMPs," Parallel and Distributed Processing Symposium., Proceedings 15th International , vol., no., pp.8 pp., Apr 2001

- An Introduction to the Partitioned Global Address Space (PGAS) Programming Model,Module by: Tim Stitt Ph.D.

- Mutanga, Alfred. "CACHE PERFORMANCE EVALUATION FOR MULTIPROCESSOR SYSTEM." Nov 2013

- Jegou, Y. , Implementation of page management in Mome, a user-level DSM Cluster Computing and the Grid, 2003. Proceedings. CCGrid 2003. 3rd IEEE/ACM International Symposium on, p.479-486, 21 May 2003, IRISA/INRIA, France

- Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996

- Chandola, V. , "Design Issues in Implementation of Distributed Shared Memory in User Space,"

- Nitzberg, B.; Lo, V. , "Distributed Shared Memory: A Survey of Issues and Algorithms"

- Steven Cameron Woo , Moriyoshi Ohara , Evan Torrie , Jaswinder Pal Singh , Anoop Gupta, "The SPLASH-2 programs: characterization and methodological considerations," Proceedings of the 22nd annual international symposium on Computer architecture, p.24-36, June 22-24, 1995, S. Margherita Ligure, Italy

- Hennessy, J.; Heinrich, M.; Gupta, A.; , "Cache-Coherent Distributed Shared Memory: Perspectives on Its Development and Future Chanllenges," Proceedings of the IEEE, Volume: 87 Issue:3, pp.418 - 429, Mar 1999, Comput. Syst. Lab., Stanford Univ., CA

- J. Protic, M. Tomasevic, and V. Milutinovic, An Overview of Distributed Shared Memory

- Yoon, M.; Malek, M.; , "Configurable Shared Virtual Memory for Parallel Computing" University of Texas Technical Report tr94-21, July 15 1994, Department of Electrical and Computer Engineering, The University of Texas at Austin

- Dubnicki, C.; Iftode, L.; Felten, E.W.; Kai Li; , "Software Support for Virtual Memory-Mapped Communication" Parallel Processing Symposium, 1996., Proceedings of IPPS '96, The 10th International, pp.372 - 381, 15-19 Apr 1996, Dept. of Comput. Sci., Princeton Univ., NJ

- Dubnicki, C.; Bilas, A.; Li, K.; Philbin, J.; , "Design and Implementation of Virtual Memory-Mapped Communication on Myrinet" Parallel Processing Symposium, 1997. Proceedings., 11th International, pp.388 - 396, 1-5 Apr 1997, Princeton Univ., NJ

- Kranz, D.; Johnson, K.; Agarwal, A.; Kubiatowicz, J.; Lim, B.; , "Integrating Message-Passing and Shared-Memory: Early Experience" PPOPP '93 Proceedings of the fourth ACM SIGPLAN symposium on Principles and practice of parallel programming, pp.54 - 63

- Amza, C.; Cox, A.L.; Dwarkadas, S.; Keleher, P.; Honghui Lu; Rajamony, R.; Weimin Yu; Zwaenepoel, W.; , "TreadMarks: shared memory computing on networks of workstations" Computer, Volume: 29 Issue:2, pp. 18 - 28, Feb 1996, Dept. of Comput. Sci., Rice Univ., Houston, TX

- Protic, Jelica, Milo Tomagevic, and Veljk Milutinovic. "A Survey of Distributed Shared Memory Systems." 28th Annual Hawaii International Conference on System Sciences. IEEE. Hawaii, 1995. Reading.

Others

<references> </references>