CSC/ECE 506 Spring 2013/2b ks

Topic_Writeup

Past_Wiki_1

Past_Wiki_2

Introduction

Processor clock speed has plateaued in the last several years. This has resulted in demand for other alternatives to achieve performance gains. At the heart of sciences discoveries has been parallelism. Today’s computers are driven meant to take advantage of processing in parallel to produce quicker results. Even personal computers bought in stores contains CPU’s with several cores that execute in parallel.

While the idea of multi-core is a fairly new concept for the CPU, it has been a focus for GPU design for much longer. GPU’s have had the luxury of being purposed for very specialized tasks related to graphics computation and rendering. These types of computations require a great deal of parallelism to be efficient. In Understanding the Parallelism of GPU’s, the author[3] uses an example of blending two images together. Part of what the GPU will need to be able to do is perform a blending action on pixels in both images. This process will for the most part be the same operation, just on different data points. The intense level of floating point calculation along with a focused purpose have led GPU’s to rely heavily on data parallelism. This focus has lead to very different engineering choices as GPU’s have matured. Now that CPU clock speeds are no longer improving, the scientific and technology communities are looking to GPU’s for a way to see continued efficiency gains.

Based on the engineering choices of GPU hardware, developers have been wanting to take advantage of the raw processing power to leverage more responsive applications. The requirements to do this were very daunting to anyone but the most highly trained and experience programmers. A strong level knowledge for the low level details was required to see any true benefit and if the developer was not highly trained in the area of processing they were trying to see performance gains in that could instead have the exact opposite affect. Due to these challenges software alternative slowly started to appear to try and make the task of leveraging this power through software less daunting. As part of this article we will take a look at General Purpose Graphic Processing Unit (GPGPU) programming. We will take a look at language abstraction such as CUDA and OpenCL to see how accessible this approach is becoming. We will also examine the possible performance gains of this approach and how they compare to solutions that are evolving that still utilize CPU’s. Lastly, we will give our thoughts on the future of this area of study based on our assessments and our expectation for its place in the industry.

History

In the the nineties three-dimensional rendering was in high demand as the next step in graphical performance. Based on this demand, programming interfaces emerged called OpenGL and DirectX. OpenGL is a language independent, platform independent collections of functions which can be called by a client application to render 2D and 3D graphics. Because OpenGL[4] is language independent many 3rd parties right extensions to the language or interface with it using their own API’s (javascript would be an example of this). DirectX[5] is Microsofts platform specific version of this same concept which made it’s debut as part of Window 95 to help incentivize the Windows platform for game developers who were more likely to write games for DOS due to its allowance of direct access to the graphics hardware. Both technologies are built with rendering power and efficiency as their central focus.

At the turn of the 21st century the two major companies in the GPU industry emerged as Nvidia and ATI both made great strides in allowing developers access to the graphics rendering hardware. Nvidia began allowing developers to insert small chunks of code into the graphics pipeline giving them programmable control over shaders. ATI were next contribute this evolution when they were the first to support floating point calculations in the hardware. This opened the door for more realistic graphics rendering and modeling. Even with these notable steps, developers were still very restricted about how many instructions they could write and what functions were supported. It was enough to entice developers but they were still quite limited.

Even with the progress being made to allow developers access to the graphics hardware, developers were still required to have extensive knowledge of how to go about manipulating that graphics hardware and the algorithmic calculations that needed to take place to accomplish their goal. In short, developers were capable of doing it but it was not very accessible. In 2006 Nvidia introduced CUDA[6] (Compute Unified Device Architecture) which truly revolutionized development for GPU’s and truly made GPGPU a reality for the technology industry. CUDA introduced a true parallel computing platform that gave developers access to memory and computational processes[7]. It also gave this access through extensions to the C programming language giving the development community an interface they were familiar with. The drawback to Nvidia’s CUDA is that it was not agnostic. It was specifically built for Nvidia GPUs. This challenge was addressed in 2008 when OpenCL was released. Originally a project from Apple, it was submitted to Khronos Group to complete. It is a completely open standard that has been adopted by many of the high profile technology leaders including Intel and AMD. OpenCL is a collection of API’s that provide data parallelism and is hardware agnostic.

Hardware Overview

The architecture which supports graphics processing builds its concept on the same parallelized framework governing CPUs. It strips outs much of the complexity used by CPUs for such techniques as out-of-order execution and branch prediction in favor of adding more cores to the die and even broadening its applications of SIMD and simultaneous multithreading. The result is a highly parallelized architecture which is optimized for handling repetitive tasks on very large blocks of data.

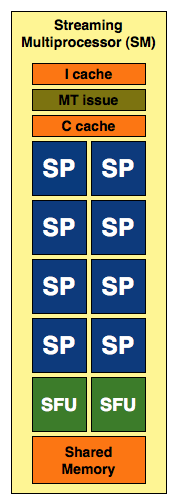

Streaming Multiprocessor

The core unit of a GPU is the streaming multiprocessor (SM). A GPU is made up of a certain amount of SM’s which operate in parallel. At each clock cycle a single SM executes the same instruction across each one of its set of 32-bit SIMD processors, commonly referred to as cores or stream processors. Along with a set of cores, each SM acts as a miniature CPU complete with an L1 cache, texture cache, instruction cache, shared memory space, and an ALU known as a special function unit (SFU) used for complex trigonometric functions[8]. These resources are all shared between the various cores which make up an SM. In addition to these resources, each core also contains a set of 32-bit registers and as well as a set of single-precision floating point functional units for simple mathematical operations. The number of SM’s per graphics card varies between models, but the capabilities are certainly growing with time. In 2010 Nvidia announced its “Fermi” architecture which came alongside the GTX 480. It contained 4 graphics processing clusters (GPC’s) each of which contained 4 SM’s. Each SM in the design contained 32 processors organized into two separate groups[9]. While this was only a few years ago, technological improvements can certainly be seen in Nvidia’s recently released “Kepler” architecture, which was used on its 600-series cards. With Kepler, a new architecture for streaming multiprocessors was introduced which Nvidia named "SMX" or "next-generation streaming processor". Each SMX makes much more efficient use of power by running at lower clock speeds than Fermi's SM's. In addition, Nvidia was also able to increase the total performance of its cards due to the new SMX design. The GTX 680 released in early 2012 contains 8 SMX units each of which contains 192 cores for a total of 1,536 cores on the card[10]. This increase from 512 cores two years earlier demonstrates the rapid rate at which GPU's are increasing their performance over time .

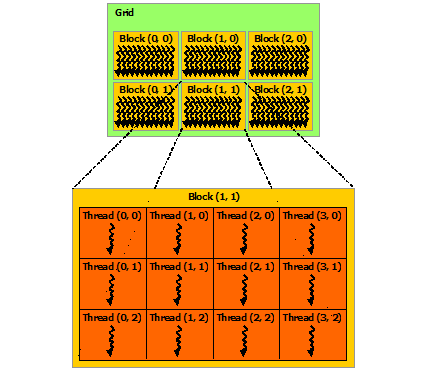

Thread Hierarchy

While each SM can only execute a single instruction per clock cycle, having multiple SM’s allows for execution of multiple instructions in parallel. GPU instructions are executed as a grid which are made up of a set of thread blocks. Thread blocks are distributed between the streaming multiprocessors, and it is possible that some SM’s will contain multiple thread blocks. Only a single SM may execute a single thread block at a time, and each block is processed thoroughly before the next one can be executed on the SM. Drilling further down, a thread block is divided into a set of 32 threads known as warps. Warps act as scheduling units for the set of instructions a single SM is tasked with. Depending on the capability of the GPU, an SM may execute a single warp after a certain amount of clock cycles. During warp execution, the 32 threads operate concurrently meaning a new thread can begin execution without a context switch. The caches and special function units provided by the SM are all shared between the threads, and each of them can also access specific registers owned by the various cores of the SM[11].

Warp Scheduling

The idea for scheduling warps is such that no warp shall remain idle while being waited on. In this way the SM is always keeping up productivity. The process for warp scheduling begins with fetching a single warp instruction every cycle from the instruction cache into an instruction buffer maintained by the SM. Then, during the same cycle a scheduler selects one warp from the buffer for execution if no warp is currently being executed. The scheduler checks the operands of the selected warp and determines if they are all ready to be executed. If even one of the warp's operands are waiting for another instruction to be executed first, the scheduler will skip the warp and choose another one. The choice of which warps to set up for execution can be based on a variety of criteria such as the time the warp has waited in the buffer and resources required to run the instruction contained in the warp. The scheduler also keeps track of which instructions are currently being executed, fetched into the buffer, or chosen for execution in the next clock cycle.

GPU vs CPU Multithreading

The method in which GPU’s enact simultaneous multithreading has specific advantages when compared to CPU multithreading. It can be assumed that similar instructions are grouped within the same thread block of an SM while the task distribution of a CPU may deal with threads which are completely independent of each other and may belong to entirely different applications. The similarities of instructions which are executed by a single thread block allows for more efficiency in the cache and memory units provided by the SM.

GPGPU Data Parallelism

Overview

With the introduction of floating point calculations and APIs from the likes of Nvidia and Apple, it became more appealing to developers to try and take advantage of the parallelism GPUs provided. What all of these advances meant to the development community is that data parallelism had proven very powerful in the graphics rendering arena and could be leveraged for other types of application processing. From a high level, the algorithmic concept that these API’s take advantage of is data is arranged in data structures that can be executed in parallel. These data structures are aligned to an index space. How this index space is determined is based on the data and how much information needs to be shared. Ideally the indexes will be aligned so that the data in a particular index interacts with data in other indexes as minimally as possible. At this point a sequence of operations is applied across these indexes. During occasions where data elements do need to be shared, performance can remain relatively intact based on shift operations and masking techniques[12], as long as they are kept beneath a certain rate of occurrence. This data processing model lyes at the core of GPGPU and in conjunction with the SIMD execution model that contains its own specialized data structures and alignment operations combine to create a type of framework that APIs such as CUDA and OpenCL leverage to get peak performance out of the software applications that interface with them.

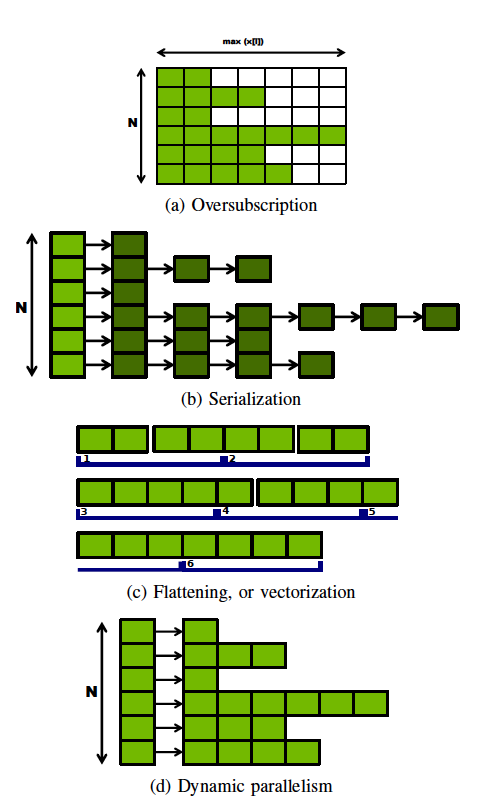

To give a bit more background on data parallelism we will discuss in a bit more detail how the process is achieved and how it has evolved. The first most basic scenario we will talk about is Oversubscription. If we think of each of our data structures that we have created along an index as a worker, and this collection of workers are all trying to complete a job, then In this type of parallelism, resources are allocated based on which worker has the most work to do. So all of the workers have the same amount of resources even though it is highly likely that very few of the workers actually need that amount of resources[13]. It is a rather wasteful model as programmers will typically allocate many more sources than they will actually use, but it helps illustrate the concept of how parallelism works. Moving from that example to Serialization helps a bit. In the model this workers run in parallel but individually execute sequentially. Now you are only allocated as many resources as each worker needs. We solved one challenge with Oversubscription but we still have another, and that is that each worker can have a very unbalanced workload compared to the other workers. We have no way of knowing if all of the workers are being utilized fully to get maximum efficiency. This is where the concept of flattening comes in. Here, before the workload is distributed among the workers it is organized in evenly weighted vectors. This is why it is also referred to as vectorized parallelism[14]. Here the workers do the same job as the previous models, but complication is introduced in accurately flattening the data and also extensive logging operations to keep track of how the work has been separated. Finally we have Dynamic Parallelism where we introduce a concept called work stealing. In this model, when a worker becomes overburdened they can divide out that work and spin up a new job. At that point another worker, perhaps one that is idle can take over that job thus relieving the initial worker from that burden. This dynamic model brings a lot of flexibility and elasticity to parallelism. The tradeoff to this is greater runtime analysis and logic supplied by the developer[15].

Dynamic Parallelism has been the focus of the most recent technologies with hardware vendors focusing on allowing software API’s to to take advantage of this model efficiently. The reason for this is that flattening out the data before assigning it to workers is a computationally expensive task. By allowing irregular workloads that can dynamically adjust, developers are relieved of that overhead. This approach is also called Nested Parallelism[16] and enables the software written to more lightweight and to more closely represent real world workflow.

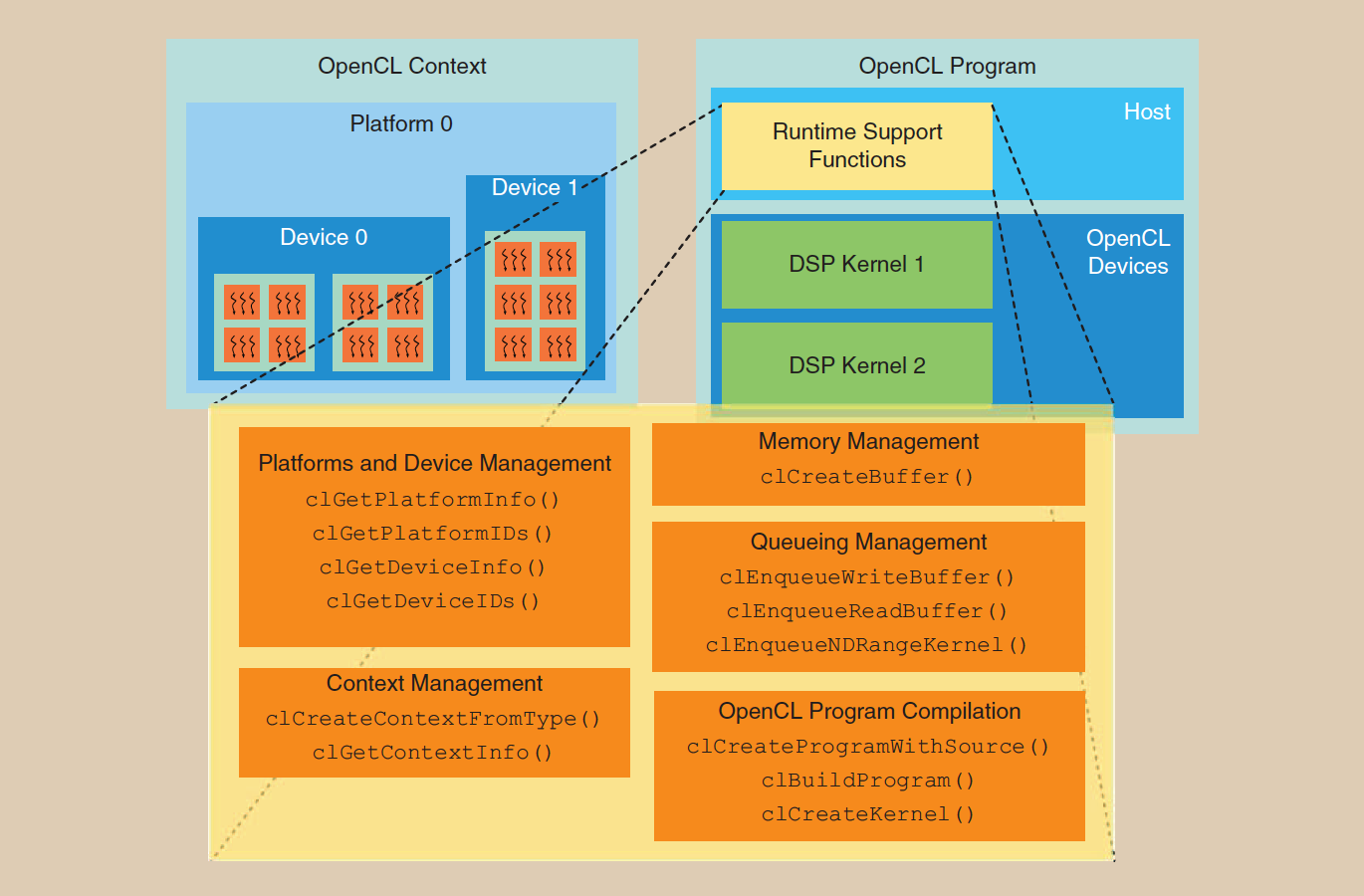

OpenCL

OpenCL[17] is an open standard collection of API’s that is supported by several graphics hardware vendors and more recently large technology companies who are interested in GPGPU to improve efficiency and gain an edge in the high performance market. It is maintained by the Khronos group and has become an industry standard. OpenCL provides programming API’s so that C and C++ developers can write logic that is executed on a variety of microprocessor designs including GPUs. It provides a core functionality that is supported across hardware vendors and also includes specialized functions for specific hardware environments to allow the developer the opportunity to tune performance. The hardware and especially the GPU market is rapidly evolving and makes the challenge of supporting all different hardware configurations nearly impossible. The biggest strength of OpenCL is that it provides a standard interface where a developer can create efficient instructions that are correct and portable across vary hardware specifications. This industry standard makes it ideal for us to look at in our discussion of data parallelism.

Because OpenCL strives to be hardware agnostic it encourages run-time compilation and at that moment the runtime will take advantage of that particular device’s hardware and software features. Although correctness and portability are supported, peak performance is not guaranteed. While OpenCL will attempt to take advantage of all the device hardware configuration has to offer at runtime, intervention by the developer to write specific functions that work most efficiently on that hardware may still be necessary for optimal performance.

OpenCL’s runtime is defined as a set of functions that fall into 4 categories[18]:

Query Platform info

These functions gather information about the platform that the OpenCL instructions are running on as well as the OpenCL platform these instructions were written under.

Examples:

clGetPlatformIDs

clGetPlaformInfo

Contexts

This group is vast and contains functions that manage state and operation lifecycle.

Examples:

clCreateContextFromType

clGetContextInfo

Query Devices

This group is utilized to query individual devices that are available on a platform.

Examples:

clGetDeviceIDs

clGetDeviceInfo

Runtime API

This group is the most extensive list of functions for the OpenCL runtime. Here you have the ability to control command queues, control memory objects, creates program objects, and launch kernels.

Examples:

clCreateCommandQueue

clCreateBuffer

clEnqueueWriteBuffer

clCreateProgramWithSource

clBuildProgram

clCreateKernal

clEnqueueNDRangeKernel

An OpenCL program is similar to some other low level languages. It has a main routine that is executed on a host. When this main routine reaches a section of the code written for parallel execution the parallel kernel launches on the GPU where it is executed using a multithreaded execution model. Typically a programmer will define sections of the code that are meant for parallel processing. These definitions are picked up by the compiler which loads these as kernels to be executed on several threads. Once the kernel is executing it will adapt dynamically to evenly distribute the work. This requires the programs to be able to identify how many cores are on the system and how the algorithm should be partitioned. It should also be able to query the environment or context of the multicore system. Since OpenCL has all of these functions available to it through its APIs the OpenCL task scheduler splits the workload to do balanced computation. This functionality is also open to the developer if they would like to decide this logic themselves. Data to be executed in parallel is passed into memory using OpenCL functions such as clEnqueueWriteBuffer and then passed back to the main routine with a similar function clEnqueueReadBuffer. An example routine that illustrates data parallelism is below[19]:

/*This kernel executes

simultaneously several work

items to

perform the parallel sum of

arrays a and b of dimension

N*/

__kernel void k_parAdd(__

global int *a, __global int

*b, __global int *c,

int tid){

if(tid<N)

c[tid] = a[tid]+b[tid];

}

Performance

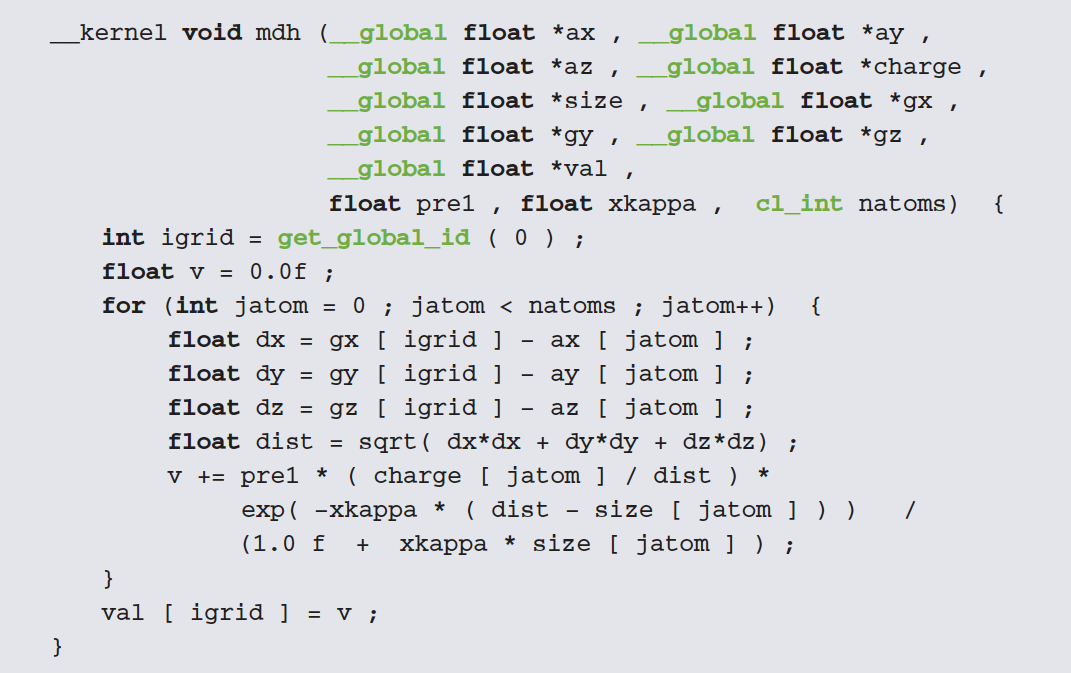

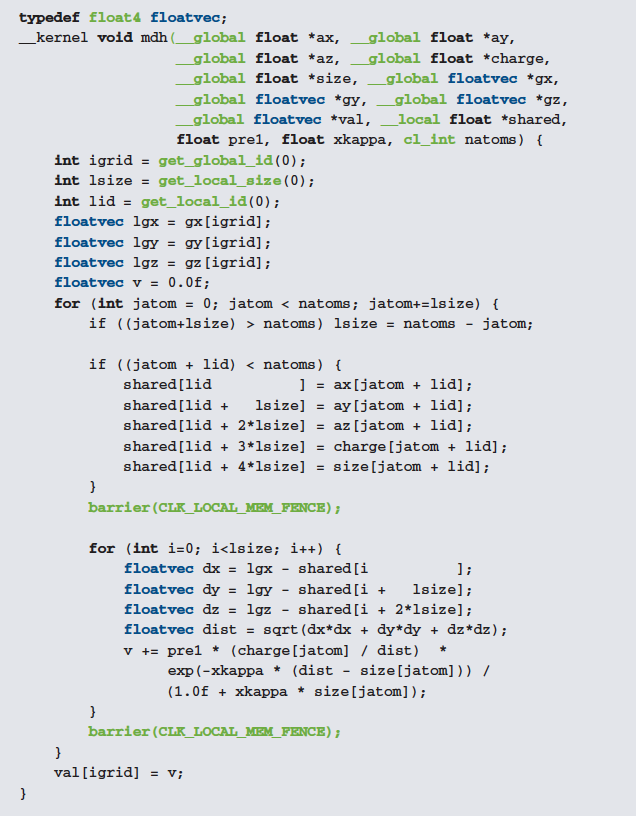

Several studies have been coming out in recent years illustrating the performance improvements data parallelism in software running on GPUs has over traditional CPU implementations. Perumalla[20] conducted a study using an agent based model on well known problems such as the Game of Life. In his study he found a 10 - 30 fold speedup with GPU-based execution over CPU ones. He also observed that GPUs illustrated their biggest performance gains when the application exhibited strong locality where data was not needed to be transferred between index spaces often. In Yongpeng Zhang’s study here at NC State[21] he found that CPUs not only get outperformed by GPUs in terms of raw performance, but also in cost performance and in energy performance. And also in John E. Stone’s paper he used kernels written to solve the Poisson-Boltzmann equation to illustrate a performance gain of 20 times compared to that of code running on CPUS. When tuning the code, the study showed a performance improvement of up to 141 times! Below you can see examples of the kernels written in OpenCL for the study.

Overall, recent studies have shown a consistent improvement in performance for parallel application executing on GPUs over CPUs. Furthermore, it has also been shown that if the software developer has a strong focus on data movement and performance tuning within their code that drastic performance gains can be seen.

References

1. https://wiki.aalto.fi/download/attachments/70779066/T-106.5840_2012_Halme.pdf?version=1&modificationDate=1357205607000

2. http://en.wikipedia.org/wiki/OpenCL

3. http://renderingpipeline.com/2012/11/understanding-the-parallelism-of-gpus/

4. http://en.wikipedia.org/wiki/OpenGL

5. http://en.wikipedia.org/wiki/DirectX

6. http://moss.csc.ncsu.edu/~mueller/seminar/fall09/pmea09.pdf

7. http://en.wikipedia.org/wiki/CUDA

8. http://www.pgroup.com/lit/articles/insider/v2n1a5.htm

9. http://en.wikipedia.org/wiki/GeForce_400_Series

10. http://en.wikipedia.org/wiki/GeForce_600_Series

11. http://www.cs.utah.edu/~mhall/cs6963s09/lectures/6963_L3.pdf

12. http://parlab.eecs.berkeley.edu/wiki/_media/patterns/data_parallel.pdf

13. https://wiki.aalto.fi/download/attachments/70779066/T-106.5840_2012_Halme.pdf?version=1&modificationDate=1357205607000

14. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.9152&rep=rep1&type=pdf

15. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.9152&rep=rep1&type=pdf

16. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.9152&rep=rep1&type=pdf

17. http://en.wikipedia.org/wiki/OpenCL

18. http://ieeexplore.ieee.org/Xplore/cookiedetectresponse.jsp

19. http://en.wikipedia.org/wiki/OpenCL

20. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.9152&rep=rep1&type=pdf

21. http://moss.csc.ncsu.edu/~mueller/seminar/fall09/pmea09.pdf

Questions

1. Suppose a single SM is comprised of 2 thread blocks each of which are divided into 12 warps. How many threads are handled by the SM?

2. How would doubling the number of processors an SM is able to handle effect the overall performance of a GPU?

3. What are some ways a warp scheduler can determine the next instruction for execution?

4. Can a thread block be distributed across multiple SM's?

5. How do GPU's handle multithreading differently from CPU's?

6. What are some ways GPU hardware has increased performance over the last several years?

7. What was the major achievement from hardware vendors near the turn of the 21st century that allowed for more realistic 3D rendering and help legitimize the GPU as an execution platform for software outside graphical applications?

8. Given that OpenCL prefers runtime compilation to take advantage of the latest hardware of a system, can an OpenCL application guarantee peak performance?

9. How is it easier to write software that interfaces with the GPU than it was in the early 1990's?

10. What are some of the advantages of dynamic parallelism and what are the tradeoffs?