CSC/ECE 506 Spring 2011/ch3 ab

Overview

The main goal of this wiki is to explore synchronization mechanisms in various architectures. These mechanisms are used to maintain program “correctness” and prevent data corruption when parallelism is employed by a system. This wiki will first give a brief description of various parallel programming models that could be using the synchronization mechanisms, which will be covered later in the wiki.

Types of Parallelism

Section Overview

This section will give a brief overview of common types of parallel programming models. For more detailed information on this topic please see THIS WIKI. The following parallelisms will be covered here: DOALL, DOACROSS, DOPIPE, reduction, and functional parallelism

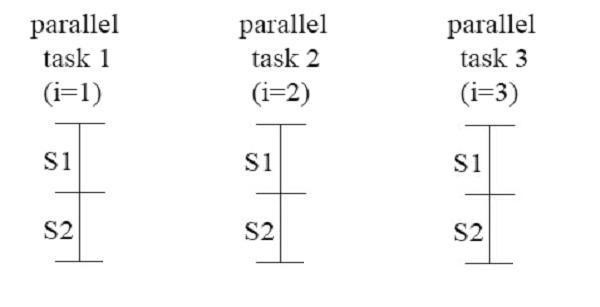

DOALL Parallelism

DOALL parallelism allows all iterations of a loop to be executed in parallel. There are no loop-carried dependencies.[2] The following code is an example of a loop that could use DOALL parallelism to parallelis for the i loop [3]:

for (i=0; i<n; i++)

for (j=0; j< n; j++)

S3: a[i][j] = a[i][j-1] + 1;

Note the lack of dependencies across the different iterations of the i loop.

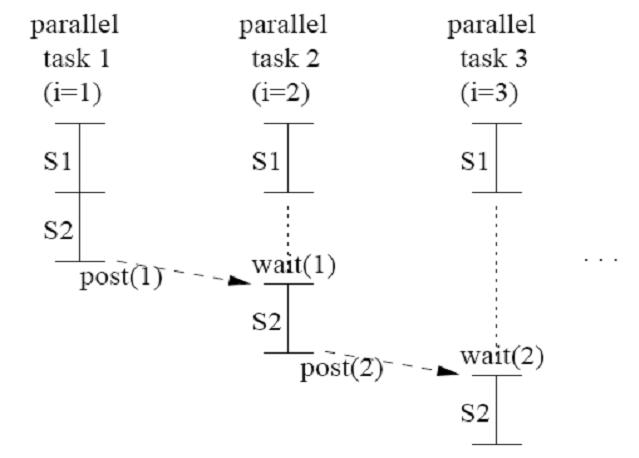

DOACROSS Parallelism

Consider this the following loop[3]:

for (i=1; i<=N; i++) {

S: a[i] = a[i-1] + b[i] * c[i];

}

It is not possible to use DOALL parallelism on this loop because of the loop-carried dependence of the “a” variable. But notice that the “b[i] * c[i]” portion of the code does not have any loop-carried dependencies. This is the situation needed to use DOACROSS parallelism. The following loop can be developed to use DOACROSS parallelism.[3]

post(0);

for (i=1; i<=N; i++) {

S1: temp = b[i] * c[i];

wait(i-1);

S2: a[i] = a[i-1] + temp;

post(i);

}

Each iteration of “b[i] * c[i]” can be performed in parallel, then as soon as the loop-carried dependence on a is satisfied S2 can execute.

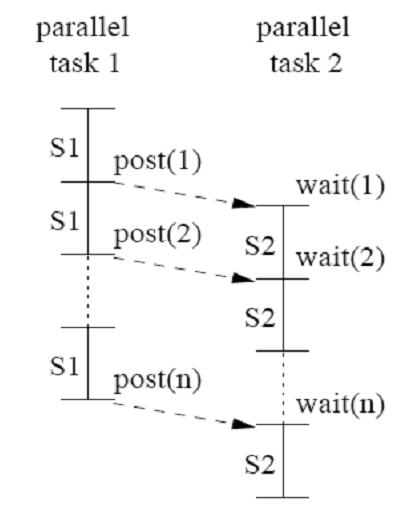

DOPIPE parallelism

DOPIPE parallelism is another method of parallelism for loops that have loop-carried dependences that uses pipelining. Consider the following loop [3]:

for (i=2; i<=N; i++) {

S1: a[i] = a[i-1] + b[i];

S2: c[i] = c[i] + a[i];

}

In this example there is both a loop-carried dependence on S1 and a loop-independent dependence between S1 and S2. These dependencies require that S1[i] executes before S1[i+1] and S2[i]. This leads to the following parallelized code [3]:

for (i=2; i<=N; i++) {

a[i] = a[i-1] + b[i];

post(i);

}

for (i=2; i<=N; i++) {

wait(i);

c[i] = c[i] + a[i];

}

This code satisfies all of the above requirements.

Functional parallelism

Functional parallelism is used when a loop contains statements that are independent of one another. It provides a modest amount of parallelism and it does not grow with input size. However, it can be used in conjunction with data parallelism (i.e. DOALL, DOACROSS, etc). Consider the following loop [3]:

for (i=0; i<n; i++) {

S1: a[i] = b[i+1] * a[i-1];

S2: b[i] = b[i] * coef;

S3: c[i] = 0.5 * (c[i] + a[i]);

S4: d[i] = d[i-1] * d[i];

}

Statement S4 has no dependence on any of the other statements in the loop, therefore it can be executed in parallel of statements S1, S2, and S3 [3]:

for (i=0; i<n; i++) {

S1: a[i] = b[i+1] * a[i-1];

S2: b[i] = b[i] * coef;

S3: c[i] = 0.5 * (c[i] + a[i]);

}

for (i=0; i<n; i++) {

S4: d[i] = d[i-1] * d[i];

}

Reduction

Reduction can be used on operations that are both commutative and associative such as addition multiplication, and logical operations. An example of this is if a sum of products needs to be performed on a matrix. The matrix can be divided into smaller portions and assign one processor to work on each portion of the matrix. After all of the processors have completed their tasks, the individual sums can be combined into a global sum. [4]

Why Synchronization is Needed

When using any of the above parallel programming models, synchronization is needed to guarantee accuracy of the overall program. The following are a few example situations where synchronization will be needed.

- The code following the parallelized loop requires that all of the parallel processes be completed before advancing. It cannot be triggered simply by one of the processes completing.

- A portion of code in the middle of a parallelized section MUST be executed in a very particular order so that global variables used across processes get read and written in the proper order. This is known as the critical section

- Multiple processes must update a global variable in such a way that one process does not overwrite the updates of a different process. (i.e. SUM = SUM + <process update>)

This is just a few examples. Every architecture implements synchronization in a unique way using different types of mechanisms. The following section will highlight various architectures’ synchronization mechanisms.

Synchronization Mechanisms

Section Overview

In order to accomplish the above parallelizations in a real system, the memory must be carefully orchestrated such that no information gets corrupted. Every architecture handles synchronizing data from parallel processors slightly differently. This section is going to look at different architectures and highlight a few of the mechanisms that are used to achieve this memory synchronization.

IA-64

IA-64 is an Intel architecture that is mainly used in Itanium processors.

Spinlock

the spinlock is used to guard against multiple accesses to the critical section at the same time. The critical section is a section of code that must be executed in sequential order. It cannot be parallelized. Therefore, when a parallel process comes across an occupied critical section the process will “spin” until the lock is released. [5]

// available. If it is 1, another process is in the critical section. // spin_lock: mov ar.ccv = 0 // cmpxchg looks for avail (0) mov r2 = 1 // cmpxchg sets to held (1) spin: ld8 r1 = [lock] ;; // get lock in shared state cmp.ne p1, p0 = r1, r2 // is lock held (ie, lock == 1)? (p1) br.cond.spnt spin ;; // yes, continue spinning cmpxchg8.acqrl = [lock], r2 // attempt to grab lock cmp.ne p1, p0 = r1, r2 // was lock empty? (p1) br.cond.spnt spin ;; // bummer, continue spinning cs_begin: // critical section code goes here... cs_end: st8.rel(lock) = r0 ;; //release the lock

The above code demonstrates how a spin lock is used. Once the process gets to a spin lock, it will check to see if the lock is available. If it is not, then the process will proceed into the spin loop where it will continuously check to see if the lock is available. Once it finds out the lock is available, it will attempt to obtain the lock. If another process obtains the lock first, then the process will branch back into the spin loop and continue to wait.

Barrier

A barrier is a common mechanism used to hold up processes until all processes can get to the same point. The mechanism is useful in various kinds of different parallelisms (DOALL, DOACROSS, DOPIPE, reduction, and functional parallelism) This architecture uses a unique form of the barrier mechanism called the sense-reversing barrier. The idea behind this barrier is to prevent race conditions. If a process from the “next” instance of the barrier races ahead while slow processes from the current barrier are leaving, the fast processes could trap the slow processes at the “next” barrier and thus corrupting the memory synchronization. [5]

Dekker’s Algorithm

Dekker’s Algorithm uses variables to indicate which processors are using which resources. It basically arbitrates for a resource using these variables. Every processor has a flag that indicates when it is in the critical section. So when a processor is getting ready to enter the critical section it will set its flag to one, then it will check to make sure that all of the other processor flags are zero, then it will proceed into the section. This behavior is demonstrated in the code below. It is a two-way multiprocessor system, so there are two processor flags, flag_me and flag_you. [5]

// The flag_me variable is zero if we are not in the synchronization and // critical section code and non-zero otherwise; flag_you is similarly set // for the other processor. This algorithm does not retry access to the // resource if there is contention. dekker: mov r1 = 1 ;; // my_flag = 1 (i want access) st8 [flag_me] = r1 mf ;; // make st visible first ld8 r2 = [flag_you] ;; // is other's flag 0? cmp.eq p1, p0 = 0, r2 (p1) br.cond.spnt cs_skip ;; // if not, resource in use cs_begin: // critical section code goes here... cs_end: cs_skip: st8.rel[flag_me] = r0 ;; // release lock

Lamport’s Algorithm

Lamport’s Algorithm is similar to a spinlock with the addition of a fairness mechanism that keeps track of the order in which processes request the shared resource and provides access to the shared resource in the same order. It makes use of two variable x and y and a shared array, b. The example below shows example code for this algorithm. [5]

// The proc_id variable holds a unique, non-zero id for the process that // attempts access to the critical section. x and y are the synchronization // variables that indicate who is in the critical section and who is attempting // entry. ptr_b_1 and ptr_b_id point at the 1'st and id'th element of b[]. // lamport: ld8 r1 = [proc_id] ;; // r1 = unique process id start: st8 [ptr_b_id] = r1 // b[id] = "true" st8 [x] = r1 // x = process id mf // MUST fence here! ld8 r2 = [y] ;; cmp.ne p1, p0 = 0, r2;; // if (y !=0) then... (p1) st8 [ptr_b_id] = r0 // ... b[id] = "false" (p1) br.cond.sptk wait_y // ... wait until y == 0 st8 [y] = r1 // y = process id mf ld8 r3 = [x] ;; cmp.eq p1, p0 = r1, r3 ;; // if (x == id) then.. (p1) br.cond.sptk cs_begin // ... enter critical section st8 [ptr_b_id] = r0 // b[id] = "false" ld8 r3 = [ptr_b_1] // r3 = &b[1] mov ar.lc = N-1 ;; // lc = number of processors - 1 wait_b: ld8 r2 = [r3] ;; cmp.ne p1, p0 = r1, r2 // if (b[j] != 0) then... (p1) br.cond.spnt wait_b ;; // ... wait until b[j] == 0 add r3 = 8, r3 // r3 = &b[j+1] br.cloop.sptk wait_b ;; // loop over b[j] for each j ld8 r2 = [y] ;; // if (y != id) then... cmp.ne p1, p2 = 0, r2 (p1) br.cond.spnt wait_y br start // back to start to try again cs_begin: // critical section code goes here... cs_end: st8 [y] = r0 // release the lock st8.rel[ptr_b_id] = r0 ;; // b[id] = "false"

IA-32

IA-32 is an Intel architecture that is also known as x86. This is a very widely used architecture.

Locked Atomic Operation

This is the main mechanism for this architecture to manage shared data structures such as semaphores and system segments. The process uses the following three interdependent mechanisms to implement the locked atomic operation: [6]

- Guaranteed atomic operations.

- Bus locking, using the LOCK# signal and the LOCK instruction prefix.

- Cache coherency protocols that insure that atomic operations can be carried out on cached data structures (cache lock). This mechanism is present in the P6 family processors.

Guaranteed Atomic Operation

The following are guaranteed to be carried out automatically: [6]

- Reading or writing a byte.

- Reading or writing a word aligned on a 16-bit boundary.

- Reading or writing a doubleword aligned on a 32-bit boundary.The P6 family processors guarantee that the following additional memory operations will always be carried out atomically:

- Reading or writing a quadword aligned on a 64-bit boundary. (This operation is also guaranteed on the Pentium® processor.)

- 16-bit accesses to uncached memory locations that fit within a 32-bit data bus.

- 16-, 32-, and 64-bit accesses to cached memory that fit within a 32-Byte cache line.

Bus Locking

A LOCK signal is asserted automatically during certain critical sections in order to lock the system bus and grant control to the process executing the critical section. This signal will disallow control of this bus by any other process while the LOCK is engaged.

Linux Kernel

Linux Kernel is referred to as an “architecture”, however it is fairly unconventional in that it is an open source operating system that has full access to the hardware. It uses many common synchronization mechanisms, so it will be considered here. [8]

Busy-waiting lock

Spinlocks

This mechanism is very similar to the mechanism described in the IA-64 architecture. It is a mechanism used to manage access to a critical section of code. If a process tries to access the critical section and is rejected it will sit and “spin” while it waits for the lock to be released.

Rwlocks

This is a special kind of spinlock. It is for protected structures that are frequently read, but rarely written. This lock allows multiple reads in parallel, which can increase efficiency if process are not having to sit and wait in order to merely carry out a read function. Like before however, one write is allowed at a time with no reads done in parallel

Brlocks

This is a super fast read/write lock, but it has a write-side penalty. The main advantage of this lock is to prevent cache “ping-pong” in a multiple read case.

Sleeper locks

Semiphores

A semaphore is special variable that acts similar to a lock. If the semaphore can be acquired then the process can proceed into the critical section. If the semaphore cannon be acquired, then the process is “put to sleep” and the processor is then used for another process. This means the processes cache is saved off in a place where it can be retrieved when the process is “woken up”. Once the semaphore is available the “sleeping” process is woken up and obtains the semaphore and proceeds in to the critical section.

CUDA

CUDA, or Compute Unified Device Architecture, is an Nvidia architecture which is the computing engine for their graphics processors.

_syncthreads

The _syncthreads operation can be used at the end of a parallel section as a sort of “barrier” mechanicm. It is necessary to ensure the accuracy of the memory. In the following example, there are two calls to _syncthreads. They are both necessary to ensure the expected results are obtained. Without it, myArray[tid] could end up being either 2 or the original value of myArray[] depending on when the read and write take place.[7]

// myArray is an array of integers located in global or shared

// memory

__global__ void MyKernel(int* result) {

int tid = threadIdx.x;

...

int ref1 = myArray[tid];

__syncthreads();

myArray[tid + 1] = 2;

__syncthreads();

int ref2 = myArray[tid];

result[tid] = ref1 * ref2;

...

{

PowerPC

PowerPC is an IBM architecture that stands for Performance Optimization With Enhanced RISC-Performance Computing. It is a RISC architecture that was originally designed for PCs, however it has grown into the embedded and high-performance space. [10]

Isync

isync is an instruction that guarantees that before any code proceeding after the isync instruction can execute, all of the code preceding it has already completed. It also ensures that any cache block invalidations instructions that were executed before the isync have been carried out with respect to the processor executing the isync instruction. It then causes any prefetched instructions to be discarded. [9]

Memory Barrier Instructions

Memory Barrier Instructions can be used to control the order in which storage access are performed. [9]

HeavyWeight sync

This memory barrier creates an ordering function for the storage accesses that are associated with all of the instructions that are executed by the processor executing the sync instruction.

LightWeight sync

This memory barrier creates an ordering function for the storage accesses caused by LOAD and STORE instructions that are executed by the processor executing the sync instruction. Also, this instruction must execute on the specified storage location in storage that is neither a Write Through Required nor a Caching Inhibited.

Enforce In-order Execution of I/O

The Enforce In-order Execution of I/O, or eieio, instruction is a memory barrier that creates an ordering function for the storage accesses caused by LOADs and STOREs. These instructions are split into two groups: [9]

1. Loads and stores to storage that is both Caching Inhibited and Guarded, and stores to main storage caused by stores to storage that is Write Through Required

2. Stores to storage that is Memory Coherence Required and is neither Write Through Required nor Caching Inhibited

For the first group the ordering done by the memory barrier for accesses in this set is not cumulative. For the second group the ordering done by the memory barrier for accesses in this set is cumulative.

Cell Broadband Engine

Cell Broadband Engine, also referred to as Cell or Cell BE, is an IBM architecture whose first major application was in Sony’s PlayStation 3. Cell has streamlined coprocessing elements which is great for fast multimedia and vector processing applications. [12]

This architecture is interesting because it uses a shared memory model in which the LOADs and STOREs use a “weakly consistent” storage model. Meaning that, the sequence in which any of the following orders are executed may be different from each other: [11]

- The order of any processor element (PPE or SPE) performing storage access

- The order in which the accesses are performed with respect to another processor element

- The order in which the accesses are performed in main storage

It is important that the accesses to the shared memory happen in the correct program order or information could be lost or corrupted. In order to ensure that this doesn’t happen the following memory barrier instructions are used:

Fence

After all previous issued commands within the same “tag group” have been performed the fence instruction can be issued. If there is a command that is issued after the fence command, it might be executed before the fence command. [11]

Barrier

After all previous issued commands have been performed, the barrier command and all of the instructions after the barrier command can then be executed. [11]

References

- WIKI reference for parallel programming models

- WIKI reference for DOALL parallelism

- Lecture 5 from NC State's ECE/CSC506

- Lecture 6 from NC State's ECE/CSC506

- IA-64 Software Development Manual

- IA-32 Software Development Manual

- CUDA Programming Guide

- Linux Kernel Architecture Overveiw

- PowerPC Architecture Book

- Wikipedia information on PowerPC

- IBM cell Cell Architecture Book

- Wikipedia information on Cell