CSC/ECE 506 Fall 2007/wiki4 7 2815

Introduction

The sole aim of all Computer Architects today is to increase the speed of the processor. If Instruction level parallelism (ILP) along with pipelining seems to be a potential solution for this motive, data and control dependencies seem to drag the speed by causing a much lower than ideal instructions per cycle (IPC). Deeper pipelines, which aim at increasing clock rate to increase processor speed of execution, turn out to be a hindrance when branch misprediction penalty becomes larger as more stages of pipeline need to be flushed and refilled.

To reduce the impact of these critical instructions, loads that miss in cache and difficult to predict branches, we need to note that the main program does calculate the values crucial for the address of load or for branch outcome, but not in a timely manner. If we somehow had the branch predictions and loads done much before the actual program needs it, the program could run faster. This requires that an additional program be run along with the main program that does such jobs and only such jobs. This additional program does everything to help main program run faster. This program, when implemented along with the main program on an advanced multiprocessor like CMP (where communication between multiple programs is not a big issue), there is significant increase in the running speed.

Such helpful programs that run along with the main program are called Helper threads.

Helper Threads

Helper threads are additional programs usually running the same code as the main program. It runs faster than the main program to fetch values needed by the main program well in advance. Since the main motive of a helper thread is to “help” the main program, it doesn’t have to do everything that the main program does. In fact, if it does everything that the main program does, it would run as slow/fast as the main program which wouldn’t help much.

We need the helper thread mainly to take care of the following jobs:

- Make hard to predict branch predictions early

- Pre fetch irregular data (data which would definitely be a cache miss) into on-chip caches

Now, the challenge is to select the instructions included in the helper thread. It should be selected to make sure that instructions are not redundant but are yet relevant. Another challenge is to ensure that the helper thread is able to calculate the value needed to resolve a critical instruction in time. Timeliness is critical since a branch prediction is useless after the branch has been resolved and a pre fetch would simply be extra computation if the main thread already executed the load.

Construction of Helper Threads

Helper threads are seldom manually coded mainly because manual construction of helper threads is cumbersome and error-prone. Hence, it is desirable to automate this process. There are several ways proposed to construct these threads. We discuss the two main techniques here:

Using Complier

Construction of helper threads using compilers involves several steps:

1. Delinquent load identification: Delinquent loads are those loads which cause the most cache misses. These are identified. This may be done by using performance analyzers such as Intel’s VTuneTM. In identifying such loads, the profile information is extracted where the compiler also keeps tracks of the cycle costs associated with those delinquent loads i.e., memory stall time. Finally, delinquent loads that account for a large portion of execution time are selected to be targeted by helper threads.

2. Loop Selection: Research has shown that delinquent loads most likely occur within heavily traversed loop nest. Thus, loop structures can naturally be used for picking an appropriate region where helper threads will be constructed. In order to minimize the overhead of thread management, there are two goals. One is to minimize no. of helper thread invocations which can be done by keeping trip count of outer loop that encompasses the targeted loop small. Another goal is to make sure that once started, a helper thread runs for a considerable amount of time. This is to minimize thread activation cost. This can be achieved by having the targeted loop trip count maximum. This can be optimized by searching from the innermost loop which is most preferred target and going outward.

3. Slicing: Next, the compiler identifies instructions to be executed in the helper thread. This process is called slicing. This process aims at keeping the instruction count to minimum to construct efficient and light weighted helper threads. Only the statements that affect the address computation of the delinquent load, including the change of the control flow, are selected as a slice and everything else is filtered out.

4. Live in variable identification: Live in variables are those which form a part of helper thread’s available variables. They are global in nature. These variables are explicitly passed through global variables that are declared solely for the use of helper threads.

5. Code Generation: After the compiler analysis phases, the constructed helper threads are attached to the application program as a separate code. In addition, the codes to create, schedule, and invoke the helper thread are inserted as well. Whenever the main thread encounters a trigger point, it first passes the function pointer of the corresponding helper thread and the live-in variables, and wakes up the helper thread. After being woken up, the helper thread indirectly jumps to the designated helper thread code region, reads in the live-ins, and starts execution. When the execution ends, the helper thread returns back to the dispatcher loop and goes to sleep again.

Using Hardware

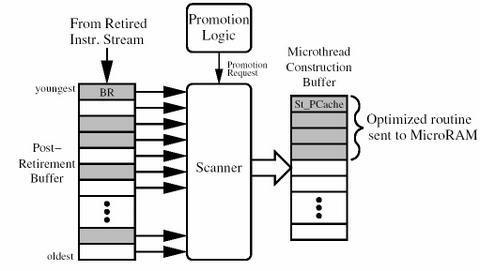

As opposed to the compiler generation, this is the hardware method of creating helper threads. This makes use of post retirement buffers and scanners. Retire is a process in the pipeline when the execution of an instruction is complete and it is about to get the status updated. If there is an instruction path at the end of which there is a branch which is always difficult to predict, then it indicates a set of instructions that should go into the helper thread. There is a buffer called post retirement buffer. Instructions from this targeted path are stored in the buffer after retirement. When such a path is identified, it is scanned before deciding what exactly goes into helper set. The scanner removes any instructions irrelevant to the branch, and puts the remaining instructions into the helper thread construction buffer. This is stored in a small content addressable memory chip called MircoRAM.

Applications of Helper threads

As discussed previously, helper threads are mainly used for the following applications:

Predict difficult branches

This uses the hardware mechanism of thread generation. A branch prediction is considered difficult based on the context in which the branch occurs. Taking the context of a branch into account makes these helper threads more efficient since helper threads will only be used when branches are truly difficult to predict. Addition of context also adds complexity into hardware.

The hardware used to make difficult branch predictions is called the Microthread Builder. There is a Post Retirement Buffer (PRB) which is used to store the last i instructions to retire from the primary or the main thread. Instructions enter the PRB after they retire and are pushed out as younger instructions are added. Dependency information, computed during instruction execution, is also stored in each PRB entry.

When a decision to predict branches with helper threads is made, the Microthread Builder extracts the data flow tree needed to compute the branch outcome. The PRB is frozen and scanned from youngest to oldest (the branch will always be the youngest instruction, as it just retired). Instructions making up the data flow tree are identified and extracted into the Microthread Construction Buffer (MCB). Microthread Construction Buffer is the place where the helper thread is constructed. The identification is not difficult, as the dependency information is already stored in the PRB. The Branch Prediction Hardware is represented as in the block diagram below

Next step, an instruction is inserted at the end of the helper thread to communicate the branch outcome generated by the helper thread back to the front-end of the machine.

Last step is to recognize what is called ‘Spawn Point’. Spawn point determines when exactly a helper thread is called. It is crucial to start the helper thread as early as possible so the information is available prior to the main thread reaching the critical section. The spawn point should be chosen such that helper is not started very early which ends up making the helper thread have a lot of instructions. Helper thread should be started close enough to the critical situation so as to remain short and far enough to ensure that the critical situation has not passed by.

Prefetch loads/stores

This makes use of a helper thread construction algorithm. This is pretty much similar to the compiler based thread generation discussed above.

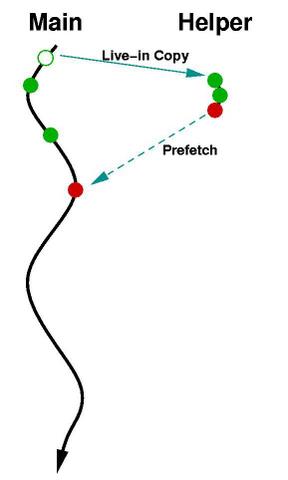

The goal of the helper thread construction algorithm is to make the helper thread as short as possible, while still containing necessary instructions for generating correct prefetches to target loads/stores that likely miss in the L2 cache. To achieve this, prefetching regions are identified first. These are the regions of code that have a high concentration of L2 cache miss. The prefetching sections are typically loop nests consisting of several millions of dynamic instructions. Reads and writes that likely miss in a prefetching section will then be converted into prefetch instructions in the helper thread. The helper thread is spawned only once at the beginning of the application. The figure shown below gives an idea of how the helper thread is constructed. Red spot indicates the problem load.

The following is the algorithm used to extract the prefetching helper thread for the identified loops:

1. Inline function calls in the loop. This means, the function body is replaced by function call instructions.

2. Identify target reads and writes to array elements or structures’ fields that likely miss in the L2 cache.

3. Starting from these read and writes, identify address computations. These are added into the address chain which determines the instruction addresses that form helper thread.

4. Privatize the locations that are written in address chain. If the location is an array element, substitute read/writes of the array in the address chain with read/writes to a new privatized array that belongs to the helper thread.

5. Replace target read and writes by prefetch instructions that access the same addresses.

6. Remove unnecessary computations, branches, and redundant prefetch instructions.

The helper thread is spawned at the beginning of the application. One complexity is that prefetching threads need to be spawned early to tolerate spawning overhead and cache miss latency.

The Slipstream Approach

The Slipstream paradigm proposes that only a subset of original dynamic instruction stream is needed to make full correct forward progress.

We know that processors run the entire set of dynamic instructions to determine the final output. The slip stream idea is to run a subset of this dynamic instruction stream in parallel with the original but a little ahead of it on a Chip Multiprocessor. This shorter program is similar to the helper thread we have been discussing.

This shorter program speculatively runs ahead of the full program and supplies the full program with control and data outcomes. The full program executes efficiently due to the communicated outcomes, at the same time validating the speculative, shorter program. The two programs combined run faster than the original program alone.

This can be better understood when we see how the name ‘Slipstream’ was originated. This was named after slipstreaming in stock car racing. At speeds in excess of 190 m.p.h., high air pressure forms at the front of a race car and a partial vacuum forms behind it. This creates drag and limits the car’s top speed. A second car can position itself close behind the first (a process called slipstreaming or drafting). This fills the vacuum behind the lead car, reducing its drag. And the trailing car now has less wind resistance in front (and by some accounts, the vacuum behind the lead car actually helps pull the trailing car). As a result, both cars speed up by several m.p.h.: the two combined go faster than either can alone.

With slipstream processors, the operating system creates two redundant processes. The two programs simultaneously run on a single chip multiprocessor. One of the programs always runs ahead of the other. The leading program is called Advanced Stream (A-stream) and the trailing program is called Redundant Stream (R-stream). Hardware monitors the R-stream and detects the following:

- Dynamic instructions that repeatedly and predictably have no observable effect. (e.g., unreferenced writes, non-modifying writes)

- Dynamic branches whose outcomes are consistently predicted correctly.

Such instructions are bypassed in the A-stream since it needs better jobs than just predict what can be predicted by R-stream as well.

This reduced A-stream is sped up because it fetches, executes, and retires fewer instructions than it would otherwise. Also, all values and branch outcomes produced in the leading A-stream are communicated to the trailing R-stream. Although the R-stream is not reduced in terms of retired instructions, it has an accurate picture of the future and fetches/executes more efficiently. In summary, the A-stream is sped up because it is shorter and the R-stream is sped up because it receives accurate predictions from the A-stream. The two redundant programs combined run faster than either can alone.

Hence we see that as in stock car racing, A-stream and R-stream mutually improve one another’s performance. The A-stream could not be accurately reduced without the trailing R-stream. And the R-stream is helped along in the slipstream (control and data flow outcomes) of the A-stream. The user perceives an overall speedup because both programs finish earlier.

Conclusion

From statistics, we can learn that the number of applications that can use helper threads in minimal. Most integer applications have fairly modest memory footprints, and therefore have few cache misses to eliminate. Many of those with large memory footprints, such as databases, are fairly easy to parallelize into true threads, which are always a better choice than “helper” threads if it is possible to create them. On the floating-point side, many applications are easily parallelizable or have fairly regular access patterns that can be prefetched using hardware mechanisms or occasional software prefetch instructions right in the main thread. As a result of these fundamental application characteristics, the selection of applications that can really be helped by helper threads is fairly limited.

A second problem is that very tight synchronization is needed between the main thread and its helpers in order to keep them the proper distance ahead of the main thread. Too far ahead, and they will cause cache thrashing by prefetching data and then replacing it with subsequent prefetches before the main thread can even use it. On the other hand, if they are not far enough ahead they might not be able to prefetch cache lines in time. Inserting just enough synchronization to keep these threads properly paced without slowing down the main thread significantly is still an active area for research.

However, applications like slipstreaming, Master-Slave Spec. Parallelization and Simultaneous Subordinate Multithreading have proven that helper thread are to remain in vogue.

References

2. Helper Threading: Improving Single-Thread Performance Using Additional Execution Contexts

3. A Survey on Helper Threads and Their Implementations

4. Physical Experimentation with Prefetching Helper Threads on Intels Hyper-Threaded Processors

5. Helper Thread Prefetching for Loosely-Coupled Multiprocessor Systems

Further reading

1. Master-Slave Spec. Parallelization