CSC/ECE 506 Fall 2007/wiki1 5 jp07

Section 1.1.4: Supercomputers.

Compare current supercomputers with those of 10 yrs. ago. Update Figures 1.10 to 1.12 with new data points. For 1.12, consult top500.org

Definitions of a Supercomputer

- Supercomputer

- "The class of fastest and most powerful computers available."

- "An extremely fast computer that can perform hundreds of millions of instructions per second."

- "A time dependent term which refers to the class of most powerful computer systems world-wide at the time of reference."

The term supercomputer clearly has no single definition. For some it refers to a fixed threshold, such as x instructions per second. For others it means having the best performance of any computer at the current time. For still others, the primary factor is price. In general, a supercomputer earns its classification by being both one of the most expensive and one of the most powerful computers of its time.

LINPACK Benchmark

The main metric for evaluating the effectiveness of supercomputers has long been the LINPACK benchmark suite. [2] In general, this benchmark solves a dense system of linear equations and measures the processor's speed at completing the task. Although this is a very narrow workload, it provides an industry-standard metric for supercomputers and is used for ranking by sites such as the Top 500.

Note that performance on the LINPACK benchmark is usually given in FLOPS, floating-point operations per second. This is not necessarily the best overall metric for measuring system performance [3], but it is useful because of the specific nature of the LINPACK workload. Since LINPACK is heavy on floating-point operations, FLOPS is a good indicator of the load the processor can handle. However, FLOPS would not be a good measure for applications that do not require intensive floating-point calculation.
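To make the FLOPS idea concrete, the sketch below times a small dense solve and converts the elapsed time into MFLOPS. It is an illustration only, not the LINPACK code itself: the function names are hypothetical, the solver is plain Gaussian elimination with partial pivoting in pure Python, and the operation count 2n^3/3 + 2n^2 is assumed here as the conventional nominal count for an n x n solve.

```python
import random
import time


def linpack_flop_count(n):
    """Nominal operation count assumed for an n x n dense solve."""
    return (2.0 / 3.0) * n**3 + 2.0 * n**2


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [row[:] for row in a]  # work on copies so the inputs are untouched
    b = b[:]
    for k in range(n):
        # Pick the row with the largest pivot candidate in column k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k from every row below the pivot row.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def measure_mflops(n=60, seed=0):
    """Time one random n x n solve and return the achieved MFLOPS."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    solve_dense(a, b)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against zero
    return linpack_flop_count(n) / elapsed / 1e6


if __name__ == "__main__":
    print(f"about {measure_mflops():.2f} MFLOPS for n = 60 in pure Python")
```

The number printed depends entirely on the machine and interpreter, which is the point: the same fixed workload yields a comparable rate across systems.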

The Evolution of the Supercomputer

Innovation in computer architecture usually begins with supercomputers. The world of supercomputing is a "playground" for new architecture, with concepts being introduced long before they are used in standard microprocessors. In the past this was especially true: the 1960s introduced supercomputers with pipelined instruction processing and dynamic instruction scheduling. In the 1970s, vector processors began to emerge as the major force in supercomputing architecture. Around 1997, massively parallel processors began to dominate the supercomputer market. Eventually new styles of parallelism emerged, and clustered architectures [4] have increasingly dominated the supercomputers in use.

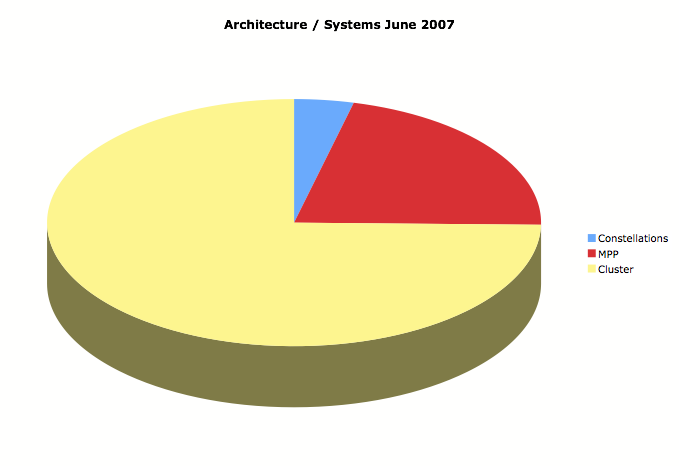

Evolution of Architecture

As mentioned already, the types of systems have varied greatly among supercomputers, and the last 10 years have been no different. As the following graphs show, parallel vector processors (PVPs) and symmetric shared-memory multiprocessors (SMPs) have died out. MPPs have declined in popularity and given way to clustered architectures, which now make up the majority of the market. [5]

Evolution of Performance

Since the Cray-1, there have been significant strides in architecture performance. In 1995 the fastest supercomputer in the world, the T94, had a uniprocessor performance of around 1,000 MFLOPS on the LINPACK benchmark. In the June 2007 report, the fastest supercomputer in the world, the Blue Gene/L system, reached 280.6 TFLOPS, a massive improvement in little more than 10 years.
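The size of that jump is easier to appreciate as a ratio. The short sketch below works it out from the two figures quoted above. The function names are hypothetical, and the comparison is rough by construction: the 1995 figure is a single-processor number while the 2007 figure is for a whole system.

```python
MFLOPS = 1e6
TFLOPS = 1e12

# Figures quoted in the text above.
t94_1995 = 1_000 * MFLOPS      # approximate uniprocessor LINPACK result
leader_2007 = 280.6 * TFLOPS   # June 2007 Top 500 leader


def improvement_factor(old, new):
    """How many times faster the new figure is than the old one."""
    return new / old


def implied_annual_growth(old, new, years):
    """Constant yearly multiplier that would produce the same overall gain."""
    return (new / old) ** (1.0 / years)


if __name__ == "__main__":
    factor = improvement_factor(t94_1995, leader_2007)
    yearly = implied_annual_growth(t94_1995, leader_2007, years=2007 - 1995)
    print(f"overall improvement: about {factor:,.0f}x")
    print(f"implied growth: about {yearly:.2f}x per year")
```

Under these assumptions the overall factor is about 280,600, which corresponds to performance nearly tripling every year over the period.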

The figure below summarizes the performance of the top supercomputers over the last 10 years. For each year, it records the two highest maximum-performance scores on the LINPACK benchmark.

This data was collected from the Top 500 website. The Top 500 website has also compiled its own charts illustrating the improvement of the top supercomputers in the world.

Dominant Supercomputers of the last 10 years

Cray Supercomputers

In any discussion of the beginnings of supercomputing, the first name mentioned is Seymour Cray. Commonly referred to as the "father of supercomputing," Cray fueled interest in the field by developing the Cray-1 in 1976 for Los Alamos National Laboratory. At the time it sold for $8.8 million, had 8 MB of memory, and performed at 160 MFLOPS. [6] Cray founded his own business, which eventually became Cray Inc. [7] Even today, Cray-manufactured computers are contenders for the fastest supercomputers in the world. Their overall market share has declined, however, with only 2.2% of the world's top 500 being Cray-manufactured machines. [8]

ASCI Red

In 1997 ASCI Red was installed at Sandia National Laboratories in Albuquerque, New Mexico. It remained operational from 1997 to 2005 and was shut down in 2006. The primary goal of ASCI Red was to simulate nuclear explosions and so avoid the need for actual testing.

ASCI Red used a MIMD layout with a mesh of 4,510 compute nodes, 1,212 gigabytes of total distributed memory, and 12.5 terabytes of disk storage. Notably, ASCI Red was the first supercomputer to exceed 1 TFLOPS on the LINPACK benchmark. [9][10]

The now mostly defunct website for the supercomputer can be found here

Earth Simulator

The supercomputer known as the Earth Simulator was created to simulate global climate change and determine the effects of global warming. From 2002 to 2004, as the performance figure earlier shows, it was the fastest supercomputer in the world.

Completed by NEC in 2002, the system consists of 640 nodes, each made up of eight vector processors. It has a total of 10 tebibytes of memory and 700 terabytes of storage. [11][12]
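The figures above mix binary (tebibyte) and decimal (terabyte) units, so a small worked calculation helps put them on the same footing. The sketch below derives the total processor count, the memory per node, and the memory expressed in decimal terabytes. The function names are hypothetical, and the per-node figure assumes memory is divided evenly across nodes, which the text does not state.

```python
# Figures quoted in the text above.
NODES = 640
PROCESSORS_PER_NODE = 8
MEMORY_TIB = 10

# Unit sizes in bytes.
GIB = 2**30   # gibibyte (binary)
TIB = 2**40   # tebibyte (binary)
TB = 10**12   # terabyte (decimal)


def total_processors():
    """Vector processors across the whole machine."""
    return NODES * PROCESSORS_PER_NODE


def memory_per_node_gib():
    """Memory per node in GiB, assuming an even split across nodes."""
    return MEMORY_TIB * TIB / NODES / GIB


def memory_in_decimal_tb():
    """Total memory converted from tebibytes to decimal terabytes."""
    return MEMORY_TIB * TIB / TB


if __name__ == "__main__":
    print(total_processors(), "processors")
    print(memory_per_node_gib(), "GiB per node")
    print(round(memory_in_decimal_tb(), 3), "TB of memory in decimal units")
```

With these inputs the machine has 5,120 processors and 16 GiB per node, and its 10 TiB of memory is roughly 11 TB in decimal units.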

Blue Gene/L System

Currently the fastest supercomputer in the world, the Blue Gene/L system is located in the Terascale Simulation Facility at Lawrence Livermore National Laboratory. Developed by IBM, the computer performs many complex tasks, such as scientific simulations that include "ab initio molecular dynamics; three-dimensional (3D) dislocation dynamics; and turbulence, shock, and instability phenomena in hydrodynamics. It is also a computational science research machine for evaluating advanced computer architectures." [13]

The following list, taken from Top 500, details some of the main features of the Blue Gene/L system:

- "Nodes are configured as a 32 x 32 x 64 3D torus; each node is connected in six different directions for nearest-neighbor communications"

- "A global reduction tree supports fast global operations such as global max/sum in a few microseconds over 65,536 nodes"

- "Multiple global barrier and interrupt networks allow fast synchronization of tasks across the entire machine within a few microseconds"

- "1,024 gigabit-per-second links to a global parallel file system to support fast input/output to disk"

Source: Top500.org [14]
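The 3D torus quoted above can be illustrated with a short sketch. It checks that a 32 x 32 x 64 layout gives the 65,536 nodes mentioned in the list and shows how each node reaches six nearest neighbors once the edges wrap around. The coordinate scheme and function names are assumptions made for illustration and do not reflect how the machine actually addresses its nodes.

```python
DIMS = (32, 32, 64)  # torus dimensions from the quoted feature list


def node_count(dims=DIMS):
    """Total number of nodes in the torus."""
    x, y, z = dims
    return x * y * z


def torus_neighbors(coord, dims=DIMS):
    """Return the six nearest neighbors of a node, wrapping at the edges."""
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            # Modulo arithmetic provides the wraparound that makes it a torus.
            n[axis] = (n[axis] + step) % dims[axis]
            neighbors.append(tuple(n))
    return neighbors


if __name__ == "__main__":
    print(node_count())                # 65536
    print(torus_neighbors((0, 0, 0)))  # corner node still has six neighbors
```

Because of the wraparound, a node at coordinate (0, 0, 0) is directly linked to (31, 0, 0), (0, 31, 0), and (0, 0, 63) as well as to its three interior neighbors, so no node sits on an edge of the network.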