CSC/ECE 517 Fall 2023 - NTX-4 Extend NDB Operator capabilities to support Postgres HA: Difference between revisions

No edit summary |

No edit summary |

||

| Line 111: | Line 111: | ||

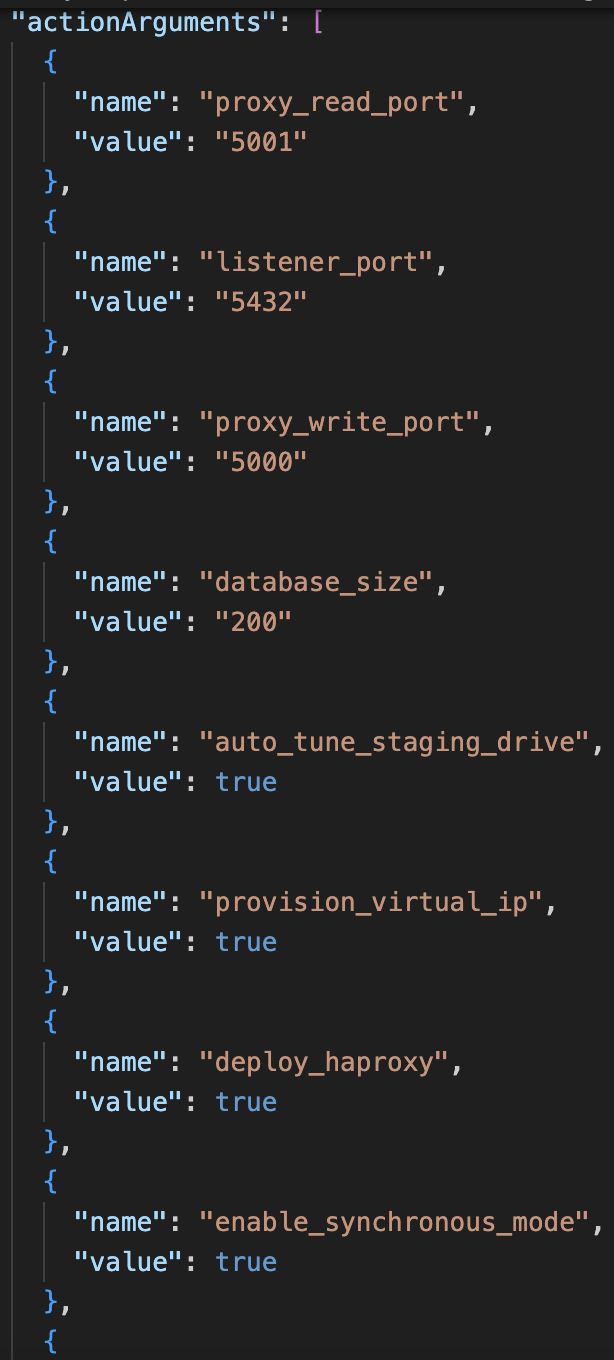

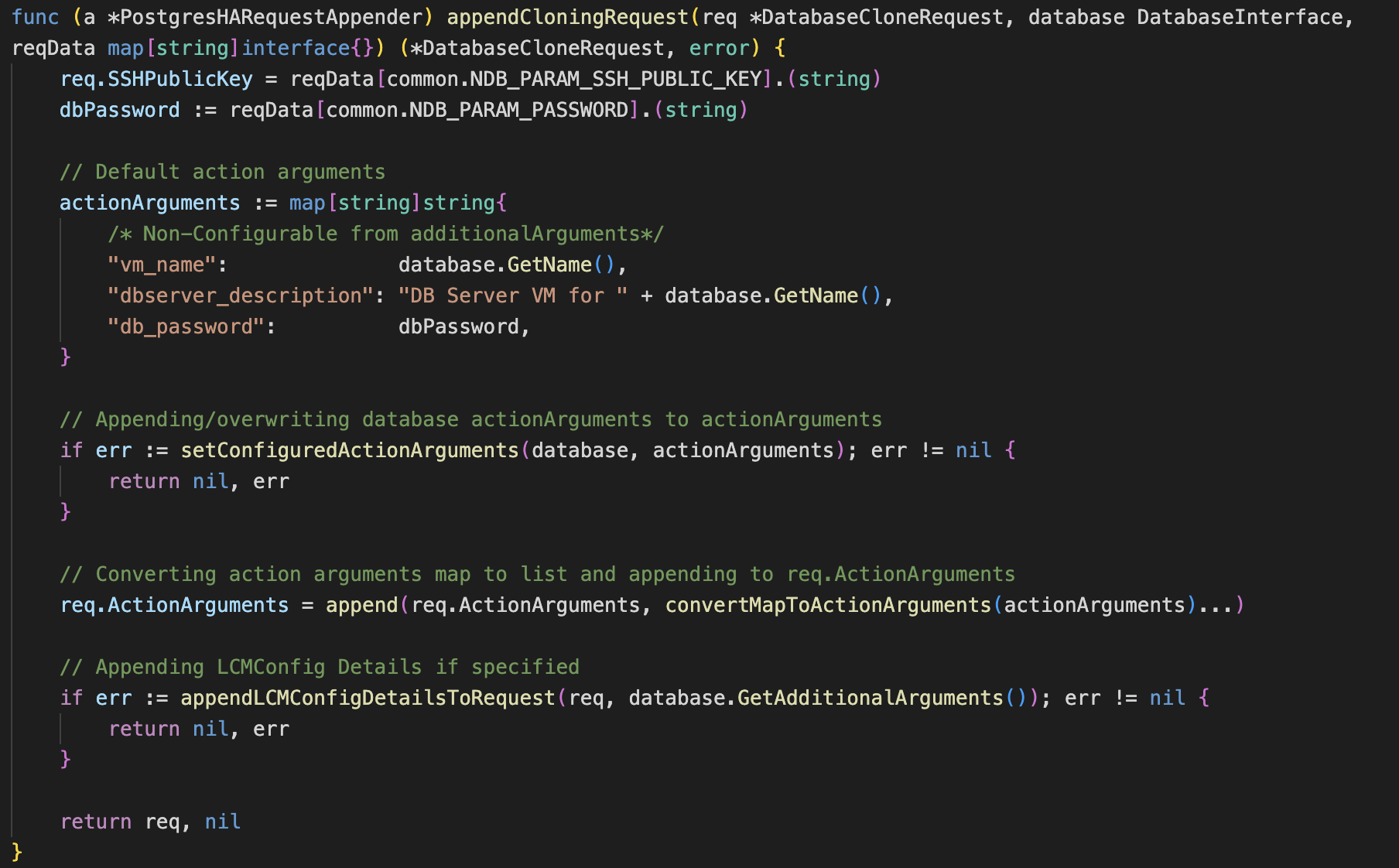

With the new data parameters identified now we need to find out what changes need to be made. To begin a new main.go file will be created that directly calls NDB to provision a fresh instance. The db_helpers.go file present in the ndb_api directory has the method GenerateProvisioningRequest which contacts NDB to provision a new DB. In the same file appendRequest methods are present for each DB type that add actionArguments to the JSON request objects. Another such method can be made for Postgres HA instances for hardcoded data. Like this we can work our way backwards through the application flow. | With the new data parameters identified now we need to find out what changes need to be made. To begin a new main.go file will be created that directly calls NDB to provision a fresh instance. The db_helpers.go file present in the ndb_api directory has the method GenerateProvisioningRequest which contacts NDB to provision a new DB. In the same file appendRequest methods are present for each DB type that add actionArguments to the JSON request objects. Another such method can be made for Postgres HA instances for hardcoded data. Like this we can work our way backwards through the application flow. | ||

[[File:Image10.png|600px]] | |||

The screenshot above shows the new appendProvisioningrequest method created in db_helpers.go file. The actionArguments hashmap contains the various properties of the HA instance that need to be supplied to NDB for provisioning a database. Similarly the method appendCloningRequest has also been made that is used to create requests to clone an existing DB by copying all its properties. | |||

[[File:11Image.png|700px]] | |||

<h2>Test Plan</h2> | <h2>Test Plan</h2> | ||

Revision as of 04:00, 16 November 2023

Kubernetes

Kubernetes, often shortened to K8s, is an open-source container orchestration platform that automates the deployment, scaling, and administration of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Its robust and flexible container management architecture simplifies the deployment and maintenance of complex, distributed applications.

Key Concepts and Components of Kubernetes

1. Containers: Containers are lightweight, isolated environments that package an application together with its dependencies, and they are the unit Kubernetes is built to run. Kubernetes runs them through a container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O. Images built with Docker run on these runtimes unchanged.

2. Nodes: Nodes are the physical or virtual machines on which containerized applications run. They provide the compute resources of the cluster and run the containers scheduled onto them.

3. Pods: Pods are the smallest deployable units in Kubernetes. A pod contains one or more containers that share the same network namespace and can share storage volumes. Containers in the same pod can therefore communicate with one another over `localhost`.

4. ReplicaSets and Deployments: A ReplicaSet keeps a specified number of pod replicas running. A Deployment manages ReplicaSets to roll out updates and scale applications.

5. Services: A Service provides a stable network endpoint for a set of pods, so applications running in those pods can be reached consistently. Services handle tasks such as service discovery and load balancing.

6. Ingress: Ingress resources, implemented by an ingress controller, control external network access to services inside the cluster.

7. ConfigMaps and Secrets: ConfigMaps hold configuration data and Secrets hold sensitive data such as passwords or API keys. Both keep this information separate from the application code.

8. Namespaces: Namespaces let you logically divide and isolate resources within a cluster. This is useful for organizing applications and for multi-tenancy.

9. Kubelet: This agent runs on every node in the cluster. It ensures that the containers described in pod specifications are running.

10. Master Node (control plane): The control plane, made up of the Kubernetes master components, manages and supervises the cluster. It consists of the API server, the scheduler, the controller manager, and etcd (a key-value store for cluster data).

11. kubectl: This command-line tool communicates with a Kubernetes cluster. It lets you create, inspect, update, and delete cluster resources.

Kubernetes is a popular choice for managing containerized apps, microservices, and cloud-native workloads because it is highly extensible and integrates with a wide variety of tools and services. It abstracts away many of the challenges of managing containers. It also provides a uniform platform for automating deployment, scaling, and operations in modern cloud-native systems.

Secret:

A Secret is an object that holds a small amount of sensitive data, such as a password, token, or key. Without Secrets, this information would typically be placed in a Pod specification or a container image. Secrets let you keep confidential data out of application code.

Secrets can be created independently of the Pods that use them. This lowers the risk of the Secret (and its data) being exposed while Pods are created, viewed, and edited. Kubernetes and applications running in the cluster can also take additional precautions with Secrets, such as not writing sensitive data to nonvolatile storage.

Secrets are similar to ConfigMaps but are intended specifically for confidential data.

Custom Resource Definition:

A custom resource is an extension of the Kubernetes API that lets us add our own object types to a project or cluster. A custom resource definition (CRD) declares the new object type. The API server then manages the whole lifecycle (create, read, update, delete) of objects of that type.

Kubernetes Operator:

A Kubernetes operator is a specialized way to package, deploy, and manage a Kubernetes application. It uses the Kubernetes API and tooling to create, configure, and automate instances of complex applications on behalf of users. Operators extend Kubernetes controllers with domain-specific knowledge so that they can manage the whole application lifecycle. Besides continuously monitoring and maintaining the application, they can scale it, upgrade it, and manage its individual parts, including kernel modules.

Operators manage applications and their components through custom resources (CRs) defined by custom resource definitions (CRDs). They watch for changes to their CR kinds. They then apply logic that encodes best practices to translate high-level user instructions into low-level actions. These custom resources can be managed with kubectl and governed by role-based access control (RBAC) policies.

Operators automate tasks that go beyond Kubernetes' built-in automation, in line with site reliability engineering (SRE) and DevOps practices. They are usually written by people with deep knowledge of the business logic of the particular application. They encode human operational knowledge in software, which removes the need for manual tasks.
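At the core of an operator is a reconcile loop. It compares the desired state declared in a custom resource with the state it observes, then takes one step to close the gap. It repeats until nothing remains to be done.

The sketch below illustrates that idea in plain Go. The `State` type, the action names, and the simulated `apply` step are hypothetical. They stand in for the calls that a real controller (for example, one built with controller-runtime) would make to an external system.

```go
package main

import "fmt"

// State is a simplified view of a managed database: whether it exists and
// how many replicas it has. Both desired and observed state use this type.
type State struct {
	Exists   bool
	Replicas int
}

// reconcile compares desired and observed state and returns the next action
// that moves the system toward the desired state. A controller calls it again
// after every change, so each call only needs to make one step of progress.
func reconcile(desired, observed State) string {
	switch {
	case desired.Exists && !observed.Exists:
		return "provision"
	case !desired.Exists && observed.Exists:
		return "deprovision"
	case desired.Exists && desired.Replicas > observed.Replicas:
		return "scale-up"
	case desired.Exists && desired.Replicas < observed.Replicas:
		return "scale-down"
	default:
		return "none"
	}
}

// apply simulates the effect of an action on the observed state.
func apply(action string, observed State) State {
	switch action {
	case "provision":
		return State{Exists: true, Replicas: 1}
	case "deprovision":
		return State{}
	case "scale-up":
		observed.Replicas++
	case "scale-down":
		observed.Replicas--
	}
	return observed
}

func main() {
	desired := State{Exists: true, Replicas: 3}
	observed := State{}
	// Keep reconciling until the observed state matches the desired state.
	for {
		action := reconcile(desired, observed)
		fmt.Println("action:", action)
		if action == "none" {
			break
		}
		observed = apply(action, observed)
	}
}
```

The next action is chosen from the full observed state rather than from individual events. As a result, a missed or duplicated event does not leave the managed system in the wrong state.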

The Operator Framework is a collection of open-source tools that speed up the development of operators. It includes the following tools:

- The Operator SDK, which lets developers build operators without needing a thorough understanding of the Kubernetes API.
- The Operator Lifecycle Manager (OLM), which handles the installation and management of operators.
- Operator Metering, which provides usage reporting for specialized services.

Nutanix Database Service

Database-as-a-Service Across On-Premises and Public Clouds

Nutanix Database Service (NDB) simplifies database management and accelerates software development across multiple cloud platforms. It streamlines essential but routine database administration tasks while preserving control and flexibility. This makes database provisioning fast, easy, and secure, which supports application development.

- Secure, consistent database operations: Automate database administration tasks so that operational and security best practices are applied consistently across your entire database infrastructure.

- Accelerate software development: Let developers deploy databases from their development environments with a few clicks or commands, which supports agile software development.

- Free up DBAs to focus on higher-value activities: By automating routine administrative tasks, database administrators (DBAs) can spend more of their time on valuable work, such as improving database performance and delivering new features to developers.

- Retain control and maintain database standards: Choose the operating systems, database versions, and database extensions that match the needs of your applications and your compliance standards.

- Database Lifecycle Management: Efficiently oversee the complete lifecycle of your databases, covering provisioning, scaling, patching, and cloning for SQL Server, Oracle, PostgreSQL, MySQL, and MongoDB databases.

- Scalable Database Management: Effectively handle databases at scale, spanning from hundreds to thousands, regardless of whether they are located on-premises, in one or multiple public cloud environments, or within colocation facilities. All of this can be managed from a unified API and console.

- Self-Service Database Provisioning: Facilitate self-service database provisioning for both development/testing and production purposes by seamlessly integrating with popular infrastructure management and development tools like Kubernetes and ServiceNow.

- Database Security: Rapidly deploy security updates across your databases and enforce access restrictions through role-based access controls to ensure compliance, whether for some or all of your database instances.

NDB Kubernetes Operator

The NDB Operator is a Kubernetes operator that aims to simplify setting up and maintaining databases from within Kubernetes clusters. A Kubernetes operator is an application that carries operational knowledge about another application. Once it is deployed in a Kubernetes cluster, it monitors the resources it is responsible for and modifies the managed application as needed. With the NDB Operator, databases on NDB can be deployed, managed, and modified with minimal human intervention.

Using their K8s cluster, developers can now provision PostgreSQL, MySQL, and MongoDB databases directly, which saves them days or even weeks of work. They get NDB's complete database lifecycle management while running the open-source NDB Operator on their preferred K8s platform.

Problem Statement

The problem statement requires us to extend the NDB Operator's capabilities to support Postgres HA (High Availability). NDB already supports Postgres High Availability databases, but the NDB Operator cannot yet manage them. Our task is to identify which additions the project needs in order to support Postgres HA and then implement them. We will also perform end-to-end testing of the provisioning and deprovisioning processes to ensure that they work correctly.

Postgres HA covers the measures that keep a PostgreSQL database system operational and accessible despite hardware failures, software issues, or other disruptions. These measures include replication, failover, and load balancing.
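Failover is the step that keeps an HA cluster writable when the primary fails. The simplified Go sketch below shows one common policy:

1. Keep the primary if it is healthy.
2. Otherwise, promote the healthy secondary with the smallest replication lag, so the least data is lost.

The `Node` type, the role strings, and the lag figures are hypothetical. Production Postgres HA setups also handle fencing of the old primary and cluster consensus, both of which this sketch omits.

```go
package main

import (
	"errors"
	"fmt"
)

// Node is a simplified view of one database server in an HA cluster.
type Node struct {
	Name     string
	Role     string // "primary" or "secondary"
	Healthy  bool
	LagBytes int64 // how far this node's copy of the data trails the primary
}

// failover keeps a healthy primary if one exists. Otherwise it promotes the
// healthy secondary with the smallest replication lag and demotes the failed
// primary. It returns the name of the (possibly unchanged) primary.
func failover(nodes []Node) (string, error) {
	best := -1
	for i, n := range nodes {
		if n.Role == "primary" && n.Healthy {
			return n.Name, nil // nothing to do
		}
		if n.Role == "secondary" && n.Healthy && (best == -1 || n.LagBytes < nodes[best].LagBytes) {
			best = i
		}
	}
	if best == -1 {
		return "", errors.New("no healthy secondary available to promote")
	}
	for i := range nodes {
		if nodes[i].Role == "primary" {
			nodes[i].Role = "secondary" // rejoins as a replica once it recovers
		}
	}
	nodes[best].Role = "primary"
	return nodes[best].Name, nil
}

func main() {
	nodes := []Node{
		{Name: "pg-1", Role: "primary", Healthy: false},
		{Name: "pg-2", Role: "secondary", Healthy: true, LagBytes: 4096},
		{Name: "pg-3", Role: "secondary", Healthy: true, LagBytes: 512},
	}
	primary, err := failover(nodes)
	if err != nil {
		fmt.Println("failover failed:", err)
		return
	}
	fmt.Println("new primary:", primary)
}
```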

Approach

- To start, a hardcoded file containing a basic configuration for Postgres HA can be created.

- The hardcoded file will contain details such as databaseInstanceName, description, clusterId, credentialSecret, and size.

- Starting from ndb_api, changes can be made to integrate Postgres HA support.

- The changes will flow from ndb_api -> controller adapters -> controllers -> api.

- In ndb_api, another appendRequest method for Postgres HA needs to be added to db_helpers.go. This method will supply the additional specifications for this database type.

- Within api, the Instance struct needs to be updated to include parameters such as the number of copies of the instance and how often data is copied from the primary.
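The following is a minimal sketch of the hardcoded configuration and an extended `Instance` struct, assuming the configuration is stored as JSON. The first five fields use the names listed above.

The other three fields are hypothetical. `isHighAvailability` and `nodeCount` stand in for "copies of the instance", and `replicationMode` stands in for "how often data is copied". Every value is a placeholder.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// Instance holds the fields listed for the hardcoded config. The HA fields at
// the end are hypothetical names, not the project's actual struct fields.
type Instance struct {
	DatabaseInstanceName string `json:"databaseInstanceName"`
	Description          string `json:"description"`
	ClusterID            string `json:"clusterId"`
	CredentialSecret     string `json:"credentialSecret"`
	Size                 int    `json:"size"`

	IsHighAvailability bool   `json:"isHighAvailability,omitempty"`
	NodeCount          int    `json:"nodeCount,omitempty"`       // copies of the instance
	ReplicationMode    string `json:"replicationMode,omitempty"` // e.g. "synchronous" or "asynchronous"
}

// hardcodedConfig stands in for the hardcoded Postgres HA config file.
const hardcodedConfig = `{
  "databaseInstanceName": "pg-ha-demo",
  "description": "Postgres HA test instance",
  "clusterId": "<cluster-uuid>",
  "credentialSecret": "db-instance-secret",
  "size": 10,
  "isHighAvailability": true,
  "nodeCount": 3,
  "replicationMode": "synchronous"
}`

// loadInstance parses the config and performs a basic sanity check: an HA
// instance needs at least one primary and one secondary node.
func loadInstance(raw string) (Instance, error) {
	var inst Instance
	if err := json.Unmarshal([]byte(raw), &inst); err != nil {
		return Instance{}, err
	}
	if inst.IsHighAvailability && inst.NodeCount < 2 {
		return Instance{}, errors.New("an HA instance needs at least 2 nodes")
	}
	return inst, nil
}

func main() {
	inst, err := loadInstance(hardcodedConfig)
	if err != nil {
		fmt.Println("invalid config:", err)
		return
	}
	fmt.Printf("%s: %d nodes, %s replication\n", inst.DatabaseInstanceName, inst.NodeCount, inst.ReplicationMode)
}
```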

To understand what changes are needed, we first have to define the data NDB requires to provision an HA database. We obtained this from the API equivalent provided by Nutanix Test Drive. The API equivalent contains the configuration of the database to be provisioned on NDB, expressed as a JSON object of key-value parameters.
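Because the API equivalent is plain JSON, it can be loaded with Go's `encoding/json` package for inspection. This sketch makes two assumptions about the format: `actionArguments` is a list of `{"name": ..., "value": ...}` objects, and `databaseType` is a top-level key. The argument names and values shown are illustrative.

`json.Unmarshal` ignores keys that are not declared in the struct, so the struct only needs the parts being examined.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ActionArgument is one entry in the (assumed) actionArguments list.
type ActionArgument struct {
	Name  string `json:"name"`
	Value string `json:"value"`
}

// APIEquivalent keeps only the parts of the exported request used here.
type APIEquivalent struct {
	DatabaseType    string           `json:"databaseType"`
	ActionArguments []ActionArgument `json:"actionArguments"`
}

// argumentMap decodes an API equivalent and flattens its actionArguments
// into a name -> value map for easy lookup and comparison.
func argumentMap(raw []byte) (map[string]string, error) {
	var req APIEquivalent
	if err := json.Unmarshal(raw, &req); err != nil {
		return nil, err
	}
	args := make(map[string]string, len(req.ActionArguments))
	for _, a := range req.ActionArguments {
		args[a.Name] = a.Value
	}
	return args, nil
}

func main() {
	// Trimmed, hypothetical API equivalent; a real export has many more keys.
	raw := []byte(`{
  "databaseType": "postgres_database",
  "actionArguments": [
    {"name": "listener_port", "value": "5432"},
    {"name": "failover_mode", "value": "Automatic"}
  ]
}`)
	args, err := argumentMap(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println("listener_port =", args["listener_port"])
	fmt.Println("failover_mode =", args["failover_mode"])
}
```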

To identify the new parameters, we compared the API equivalents of a standard Postgres instance and a Postgres HA instance. The differences are as follows:

The actionArguments key-value pair contains more parameters for Postgres HA. Properties of the HA instance, such as failover modes and the backup policy, are defined here.

The HA API equivalent contains a new key-value pair for nodes. It specifies which database node holds the primary status, making it the target for all read-write operations. The other nodes are secondaries, which copy data from the primary database. Failover strategies are also recorded here.
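The comparison described above amounts to a set difference on argument names. The sketch below reports the names that appear in the HA request but not in the single-instance request. The sample names are hypothetical and are not copied from the actual API equivalents.

```go
package main

import (
	"fmt"
	"sort"
)

// newArguments returns, in sorted order, the argument names present in the
// HA request but absent from the single-instance request.
func newArguments(single, ha []string) []string {
	seen := make(map[string]bool, len(single))
	for _, name := range single {
		seen[name] = true
	}
	var added []string
	for _, name := range ha {
		if !seen[name] {
			added = append(added, name)
			seen[name] = true // report each new name only once
		}
	}
	sort.Strings(added)
	return added
}

func main() {
	// Hypothetical argument names for illustration only.
	single := []string{"listener_port", "database_size", "database_names"}
	ha := []string{"listener_port", "database_size", "database_names",
		"failover_mode", "backup_policy", "enable_synchronous_mode"}
	fmt.Println(newArguments(single, ha))
}
```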

With the new parameters identified, the next step is to work out which code changes are needed. To begin, we will create a new main.go file that calls NDB directly to provision a fresh instance.

The db_helpers.go file in the ndb_api directory contains the GenerateProvisioningRequest method, which contacts NDB to provision a new database. The same file contains an appendRequest method for each database type, which adds actionArguments to the JSON request object. A similar method can be written for Postgres HA instances using hardcoded data. In this way we can work backwards through the application flow.
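A Postgres HA appender that uses hardcoded data, as described above, could look something like the simplified sketch below. The following are assumptions for illustration:

- the `ProvisionRequest` and `Node` types;
- the function name `appendPostgresHARequest`;
- the argument names and values (`failover_mode`, `backup_policy`, `enable_synchronous_mode`, `role`).

None of these are the signatures or field names actually used in db_helpers.go.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ActionArgument is one name/value pair in the request.
type ActionArgument struct {
	Name  string `json:"name"`
	Value string `json:"value"`
}

// Node describes one VM in the HA cluster; its properties carry the role.
type Node struct {
	VMName     string           `json:"vmName"`
	Properties []ActionArgument `json:"properties"`
}

// ProvisionRequest is a trimmed-down stand-in for a provisioning request body.
type ProvisionRequest struct {
	DatabaseType    string           `json:"databaseType"`
	ActionArguments []ActionArgument `json:"actionArguments"`
	Nodes           []Node           `json:"nodes,omitempty"`
}

// appendPostgresHARequest adds hardcoded HA arguments and one node entry per
// VM name. The first VM becomes the primary; the rest become secondaries.
func appendPostgresHARequest(req *ProvisionRequest, vmNames []string) *ProvisionRequest {
	req.ActionArguments = append(req.ActionArguments,
		ActionArgument{Name: "failover_mode", Value: "Automatic"},
		ActionArgument{Name: "backup_policy", Value: "primary_only"},
		ActionArgument{Name: "enable_synchronous_mode", Value: "true"},
	)
	for i, vm := range vmNames {
		role := "Secondary"
		if i == 0 {
			role = "Primary"
		}
		req.Nodes = append(req.Nodes, Node{
			VMName:     vm,
			Properties: []ActionArgument{{Name: "role", Value: role}},
		})
	}
	return req
}

func main() {
	req := &ProvisionRequest{DatabaseType: "postgres_database"}
	appendPostgresHARequest(req, []string{"pg-ha-1", "pg-ha-2", "pg-ha-3"})
	body, err := json.MarshalIndent(req, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(body))
}
```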

The screenshot above shows the new appendProvisioningRequest method created in the db_helpers.go file. Its actionArguments map contains the properties of the HA instance that must be supplied to NDB to provision a database. Similarly, we added an appendCloningRequest method, which builds requests to clone an existing database by copying all of its properties.
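Cloning can be sketched as copying the source database's action arguments into a new request and overriding only the values that must differ. `buildCloneRequest`, its types, and the override mechanism are hypothetical and do not reproduce the project's appendCloningRequest.

The point of the sketch is that the arguments are copied into a fresh slice. Editing the clone therefore cannot modify the source request.

```go
package main

import "fmt"

// ActionArgument is one name/value pair in a request.
type ActionArgument struct {
	Name  string
	Value string
}

// DatabaseRequest is a simplified stand-in for a database's request data.
type DatabaseRequest struct {
	Name            string
	ActionArguments []ActionArgument
}

// buildCloneRequest copies every action argument of the source database into
// a new request, then replaces the values named in overrides.
func buildCloneRequest(source DatabaseRequest, cloneName string, overrides map[string]string) DatabaseRequest {
	clone := DatabaseRequest{
		Name:            cloneName,
		ActionArguments: make([]ActionArgument, len(source.ActionArguments)),
	}
	// copy into a new backing array so changes to the clone never touch the source
	copy(clone.ActionArguments, source.ActionArguments)
	for i, arg := range clone.ActionArguments {
		if v, ok := overrides[arg.Name]; ok {
			clone.ActionArguments[i].Value = v
		}
	}
	return clone
}

func main() {
	source := DatabaseRequest{
		Name: "pg-ha-demo",
		ActionArguments: []ActionArgument{
			{Name: "failover_mode", Value: "Automatic"},
			{Name: "listener_port", Value: "5432"},
		},
	}
	clone := buildCloneRequest(source, "pg-ha-demo-clone", map[string]string{"listener_port": "5433"})
	fmt.Println(clone.Name, clone.ActionArguments)
	fmt.Println(source.Name, source.ActionArguments) // source is unchanged
}
```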

Test Plan

We will first perform manual tests to validate the correctness of the code. After that, we will use test scripts written with Go's standard testing package for automated testing.
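Automated tests with Go's `testing` package are commonly written as table-driven tests. To keep it self-contained, the sketch below runs a small table of cases from `main`. In the project, the same cases would live in a `func TestMissingArguments(t *testing.T)` inside a `_test.go` file and run with `go test`. The validation rule and the required argument names are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// requiredHAArguments lists action arguments a Postgres HA request is assumed
// to need; the names are illustrative, not taken from the NDB API reference.
var requiredHAArguments = []string{"backup_policy", "failover_mode"}

// missingArguments returns, sorted, the required names absent from args.
func missingArguments(args map[string]string) []string {
	var missing []string
	for _, name := range requiredHAArguments {
		if _, ok := args[name]; !ok {
			missing = append(missing, name)
		}
	}
	sort.Strings(missing)
	return missing
}

func main() {
	// Table-driven cases, in the same shape a _test.go file would use.
	cases := []struct {
		name        string
		args        map[string]string
		wantMissing int
	}{
		{"complete request", map[string]string{"backup_policy": "primary_only", "failover_mode": "Automatic"}, 0},
		{"missing failover mode", map[string]string{"backup_policy": "primary_only"}, 1},
		{"empty request", map[string]string{}, 2},
	}
	for _, tc := range cases {
		got := missingArguments(tc.args)
		status := "PASS"
		if len(got) != tc.wantMissing {
			status = "FAIL"
		}
		fmt.Printf("%s: %s (missing %v)\n", status, tc.name, got)
	}
}
```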

References

Relevant Links

Link to GitHub repository: https://github.com/rithvik2607/ndb-operator

Team

Mentor

Nandini Mundra

Student Team

Sai Rithvik Ayithapu (sayitha@ncsu.edu)

Sreehith Yachamaneni (syacham@ncsu.edu)

Rushil Patel (rdpate24@ncsu.edu)