CSC/ECE 517 Fall 2021 - E2168. Testing - Reputations

Revision as of 20:16, 29 November 2021

Project Overview

Introduction

Online peer-review systems are now in common use in higher education. They free the instructor and course staff from having to personally provide all the feedback that students receive on their work. However, if we want to ensure that all students receive competent feedback, or even to use peer-assigned grades, we need a way to judge which peer reviewers are most credible. The solution is to use a reputation system.

The reputation system is meant to give objective value to student-assigned peer-review scores. Students select from a list of tasks to be performed, prepare their work, and submit it to a peer-review system. The work is then reviewed by other students, who offer comments and graded feedback to help the submitters improve their work.

During the peer-review period it is important to determine which reviews are more accurate and of higher quality. Reputation is one way to achieve this goal; it is a quantitative measure of which peer reviewers are more reliable.

Peer reviewers can use Expertiza to score an author. If Expertiza shows confidence ratings for grades based on each reviewer's reputation, authors can more easily determine the legitimacy of a peer-assigned score. In addition, the teaching staff can examine the quality of each peer review based on reputation values and, potentially, crowd-source a significant portion of the grading function.

Currently, the reputation system is implemented in Expertiza through a web service, but no tests have been written for it. Our goal is therefore to write tests that verify Hamer's and Lauw's algorithms in the reputation system.

System Design

The following is taken from project E1625 and gives an overall description of the reputation system.

There are two algorithms intended for use in calculation of the reputation values for participants.

A web service (its link is accessible only to instructors) serves a JSON response containing the reputation value. The value is computed from a seed, namely the last known reputation value, which we store in the participants table. An instructor can specify which algorithm to use for a particular assignment to calculate the confidence rating.

As the paper on reputation systems observes, “the Hamer-peer algorithm has the lowest maximum absolute bias and the Lauw-peer algorithm has the lowest overall bias. This indicates, from the instructor’s perspective, if there are further assignments of this kind, expert grading may not be necessary.”

- Reputation range of Hamer’s algorithm is

- red value is < 0.5

- yellow value is >= 0.5 and <= 1

- orange value is > 1 and <= 1.5

- light green value is > 1.5 and <= 2

- green value is > 2

The main difference between the Hamer-peer and the Lauw-peer algorithm is that the Lauw-peer algorithm keeps track of the reviewer's leniency (“bias”), which can be either positive or negative. A positive leniency indicates the reviewer tends to give higher scores than average. This project determines reputation by subtracting the absolute value of the leniency from 1. Additionally, the range for Hamer’s algorithm is (0,∞) while for Lauw’s algorithm it is [0,1].
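The leniency-to-reputation rule described above can be sketched in a few lines of plain Ruby. This is only an illustration: the method name `lauw_reputation` is hypothetical and not part of Expertiza, and the sketch assumes the leniency has already been computed and lies in [-1, 1], so that the result stays within [0, 1].

```ruby
# Hypothetical helper (not Expertiza's API): reputation = 1 - |leniency|.
# Assumes leniency is already known and lies in [-1, 1].
def lauw_reputation(leniency)
  1.0 - leniency.abs
end

puts lauw_reputation(0.25)   # lenient reviewer (scores above average)
puts lauw_reputation(-0.25)  # strict reviewer with the same magnitude of bias
puts lauw_reputation(0.0)    # unbiased reviewer
```

A lenient reviewer and an equally strict reviewer receive the same reputation under this rule, because only the magnitude of the bias is subtracted.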

- Reputation range of Lauw’s algorithm is

- red value is < 0.2

- yellow value is >= 0.2 and <= 0.4

- orange value is > 0.4 and <= 0.6

- light green value is > 0.6 and <= 0.8

- green value is > 0.8
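The two range lists above can be expressed as a single lookup, which may be handy when writing expectations for boundary values. This is a minimal sketch: the constant `REPUTATION_BANDS` and the method `reputation_color` are hypothetical names, not taken from Expertiza. The boundaries follow the lists as written, so each upper bound is inclusive and only red uses a strict comparison.

```ruby
# Hypothetical lookup table: upper bounds for red, yellow, orange, light green.
REPUTATION_BANDS = {
  'hamer' => [0.5, 1.0, 1.5, 2.0],
  'lauw'  => [0.2, 0.4, 0.6, 0.8]
}.freeze

# Maps a reputation value to its color band for the given algorithm.
def reputation_color(algorithm, value)
  red_max, yellow_max, orange_max, light_green_max = REPUTATION_BANDS.fetch(algorithm)
  return 'red' if value < red_max              # strictly below the first bound
  return 'yellow' if value <= yellow_max       # upper bounds are inclusive
  return 'orange' if value <= orange_max
  return 'light green' if value <= light_green_max
  'green'
end

puts reputation_color('hamer', 0.5)  # boundary value falls in yellow
puts reputation_color('lauw', 0.9)   # above the last bound, so green
```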

The instructor can choose to show results from Hamer’s algorithm or Lauw’s algorithm. The default algorithm should be Lauw’s algorithm.

Objectives

Our objectives for this project are the following:

- Double and stub an assignment and a few submissions to it, under different review rubrics

- Manually calculate reputation scores based on the paper "Pluggable reputation systems for peer review: A web-service approach"

- Validate the reputation scores produced by reputation management under different review rubrics against the manually computed expectations, across the reputation ranges of Hamer's and Lauw's algorithms, with and without the impact of instructor scores.

Files Involved

- reputation_web_service_controller_spec

Test Plan

Testing Objects

To test reputation, we must first create sample reviews from which a reputation score can be obtained. During the kickoff meeting, our team defined five steps to follow for testing. The objects to create, and how they are configured, are discussed below.

1. Setup Assignment

- Essential Parameters to be configured

submitter_count = 0;

num_reviews = 3;

staggered_deadline = false;

rounds_of_reviews = 2;

reputation_algorithm = lauw/hamer;

Note: We will create multiple two-round review assignment objects, with 3 reviews required per student.

@assignment_1 = create(:assignment, created_at: DateTime.now.in_time_zone - 13.day, submitter_count: 0, num_reviews: 5, num_reviewers: 5, num_reviews_allowed: 5, rounds_of_reviews: 2, reputation_algorithm: 'lauw', id: 1)

@assignment_2 = create(:assignment, created_at: DateTime.now.in_time_zone - 13.day, submitter_count: 0, num_reviews: 5, num_reviewers: 5, num_reviews_allowed: 5, rounds_of_reviews: 2, reputation_algorithm: 'hamer', id: 2)

2. Submission to Assignment

- Essential Parameters to be configured

content = website_link;

Note: We will have to check how the system determines that a submission exists. The initial thought is simply to submit a website link. An alternative might be to check the directory number.

3. Setup Questionnaires (Rubrics)

- Essential Parameters to be configured

instructor_id = from_fixture;

min_question_score = 0;

max_question_score = 5;

type = ReviewQuestionnaire;

Note: We will define the assignment with ReviewQuestionnaire type rubric.

@questionnaire_1 = create(:questionnaire, min_question_score: 0, max_question_score: 5, type: 'ReviewQuestionnaire', id: 1)

@assignment_questionnaire_1_1 = create(:assignment_questionnaire, assignment_id: @assignment_1.id, questionnaire_id: @questionnaire_1.id, used_in_round: 1)

@assignment_questionnaire_1_2 = create(:assignment_questionnaire, assignment_id: @assignment_1.id, questionnaire_id: @questionnaire_1.id, used_in_round: 2)

@assignment_questionnaire_2_1 = create(:assignment_questionnaire, assignment_id: @assignment_2.id, questionnaire_id: @questionnaire_1.id, used_in_round: 1)

@assignment_questionnaire_2_2 = create(:assignment_questionnaire, assignment_id: @assignment_2.id, questionnaire_id: @questionnaire_1.id, used_in_round: 2, id: 4)

3. Setup Questions under Questionnaires

@question_1_1 = create(:question, questionnaire_id: @questionnaire_1.id, id: 1)

@question_1_2 = create(:question, questionnaire_id: @questionnaire_1.id, id: 2)

@question_1_3 = create(:question, questionnaire_id: @questionnaire_1.id, id: 3)

@question_1_4 = create(:question, questionnaire_id: @questionnaire_1.id, id: 4)

@question_1_5 = create(:question, questionnaire_id: @questionnaire_1.id, id: 5)

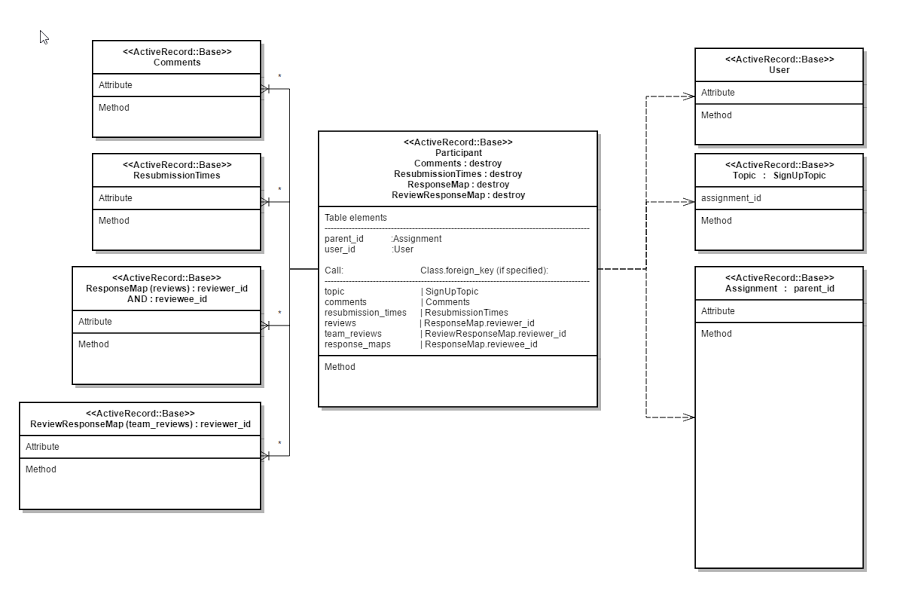

4. Setup response map

- Essential Parameters to be configured

reviewed_object_id = assignment_id;

reviewer_id = participants;

reviewee_id = AssignmentTeam;

Note: We will set up response maps to determine the relationship between the reviewer and the reviewee of an assignment.

Constructing each object correctly is a prerequisite for the reputation tests that follow. Some fields in each object can be empty or keep their default values, and some attributes are not relevant to the test. The test scripts need to generate or set fixed values for the fields that matter.

Relevant Methods

reputation_web_service_controller_spec

- db_query

This is the standard database query method. Calling it returns the peer-review grades for a given assignment id. We will test this method in two respects: 1. whether the returned grade is right for the specified algorithm; 2. whether the query itself is correct.

context 'test db_query' do

it 'return average score' do

create(:answer, question_id: @question_1_1.id, response_id: @response_1_1.id, answer: 1)

create(:answer, question_id: @question_1_2.id, response_id: @response_1_1.id, answer: 2)

create(:answer, question_id: @question_1_3.id, response_id: @response_1_1.id, answer: 3)

create(:answer, question_id: @question_1_4.id, response_id: @response_1_1.id, answer: 4)

create(:answer, question_id: @question_1_5.id, response_id: @response_1_1.id, answer: 5)

result = ReputationWebServiceController.new.db_query(1, 1, false)

expect(result).to eq([[2, 1, 60.0]])

end

end
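The expected score of 60.0 in the test above can be checked by hand. The sketch below assumes that the score is the total of the answers expressed as a percentage of the maximum possible total, which is consistent with five answers of 1 to 5 on a rubric whose max_question_score is 5. The method name `review_percentage` is hypothetical and is not Expertiza's implementation.

```ruby
# Hypothetical helper: answer total as a percentage of the maximum possible total.
# Assumption: every question has the same maximum score and equal weight.
def review_percentage(answers, max_question_score)
  100.0 * answers.sum / (answers.size * max_question_score)
end

# Answers 1..5 sum to 15 out of a possible 5 * 5 = 25.
puts review_percentage([1, 2, 3, 4, 5], 5)  # prints 60.0
```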

- db_query_with_quiz_score

This is the special database query. Calling it returns the quiz scores for a given assignment id. We will test it with the same logic as db_query.

- json_generator

This method generates the hash representation of the reviews. We will test it by calling it, converting the result to JSON, and printing that to check its correctness.
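As an illustration of the hash-then-JSON check described above, the sketch below groups query rows into a nested hash and serializes it. The row layout (reviewer id, reviewee id, score), the key prefixes `submission` and `stu`, and the method name `build_review_hash` are all assumptions made for this example; the real json_generator may structure its output differently.

```ruby
require 'json'

# Hypothetical helper: groups rows of [reviewer_id, reviewee_id, score]
# into { "submission<reviewee>" => { "stu<reviewer>" => score } }.
def build_review_hash(rows)
  rows.each_with_object({}) do |(reviewer_id, reviewee_id, score), body|
    (body["submission#{reviewee_id}"] ||= {})["stu#{reviewer_id}"] = score
  end
end

body = build_review_hash([[2, 1, 60.0], [3, 1, 80.0]])
puts JSON.generate(body)
```

A spec could then compare the parsed JSON against a hand-written hash rather than inspecting printed output.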

- client

The client method fills many instance variables from the corresponding class variables. We need to test this method together with send_post_request.

- send_post_request

This method sends a POST request to peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms to calculate the reputation result, shows the result in the corresponding UI, and updates the given reviewer's reputation. We will test this method against the algorithm in the paper: first the resulting reputation value, then the updated value in the database.

context 'test send_post_request' do

it 'failed because of no public key file' do

# reviewer_1's review for reviewee_1: [5, 5, 5, 5, 5]

create(:answer, question_id: @question_1_1.id, response_id: @response_1_1.id, answer: 5)

create(:answer, question_id: @question_1_2.id, response_id: @response_1_1.id, answer: 5)

create(:answer, question_id: @question_1_3.id, response_id: @response_1_1.id, answer: 5)

create(:answer, question_id: @question_1_4.id, response_id: @response_1_1.id, answer: 5)

create(:answer, question_id: @question_1_5.id, response_id: @response_1_1.id, answer: 5)

params = {assignment_id: 1, round_num: 1, algorithm: 'hammer', checkbox: {expert_grade: "empty"}}

session = {user: build(:instructor, id: 1)}

expect(true).to eq(true)

# commented out because send_post_request requires a public key file, which is missing

# so send_post_request does not currently function normally

# get :send_post_request, params, session

# expect(response).to redirect_to '/reputation_web_service/client'

end

end
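Until the public key file is available, the shape of the outgoing request can still be sketched outside the controller. The example below only builds a POST request with Ruby's standard Net::HTTP and does not send it. It assumes plain HTTP and an unencrypted JSON body, so the step that needs the public key is deliberately omitted. The method name `build_reputation_request` and the sample payload are hypothetical.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Hypothetical helper: builds (but does not send) a JSON POST request.
# Assumption: plain HTTP and no encryption; the real method may differ.
def build_reputation_request(url, payload)
  uri = URI.parse(url)
  request = Net::HTTP::Post.new(uri.path, 'Content-Type' => 'application/json')
  request.body = JSON.generate(payload)
  [uri, request]
end

uri, request = build_reputation_request(
  'http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms',
  { 'submission1' => { 'stu2' => 60.0 } }
)
# Sending would look like:
#   Net::HTTP.start(uri.host, uri.port) { |http| http.request(request) }
puts request.method
puts request.body
```

Building the request separately from sending it keeps the payload checkable in a spec without any network access.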

Results

Collaborators

Jinku Cui (jcui23)

Henry Chen (hchen34)

Dong Li (dli35)

Zijun Lu (zlu5)