CSC/ECE 517 Spring 2024 - NTNX-1 : Extend NDB Operator to Support Postgres HA

Problem Background

High Availability (HA)

High Availability (HA) is a design principle aimed at ensuring that a system remains operational with minimal downtime, even in the face of failures. HA systems are characterized by redundancy, with backup components ready to take over instantly if the primary ones fail. They feature automatic fault detection and recovery mechanisms to swiftly restore service, often without human intervention. Additionally, a distributed architecture reduces the risk of a single point of failure, enhancing the system's overall resilience. In essence, high availability is about maintaining continuous service and quickly recovering from any disruptions to keep the system running smoothly.

Postgres HA Instance

The Postgres HA Instance is a configuration for PostgreSQL databases designed for high availability. It ensures that the database remains operational and accessible even during hardware failures or software issues. This setup includes database replication, which synchronizes data across multiple servers for quick failover; failover mechanisms to automatically switch to a standby server if the primary fails; and load balancing to evenly distribute the load and prevent any single server from becoming a bottleneck. Managed through HAProxy, the system directs queries to the appropriate server based on current load and server health, maintaining smooth and continuous database operations.

The NDB API already supports Postgres High Availability databases, but provisioning them through the Kubernetes operator is not yet supported.

NDB Operator

The NDB Operator is a Kubernetes tool designed for managing and automating the lifecycle of Nutanix Database Service (NDB) clusters, including MySQL NDB Clusters. It facilitates the setup, scaling, and backup of databases, integrating seamlessly into the Kubernetes environment. By automating these tasks, the NDB Operator simplifies database management, allowing developers to focus on application development rather than database administration. It utilizes tools like Ansible, Jenkins, and Terraform for streamlined database operations, ensuring that databases are efficiently provisioned, scaled, and maintained. The operator enhances security and compliance through features like data encryption and access control, capitalizing on Kubernetes capabilities such as auto-scaling and load balancing. This setup not only improves database management efficiency but also provides a robust framework for applications to reliably connect and interact with databases, ensuring high availability and performance.

Problem Statement

The Nutanix Database Service (NDB) Operator currently supports the provisioning and management of various database systems within Kubernetes environments. However, it lacks specific capabilities for handling PostgreSQL High Availability (HA) instances, which are critical for ensuring database resilience and uptime in production environments. To address this gap, we need to enhance the NDB Operator to fully support the deployment and management of Postgres HA instances.

This project adds HA-related fields to the existing architecture. With the new HA options in place, we will write unit tests and end-to-end tests that cover provisioning and deprovisioning a database.

Existing Architecture

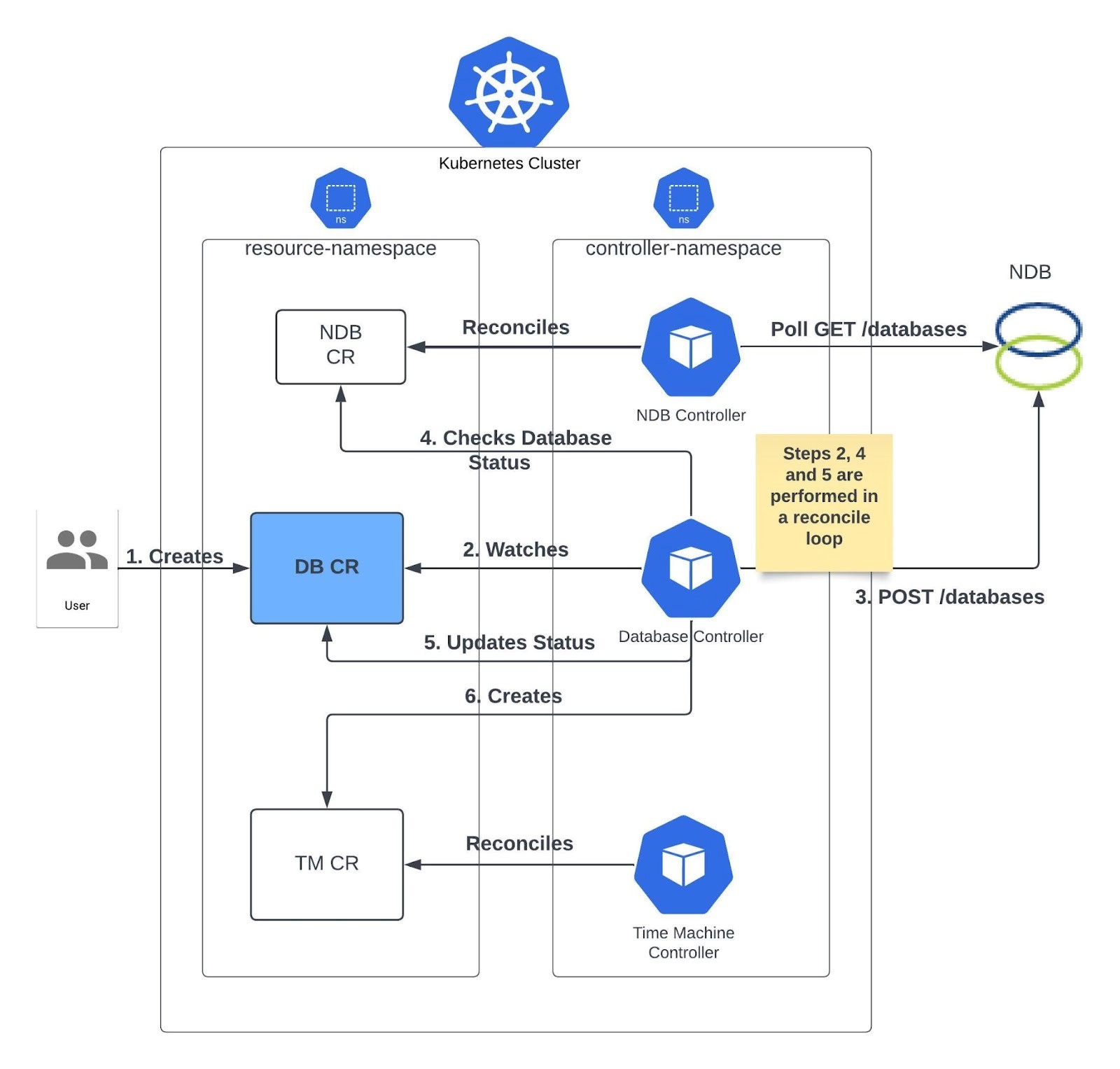

In the current architecture, the NDB Operator watches the Kubernetes cluster for newly created custom resources, each of which represents a database provisioning request. When it detects one, the operator syncs the change with the NDB Server, which records all databases to be provisioned. The operator then reconciles the database/NDB CR and watches its status from then on, continuously adjusting the system's state to match what the custom resource defines. If a user modifies the database CR, the reconcile loop begins again.
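The reconcile loop described above can be pictured with a small, self-contained sketch. It deliberately does not use the real controller-runtime API or the operator's code: the `DatabaseCR` type, the in-memory `fakeNDB` store, and the `reconcile` function are hypothetical names introduced only to illustrate the idea of driving the observed state toward the desired state.

```go
package main

import "fmt"

// DatabaseCR is a hypothetical stand-in for the database custom resource.
type DatabaseCR struct {
	Name string
	Spec string // opaque description of the desired state
}

// fakeNDB is a hypothetical in-memory stand-in for the NDB Server.
// It maps a database name to the spec that was last recorded for it.
type fakeNDB struct {
	databases map[string]string
}

// reconcile compares the desired state (the CR) with the observed state
// (what the fake server has recorded) and closes the gap. It returns a
// short description of the action it took.
func reconcile(cr DatabaseCR, ndb *fakeNDB) string {
	recorded, exists := ndb.databases[cr.Name]
	switch {
	case !exists:
		ndb.databases[cr.Name] = cr.Spec
		return "provisioned"
	case recorded != cr.Spec:
		ndb.databases[cr.Name] = cr.Spec
		return "updated"
	default:
		return "in sync"
	}
}

func main() {
	ndb := &fakeNDB{databases: map[string]string{}}
	cr := DatabaseCR{Name: "db-1", Spec: "v1"}

	fmt.Println(reconcile(cr, ndb)) // first pass registers the database
	fmt.Println(reconcile(cr, ndb)) // second pass finds nothing to do

	cr.Spec = "v2" // the user edits the CR
	fmt.Println(reconcile(cr, ndb)) // the loop runs again and applies the change
}
```

The point of the sketch is that the same function is called on every change, and it is safe to call repeatedly because it only acts when the two states differ.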

This team will expand the NDB Operator to accommodate Postgres HA.

Functional Design

Workflow

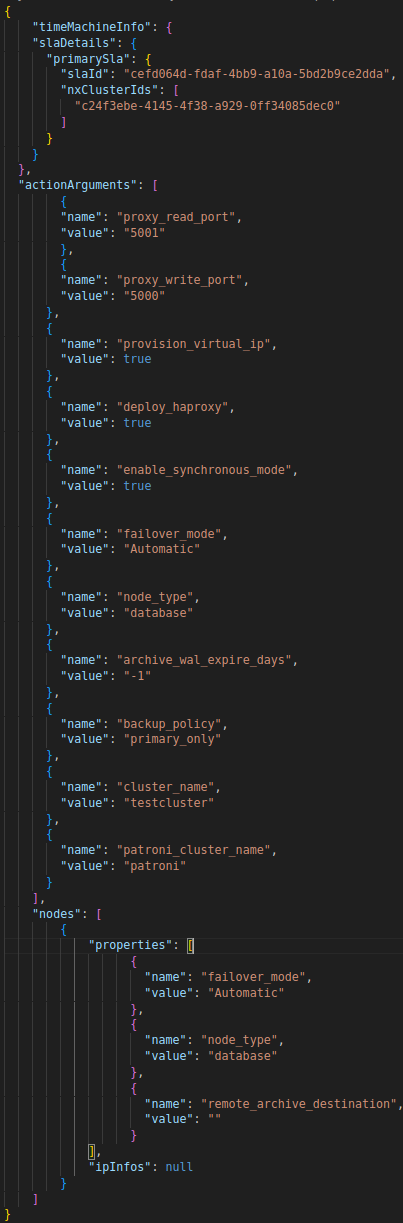

- To start, we compared the API payloads for creating a Postgres database and a Postgres HA database to see which parameters are unique to Postgres HA. The values unique to the Postgres HA payload can be seen here:

- Next, we compared these unique parameters with the pull request from last semester to begin implementing Postgres HA. This told us which parts have been partially or fully implemented and which have not been added at all.

- Comparing the Postgres HA payload with the changes made in the existing PR, we can see that most of the parameters were implemented in a hard-coded fashion. With the past implementation, a user can create a Postgres HA instance by setting the new isHighAvailability parameter in the NDB Custom Resource (referred to later as CR) to true. This provisions a Postgres HA instance with preset values for the various HA options.

- Our implementation will instead move the isHighAvailability field into the database's additionalArguments map. The benefit is that the custom resource does not need an extra field when an HA instance is not wanted.

- Most of the work in our implementation goes into allowing the default values below to be set through optional parameters. The user provides these optional parameters when creating the CR, as key/value pairs inside the additionalArguments section.

- A provided parameter, if valid, overrides the corresponding default value.

- If a parameter is absent, its default value is left unchanged.

- In addition to the additionalArguments, we need the ability to specify individual nodes and their properties. Because the properties can vary between nodes and the number of nodes is variable, additionalArguments, which only supports key/value pairs of the String type, is not a good location for this information. We are adding a new struct named Node, and the manifest used to provision the request will accept an array of these Node structs.

Out of those ActionArguments above, the unimplemented ones are:

    "actionArguments": [
      {
        "name": "provision_virtual_ip",
        "value": true
      },
      {
        "name": "deploy_haproxy",
        "value": true
      },
      {
        "name": "failover_mode",
        "value": "Automatic"
      },
      {
        "name": "archive_wal_expire_days",
        "value": "-1"
      },
      {
        "name": "patroni_cluster_name",
        "value": "patroni"
      }
    ]
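As a rough illustration, the arguments listed above could be represented in Go and serialized back into the same JSON shape as follows. The `ActionArgument` type and the `unimplementedHAArguments` function are assumptions made for this sketch, not the operator's actual definitions. `Value` is typed as `any` only because the payload above mixes booleans and strings.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ActionArgument mirrors one entry of the "actionArguments" array.
// The type and its field names are assumptions made for this sketch.
type ActionArgument struct {
	Name  string `json:"name"`
	Value any    `json:"value"`
}

// unimplementedHAArguments returns the five HA arguments from the payload
// above, with the same values that appear there.
func unimplementedHAArguments() []ActionArgument {
	return []ActionArgument{
		{Name: "provision_virtual_ip", Value: true},
		{Name: "deploy_haproxy", Value: true},
		{Name: "failover_mode", Value: "Automatic"},
		{Name: "archive_wal_expire_days", Value: "-1"},
		{Name: "patroni_cluster_name", Value: "patroni"},
	}
}

func main() {
	payload := map[string][]ActionArgument{
		"actionArguments": unimplementedHAArguments(),
	}
	out, err := json.MarshalIndent(payload, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```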

Implementation

1. Struct Definition and Modification

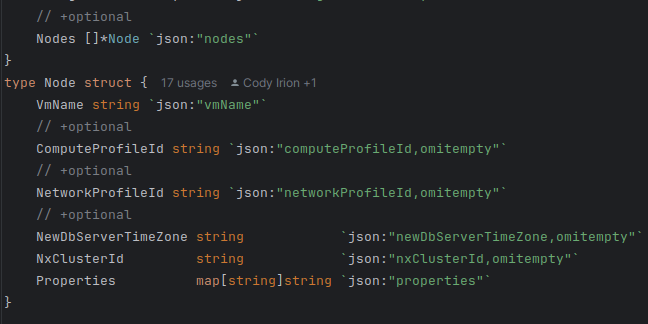

To add the new struct to the database request, a few code changes are needed. The first is to add a new Node struct to the database_type file. This struct holds all the fields and properties that can be specified in the database custom resource (CR). An optional array of these structs will be added to the Instance and Clone structs so that users can specify which nodes to create. If no node specifications are given, a default setup is used. The // +optional line looks like an ordinary comment, but it is the marker that makes a field optional in the CR; without it, an error is thrown when the field is not provided.

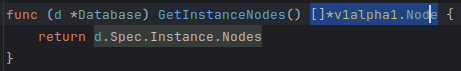
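A minimal sketch of what such a struct could look like is given here. The field names follow the test sample later in this document; the JSON tags, the reduced `Instance` struct, and the `decodeInstance` helper are assumptions for illustration only. Note that in this plain-Go sketch the `// +optional` lines are just comments; they only take effect when the CRD schema is generated from the types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Node describes one VM of the HA cluster. Field names follow the test
// sample in this document; the JSON tags are assumptions.
type Node struct {
	// +optional
	VmName string `json:"vmName,omitempty"`
	// +optional
	ComputeProfileId string `json:"computeProfileId,omitempty"`
	// +optional
	NetworkProfileId string `json:"networkProfileId,omitempty"`
	// +optional
	Properties map[string]string `json:"properties,omitempty"`
}

// Instance is reduced here to the single field relevant to this section.
type Instance struct {
	// +optional
	Nodes []Node `json:"nodes,omitempty"`
}

// decodeInstance is a hypothetical helper that parses a manifest fragment.
func decodeInstance(raw string) (Instance, error) {
	var inst Instance
	err := json.Unmarshal([]byte(raw), &inst)
	return inst, err
}

func main() {
	// Without a "nodes" key the slice stays nil, which the caller can
	// treat as "use the default setup".
	inst, err := decodeInstance(`{}`)
	if err != nil {
		panic(err)
	}
	fmt.Println(inst.Nodes == nil)

	inst, err = decodeInstance(`{"nodes":[{"vmName":"db-1","properties":{"role":"Primary","node_type":"database"}}]}`)
	if err != nil {
		panic(err)
	}
	fmt.Println(inst.Nodes[0].VmName, inst.Nodes[0].Properties["role"])
}
```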

2. Method Addition for Struct Accessibility

Next, to make these new structs available when creating the provisioning request, we need to add a method to the interface.go file and implement it. The interface is implemented in the database.go file, which makes the new Node structs available when we build the provisioning request in the next steps.

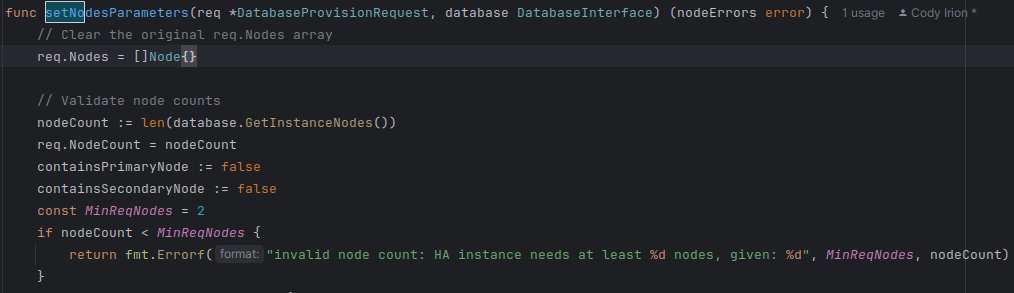
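The pattern described in this step can be sketched as follows. The interface name `DatabaseInterface`, the method name `GetInstanceNodes`, and the reduced `Node` and `Database` types are hypothetical; the sketch only shows why exposing the nodes through an interface method lets the request-building code stay independent of the concrete type.

```go
package main

import "fmt"

// Node is reduced to a single field for this sketch.
type Node struct {
	VmName string
}

// DatabaseInterface is reduced to the one accessor discussed here.
// Both the interface name and the method name are assumptions.
type DatabaseInterface interface {
	GetInstanceNodes() []Node
}

// Database is a hypothetical concrete type that implements the interface.
type Database struct {
	Nodes []Node
}

// GetInstanceNodes exposes the nodes specified in the custom resource.
func (d *Database) GetInstanceNodes() []Node {
	return d.Nodes
}

// nodeNames stands in for request-building code: it depends only on the
// interface, not on the concrete Database type.
func nodeNames(db DatabaseInterface) []string {
	nodes := db.GetInstanceNodes()
	names := make([]string, 0, len(nodes))
	for _, n := range nodes {
		names = append(names, n.VmName)
	}
	return names
}

func main() {
	db := &Database{Nodes: []Node{{VmName: "db-1"}, {VmName: "db-2"}}}
	fmt.Println(nodeNames(db))
}
```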

3. Provisioning Request Construction and Node Validation

Now that the Node information is available, we use it to build the request. This is done by modifying the existing setNodesParameters function in the db_helpers.go file. The function originally had all values hard-coded and could only create a Postgres HA instance with one configuration: 2 HAProxy nodes and 3 database nodes. The new implementation allows the user to specify 2 or more nodes. setNodesParameters will be modified to return Go's built-in error type when setting the node parameters fails. First, the length of the node array is checked to make sure there are at least 2 nodes. There must also be at least one primary and one secondary database node, which is tracked with the containsPrimaryNode and containsSecondaryNode variables. If either is still false after all the nodes have been created, an error is returned.

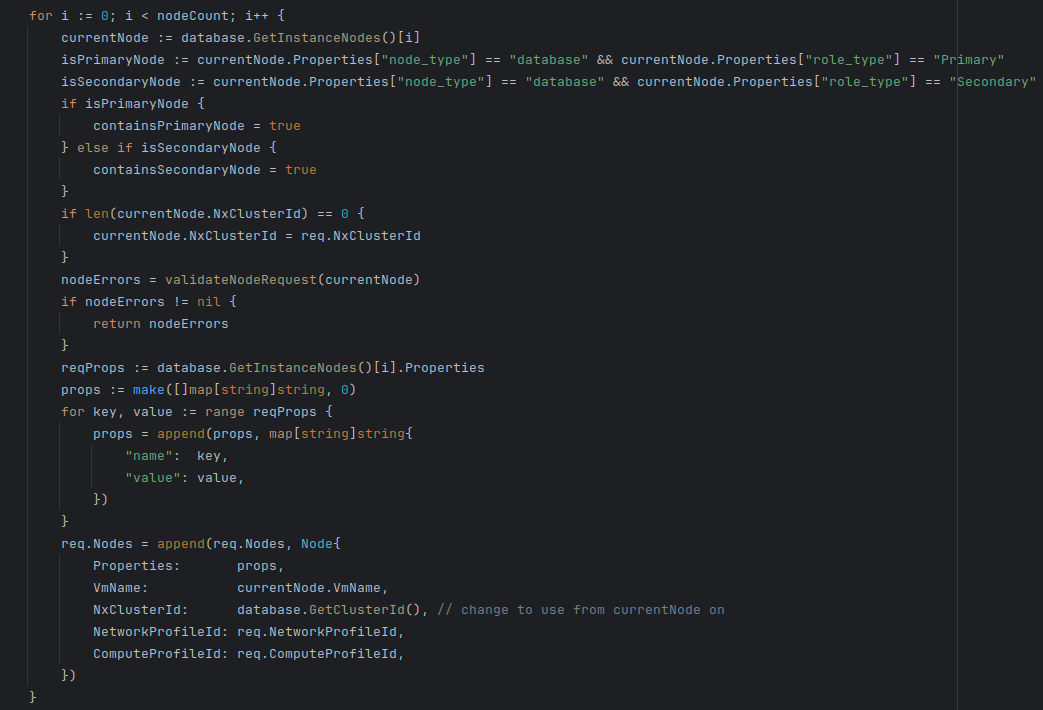

If the number of nodes is valid, the node structs are iterated over. During the iteration, each node's properties are validated using the validateNodeRequest function. If no errors are found, the properties are added to an array of key/value pairs. After all the properties have been added, the remaining node fields are set and the node is added to the request.
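The node-count and role checks from this step can be sketched in isolation. This is not the operator's setNodesParameters function: the `validateNodes` name, the reduced `Node` type, and the property values `"Secondary"` and `"database"` as used here are assumptions (the values `"Primary"` and `"database"` do appear in the test sample later in this document).

```go
package main

import (
	"errors"
	"fmt"
)

// Node is reduced to the fields needed for validation.
type Node struct {
	VmName     string
	Properties map[string]string
}

// validateNodes applies the two checks described above: at least two
// nodes, and at least one primary and one secondary database node.
func validateNodes(nodes []Node) error {
	if len(nodes) < 2 {
		return fmt.Errorf("postgres HA requires at least 2 nodes, got %d", len(nodes))
	}

	containsPrimaryNode, containsSecondaryNode := false, false
	for _, n := range nodes {
		// Only database nodes count toward the primary/secondary check.
		if n.Properties["node_type"] != "database" {
			continue
		}
		switch n.Properties["role"] {
		case "Primary":
			containsPrimaryNode = true
		case "Secondary":
			containsSecondaryNode = true
		}
	}

	if !containsPrimaryNode || !containsSecondaryNode {
		return errors.New("postgres HA requires one primary and one secondary database node")
	}
	return nil
}

func main() {
	nodes := []Node{
		{VmName: "db-1", Properties: map[string]string{"node_type": "database", "role": "Primary"}},
		{VmName: "db-2", Properties: map[string]string{"node_type": "database", "role": "Secondary"}},
	}
	fmt.Println(validateNodes(nodes))     // valid configuration
	fmt.Println(validateNodes(nodes[:1])) // too few nodes
}
```

Returning an error instead of silently falling back to a default keeps a misconfigured manifest from producing a cluster the user did not ask for.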

4. Provisioning Request Arguments Validation

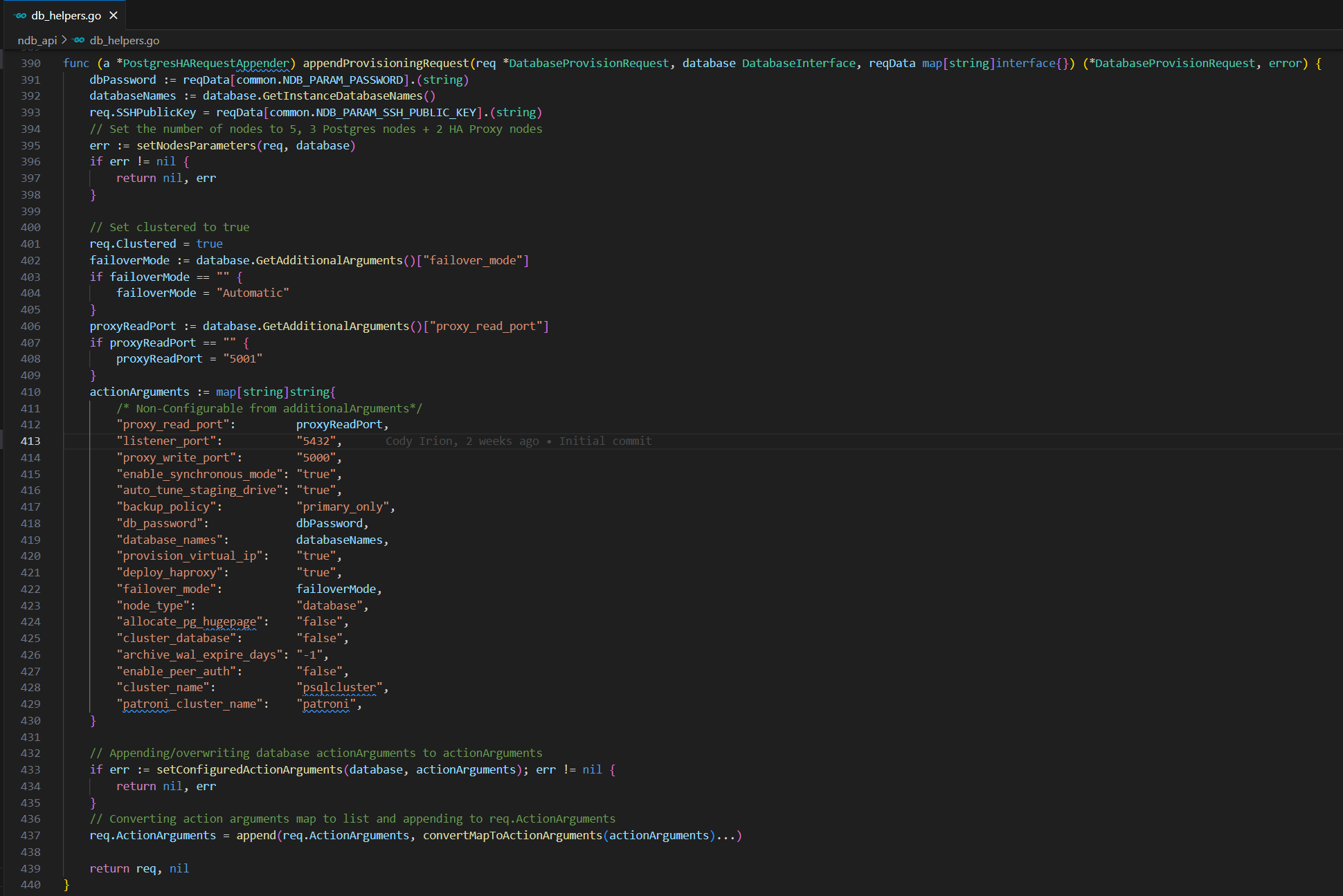

The controller uses the GenerateProvisioningRequest method in ndb-operator/ndb_api/db_helpers.go to generate the provisioning request that is sent to NDB. In the same file, there is an appendRequest method for each database type, which adds the relevant action arguments to the provisioning request. An additional method, appendProvisioningRequest, developed by the previous team specifically for Postgres HA databases, will be used to make the additional modifications. This method populates the provisioning request with the action arguments for Postgres HA databases and incorporates the properties of the nodes that belong to the HA cluster.

This function is responsible for appending provisioning requests for a PostgreSQL database with a high availability setup. It fetches the necessary parameters from the provided data and database interface and constructs the action arguments for the request. It is defined as a method on the type PostgresHARequestAppender. It takes three parameters (req, database, and reqData) and returns a pointer to DatabaseProvisionRequest and an error.

Setting Failover Mode and Proxy Read Port:

- failoverMode: This extracts the failover mode from additional arguments of the database. If not specified, it defaults to "Automatic".

- proxyReadPort: This extracts the proxy read port from additional arguments of the database. If not specified, it defaults to "5001".

The same process is applied to the rest of the arguments: each if statement checks whether the value is empty and, if so, sets a default value.
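The default-if-empty pattern described here can be expressed as a small helper. The helper name `valueOrDefault` and the key `proxy_read_port` are assumptions for this sketch; the defaults `"Automatic"` and `"5001"` are the ones stated above, and `"Manual"` is only an example of a user-supplied value.

```go
package main

import "fmt"

// valueOrDefault returns the user-supplied value for key when it is
// present and non-empty, and defaultValue otherwise.
func valueOrDefault(additionalArguments map[string]string, key, defaultValue string) string {
	if v, ok := additionalArguments[key]; ok && v != "" {
		return v
	}
	return defaultValue
}

func main() {
	// The user overrides the failover mode but says nothing about the port.
	additionalArguments := map[string]string{"failover_mode": "Manual"}

	failoverMode := valueOrDefault(additionalArguments, "failover_mode", "Automatic")
	proxyReadPort := valueOrDefault(additionalArguments, "proxy_read_port", "5001")

	fmt.Println(failoverMode, proxyReadPort)
}
```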

Next, the function creates a map, actionArguments, with the various parameters required for provisioning. It then calls setConfiguredActionArguments to append the database-specific action arguments. If that call fails, the function returns an error.

Once the function has gathered the parameters and applied the defaults, the action argument map holds the configuration details for the PostgreSQL database, such as synchronous mode, backup policies, and cluster names. Database-specific settings are handled by setConfiguredActionArguments, which appends or overwrites individual values. The assembled action arguments are then added to req.ActionArguments as part of the provisioning request.
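The overlay-then-attach flow can be sketched as follows. This is a simplified stand-in, not the operator's setConfiguredActionArguments: the names `overlayConfiguredArguments` and `toActionArgumentList` and the `ActionArgument` type are hypothetical. The list is sorted by name only to make the output of the sketch deterministic.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

// ActionArgument is a hypothetical name/value pair as it would appear in
// the provisioning request.
type ActionArgument struct {
	Name  string
	Value string
}

// overlayConfiguredArguments writes every user-supplied argument into the
// map of defaults, adding new keys and overwriting existing ones.
func overlayConfiguredArguments(additionalArguments, actionArguments map[string]string) error {
	if actionArguments == nil {
		return errors.New("actionArguments map must be initialised")
	}
	for name, value := range additionalArguments {
		actionArguments[name] = value
	}
	return nil
}

// toActionArgumentList converts the map into a slice sorted by name.
func toActionArgumentList(actionArguments map[string]string) []ActionArgument {
	names := make([]string, 0, len(actionArguments))
	for name := range actionArguments {
		names = append(names, name)
	}
	sort.Strings(names)

	list := make([]ActionArgument, 0, len(names))
	for _, name := range names {
		list = append(list, ActionArgument{Name: name, Value: actionArguments[name]})
	}
	return list
}

func main() {
	// Defaults taken from the payload shown earlier in this document.
	actionArguments := map[string]string{
		"failover_mode":        "Automatic",
		"patroni_cluster_name": "patroni",
	}
	userArguments := map[string]string{"patroni_cluster_name": "my-cluster"}

	if err := overlayConfiguredArguments(userArguments, actionArguments); err != nil {
		panic(err)
	}
	fmt.Println(toActionArgumentList(actionArguments))
}
```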

Test Plan

Test Description

We are integrating a test case into the existing end-to-end tests. These can be found in the repository at the following link. The structure of our tests is similar to that of the tests for the standard Postgres instance.

https://github.com/nutanix-cloud-native/ndb-operator/tree/main/automation/tests/provisioning

This test suite validates the full PostgreSQL provisioning process, from request initiation to deployment and operation. It simulates real-world scenarios to ensure the service handles configuration changes, maintains data persistence, and recovers from failures effectively.

Additionally, if the PostgreSQL end-to-end tests are successful, we may proceed with tests for the future architecture covering the following: the Node structure, accessibility methods, provisioning request construction, argument validation, and the overall workflow of HA provisioning.

Test Code Sample

Test Node struct addition in database_type file:

Test case: when adding a new Node struct

Test case: verifies that all fields can be set and accessed correctly, including optional fields

Code Sample:

    import (
        "testing"

        "github.com/stretchr/testify/assert"

        // Import path assumed from the repository URL.
        "github.com/nutanix-cloud-native/ndb-operator/ndb_api"
    )

    // TestNodeStructCreation checks that every field of the Node struct,
    // including the optional ones, can be set and read back.
    func TestNodeStructCreation(t *testing.T) {
        node := ndb_api.Node{
            VmName:           "test-vm",
            ComputeProfileId: "compute-profile-1",
            NetworkProfileId: "network-profile-1",
            Properties: map[string]string{
                "role":      "Primary",
                "node_type": "database",
            },
        }

        assert.Equal(t, "test-vm", node.VmName)
        assert.Equal(t, "compute-profile-1", node.ComputeProfileId)
        assert.Equal(t, "network-profile-1", node.NetworkProfileId)
        assert.Equal(t, "Primary", node.Properties["role"])
        assert.Equal(t, "database", node.Properties["node_type"])
    }

Test Plan

Test Node struct addition in database_type file:

- Test case: when adding a new Node struct

- Test case: verifies that all fields can be set and accessed correctly, including optional fields

Test method for Node struct accessibility:

- Test case: when accessing Node struct through the new interface method

- Test case: ensures that the Node struct is accessible and correctly integrated into the provisioning process

Test provisioning request construction and node validation:

- Test case: when constructing a provisioning request with an invalid node count

- Test case: verifies that the request fails when the node count is below the required minimum for HA

- Test case: when constructing a provisioning request with a valid node count and configuration

- Test case: verifies that the request succeeds and the nodes are correctly configured

Test provisioning request arguments validation:

- Test case: when appending action arguments for an HA provisioning request

- Test case: ensures that HA-specific action arguments are correctly constructed and included in the request

- Test case: when handling default and overridden parameters in the provisioning request

- Test case: verifies that default values are correctly applied and can be overridden by user-specified values

Test performance and stability of the HA setup:

- Test case: when measuring the performance of the HA provisioning process

- Test case: verifies that the performance meets the expected benchmarks

- Test case: when testing resilience and fault tolerance of the HA setup

- Test case: ensures that the HA system maintains high availability standards under various failure scenarios

References and Repository

https://github.com/nutanix-cloud-native/ndb-operator

https://sdk.operatorframework.io/docs/building-operators/golang/tutorial/

https://docs.google.com/document/d/11Z-AAB7O-Fy0tRo1X7qI5_JfRcwcZuuhpGY0tKoFp0U/edit?usp=sharing

https://www.nutanix.com/what-we-do

Repo

According to the comments we received from project 3, "There is a good introduction at the start, but The working of the code should be described in prose." Our code is described in the pull request linked below, and it is also mentioned in the following document.

https://github.com/dvrohan/ndb-operator

Video

Provisioning Postgres HA Instances

Team

Mentor

Kartiki Bhandakkar <kbhanda3@ncsu.edu>

Student

Cody Irion

Zhi Zhang

Justin Orringer

Kandarpkumar Patel