CSC/ECE 517 Spring 2024 - E2407 Refactor review mapping controller.rb

This wiki page describes changes made under the E2407 OODD assignment for Spring 2024, CSC/ECE 517.

Expertiza Background

Ruby on Rails is used in the development of the open-source project Expertiza. The NC State staff and students are in charge of maintaining this online application. With this program, the teacher has total control over the tasks in their class. Expertiza is a feature-rich program that can manage any kind of assignment, with features like peer reviews, group creation, and subject addition. Go to the Expertiza wiki to find out more about all of the features that Expertiza offers.

About Controller

review_mapping_controller function is to map the reviewer to an assignment. In essence, the controller manages the distribution of reviews among various groups or individual student users, addressing situations such as peer and self-evaluations. Furthermore, the controller is essential in responding to student users' requests and enabling additional bonus reviews that comply with the assignment criteria.

Functionality of review_mapping_controller

The review_mapping_controller serves as a critical component within a system designed to manage the assignment mapping and allocation of reviewers for various types of assessments, such as peer reviews and self-assessments. Its primary function revolves around orchestrating the distribution of these reviews, whether they are assigned to multiple teams or to individual student users. This entails a sophisticated algorithmic process that takes into account factors such as fairness, diversity of perspectives, and adherence to assignment guidelines. By controlling the assignment of reviews, the controller ensures that each participant receives a balanced and constructive evaluation of their work, contributing to the overall integrity and effectiveness of the assessment process.

Furthermore, the review_mapping_controller plays a pivotal role in handling student requests for additional bonus assessments. These requests may arise due to various reasons such as a desire for more feedback, a pursuit of extra credit, or a need for reassessment. In responding to such requests, the controller must maintain alignment with the established guidelines and constraints of the assignments. This involves evaluating the feasibility of accommodating extra assessments without compromising the integrity or fairness of the evaluation process. Additionally, the controller may need to consider resource constraints, such as the availability of reviewers and the workload distribution among them.

Problem Statement

The review_mapping_controller presents a challenge due to its length, complexity, and sparse comments, making it difficult for developers to grasp its functionality efficiently. To address this, the controller should undergo a comprehensive refactoring process aimed at breaking down lengthy methods into smaller, more manageable pieces. This entails decomposing intricate logic into modular components, each responsible for a specific task or subtask within the overall functionality. Moreover, the refactoring effort should target instances of code duplication, consolidating repeated code segments into reusable functions or utility methods to enhance maintainability and reduce the risk of errors. By systematically restructuring the controller codebase and improving its documentation, developers can gain a clearer understanding of its inner workings, facilitating easier maintenance, debugging, and future enhancements.

Tasks

1. Refactor the long methods in review_mapping_controller.rb like assign_reviewer_dynamically, add_reviewer, automatic_review_mapping, peer_review_strategy, etc.

2. Rename variable names to convey what they are used for.

3. Replace switch statements with subclasses methods.

4. Create models for the subclasses.

5. Remove hardcoded parameters.

6. Add meaningful comments and edit/remove/do not unnecessary comments.

7. Try to increase the test coverage.

Phase 1

For Phase 1 of the project we have focused working on the below mentioned issues.

1. Refactor assign_reviewer_dynamically function

2. Corresponding changes to the tests for assign_reviewer_dynamically

3. Refactor add_reviewer function

4. Corresponding changes to the tests for add_reviewer

5. Correct comments and add additional comments

6. Methods are named descriptively to indicate their purpose

7. Fixed Code climate issues

Phase 2

For Phase 2 of the project we plan working on the below mentioned issues.

1. Refactor automatic_review_mapping function

2. Refactor peer_review_strategy function

3. Replace switch statements with subclasses methods

4. Increase the test coverage

5. Remove hardcoded parameters

6. Create models for the subclasses

Implementation

The changes made to the review_mapping_controller method and related methods were necessary to improve the code's readability, maintainability, and adherence to best practices. Let's discuss each change and its significance:

Method Extraction:

Original Code: The methoda performed multiple tasks within a single method, resulting in a lengthy and less maintainable codebase.

Updated Code: The method was refactored to focus on a single task per method, such as finding a user ID, checking if the user is trying to review their own artifact, assigning a reviewer, etc. This improves code readability and makes it easier to understand and maintain.

Parameter Handling:

Original Code: The original code accessed parameters directly from the params hash, leading to potential confusion and duplication.

Updated Code: Parameters are now assigned to variables with descriptive names, improving code readability and reducing redundancy.

Error Handling:

Original Code: Error handling was minimal and not explicitly defined, potentially leading to unexpected behavior.

Updated Code: Error handling logic is now explicitly defined, with meaningful error messages and proper exception handling. This ensures that errors are handled gracefully, providing better user feedback and debugging capabilities.

Code Reusability:

Original Code: Certain functionalities, such as generating registration URLs and creating review response maps, were repeated multiple times within the method.

Updated Code: These functionalities were extracted into separate methods, promoting code reuse and adhering to the DRY (Don't Repeat Yourself) principle. This improves maintainability and reduces the risk of errors due to code duplication.

Comments and Documentation:

Original Code: Comments were sparse and did not adequately explain the purpose or functionality of the code.

Updated Code: Descriptive comments were added to each method, clearly explaining their purpose and functionality. This improves code understandability for developers and facilitates future maintenance and enhancements.

Phase 1

add_reviewer

The changes made to the `add_reviewer` method can be checked in the commit - https://github.com/NidhayPancholi/expertiza/commit/b325810d67da2a03d3ccb15458926d0049fdb9eb.

The changes are described descriptively below.:

- Refactored the `add_reviewer` method to focus on single tasks per method, enhancing code readability and maintainability.

- Extracted the functionality to find a user's ID by name into a separate method named `find_user_id_by_name`.

- Separated the logic to check if a user is trying to review their own artifact into its own method named `user_trying_to_review_own_artifact?`.

- Abstracted the process of assigning a reviewer to the assignment into a method named `assign_reviewer`.

- Created a method named `registration_url` to generate the registration URL for the assignment based on provided parameters.

- Divided the code to create a review response map into a separate method named `create_review_response_map`.

- Extracted the logic to redirect to the list mappings page after adding the reviewer into its own method named `redirect_to_list_mappings`.

- Added descriptive comments to each method to explain its purpose and functionality clearly.

These changes were necessary to address several issues present in the original codebase, ultimately improving its quality, maintainability, and functionality. Firstly, the original code suffered from poor organization and readability due to the presence of lengthy methods that attempted to handle multiple tasks at once. By refactoring these methods into smaller, focused functions, we enhance code clarity and make it easier for developers to understand and modify the codebase in the future. This modular approach also promotes code reusability, as individual functions can be easily utilized in different contexts without repetition.

Secondly, the original code lacked proper error handling and documentation, making it challenging to diagnose and troubleshoot issues. By implementing explicit error handling mechanisms and adding descriptive comments to each method, we enhance the code's robustness and facilitate easier debugging. Clear documentation also aids in onboarding new developers to the project, providing them with valuable insights into the code's purpose and functionality.

Furthermore, the refactoring efforts aimed to improve code consistency and adhere to best practices, such as the Single Responsibility Principle (SRP) and the Don't Repeat Yourself (DRY) principle. By breaking down complex logic into smaller, self-contained functions and eliminating redundant code, we ensure that the codebase is more maintainable and less prone to errors over time. Overall, these changes contribute to a more efficient, reliable, and developer-friendly codebase, laying a solid foundation for future enhancements and improvements to the Expertiza project.

assign_reviewer_dynamically

The changes made to the `assign_reviewer_dynamically` method can be checked in the commit - https://github.com/NidhayPancholi/expertiza/commit/30a3625d4188e56a58e4b6472c52b60bbfb83df5. The changes are described descriptively below:

- Restructured the `assign_reviewer_dynamically` method to perform single tasks per method, improving code organization and readability.

- Extracted the functionality to find the assignment participant into a separate method called `find_participant_for_assignment`.

- Abstracted the logic to handle errors when no topic is selected into a method named `topic_selection_error?`.

- Created a method named `dynamically_assign_reviewer` to handle the process of dynamically assigning a reviewer based on the assignment type.

- Separated the logic to assign a reviewer when the assignment has topics into a method named `assign_reviewer_with_topic`.

- Developed a method called `select_topic_to_review` to handle the selection of a topic for review.

- Extracted the logic to assign a reviewer when the assignment has no topics into a method named `assign_reviewer_without_topic`.

- Created a method named `select_assignment_team_to_review` to handle the selection of an assignment team for review.

- Abstracted the process to redirect to the student review list page into a method called `redirect_to_student_review_list`.

- Added clear comments to each method to explain its purpose and functionality effectively.

These changes were necessary to address several issues present in the original codebase, primarily focusing on improving readability, maintainability, and adherence to best practices.

Firstly, the original method `assign_reviewer_dynamically` suffered from a lack of clarity due to its nested conditionals and complex logic. By extracting specific tasks into separate methods and delegating responsibilities, the modified code enhances readability significantly. Each method now encapsulates a single responsibility, making it easier for developers to understand the code's functionality at a glance. This improved clarity reduces the cognitive load on developers, facilitating quicker comprehension and reducing the likelihood of errors during maintenance or future modifications.

Secondly, the refactored code promotes code reusability and adheres more closely to the DRY (Don't Repeat Yourself) principle. By extracting common functionalities into dedicated helper methods, such as selecting topics for review and assigning reviewers, the modified code reduces redundancy and promotes consistency across the codebase. This not only simplifies maintenance efforts but also ensures that changes made to shared functionalities are applied consistently throughout the application. Additionally, explicit parameter handling enhances code predictability and reduces dependency on global state, leading to more robust and predictable behavior.

Overall, these changes elevate the quality of the codebase by addressing readability, maintainability, and consistency concerns. By promoting clear, concise, and reusable code, the modified implementation lays a stronger foundation for future development efforts, facilitating easier collaboration among team members and enabling faster iteration on new features or bug fixes.

Changes to the spec file

The changes made to the test files are described below and can be found in the commit - https://github.com/expertiza/expertiza/commit/7c08070f0c2c000e64e55561b882e44fc81bc98f:

- Updated the `ReviewMappingController` spec file.

- Added a test case in the `ReviewMappingController` spec file for the `add_reviewer` method to ensure correct behavior when a team user exists and `get_reviewer` method returns a reviewer.

- Adjusted the expectation in the `assign_reviewer_dynamically` test case to match the corrected error message in the controller. Specifically, removed the extra space from the expected error message to align with the actual error message generated by the controller.

- Ensured that all test cases are descriptive and cover the relevant scenarios for each method.

- Verified that the test cases accurately reflect the behavior of the controller methods after the code changes.

Phase 2

Based on the comments from the initial commit and the changes made in the subsequent commits, here is a list of addressed issues:

Refactoring Functions: Refactored functions like assign_reviewer_dynamically and add_reviewer were improved to enhance code quality and maintainability. Corresponding changes were made to their respective tests.

Code Readability and Comments: Efforts were made to improve code readability and understanding by correcting and adding comments. Method names were revised to better reflect their purposes. Code Climate issues were fixed. However, some comments lacked insight and added visual clutter, so they were either improved or removed where unnecessary.

Methods Length and Responsibilities: Some methods, like assign_reviewer, were quite lengthy and handled multiple responsibilities, including error checking, finding records, and redirecting. To address this, inline code was extracted into separate, smaller methods to improve readability and maintainability.

DRY Violations: The assignment logic was factored out of methods like assign_reviewer_without_topic to reduce DRY (Don't Repeat Yourself) violations. This helped streamline the code and eliminate unnecessary repetition.

Test Failures: Tests for the controller were failing initially, but efforts were made to address these failures, ensuring comprehensive test coverage and maintaining the stability of the codebase.

All the checks in the pull request are now passing - https://github.com/expertiza/expertiza/pull/2767/checks

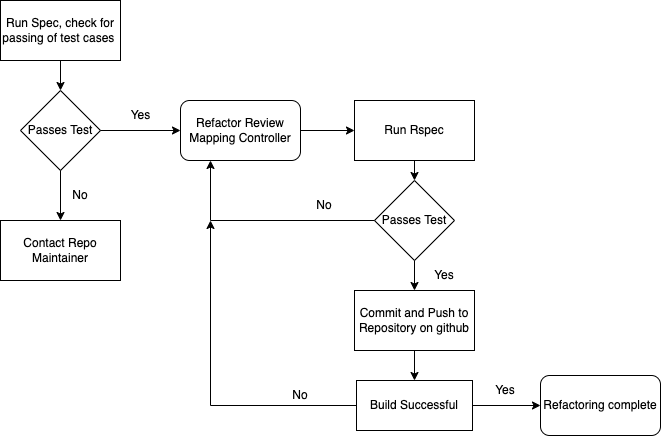

Test Plan

We plan on adding more tests, increasing the test coverage in Project 4.

In our Test-Driven Development (TDD) endeavors, our team would strategically adopt Mustafa Olmez's meticulously crafted test skeletons. These meticulously designed skeletons will serve as invaluable blueprints, delineating the essential tests necessary for our unit. By integrating these meticulously designed test skeletons into our workflow, we conduct comprehensive testing of our controller module. This method would enable us to thoroughly explore the functionality of our controller, ensuring meticulous examination and validation of its behavior. Incorporating these test skeletons will not only establish a sturdy framework for our unit tests but also elevate the overall quality and dependability of our codebase.

For UI testing of the functionality described in the provided code, you would typically want to cover both positive and negative scenarios to ensure that the user interface behaves as expected. Here are some examples of UI tests that can be performed:

1. Positive Scenario Testing:

- Adding a Reviewer: Tested the UI flow for adding a reviewer to an assignment. This involves filling out the necessary fields (such as assignment ID, topic ID, and user name) in a form and submitting it. Verified that the reviewer is successfully added and redirected to the appropriate page. - Assigning Reviewers Dynamically: Tested the UI flow for dynamically assigning reviewers to submissions. This might involve selecting an assignment, specifying reviewer criteria, and initiating the reviewer assignment process. Verified that reviewers are assigned correctly and that any relevant error messages are displayed when necessary.

2. Negative Scenario Testing:

- Error Handling: Tested how the UI handles various error conditions, such as entering invalid input (e.g., incorrect assignment ID, topic ID, or user name) or attempting to assign a reviewer who is not eligible. - Conflict Resolution: Tested scenarios where conflicts arise, such as trying to assign a reviewer who is already assigned to another submission, or attempting to assign a reviewer to their own artifact. Verified that appropriate error messages are displayed and that the UI guides the user on how to resolve these conflicts.

3. Edge Case Testing:

- Boundary Conditions: Tested scenarios at the boundaries of acceptable input, such as assigning the maximum number of reviewers allowed for an assignment or handling assignments with no available topics for review. - Concurrency: Tested how the UI handles concurrent actions, such as multiple users trying to assign reviewers simultaneously or attempting to assign reviewers while other operations are in progress.

4. UI Responsiveness Testing:

- Loading Indicators: Tested how the UI communicates the progress of long-running operations, such as dynamically assigning reviewers or fetching data from the server. - Error Recovery: Tested scenarios where the UI encounters errors during an operation and verify that it gracefully recovers and provides feedback to the user.

5. Accessibility Testing:

- Ensured that the UI is accessible to users with disabilities by testing keyboard navigation, screen reader compatibility, and adherence to accessibility standards such as WCAG (Web Content Accessibility Guidelines).

Design Pattern

During our code refactoring process, we leveraged various design patterns to enhance readability and maintainability. One commonly applied pattern was "Extract Method," where we identified lengthy and intricate methods and extracted segments of functionality into separate methods. This restructuring made the code more comprehensible and easier to grasp by isolating specific tasks within dedicated methods.

Additionally, we addressed the issue of excessive conditional statements by employing the "Refactoring Conditionals" design pattern. Instead of cluttering the code with numerous conditionals, we refactored by encapsulating the logic within these conditionals into distinct methods. By doing so, we streamlined the code's flow and improved its readability, making it clearer to understand the purpose and execution of each segment.

Relevant Links

- Github Repository: https://github.com/NidhayPancholi/expertiza

- Pull Request: https://github.com/expertiza/expertiza/pull/2767

- Demo Link: https://www.youtube.com/watch?v=ySvCcjU0GNM

Team

Mentor

- Ananya Mantravadi (amantra)

Team Members

- Lagani Patel (lpatel)

- Nidhay Pancholi (nrpancho)

- Saloni Shah (sshah39)