CSC/ECE 517 Spring 2022 - S2201: Improving User Experience for SQLFE

About SQLFE

SQL File Evaluation (SQLFE) is an open-source tool for flexibly scoring multiple SQL queries across multiple submitted files from assignments, lab tests, or other work. It was developed by Prof. Paul Wagner at the University of Wisconsin-Eau Claire. The tool supports automated grading of SQL assignments. It allows partial credit on a question so that students are graded fairly, and it also lets students provide comments and multiple solutions to the same problem.

Technologies Used

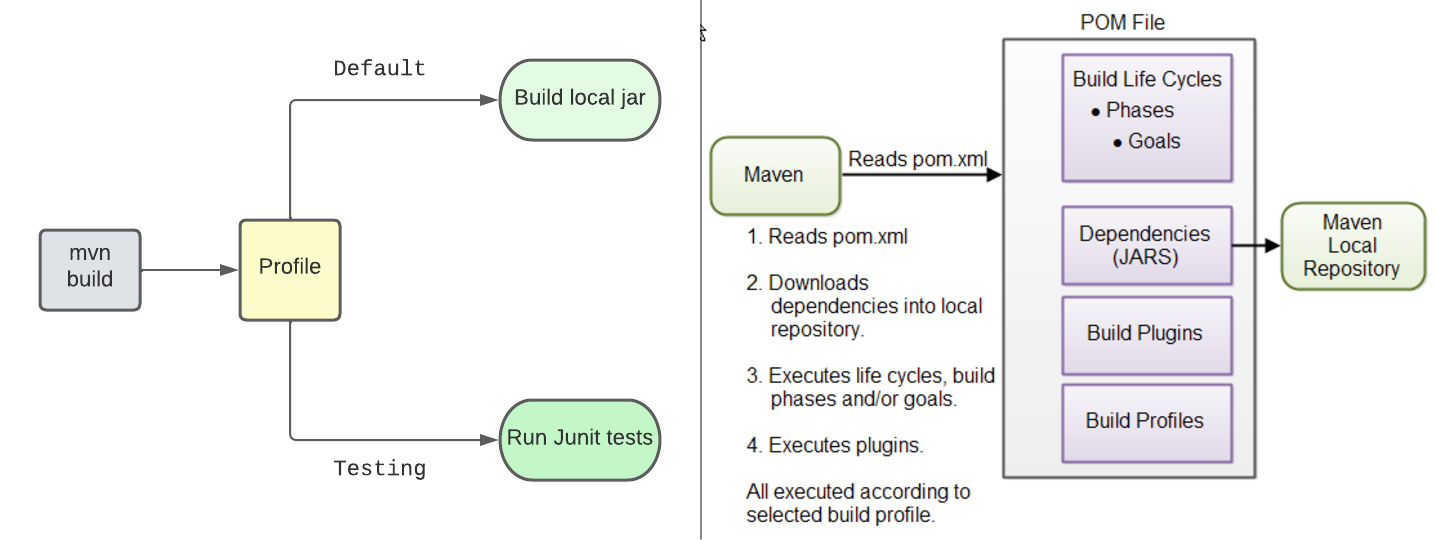

The technologies used include Maven, JUnit, Java, MySQL, and Oracle Database.

Java is a general-purpose, object-oriented programming language that is widely used for back-end, server-side development. In this project, Java is used to write the program that helps professors grade students' SQL submissions.

Maven is a build automation tool for building and managing Java-based projects. With Maven, the user can define build profiles that set up different configurations of a Java project for testing, development, and deployment. Maven's POM files are XML files that describe the project and its configuration, such as dependencies, the source directory, plugins, and goals.

JUnit is a unit testing framework for Java. Similar to RSpec for Ruby, JUnit lets the user automate unit tests to ensure that the classes of a Java system work correctly.

Oracle Database is a relational database management system. It differs from MySQL, which was previously used to store the data for this project, although both platforms serve essentially the same purpose. In comparison, Oracle Database offers more built-in support for parallel and distributed databases and more indexing options than MySQL. Although MySQL is often considered more configurable, the commercially used Oracle Database is the relational database management system selected for this project.

Tasks

The project consists of several smaller tasks that together aim to improve the user experience and set up better development processes. Because most of these tasks involve reformatting output files and moving the project to a different build system, no design-pattern refactoring is involved.

Task 1

Improve the formatting of the query and feedback output in each individual evaluation output file.

Current Implementation

The generated evaluation files have inconsistently formatted output that is hard to read and not user-friendly.

Proposed Output

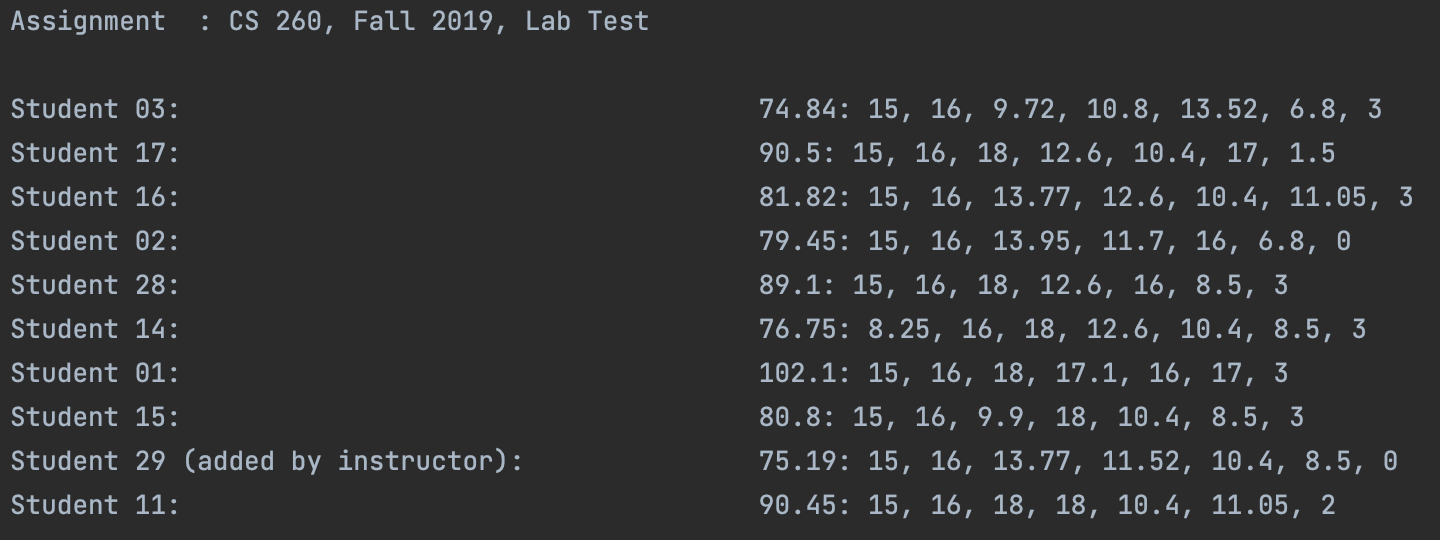

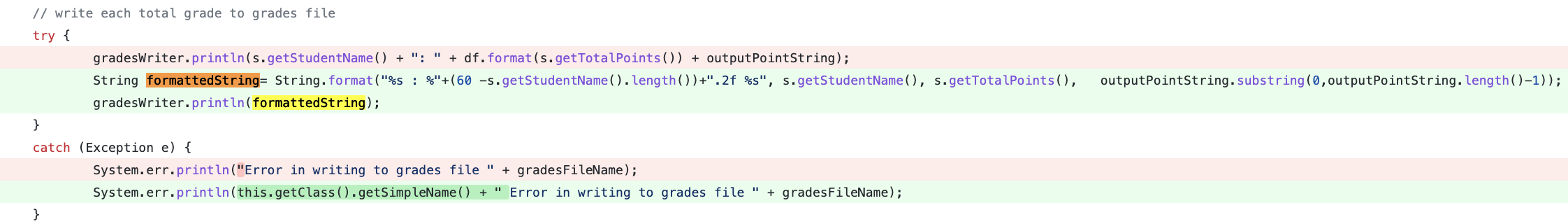

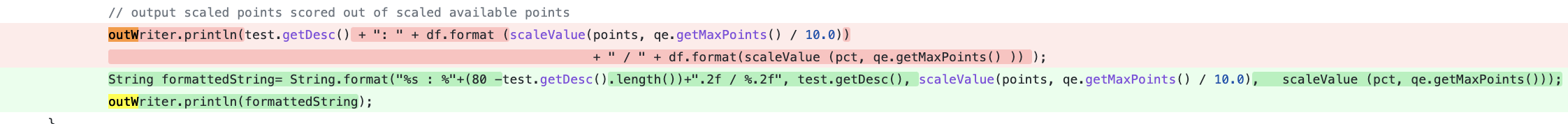

For cleaner output, we will remove the commas at the end of lines and change the alignment so that all lines are indented correctly.

Similar changes will be done for each student's output file.
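The cleanup described above (dropping trailing commas and re-indenting every line) can be sketched in plain Java. This is a minimal illustration, not the code in BackEnd.java: the class `OutputFormatter`, its methods, and the four-space indent per level are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper (not part of SQLFE) that tidies lines of an
 * evaluation output file: trailing commas are removed and every line
 * is re-indented to a consistent depth.
 */
final class OutputFormatter {
    // Assumed indent width; the real output files may use a different one.
    private static final String INDENT_UNIT = "    ";

    private OutputFormatter() {}

    /** Strips surrounding whitespace and any trailing commas from one line. */
    static String cleanLine(String line) {
        String trimmed = line.strip();
        int end = trimmed.length();
        while (end > 0 && trimmed.charAt(end - 1) == ',') {
            end--;
        }
        return trimmed.substring(0, end).strip();
    }

    /** Cleans each line and prefixes it with {@code level} indent units. */
    static List<String> formatLines(List<String> lines, int level) {
        String prefix = INDENT_UNIT.repeat(Math.max(0, level));
        List<String> result = new ArrayList<>();
        for (String line : lines) {
            String cleaned = cleanLine(line);
            // Keep blank lines blank instead of emitting dangling indentation.
            result.add(cleaned.isEmpty() ? "" : prefix + cleaned);
        }
        return result;
    }
}
```

For example, `formatLines(List.of("Query 1 ,", "  Score: 5,"), 1)` yields `"    Query 1"` and `"    Score: 5"`. Keeping the cleanup in one small function makes the same rule easy to apply to every student's output file.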

Files modified

- BackEnd.java

- Submission.java

Task 2

Add JUnit test coverage for the general SQLFE package. We aim to add unit tests for the Assignment, FrontEnd, SubmissionCollection, and TestResult classes.

Once the feature moves further into development, this page will be updated with the specific JUnit test code. Until then, the planned test cases are outlined below:

Assignment.java

- Check that the question regular expression rejects unacceptable formats such as `a 1`, `%1`, and `$2`.

- Check a question whose name is missing the period (.).

- Check whether `propertiesFilename` is valid. If the file is invalid, the appropriate exception should be thrown.

- Check that the final output, i.e. the list of questions, is correct. For this, we check the following two values:

1. The number of questions matches the expected number.

2. The points for each question match the expected points.

To cover these scenarios, we created AssignmentTests.java.
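The first two Assignment checks (rejecting labels such as `a 1`, `%1`, `$2`, and labels without a period) boil down to a whole-string regular-expression match. The sketch below assumes question labels look like `1.` or `3b.` (digits, an optional lowercase letter, then a period); that pattern and the class `QuestionLabelCheck` are hypothetical, and the expression actually used in Assignment.java may differ.

```java
import java.util.regex.Pattern;

/**
 * Hypothetical question-label check mirroring the first two Assignment
 * test cases. The accepted format (e.g. "1." or "3b.") is an assumption
 * for illustration, not SQLFE's documented label syntax.
 */
final class QuestionLabelCheck {
    // Digits, an optional lowercase letter, then a mandatory period.
    private static final Pattern LABEL = Pattern.compile("\\d+[a-z]?\\.");

    private QuestionLabelCheck() {}

    /** Returns true only if the entire label matches the assumed format. */
    static boolean isValidLabel(String label) {
        return label != null && LABEL.matcher(label).matches();
    }
}
```

In a JUnit 5 test, each case would become an assertion such as `assertFalse(QuestionLabelCheck.isValidLabel("%1"))` or `assertTrue(QuestionLabelCheck.isValidLabel("1."))`. Using `matches()` rather than `find()` matters here: it forces the whole label to conform, so `"$2."` is rejected instead of matching its `2.` suffix.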

TestResult.java

- Add a warning and check that `containsWarning` returns the expected value.

- Set the test score and check that `getScore` returns the expected value.

- Construct a TestResult with parameters and check the expected value of each parameter (score, warning, extraRows, missingRows).

To cover these scenarios, we created TestResultTests.java.

FrontEnd.java

- Write FrontEnd information out to the properties file and check that the stored values match the values set in the test case.

- Check setting the input parameters with a different data type.

- Check that appropriate action is taken if the file does not exist.

To cover these scenarios, we created FrontEndTests.java.
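The first and third FrontEnd checks amount to a write-then-read round trip of a properties file plus a "missing file" case. The sketch below shows that round trip with the standard `java.util.Properties` API only; the class `PropertiesRoundTrip` and any key names a caller uses (such as `dbHost`) are hypothetical, not SQLFE's actual property keys or FrontEnd methods.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;
import java.util.Properties;

/**
 * Sketch of a properties-file round trip like the one the FrontEnd tests
 * describe, using only java.util.Properties.
 */
final class PropertiesRoundTrip {
    private PropertiesRoundTrip() {}

    /** Writes the given properties to {@code file}, replacing any existing content. */
    static void write(Path file, Properties props) {
        try (Writer out = Files.newBufferedWriter(file)) {
            props.store(out, "front-end settings (sketch)");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Reads properties back, or returns empty if the file does not exist. */
    static Optional<Properties> read(Path file) {
        if (!Files.exists(file)) {
            return Optional.empty(); // the "file does not exist" case
        }
        Properties props = new Properties();
        try (Reader in = Files.newBufferedReader(file)) {
            props.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return Optional.of(props);
    }
}
```

A test would set a few values, call `write` on a temporary file, then compare `read(...)`'s values with the ones it set. Returning `Optional.empty()` for a missing file is one possible "appropriate action"; throwing a descriptive exception would be an equally valid design.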

SubmissionCollection.java

- Check that the Parsewriter and Commwriter files exist after the function is called.

- Check that the number of submissions is as expected, i.e. `totalSubmissions` equals `filecount`.

- Check that the submission file name is as expected.

- Check that appropriate action is taken if the submission folder path does not exist.

To cover these scenarios, we created SubmissionCollectionTests.java.
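The count, file-name, and missing-folder checks for SubmissionCollection can be illustrated by listing the files in a submission folder. In this sketch, the class `SubmissionFolder` is hypothetical, and treating every regular file as a submission is an assumption; SQLFE may filter submissions differently (for example, by extension).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * Sketch of the SubmissionCollection checks: list submission file names so
 * their count and names can be compared with expectations, and fail clearly
 * when the folder path does not exist.
 */
final class SubmissionFolder {
    private SubmissionFolder() {}

    /** Returns the sorted names of all regular files in {@code folder}. */
    static List<String> submissionNames(Path folder) {
        if (!Files.isDirectory(folder)) {
            throw new IllegalArgumentException("Submission folder not found: " + folder);
        }
        // Files.list keeps the directory open, so close the stream when done.
        try (Stream<Path> entries = Files.list(folder)) {
            return entries.filter(Files::isRegularFile)
                    .map(p -> p.getFileName().toString())
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A test would create a temporary folder with a known number of files, then assert that the list's size matches the expected count (the `totalSubmissions` versus `filecount` check) and that the names match. Sorting the names makes that comparison independent of the file system's listing order.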

Task 3

Current Implementation

The project currently has no integrated build tool. Developers must manually download the required JARs, build the project, and run the resulting JAR. We want to automate this build process with Maven instead.

Proposed Solution

We will migrate the project to Maven by adding a pom.xml file. We are going to add two kinds of profiles:

- Default or Development - This profile is used when a developer is developing or making changes to the application.

- Testing - This profile runs all the unit tests that have been written and reports a consolidated result.

Since the project is not used as a dependency anywhere else, we do not plan to set up deployment to the Maven Central Repository.

To implement the proposed changes, we added a pom.xml file.

Task 4

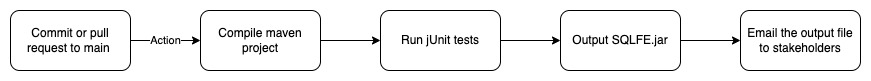

Currently, the project has no automated build functionality. We plan to create a CI/CD pipeline for continuous builds that will generate an executable, compile all the JUnit test cases, and integrate the supported databases.

Proposed Solution

We plan to use GitHub Actions, which makes it easy to implement and automate workflows that fit our requirements. Our workflow will do the following:

- The action will trigger whenever a commit or pull request is made to the main/master branch.

- The action will compile the project with a Maven build and store the output JAR inside the project folder.

- A second action will email that JAR to all relevant stakeholders (alternatively, the JAR could be made publicly accessible through GitHub Pages).

- For a pull request, the workflow will create a separate testing build, so that the change does not break existing functionality and the stable build remains available to anyone who needs it.

To implement the proposed solution, we created the maven-build-merge.yml and maven-build-pr.yml files.

Task 5

Current Implementation

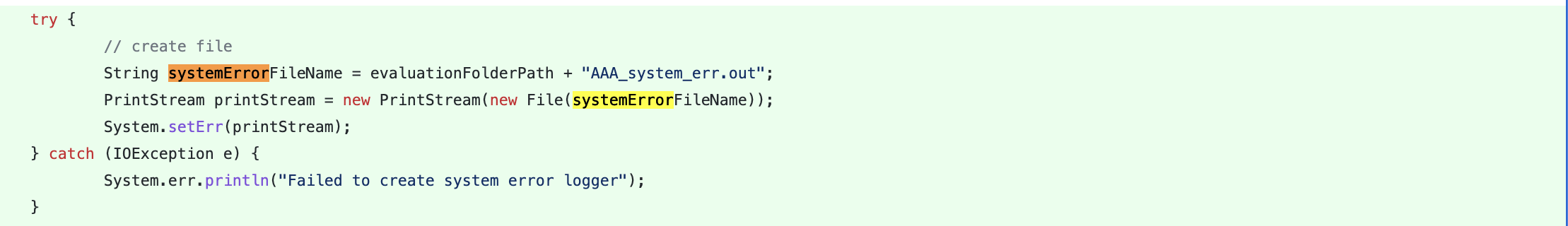

Errors such as wrong database credentials are currently shown in the SQLFE GUI. We plan to move these system errors out of the GUI and into a file in the /evaluations folder, hiding them from the user.

Proposed Solution

We plan to create a new file, `AAA_system_error.out`, that will contain all system errors and exceptions encountered by the application, and to remove all code that published this information to the GUI.

To implement the proposed solution, the following files were modified:

- Assignment.java

- BackEnd.java

- FrontEnd.java

- FrontEndView.java

- MySQL5xDataAccessObject.java

- MySQL80DataAccessObject.java

- OracleDataAccessObject.java

- Submission.java

- SubmissionCollection.java
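The file-based error reporting planned for Task 5 can be sketched as a small helper that appends a timestamped entry (context message plus stack trace) to `AAA_system_error.out` in the evaluations folder. The class `SystemErrorLog` and its `log` method are hypothetical names for illustration; the modified SQLFE files may organize this differently, for example by redirecting `System.err` with `System.setErr`.

```java
import java.io.IOException;
import java.io.PrintWriter;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.time.LocalDateTime;

/**
 * Hypothetical helper that records an exception in AAA_system_error.out
 * inside the evaluations folder instead of showing it in the GUI.
 */
final class SystemErrorLog {
    static final String FILE_NAME = "AAA_system_error.out";

    private SystemErrorLog() {}

    /** Appends a timestamped entry with the context message and stack trace. */
    static Path log(Path evaluationsDir, String context, Throwable error) {
        StringWriter trace = new StringWriter();
        PrintWriter pw = new PrintWriter(trace);
        error.printStackTrace(pw);
        pw.flush();
        String entry = LocalDateTime.now() + " " + context + System.lineSeparator()
                + trace + System.lineSeparator();
        Path file = evaluationsDir.resolve(FILE_NAME);
        try {
            Files.createDirectories(evaluationsDir);
            // APPEND keeps earlier errors from the same run.
            Files.writeString(file, entry, StandardCharsets.UTF_8,
                    StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return file;
    }
}
```

Each `catch` block that previously pushed a message to the GUI would call something like `SystemErrorLog.log(evaluationsDir, "Database connection failed", e)` instead. Appending rather than overwriting keeps every error from a single evaluation run in one place.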

Test Plan

The tasks involve minimal code changes and mostly add build/deployment functionality. For the code change that copies any System.err output to a file in the /evaluations folder instead of the console/GUI window, we plan to add the following JUnit test:

- Create a DAO object with incorrect settings and check that a file containing the relevant message is created.

The remaining tasks are tested manually, for example by triggering the builds by hand, to check that each task meets its purpose.
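The planned test can be sketched without SQLFE's DAO classes by attempting a JDBC connection with deliberately wrong settings and checking that the failure ends up in `AAA_system_error.out`. The class `WrongDaoCheck`, the URL, and the credentials are placeholders standing in for a misconfigured DAO object. With no MySQL driver on the classpath, `DriverManager` fails with "No suitable driver"; with the driver present, the wrong database or credentials cause the failure. Either way a `SQLException` is raised.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

/**
 * Plain-Java sketch of the planned test: a connection attempt with wrong
 * settings must leave a readable message in AAA_system_error.out rather
 * than on the console or GUI.
 */
final class WrongDaoCheck {
    private WrongDaoCheck() {}

    /** Tries to connect; on failure appends the message to the error file. */
    static Path connectOrLog(Path evaluationsDir, String url, String user, String password) {
        Path errorFile = evaluationsDir.resolve("AAA_system_error.out");
        try (Connection connection = DriverManager.getConnection(url, user, password)) {
            return errorFile; // unexpected success: nothing is logged
        } catch (SQLException e) {
            try {
                Files.createDirectories(evaluationsDir);
                Files.writeString(errorFile,
                        "Database connection failed: " + e.getMessage() + System.lineSeparator(),
                        StandardOpenOption.CREATE, StandardOpenOption.APPEND);
            } catch (IOException io) {
                throw new UncheckedIOException(io);
            }
            return errorFile;
        }
    }
}
```

In JUnit, the test body would call `connectOrLog` with a temporary folder and then use `assertTrue(Files.exists(file))` and a check on the file's contents, which covers both parts of the planned test: the file is created, and it holds the relevant message.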

Manual Testing

Task 1

- Copy the current output file to a directory outside the project directory.

- Run the project to generate the new output file.

- Check that the generated output file is formatted in the same manner as shown in the Proposed Output images for Task 1.

Task 3

- Trigger the Maven build with `mvn clean install`.

- Check that a new SQLFE.jar has been generated in the project's root directory.

Task 4

- Navigate to the project's GitHub page and open the GitHub Actions page.

- Enter your email in the prompt.

- Choose a branch and trigger a build using GitHub Actions.

- Check your mailbox for an automated email containing a JAR/.exe file.

Task 5

- Launch the project.

- Enter an incorrect database name.

- Try to run an evaluation.

- The project will generate a new file in the /evaluations folder containing the error. Check this file for appropriate messages and accurate formatting.

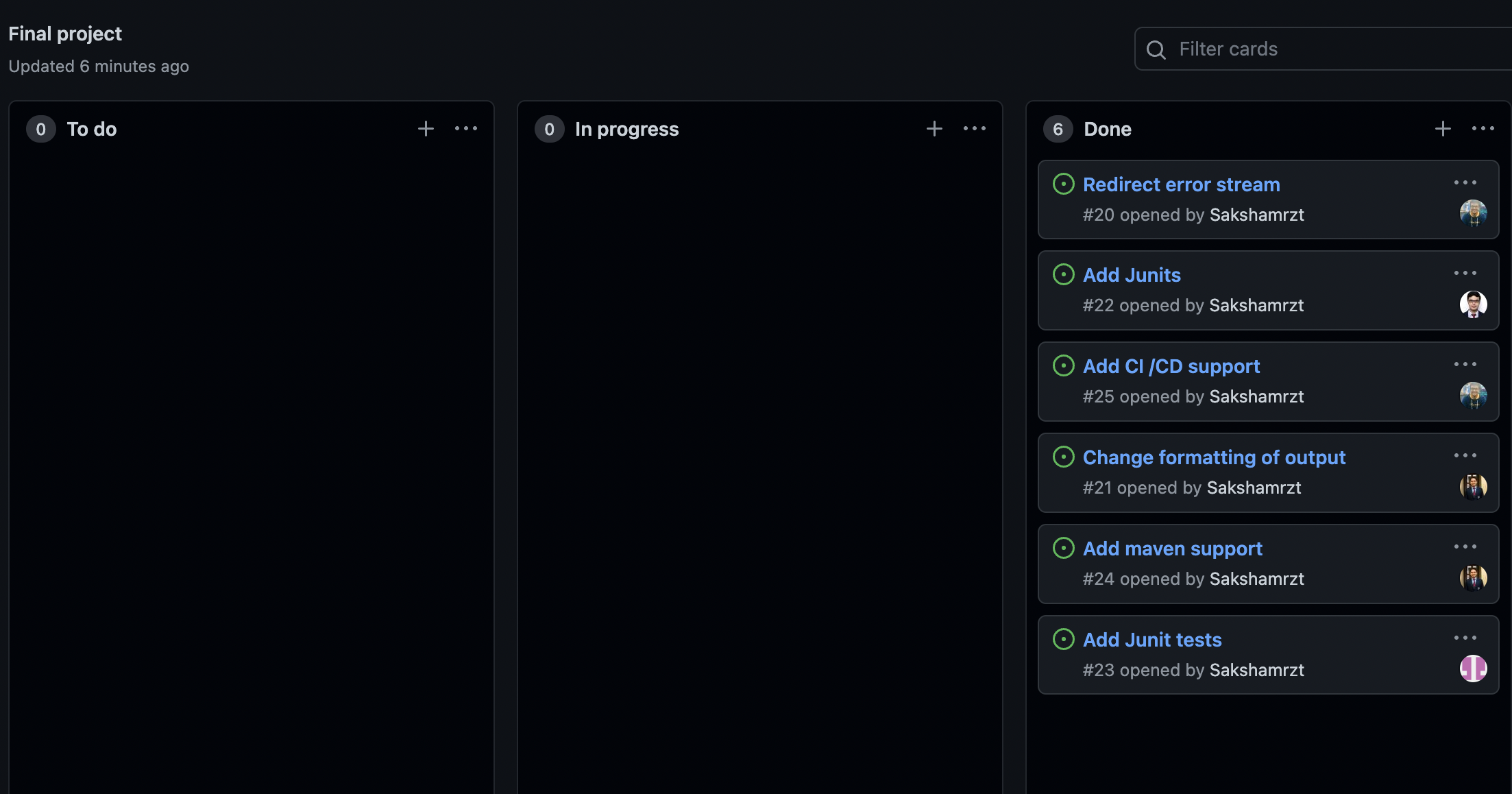

Tracking Our Work for Collaboration

We used GitHub Projects to track all the tasks and their progress.

GitHub and Related Links

The forked Git repository for this project can be found at [1].

The pull request can be found at [2].

The demo can be found at [3].

Team

Jackson Hardy (jmhardy3)

Rachit Sharma (rsharm26)

Saksham Thakur (sthakur5)

Shubhender Singh (ssingh54)

Mentor: Dr. Paul Wagner

Note: The requirement "Ensure that all file (of SQL queries) parsing errors are logged in the /evaluations/AAA_parse_problems file (this was broken by a previous contributor)" has been removed from the scope of the project.