CSC/ECE 517 Fall 2025 - E2563. Review tableau

This page contains information about E2563. Review tableau, a project in CSC/ECE 517 Fall 2025.

Please see below for a description of the design of the project.

Background

Expertiza is an open-source application based on the Ruby on Rails framework. Instructors can create and manage assignments. Students can form teams for each assignment and peer review other teams’ work. The frontend is written in TypeScript, and the backend is written in Ruby. The current codebase can be found here (FRONTEND, BACKEND), as well as in the external info section below. This work is part of an ongoing effort to reimplement the following old Expertiza repository.

Problem Statement

Instructors need to see the responses a student made during rounds of peer review so they can grade that student's reviewing accurately. To streamline this process, our goal is to design and implement a dedicated page that lets instructors view all reviews submitted by a given student. This page, the review tableau page, should present the reviews in a clear, structured, and accessible manner. Specifically, it must display all of the student's reviews, grouped by assignment, round, and topic, with each group rendered as a separate table.

Requirements

Core Requirements

- A user with the Instructor role can view the review tableau(s) for a given student from a dedicated page (the ‘tableau page’) of the Expertiza site.

- The tableau page can be accessed by clicking a “Summary” link under the “Reviews Done” column when viewing students in the instructor’s review-grading dashboard.

- The tableau page has the following contents:
  - A header displaying the text “Review by Student, ” followed by the student’s ID.
  - Individual tables (‘tableaus’), each corresponding to one round of reviewing for a specific assignment, using a specific rubric.
  - Tableaus are displayed in a single column on the tableau page.
  - Tableaus are sorted first by the round in which the review was made, and then by the rubric used for the review. This ensures that reviews made close together in time are displayed next to each other. In the usual case, where the same rubric is used for all submissions, there will be only a single tableau per round. If the rubric varies by topic, there may be multiple tableaus, because different submissions reviewed by this reviewer may have been assessed with different rubrics.

- An individual tableau has the following contents:
  - A label for the course number, semester, and year in which the review was made.
  - A label for the assignment for which the review was made.
  - A label for the round number in which the review was made.
  - The primary table, which contains:
    - A highlighted column populated with the questions asked on the rubric.
    - An arbitrary number of additional columns, each corresponding to one review for that assignment and round that uses the rubric. Each column is labelled with the name of the team being reviewed and annotated with the date and time the review was submitted.
    - Each row of these columns is populated with the student’s response to the corresponding question in the corresponding review.
  - Note that a single tableau records the results of all reviews made with the same rubric in a particular round. If a student reviewed topics (for example frontend, backend, and full stack) that use different rubrics, a separate tableau is created for each rubric filled out.

- A backend API that retrieves the student’s reviews and groups them by assignment, round, and topic (functional in local development).
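The ordering rule for tableaus (round first, then rubric) reduces to a two-key comparison. Below is a minimal TypeScript sketch of that rule, not the project's code. The TableauKey type, its fields, and the choice to compare rubrics by name are hypothetical.

```typescript
// Hypothetical identifying fields of one tableau: one round, one rubric.
interface TableauKey {
  round: number; // review round the tableau covers
  rubricName: string; // rubric (questionnaire) used for its reviews
}

// Compare by round first, then by rubric name as a tie-breaker.
function compareTableaus(a: TableauKey, b: TableauKey): number {
  if (a.round !== b.round) {
    return a.round - b.round;
  }
  return a.rubricName.localeCompare(b.rubricName);
}

// Return a sorted copy so the caller's array is not mutated.
function sortTableaus<T extends TableauKey>(tableaus: readonly T[]): T[] {
  return [...tableaus].sort(compareTableaus);
}

// Round 1 tableaus come first; within round 1, "Backend rubric" precedes "Full stack rubric".
const ordered = sortTableaus([
  { round: 2, rubricName: "Frontend rubric" },
  { round: 1, rubricName: "Full stack rubric" },
  { round: 1, rubricName: "Backend rubric" },
]);
```

Sorting a copy keeps the data returned by the API unchanged, so the same payload can be re-sorted or regrouped without side effects.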

Design Requirements

- Follow the same design guidelines as reimplementation-front-end (https://github.com/AnvithaReddyGutha/reimplementation-front-end/blob/main/design_document.md).

- Use the same widgets (circles with numbers inside) that are used on the Response views.

Implementation

There are three key components to the implementation of the review tableau:

- A new front end page, ReviewTableau.tsx, which contains the view shown to the user accessing the review records.
- A new front end service, gradesService.ts, which converts the results of API calls into parameters for the view.
- A new back end API GET endpoint in grades_controller.rb, called get_review_tableau_data.

The intended flow, once integration with E2568's work is complete, is as follows. Admins and instructors view a dashboard of participants for a given assignment and select the participant whose reviews should be displayed. The review tableau page then loads with the appropriate information. This dashboard does not yet exist, and we want to avoid redundant work, so for now the review tableau page can be reached only by entering its URL manually. This is for demonstration purposes only: authorization still occurs as if the current user had requested the tableau normally.
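Because the page is currently reached by URL, the view must read and validate assignmentId and participantId from the query string. The parameter names come from the test URL in the test plan. Below is a minimal sketch that assumes a hypothetical parseTableauParams helper built on the standard URLSearchParams API. In the browser it would be called with window.location.search.

```typescript
// Identifiers the review tableau page needs before it can call the API.
interface TableauParams {
  assignmentId: number;
  participantId: number;
}

// Accept only strings made of digits that parse to a positive integer.
function toPositiveInt(raw: string | null): number | null {
  if (raw === null || !/^\d+$/.test(raw)) {
    return null;
  }
  const value = Number(raw);
  return value > 0 ? value : null;
}

// Hypothetical helper: read assignmentId and participantId from a query string
// such as "?assignmentId=1&participantId=1". Returns null if either is missing
// or invalid, so the page can show an error without calling the API.
function parseTableauParams(search: string): TableauParams | null {
  const params = new URLSearchParams(search);
  const assignmentId = toPositiveInt(params.get("assignmentId"));
  const participantId = toPositiveInt(params.get("participantId"));
  if (assignmentId === null || participantId === null) {
    return null;
  }
  return { assignmentId, participantId };
}
```

For example, parseTableauParams("?assignmentId=1&participantId=1") yields { assignmentId: 1, participantId: 1 }. A query string without participantId yields null.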

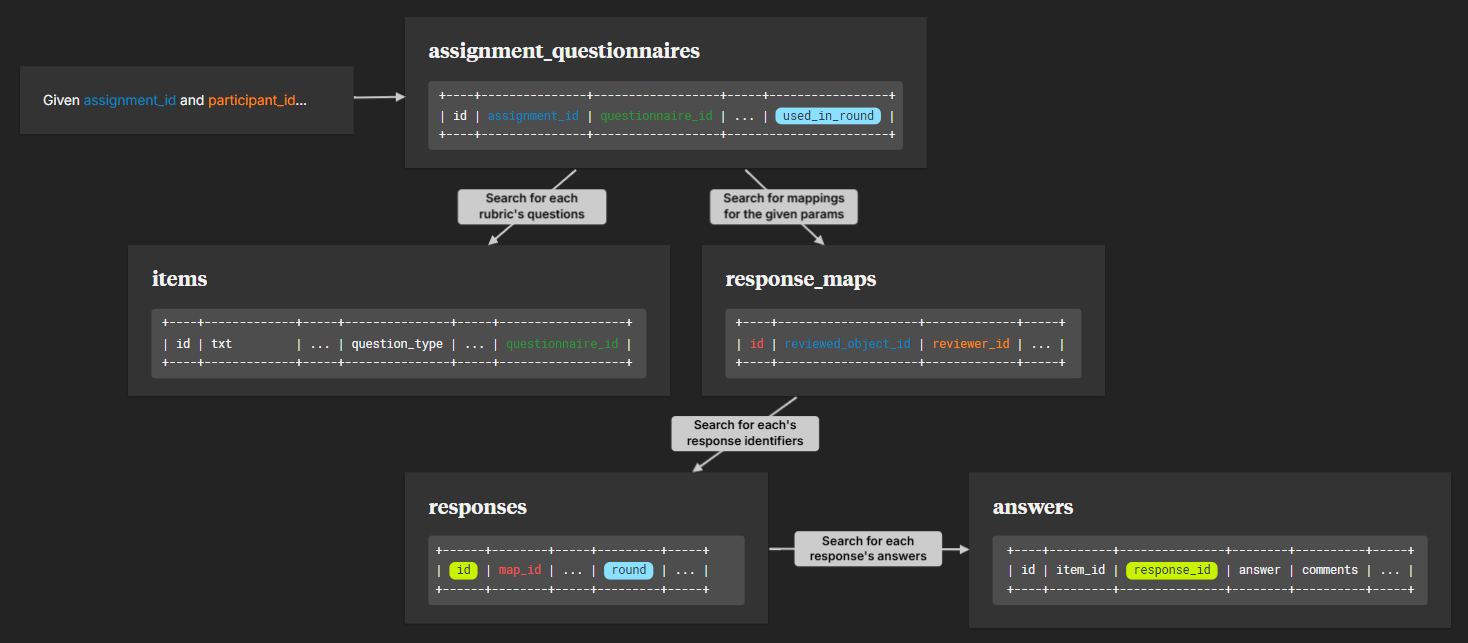

The get_review_tableau_data endpoint accepts two parameters: an assignment_id and a participant_id. It works in four steps:

1. It uses the assignment identifier to search the AssignmentQuestionnaires table for the Questionnaires used in each round of review of the target Assignment.
2. It retrieves the corresponding Items (the "questions" on a standard rubric) from the Items table and stores them in the resulting JSON, keyed by their identifiers.
3. It searches the ResponseMaps table for the maps belonging to the target assignment in which the target participant is the reviewer. It cross-references these with the Responses table to find the round in which each Response was submitted.
4. It consults the Answers table for each response identifier and stores the value and comment of each answer in the same hash.

The front end receives the JSON output and displays it in tables, one for each round.
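The lookup sequence above can be modelled in TypeScript, with in-memory arrays standing in for the database tables. This sketches the logic only and is not the Ruby code in grades_controller.rb. The record types and field names (usedInRound, reviewerId, and so on) are hypothetical. The sketch also assumes one Questionnaire per round and that responses are ordered by id.

```typescript
// Hypothetical in-memory stand-ins for the tables the endpoint reads.
interface AssignmentQuestionnaire { assignmentId: number; questionnaireId: number; usedInRound: number }
interface Questionnaire { id: number; minScore: number; maxScore: number }
interface Item { id: number; questionnaireId: number; description: string; questionType: string }
interface ResponseMap { id: number; assignmentId: number; reviewerId: number }
interface ReviewResponse { id: number; mapId: number; round: number }
interface Answer { responseId: number; itemId: number; value: number; comment: string }

interface Tables {
  assignmentQuestionnaires: AssignmentQuestionnaire[];
  questionnaires: Questionnaire[];
  items: Item[];
  responseMaps: ResponseMap[];
  responses: ReviewResponse[];
  answers: Answer[];
}

// Output shape, following the responses_by_round payload documented on this page.
interface ItemAnswers {
  description: string;
  question_type: string;
  answers: { values: number[]; comments: string[] };
}
interface RoundData {
  min_answer_value: number;
  max_answer_value: number;
  items: Record<string, ItemAnswers>;
}

function buildResponsesByRound(db: Tables, assignmentId: number, participantId: number): Record<string, RoundData> {
  const byRound: Record<string, RoundData> = {};

  // 1. For each round of the assignment, add its questionnaire's items keyed by item id.
  //    Assumes one questionnaire per round; a second one would overwrite the first.
  for (const aq of db.assignmentQuestionnaires) {
    if (aq.assignmentId !== assignmentId) continue;
    const questionnaire = db.questionnaires.find((q) => q.id === aq.questionnaireId);
    if (questionnaire === undefined) continue;
    const items: Record<string, ItemAnswers> = {};
    for (const item of db.items.filter((i) => i.questionnaireId === questionnaire.id)) {
      items[String(item.id)] = {
        description: item.description,
        question_type: item.questionType,
        answers: { values: [], comments: [] },
      };
    }
    byRound[String(aq.usedInRound)] = {
      min_answer_value: questionnaire.minScore,
      max_answer_value: questionnaire.maxScore,
      items,
    };
  }

  // 2. Response maps for this assignment in which the participant is the reviewer.
  const mapIds = new Set(
    db.responseMaps.filter((m) => m.assignmentId === assignmentId && m.reviewerId === participantId).map((m) => m.id)
  );

  // 3. Responses made through those maps, in id order so each item's answers line up by review.
  const responses = db.responses.filter((r) => mapIds.has(r.mapId)).sort((a, b) => a.id - b.id);

  // 4. Append each answer's value and comment under its response's round and its item.
  for (const response of responses) {
    const round = byRound[String(response.round)];
    if (round === undefined) continue;
    for (const answer of db.answers.filter((a) => a.responseId === response.id)) {
      const entry = round.items[String(answer.itemId)];
      if (entry === undefined) continue;
      entry.answers.values.push(answer.value);
      entry.answers.comments.push(answer.comment);
    }
  }
  return byRound;
}
```

This sketch keeps answer columns aligned only if every response answers every item. A skipped answer would shift later values into the wrong column, so a real implementation may need to key answers by response id instead.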

NOTE: The current implementation assumes that only one Questionnaire is associated with each round of a particular Assignment. Associating several rubrics with a single round of review is an intended feature, and the front end and back end components introduced here already contain unused support for it. However, there is currently no way to tell which associated Questionnaire a Response belongs to, other than by its round number. As a result, the feature as described cannot be implemented until the database is changed as part of the work by the "multiple rubrics per topic" team(s).
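The limitation arises whenever an assignment has more than one Questionnaire in the same round, because a round number alone cannot identify which Questionnaire a Response belongs to. Below is a minimal sketch of a check that flags such rounds. It assumes a hypothetical ambiguousRounds helper and a simplified AssignmentQuestionnaire record; nothing here is part of the current implementation.

```typescript
// Simplified, hypothetical AssignmentQuestionnaires row for one assignment.
interface AssignmentQuestionnaire {
  questionnaireId: number;
  usedInRound: number;
}

// Return the rounds (ascending) that have more than one distinct questionnaire.
// Responses in those rounds cannot be matched to a questionnaire by round alone.
function ambiguousRounds(rows: readonly AssignmentQuestionnaire[]): number[] {
  const byRound = new Map<number, Set<number>>();
  for (const row of rows) {
    const ids = byRound.get(row.usedInRound) ?? new Set<number>();
    ids.add(row.questionnaireId);
    byRound.set(row.usedInRound, ids);
  }
  return Array.from(byRound.entries())
    .filter(([, ids]) => ids.size > 1)
    .map(([round]) => round)
    .sort((a, b) => a - b);
}
```

A check like this could let the endpoint report unsupported configurations explicitly instead of silently merging rubrics.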

The API payload has the following structure. The front end service reads it and converts it into parameters usable by the view:

{
  "responses_by_round": {
    "1": {
      "min_answer_value": 0,
      "max_answer_value": 5,
      "items": {
        "1": {
          "description": "Criterion 1",
          "question_type": "Checkbox",
          "answers": {
            "values": [1, 3, 4],
            "comments": [
              "Auto-generated answer 1",
              "Reviewer note 1: The response meets expectations. A small suggestion for criterion 1: be more specific about implementation details.",
              "Reviewer note 1: Strong submission — add more explanation for criterion 1 to increase clarity."
            ]
          }
        },
        "2": {
          "description": "Criterion 2",
          "question_type": "Scale",
          "answers": {
            "values": [4, 4, 5],
            "comments": [
              "Auto-generated answer 2",
              "Reviewer note 1: Strong submission — add more explanation for criterion 2 to increase clarity.",
              "Reviewer note 1: Well done. One minor improvement is to illustrate your point with a concrete example for criterion 2."
            ]
          }
        }
      }
    },
    "2": {
      "min_answer_value": 0,
      "max_answer_value": 5,
      "items": {
        "1": {
          "description": "Criterion 1",
          "question_type": "Checkbox",
          "answers": {
            "values": [3, 3, 4],
            "comments": [
              "Round 2: Well done. One minor improvement is to illustrate your point with a concrete example for criterion 1.",
              "Reviewer note 2: The response meets expectations. A small suggestion for criterion 1: be more specific about implementation details.",
              "Reviewer note 2: Strong submission — add more explanation for criterion 1 to increase clarity."
            ]
          }
        },
        "2": {
          "description": "Criterion 2",
          "question_type": "Scale",
          "answers": {
            "values": [3, 4, 5],
            "comments": [
              "Round 2: Good work on criterion 2. Clear and well-structured.",
              "Reviewer note 2: Strong submission — add more explanation for criterion 2 to increase clarity.",
              "Reviewer note 2: Well done. One minor improvement is to illustrate your point with a concrete example for criterion 2."
            ]
          }
        }
      }
    },
    "3": {
      "min_answer_value": 0,
      "max_answer_value": 5,
      "items": {
        "1": {
          "description": "Criterion 1",
          "question_type": "Checkbox",
          "answers": {
            "values": [5, 3, 4],
            "comments": [
              "Round 3: Well done. One minor improvement is to illustrate your point with a concrete example for criterion 1.",
              "Reviewer note 3: The response meets expectations. A small suggestion for criterion 1: be more specific about implementation details.",
              "Reviewer note 3: Strong submission — add more explanation for criterion 1 to increase clarity."
            ]
          }
        },
        "2": {
          "description": "Criterion 2",
          "question_type": "Scale",
          "answers": {
            "values": [5, 4, 5],
            "comments": [
              "Round 3: Good work on criterion 2. Clear and well-structured.",
              "Reviewer note 3: Strong submission — add more explanation for criterion 2 to increase clarity.",
              "Reviewer note 3: Well done. One minor improvement is to illustrate your point with a concrete example for criterion 2."
            ]
          }
        }
      }
    }
  },
  "participant": {
    "id": 1,
    "user_id": 4,
    "user_name": "alton_mckenzie",
    "full_name": "Prof. Tyler Keebler",
    "handle": "alton_mckenzie"
  },
  "assignment": {
    "id": 1,
    "name": "ponder"
  }
}
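The front end service must turn this payload into one table per round, with one row per rubric item and one cell per review. Below is a minimal sketch of that conversion; it is not the actual gradesService.ts code. It assumes hypothetical view-side types (RoundTable, TableauRow, TableauCell) and that the i-th entries of values and comments belong to the same review. The payload as shown carries no team names or submission times, so this sketch leaves column labels to the caller.

```typescript
// Types mirroring the payload above.
interface ItemAnswers {
  description: string;
  question_type: string;
  answers: { values: number[]; comments: string[] };
}
interface RoundData {
  min_answer_value: number;
  max_answer_value: number;
  items: Record<string, ItemAnswers>;
}
interface TableauPayload {
  responses_by_round: Record<string, RoundData>;
  participant: { id: number; user_id: number; user_name: string; full_name: string; handle: string };
  assignment: { id: number; name: string };
}

// Hypothetical view-side shape: one table per round, one row per item, one cell per review.
interface TableauCell { value: number; comment: string }
interface TableauRow { itemId: string; description: string; questionType: string; cells: TableauCell[] }
interface RoundTable { round: number; minScore: number; maxScore: number; reviewCount: number; rows: TableauRow[] }

function toRoundTables(payload: TableauPayload): RoundTable[] {
  return Object.entries(payload.responses_by_round)
    .map(([round, data]): RoundTable => {
      const rows: TableauRow[] = Object.entries(data.items)
        // Order rows by numeric item id so questions appear in rubric order.
        .sort(([a], [b]) => Number(a) - Number(b))
        .map(([itemId, item]) => ({
          itemId,
          description: item.description,
          questionType: item.question_type,
          // Pair the i-th value with the i-th comment; both arrays are indexed by review.
          cells: item.answers.values.map((value, i) => ({ value, comment: item.answers.comments[i] ?? "" })),
        }));
      // Number of review columns is the longest row (0 if the round has no items).
      const reviewCount = Math.max(0, ...rows.map((r) => r.cells.length));
      return { round: Number(round), minScore: data.min_answer_value, maxScore: data.max_answer_value, reviewCount, rows };
    })
    .sort((a, b) => a.round - b.round);
}
```

Keeping this conversion in a plain function, separate from the React view, lets it be unit tested without rendering anything.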

The finalized UI looks like this:

Test Plan

Manual Testing

- Log in to Expertiza with an admin or instructor account.
- If the review dashboard exists:
  - Navigate to the review dashboard for the desired assignment, scroll to the desired participant, and click the “Summary” link.
- If the review dashboard is not yet implemented:
  - Navigate directly to http://localhost:3000/review-tableau?assignmentId=1&participantId=1, replacing both values with the desired assignment and participant identifiers.
- Confirm that each table contains the items and answers for a single rubric. Answers should be arranged by individual response, with a separate table for each round of review.

Automated Testing

- npm tests for the ReviewTableau component, which consumes the new get_review_tableau_data endpoint (Review_Tableau.test.tsx):
  - Parameter Validation: ensures the component handles missing required URL parameters (assignmentId and participantId) by displaying an appropriate error message and not making an API call.
  - Successful Data Loading: verifies the complete data flow from API call to UI rendering, including correct display of reviewer information, course details, assignment name, and round headers.
  - Error Handling: mocks network errors to verify that API failures are handled gracefully, with an error message displayed and the main content hidden.
  - Loading States: verifies that loading indicators appear while data is being fetched and disappear once it has loaded.
  - Question Types: verifies that the component handles multiple question types (Scale, Criterion, Dropdown) within a single rubric, transforming and rendering the mixed formats correctly.
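The behaviours covered by these tests (parameter validation, loading, error handling, and successful loading) fit a small state model. Below is a minimal sketch, assuming a hypothetical loadTableau helper that receives the fetch function as a parameter so a test can inject a mock. It is not the code in ReviewTableau.tsx.

```typescript
// The three states the page can be in; "error" also covers missing URL parameters.
type TableauState<T> =
  | { status: "loading" }
  | { status: "error"; message: string }
  | { status: "ready"; data: T };

// Hypothetical loader: validates parameters first so a missing id never triggers a request,
// then reports loading, followed by either the data or the error message.
async function loadTableau<T>(
  assignmentId: string | null,
  participantId: string | null,
  fetchData: (assignmentId: string, participantId: string) => Promise<T>,
  onState: (state: TableauState<T>) => void
): Promise<void> {
  if (!assignmentId || !participantId) {
    onState({ status: "error", message: "Missing assignmentId or participantId" });
    return;
  }
  onState({ status: "loading" });
  try {
    const data = await fetchData(assignmentId, participantId);
    onState({ status: "ready", data });
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    onState({ status: "error", message });
  }
}
```

Because the fetch function is a parameter, each listed test case amounts to passing a resolving mock, a rejecting mock, or a missing id, and checking the sequence of states reported.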

Team Members

Adam Imbert (apimbert)

Bestin Lalu (blalu)

Yumo Shen (jshen23)