CSC/ECE 517 Fall 2025 - E2562. Review grading dashboard

Introduction

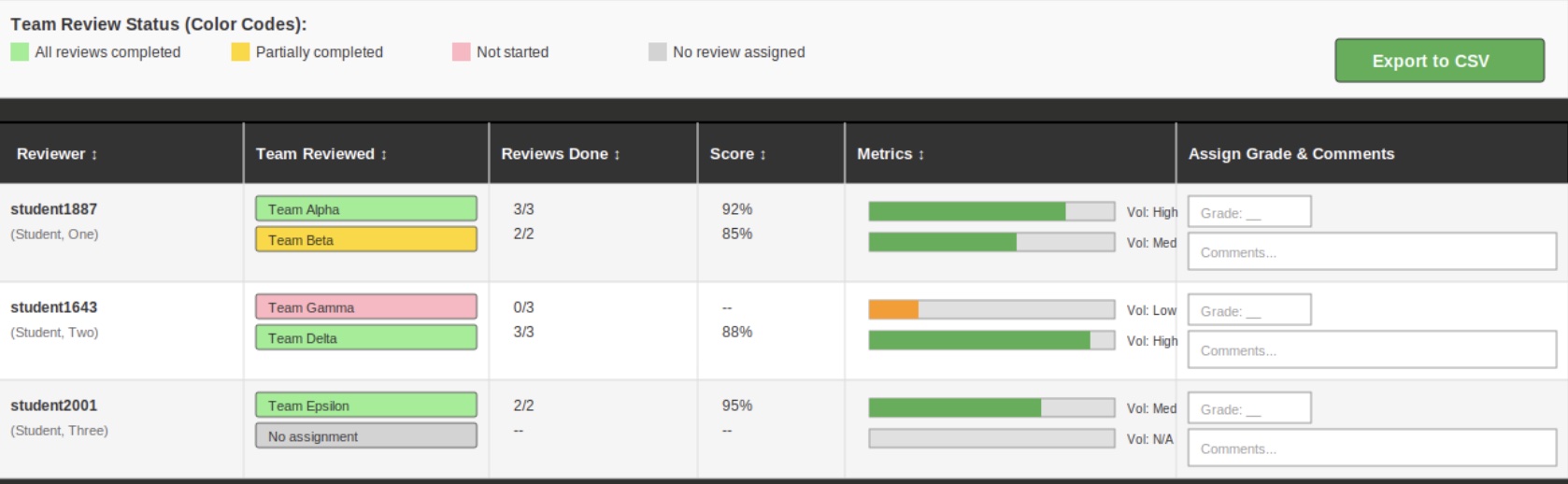

The Review Grading Dashboard is a new unified interface in Expertiza that modernizes the grading workflow by consolidating reviewer details, review statuses, computed scores, word-volume metrics, and instructor grading inputs into a single, sortable, and interactive dashboard. Built as part of the reimplementation project, this dashboard uses a modern REST API, reusable React components, and a clean architecture aligned with the new Expertiza design philosophy.

This document gives a **detailed technical explanation** of the backend logic, API design, data structures, architectural patterns, and internal workflows. It is intended for developers building or extending the Review Grading feature.

Background

Code repositories:

Legacy Expertiza scattered review information across many pages. The new dashboard replaces this fragmented approach with a single data-driven, API-powered interface.

Problem Statement

Before this redesign:

- Instructors had to open **each review individually**.

- Completion status, scores, and team mappings were on **different pages**.

- No central place existed to **see scores**, **assign grades**, or **visualize review quality**.

- No way existed to export all review results at once.

Requirements

Core Functional Requirements

- Dashboard must fetch all review data using a **single API call**.

- Must render:

  - Reviewer details

  - Assigned teams and review completion states

  - Per-round scores

  - Metrics on unique word usage

  - Inline grading + comments

- CSV export must reflect **exactly what instructors see**.

- Must support multi-round review assignments.

Technical Requirements

- Data must be produced using **optimized queries** that avoid N+1 loading.

- Must use the existing scoring method `aggregate_questionnaire_score`.

- All metric calculations must be done server-side to ensure reproducibility.

- All API responses must follow reimplementation JSON standards.

- Frontend must use modular React components with predictable props.

Demo

Demo Video

Design Document

Images

✦ Technical Design: Deep Backend Explanation

1. Data Aggregation Workflow

The dashboard requires combining information from several Expertiza models:

| Model | Purpose |
|---|---|
| Participant | Represents the reviewer as part of an assignment |
| ReviewResponseMap | Specifies which team a participant must review |
| Response | Stores the actual review answers and timestamps |
| Assignment | Determines the review rounds and questionnaires |
| Team | Represents the team being reviewed |

Because these models are stored in separate tables, the controller eager-loads the related records in a single chained query:

    Participant
      .includes(:user)
      .includes(review_response_maps: [:reviewee, response: [:scores, :comments]])

This drastically reduces SQL calls, avoiding the classic N+1 problem from the legacy system.
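To make the N+1 problem concrete, here is a minimal plain-Ruby sketch that counts lookups against an in-memory stand-in for the database. `FakeStore`, `find_user`, and `find_users` are hypothetical names invented for this illustration. They are not part of Expertiza or ActiveRecord, and the sketch only mimics the difference between per-row lookups and one batched lookup.

```ruby
# Illustrative only: an in-memory stand-in for a database that counts "queries".
class FakeStore
  attr_reader :query_count

  def initialize(users)
    @users = users # { user_id => username }
    @query_count = 0
  end

  # One lookup per call, like lazily loading participant.user inside a loop.
  def find_user(id)
    @query_count += 1
    @users[id]
  end

  # One batched lookup, similar in spirit to what eager loading arranges.
  def find_users(ids)
    @query_count += 1
    @users.slice(*ids)
  end
end

users = { 1 => "jdoe", 2 => "asmith", 3 => "bkhan" }
participant_user_ids = [1, 2, 3]

lazy = FakeStore.new(users)
lazy_names = participant_user_ids.map { |id| lazy.find_user(id) }

eager = FakeStore.new(users)
eager_names = eager.find_users(participant_user_ids).values

puts "lazy lookups: #{lazy.query_count}, eager lookups: #{eager.query_count}"
```

Both approaches return the same names. Only the number of round trips differs, and that number grows with the reviewer count in the lazy case.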

2. Score Computation

The dashboard must compute weighted scores. This is done using:

response.aggregate_questionnaire_score

Internally, this:

- Fetches each question’s max score

- Normalizes each response

- Computes weighted total

The dashboard does **not** recalculate scoring logic—only aggregates output.
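The steps listed above can be sketched in plain Ruby. This is not the actual body of `aggregate_questionnaire_score`, which is not shown in this document. The `Answer` struct, the `weighted_percentage` name, and the choice to skip unanswered questions are assumptions made for illustration.

```ruby
# Hypothetical answer record: raw score, the question's max score, and its weight.
Answer = Struct.new(:score, :max_score, :weight)

# Returns a percentage rounded to one decimal, or nil when nothing can be scored.
def weighted_percentage(answers)
  scored = answers.reject { |a| a.score.nil? } # assumption: skip unanswered questions
  total_possible = scored.sum { |a| a.max_score * a.weight }
  return nil if total_possible.zero?

  earned = scored.sum { |a| a.score * a.weight }
  (earned.to_f / total_possible * 100).round(1)
end

answers = [
  Answer.new(4, 5, 2),   # 8 of 10 weighted points
  Answer.new(3, 5, 1),   # 3 of 5 weighted points
  Answer.new(nil, 5, 1)  # unanswered, ignored in this sketch
]
puts weighted_percentage(answers) # 11 of 15 weighted points
```

Returning `nil` for an empty or fully unanswered review lines up with the dashboard's convention of reporting a null score for missing responses.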

3. Review “Volume” Metric

Implemented in ResponseVolumeMixin:

    def volume
      text = answers.pluck(:comments).join(" ")
      normalized = text.downcase.gsub(/[^a-z0-9\s]/i, '')
      tokens = normalized.split.uniq
      tokens.count
    end

Technical details:

- Strips punctuation

- Splits on whitespace

- De-duplicates tokens using Ruby’s uniq

- Returns integer count of unique words
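The same logic can be tried outside Rails with a standalone function that takes an array of comment strings. The name `unique_word_volume` is hypothetical and stands in for the mixin method above.

```ruby
# Counts unique words across a list of comment strings (nil entries are ignored).
def unique_word_volume(comments)
  text = comments.compact.join(" ")
  normalized = text.downcase.gsub(/[^a-z0-9\s]/, "")
  normalized.split.uniq.count
end

puts unique_word_volume(["Good work!", "good design, GOOD tests", nil]) # prints 4
puts unique_word_volume([])                                            # prints 0
puts unique_word_volume(["don't", "dont"])                             # prints 1
```

Two consequences of the regular expression are worth knowing when reading the metric: apostrophes are removed, so "don't" and "dont" count as one word, and non-ASCII letters are stripped as well.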

4. Multi-Round Review Handling

Assignments may have multiple review rounds. The dashboard must show one score + one metric per round.

Algorithm:

- Identify the round using `Response.round`.

- Group responses by `reviewer_id` and `round`.

- For each group:

  - Compute aggregate score.

  - Compute volume metric.

  - Compute completion status.
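The grouping algorithm above might look roughly like this in plain Ruby. `ResponseRow` and `summarize_by_round` are hypothetical names. Averaging scores and volumes within a group, and treating a group as "done" only when every review in it is submitted, are assumptions of this sketch rather than confirmed behavior.

```ruby
# Hypothetical flattened response record used only for this sketch.
ResponseRow = Struct.new(:reviewer_id, :round, :score, :volume, :submitted)

# Groups rows by [reviewer_id, round] and summarizes each group.
def summarize_by_round(rows)
  rows.group_by { |r| [r.reviewer_id, r.round] }.map do |(reviewer_id, round), group|
    done = group.select(&:submitted)
    scores = done.map(&:score).compact
    volumes = done.map(&:volume).compact
    {
      reviewer_id: reviewer_id,
      round: round,
      # nil when no submitted review in the group has a score or volume
      score: scores.empty? ? nil : (scores.sum.to_f / scores.size).round(1),
      volume: volumes.empty? ? nil : (volumes.sum.to_f / volumes.size).round,
      status: done.size == group.size ? "done" : "pending"
    }
  end
end

rows = [
  ResponseRow.new(12, 1, 90.0, 80, true),
  ResponseRow.new(12, 1, 87.2, 66, true),
  ResponseRow.new(12, 2, nil, nil, false)
]
summarize_by_round(rows).each { |summary| p summary }
```

Because `group_by` preserves first-seen order, the output keeps rounds in the order the responses arrive. A real implementation would likely sort by round explicitly.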

5. Dashboard API JSON Structure

The API returns structured JSON:

    {
      "reviewers": [
        {
          "id": 12,
          "username": "jdoe",
          "fullname": "John Doe",
          "reviews_assigned": 3,
          "reviews_completed": 2,
          "teams_reviewed": [
            { "team_id": 5, "team_name": "Team 1", "status": "done" },
            { "team_id": 6, "team_name": "Team 2", "status": "pending" }
          ],
          "round_data": [
            {
              "round": 1,
              "score": 88.6,
              "volume": 73,
              "average_volume": 65
            }
          ],
          "grade": null,
          "comment": ""
        }
      ]
    }
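As a rough sketch of how a payload of this shape could be assembled, the following plain-Ruby example builds one reviewer entry and serializes it with the standard `json` library. The helper name `reviewer_entry` is hypothetical, and deriving the two review counts from the team list is an assumption. The real controller may count reviews differently, for example across rounds.

```ruby
require "json"

# Builds one reviewer entry; all inputs are plain Ruby values in this sketch.
def reviewer_entry(id:, username:, fullname:, teams:, round_data:, grade: nil, comment: "")
  {
    id: id,
    username: username,
    fullname: fullname,
    reviews_assigned: teams.size,                                # assumption: one review per team
    reviews_completed: teams.count { |t| t[:status] == "done" },
    teams_reviewed: teams,
    round_data: round_data,
    grade: grade,
    comment: comment
  }
end

payload = {
  reviewers: [
    reviewer_entry(
      id: 12, username: "jdoe", fullname: "John Doe",
      teams: [
        { team_id: 5, team_name: "Team 1", status: "done" },
        { team_id: 6, team_name: "Team 2", status: "pending" }
      ],
      round_data: [{ round: 1, score: 88.6, volume: 73, average_volume: 65 }]
    )
  ]
}

puts JSON.pretty_generate(payload)
```

An ungraded reviewer serializes with `"grade": null`, which matches the structure shown above.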

6. CSV Export Internal Logic

The CSV generator produces:

Reviewer Username, Reviewer Name, Team Reviewed, Round 1 Score, Round 1 Volume, Round 2 Score, Round 2 Volume, Grade, Comments

Technical details:

- Columns vary based on number of rounds.

- Uses Ruby `CSV.generate`.

- Includes computed averages, not raw text.
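A minimal sketch of the round-dependent columns, using Ruby's `CSV.generate`. The function name `reviews_csv` and the row hash layout are assumptions for illustration and are not taken from the Expertiza code.

```ruby
require "csv"

# Builds a CSV string whose score/volume columns depend on the number of rounds.
def reviews_csv(rows, rounds)
  header = ["Reviewer Username", "Reviewer Name", "Team Reviewed"]
  (1..rounds).each { |n| header.push("Round #{n} Score", "Round #{n} Volume") }
  header.push("Grade", "Comments")

  CSV.generate do |csv|
    csv << header
    rows.each do |row|
      line = [row[:username], row[:fullname], row[:team_name]]
      (1..rounds).each do |n|
        data = row[:round_data].find { |d| d[:round] == n } || {}
        line.push(data[:score], data[:volume]) # nil becomes an empty cell
      end
      line.push(row[:grade], row[:comment])
      csv << line
    end
  end
end

rows = [
  { username: "jdoe", fullname: "John Doe", team_name: "Team 1",
    round_data: [{ round: 1, score: 88.6, volume: 73 }],
    grade: nil, comment: "" }
]
puts reviews_csv(rows, 2)
```

Rounds with no data still get their two columns, left empty, so every row has the same width as the header. With zero reviewers the output is the header line alone.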

✦ System Architecture

1. High-Level Architecture Diagram

    +-------------------+           +-----------------------+
    |  React Frontend   |           |   Rails API Backend   |
    +---------+---------+           +-----------+-----------+
              |                                 |
              |  GET /review_dashboard_data     |
              +-------------------------------->+
              |                                 |
              |  JSON: reviewers, scores,       |
              |        metrics, grades          |
              +<--------------------------------+
              |                                 |
              |  POST /assign_review_grade      |
              +-------------------------------->+
              |                                 |
              |  GET /export_reviews_csv        |
              +-------------------------------->+

2. Detailed Component Interaction Diagram

    React Dashboard
     |
     |-- calls ApiService.fetchDashboardData()
     |     |
     |     |-- axios GET /review_dashboard_data
     |     |
     |     |-- receives structured reviewer JSON
     |
     |-- renders ReviewTable
     |     |
     |     |-- for each row, render:
     |           - Reviewer identity
     |           - Review counts
     |           - Scores (per round)
     |           - MetricsChart (Recharts)
     |           - GradeInput
     |           - CommentInput
     |
     |-- on grade input:
     |     |
     |     |-- axios POST /assign_review_grade
     |
     |-- on export click:
           |
           |-- axios GET /export_reviews_csv
           |
           |-- triggers browser file download

3. Backend Internal Flow

    ReviewGradingController#index
     |
     |-- loads Participants for assignment
     |-- loads ReviewResponseMaps
     |-- joins Users, Teams, Responses
     |-- computes score/volume per round
     |-- builds JSON structure
     |-- returns response

4. Error Handling & Data Failure Modes

Backend handles:

- Missing responses → score = null, volume = null

- Late submissions → status = "late"

- No reviews assigned → empty arrays

- Invalid grade → HTTP 422

Frontend handles:

- Empty dashboard → “No reviewers found”

- Fallback UI if metrics chart cannot render

- Retry option for failed grade submissions
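The "invalid grade → HTTP 422" rule can be sketched without a Rails app as a function that returns a status code and a body. The `validate_grade` name, the 0 to 100 range, and the error message are all assumptions. This document does not specify what counts as a valid grade.

```ruby
# Hypothetical valid range; the document does not state the actual bounds.
VALID_GRADE_RANGE = (0..100)

# Returns [http_status, body] so the rule can be exercised outside a controller.
def validate_grade(params)
  grade = Float(params[:grade], exception: false) # nil for missing or non-numeric input
  if grade.nil? || !VALID_GRADE_RANGE.cover?(grade)
    return [422, { error: "grade must be a number between 0 and 100" }]
  end

  [200, { grade: grade, comment: params[:comment].to_s }]
end

p validate_grade({ grade: "95", comment: "Thorough reviews" })
p validate_grade({ grade: "abc" })
p validate_grade({ grade: 140 })
```

Returning a structured error body alongside the 422 status gives the frontend something to display next to its retry option.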

5. Performance Considerations

- Uses `includes` to prevent N+1 queries.

- Computes all heavy metrics ahead of time on backend.

- JSON payload optimized to only include necessary fields.

- Recharts renders only visible metrics (virtual scrolling optional).

Data Flow

    Frontend (React)
      ↓ GET /review_dashboard_data
    Backend (Rails)
      → Aggregates Participant / Team / Response data
      → Computes:
          • Score per round
          • Unique-word volume
          • Average volume per round
      → Returns JSON
    Frontend
      → Renders reviewer rows + charts
      → Provides inline grading
    POST /assign_review_grade
      → Updates grade + comment
    GET /export_reviews_csv
      → Downloads CSV file

Test Plan

Backend Technical Tests

- Validate round grouping logic.

- Validate unique word calculation (stopword edge cases).

- Ensure response-to-round mapping is correct.

- Test every API error condition:

  - invalid grade

  - missing participant

  - missing response

  - incorrect assignment id

- CSV generator with zero reviewers

Frontend (Technical) Tests

- Test MetricsChart with:

  - zero volume

  - extremely large review text

  - missing average

  - multi-round assignments

- Test sorting stability (ascending/descending).

- Test grade input debounce + retry.

Team Members

- Deekshith Anantha

- Srinidhi

- Abhishek Rao

- Mentor: Ishani Rajput