CSC/ECE 517 Fall 2021 - Refactor Evaluation of SQL Queries

About SQL File Evaluation

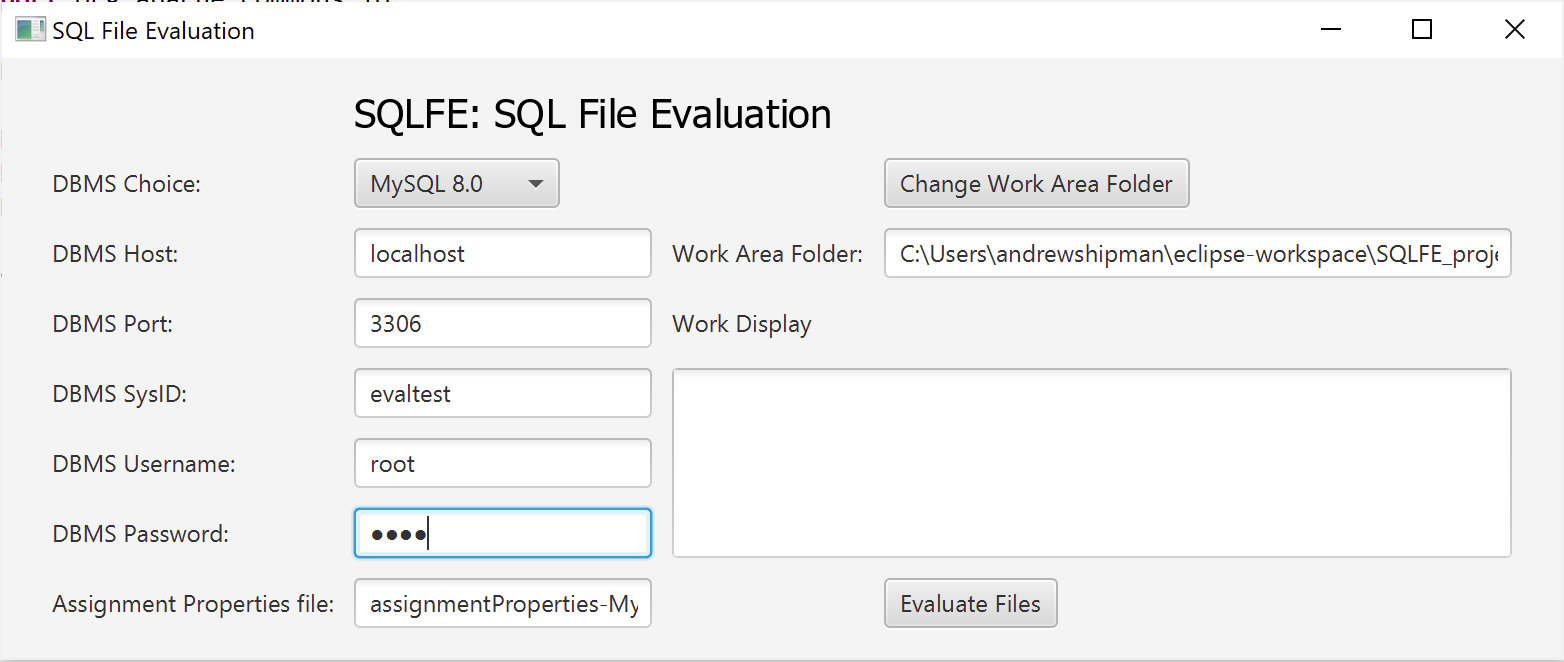

SQL File Evaluation is a program developed by DR. Paul Wagner to help with the grading of SQL exams given to students in a database systems class. The program parses through tests given to the students and compares their answer queries to the correct answers for the questions. This tool allows the professor to have tests graded in a matter of seconds that could traditionally take hours to grade. When the software is started it provides a GUI with options to connect to either an Oracle or MySQL database to run the answers against and grade the tests. Instructions must create their own assignment properties files which allows them to distribute points accordingly. Once all the inputs have been entered the software will run all of the tests answers, giving points according to the instructor's assignment properties file and produces an output file for each test as well as an overall summary file that tracks all of the students grades.

Project Goal

The goal of this project was to refactor the code of the back end of the software to improve clarity and readability. the main focus was on the refactoring of the evaluate method, which was part of the BackEnd class. The original version of the evaluate method was almost 200 lines of code and involved many different processes. Dr. Wagner said the method started out as a few lines but as the code evolved, it grew much larger and needed to be refactored. Our plan for the refactoring of the code involved a few key tasks which were:

- Breaking up sections of code that were focusing on the same task into separate methods

- Create checking functions for conditionals that contained a lot of ANDs and ORs

- Change names of variables that are only a single letter or a few letters to more descriptive names

- Add comments to describe what every section of code is doing

- Add comments to methods we created to state their purpose and keep the same styling.

- Ensure all code complies with SOLID principles

Following the refactoring, we also created junit tests to ensure the code was still working with the changes we had made to the code. The testing ensured the proper files were created as well as that all the necessary files were created. additionally the testing checks the summary file and compares it to a summary file that is known to be correct to ensure the summary (and all of the files within it are created properly.

Refactoring

One of the main goals of refactoring this code was to shorten the length of the evaluate method. We were able to shorten the previously almost 200 lines of code to less than 30 lines.

By analyzing the code to see which SOLID principles were being violated, we decided that one way we would tackle this issue was by breaking up the sections of code into various methods.

The evaluate method in BackEnd.java file was broken down 6 new methods. The evaluate method was too heavy and big with approx. 250 line of syntax. As per the new file it has been refactored to 30 lines. The new method have been given a descriptive names. Most of the variables were also renamed to be more descriptive. New for loops were used rather than ones with old and too many conditions. There were some not useful conditions which were removed. We even used forEach for making the code more up-to date and faster. New method names to which evaluated method was broken into are buildGradesWriter, getCurrentQuestions, evaluateTests, makeTestObject and processSubmission.

We added a buildGradesWriter method to set up the grade writer for the output.

private PrintWriter buildGradesWriter(Assignment a) { //sets up grade writer for output file

decFormat.setMaximumFractionDigits(2); //sets decimal formatting to 2 decimal places

PrintWriter gradesWriter = null; // grade summary file writer

// set up the assignment output file

try {

gradesWriter = new PrintWriter(gradesFileName, "UTF-8");

// output general information

gradesWriter.println("Assignment : " + a.getAssignmentName());

gradesWriter.println("");

} catch (IOException ioe) {

System.err.println("IOException in writing to file " + gradesFileName);

}

return gradesWriter;

} // end - buildGradesWriter

We added a getCurrentQuestions method to find the matching question for the answer.

private List<Question> getCurrentQuestions(List<Question> questions, QuestionAnswer questionAnswer) {

// find the matching question(submission) for the answer

List<Question> currQuestions = new ArrayList<Question>();

boolean foundOne = false;

boolean foundAll = false;

int questionIndex = 0;

while (questionIndex < questions.size() && !foundAll) {

int indexOfQNum = questions.get(questionIndex).getQNumStr().indexOf(questionAnswer.getQNumStr());

// first match

if (!foundOne && indexOfQNum == 0) {

foundOne = true;

currQuestions.add(questions.get(questionIndex)); // use this question

}

// subsequent match

else if (foundOne && indexOfQNum == 0) {

currQuestions.add(questions.get(questionIndex)); // add this question too

}

// first non-match after subsequent match

else if (foundOne) {

foundAll = true;

}

// not a match

questionIndex++;

} // end - while looking for question(submission) to match student answer

if (!foundOne) {

System.err.println("cannot find question");

}

return currQuestions;

} // end - getCurrentQuestions

We added an evaluateTests method that would create test objects containing the results of the evaluation.

private void evaluateTests(List<ISQLTest> questionTests, List<Integer> questionPercentages, List<String> questionConditions, List<EvalComponentInQuestion> questionEvalComps) {

// creates test object containing results of evaluation

for (EvalComponentInQuestion questionEvalComp : questionEvalComps) {

// get test names

String currTestName = "sqlfe.sqltests." + questionEvalComp.getEvalComponentName();

// make test object out of test name

questionTests.add(makeTestObject(currTestName));

// get current percents

questionPercentages.add(questionEvalComp.getPercent());

// get current condition

questionConditions.add(questionEvalComp.getCondition());

} // end - for each test in question

} // end - evaluateTests

We added a makeTestObject method to create test objects for evaluation.

private ISQLTest makeTestObject(String currTestName) { //creates test objects for evaluation

try {

Class<?> aClass = Class.forName(currTestName);

Object oTest = aClass.newInstance();

return (ISQLTest) oTest;

} catch (Exception e) { //error handling

System.out.println("exception in generating class object from name");

}

return null;

} // end - makeTestObjects

Lastly, we added a processSubmission method that parses submission to grade accuracy of questions.

private void processSubmission(Submission submission, List<Question> questions, PrintWriter gradesWriter) {

// parses submission to grade accuracy of questions

Utilities.threadSafeOutput("\nEvaluating " + submission.getSubmissionFileName() + ": \n ");

double submissionPoints = 0;

ArrayList<QueryEvaluation> queryEvals = new ArrayList<QueryEvaluation>();

// initialize output point string for grade summary file

String outputPointString = ": ";

// connect to data access object for each submission

dao.connect();

// process each question answer in the submission

ArrayList<QuestionAnswer> questionAnswers = submission.getAnswers();

if (questionAnswers != null) {

for (QuestionAnswer questionAnswer : questionAnswers) {

threadSafeOutput("Q" + questionAnswer.getQNumStr() + ".");

Query actualQuery = questionAnswer.getActualQuery();

List<Question> currQuestions = getCurrentQuestions(questions, questionAnswer);

// loop through all possible questions, evaluate, choose max

double highestPoints = -1.0; // set below zero so any evaluation is better

QueryEvaluation maxQE = null; // variable for the highest query evaluation

for (Question currQuestion : currQuestions) {

// get the desired query for this question

Query desiredQuery = currQuestion.getDesiredQuery();

// get the evaluation components for this question

List<EvalComponentInQuestion> questionEvalComps = currQuestion.getTests();

int maxPoints = currQuestion.getQuestionPoints();

ArrayList<ISQLTest> questionTests = new ArrayList<ISQLTest>();

ArrayList<Integer> questionPercentages = new ArrayList<Integer>();

ArrayList<String> questionConditions = new ArrayList<String>();

// evaluate all tests for this question

evaluateTests(questionTests, questionPercentages, questionConditions, questionEvalComps);

// build a query evaluation, evaluate and add this queryEvaluation to the current submission

QueryEvaluation queryEvaluation = new QueryEvaluation(actualQuery, desiredQuery, dao, maxPoints,

questionTests, questionPercentages, questionConditions, null, 0.0);

double questionPoints = queryEvaluation.evaluate();

// use maximum score if multiple options for question

if (questionPoints > highestPoints) {

highestPoints = questionPoints;

maxQE = queryEvaluation;

}

}

// end - for each question

queryEvals.add(maxQE); // add best queryEvaluation for this answer to the list

submissionPoints += highestPoints; // add the highest question score to the submission total

outputPointString += (decFormat.format(highestPoints) + ", "); // add highest points to string for grade summary output

} // end - for each question answer //try other method

submission.setTotalPoints(submissionPoints); // add the total points to the submission

submission.setQueryEvals(queryEvals); // add the query evaluations to the submission

submission.writeSubmission(evaluationFolderPath); // write out each submission'submission output file

} // end - if any question answers exist

// clean up/disconnect data access object

dao.disconnect();

// write each total grade to grades file

try {

gradesWriter.println(submission.getStudentName() + ": " + decFormat.format(submission.getTotalPoints()) + outputPointString);

} catch (Exception e) {

System.err.println("Error in writing to grades file " + gradesFileName);

}

} // end - processSubmission

These methods greatly shortened the length of the main evaluate method code which made it much increased its readability.

In addition to creating these new methods, we added comments to describe the methods' functions. We also used descriptive variable names instead of one letter variable names (or irrelevant variable names) that do not explain its purpose.

Testing

Testing was a necessary step in working on the open source software because when we received the code it was working and we needed to ensure it still worked after we made the changes in refactoring. Java has a testing frame work called junit that allowed us to create unit tests and verify the code worked. we implemented these tests on the the Backend method, which was the section of code that we refactored.

The first step in creating the test was to set up both a front and and a back end object so the code was able to run. This allowed for the tests to emulate the program when it was actually running. We manually set up all of the information needed for the code to connect to the the proper MySQL database so it could grade the exams. The code below shows how the unit test was set up to begin testing the back end class. The JFXPanel is needed to make sure the test runs properly as if a user was using the software. At the end of the code the evaluate method is called which is what we refactored.

class BackEndTests extends AbstractTest {

@Test

void test() {

try {

// initialize jfx for test to run

JFXPanel fxPanel = new JFXPanel();

// set default values for testing an input

// values will need to be changed for information used in personal testing database

String dbmsChoice = "MySQL 8.0";

String dbmsHost = "localhost";

String dbmsPort = "3306";

String dbmsSystemID = "evaltest";

String dbmsUsername = "root";

String dbmsPassword = "****";

String evaluationFolder = "C:\\Users\\andrewshipman\\eclipse-workspace\\SQLFE_project";

String assignPropFile = "assignmentProperties-MySQL";

FrontEnd f = new FrontEnd();

// set values for the front end used for testing

f.setDbmsChoice(dbmsChoice);

f.setDbmsHost(dbmsHost);

f.setDbmsPort(dbmsPort);

f.setDbmsSystemID(dbmsSystemID);

f.setDbmsUsername(dbmsUsername);

f.setDbmsPassword(dbmsPassword);

f.setEvaluationFolder(evaluationFolder);

f.setAssignPropFile(assignPropFile);

// setup back end and run evaluation

BackEnd b = new BackEnd(f);

f.setABackEnd(b);

b.transferData(f);

b.evaluate();

After the evaluate method is completed the testing is ready to begin to validate that the evaluate method did its job correctly. This is done in a few ways. The first way is that the program checks to make sure the summary file was created. This provides proof that the evaluate method at least ran and tried to create something. the code for this test is fairly simple:

// test if output file was created File testFile = new File(f.getEvaluationFolder() + "\\evaluations\\AAA_grade_summary.out"); assertTrue(testFile.exists());

The next test checks to see that the program created the proper number of output files. This should be 1 for each exam that was submitted into the files folder for evaluation plus an additional 3 files one for the summary, one for any problems that were found in the parser, and one containing the student comments. The code for this test is:

// test if there is the proper number of files File inputFiles = new File(f.getEvaluationFolder() + "\\files"); File outputFiles = new File(f.getEvaluationFolder() + "\\Evaluations"); assertEquals(inputFiles.list().length, (outputFiles.list().length - 3));

The final test checked the output summary of the program against a file that was known to be correct for the first 5 sample files that came with the java code. This test used an API for comparing the contents of 2 files to see if they are the same or different. The correct file is located in another folder in the project called TestingFiles. The code for this test is below:

// test if grade summary output is correct for 5 files // uses file in testing file to assert output is correct for 5 evaluations File compareFile = new File(f.getEvaluationFolder() + "\\TestingFiles\\Correct_5_evals_summary.out"); assertTrue(FileUtils.contentEquals(testFile, compareFile));

all of the tests passed along with manual testing and checking of all 66 sample files included with the project. This meant our refactoring achieved the same goal of the original program.

Usage

The software can be downloaded either as an executable or as a the source code which can be run through Java Eclipse or Intelij IDE. The documentation on how to download and run the program be found in the docs folder on the github repository.