CSC/ECE 517 Fall 2019 - E1975. Generalize Review Versioning

Introduction

Expertiza is a peer-review-based learning platform in which students' work products are evaluated and scored through peer review by other students. The review process occurs in stages. After the peer group submits initial feedback on a work product, students are given time to incorporate the comments and improve their work. This second version of the work product is reviewed and scored again. The process may be repeated, and the average score from the last review stage is taken as the final score for that work product. This incremental development of work products and progressive learning is the fundamental concept underlying the Expertiza system.

Purpose

The system is currently designed to give a reviewer a new form for each round of review (rather than requiring the reviewer to edit an existing file) and to automatically remove scores of reviews that are not redone. The system also sends an email to reviewers when a submission changes. Scores are calculated on the fly based on the rubrics.

Scope

This project will enhance the existing scoring and peer-review functionality and its underlying implementation, and will test the existing implementation. Testing the score calculation, and the display of weighted and non-weighted scores based on the combination of rubrics, is part of this project. The project also adds a feature that lets the author notify reviewers of changes to the submission.

Background

The system is currently designed to give a reviewer a new form for each round of review (rather than requiring the reviewer to edit an existing file) and to automatically remove scores of reviews that are not redone. Scores are calculated based on the rubrics defined in the system. An implementation of score computation already exists, but it does not work for multipart rubrics.

Standards

The new standards followed in this system are those of the Rails framework, and the code should follow Rails and object-oriented design principles. Any newly added code will adhere to the guidelines below:

● https://github.com/rubocop-hq/rails-style-guide

● http://wiki.expertiza.ncsu.edu/index.php/Object-Oriented_Design_and_Programming

Problem Statement

Problem

The system is currently designed to give a reviewer a new form for each round of review (rather than requiring the reviewer to edit an existing file) and to automatically remove scores of reviews that are not redone. This functionality needs to be tested: it works in some cases, but we suspect that it is not entirely correct.

Profile Email Preference:

Users in Expertiza can control which kinds of email they receive. Reviewers receive an email when a submission changes, and this can be controlled by selecting the option "When someone else submits work I am assigned to review" on the user's profile page. Because the checkboxes sit far away in the right corner of the screen, they need to be repositioned to make the page more user-friendly.

Scores for students in Expertiza can be based on a combination of rubrics: review rubrics for each round of review, author feedback, and teammate review. In CSC/ECE 517, we don’t really use the student-assigned scores to grade projects, but the peer-review score shown to the student is based entirely on the second round of review.

Here is how scores are calculated:

1. If only zero-weighted scores are available (here, in the first round of review), the average of all zero-weighted scores should be shown in gray font. An information button should explain why the scores are shown in gray (“The reviews submitted so far do not count toward the author’s final grade.”).

2. If any non-zero weighted scores are available, then the score shown for the assignment should be the weighted average of the scores. For example, if Round 2 reviews are weighted 90% and author feedback is weighted 10%, and two Round 2 reviews each gave the work a score of 80%, and the only author-feedback score was 100%, then the overall score is:

80%⨉90% + 100%⨉10% = 82%

3. If a review is submitted and the author(s) then update the submission before the end of the current round, the review is reopened and the reviewer can go in and update it. However, the review score previously given in the current round continues to count until the reviewer updates the submitted review. This functionality doesn’t work for multipart rubrics.

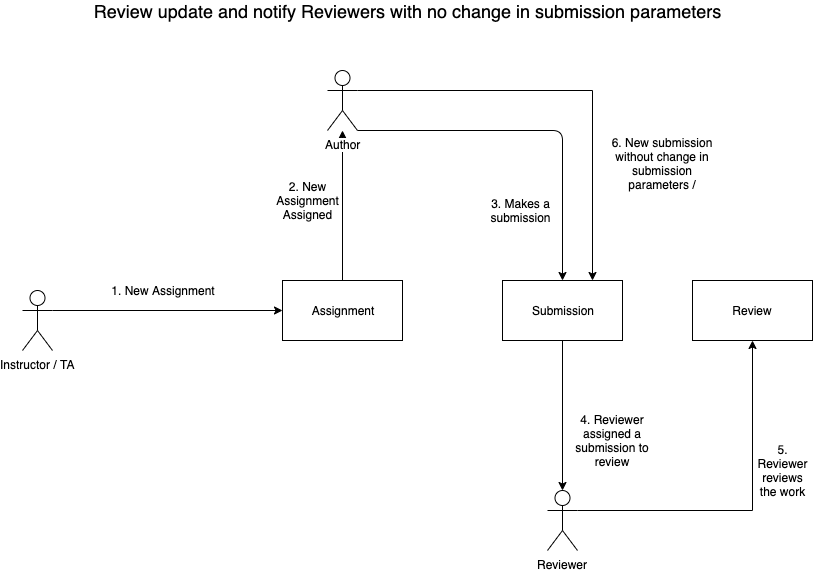

The system also doesn’t send emails to reviewers when the author makes a new submission without changing submission parameters such as the submission URL. For example, the author may submit new work by redeploying the application while the deployment URL stays the same. In this case too, the author should have an option to notify all reviewers to revisit the submission, without changing the current flow.
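The notification option described above could work roughly as follows. This is a minimal plain-Ruby sketch that runs outside Rails. The `Reviewer` struct, its `notify_on_submission` flag (standing in for the profile option "When someone else submits work I am assigned to review"), and the `reviewers_to_notify` / `notification_messages` helpers are hypothetical stand-ins, not Expertiza's actual models or mailer. The sketch collects the reviewers, honors each reviewer's preference, and builds one message per reviewer, whether or not the submission URL changed.

```ruby
# Hypothetical reviewer record; notify_on_submission mirrors the profile option
# "When someone else submits work I am assigned to review".
Reviewer = Struct.new(:name, :email, :notify_on_submission)

# Keep only the reviewers who opted in to submission emails.
def reviewers_to_notify(reviewers)
  reviewers.select(&:notify_on_submission)
end

# Build one notification per opted-in reviewer. The author triggers this
# explicitly, so it does not depend on the submission URL having changed.
def notification_messages(reviewers, assignment_name)
  reviewers_to_notify(reviewers).map do |reviewer|
    {
      to: reviewer.email,
      subject: "Submission updated: #{assignment_name}",
      body: "Hi #{reviewer.name}, the authors have updated their submission. " \
            'Please revisit it and update your review if needed.'
    }
  end
end

reviewers = [
  Reviewer.new('alice', 'alice@example.com', true),
  Reviewer.new('bob', 'bob@example.com', false)
]
messages = notification_messages(reviewers, 'Program 1')
puts messages.map { |m| m[:to] }.inspect # => ["alice@example.com"]
```

Keeping the preference check in a separate helper means the same opt-in rule would apply to both the automatic emails and the author-triggered ones.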

Interface Requirement

The overall user interface will remain the same as in the current system. Only the checkboxes for email preferences on the user profile will be moved closer to their labels to make them more visible.

Quality Requirements

The interface changes are minimal and easy to adapt to. The checkboxes for email preferences will be clearly visible. The proposed changes should not affect the current system functionality in any way; the solution is only intended to enrich the project's features.

Performance Requirements

Meeting these requirements introduces no performance overhead.

Assumptions

The functionality should work when the assignment rubric is not multipart.

Proposed Solution

Profile Email Preference

We have modified the file app/views/users/_prefs.html.erb to move the check boxes to the left of the text.

Zero-weighted scores

If only zero-weighted scores are available in the first round of review, the average of all zero-weighted scores will be shown in gray font. An information button will explain why the scores are shown in gray (“The reviews submitted so far do not count toward the author’s final grade.”).

In the compute_total_score method of the on_the_fly_calc.rb module, we will check whether a weighted score exists; if not, we will calculate the non-weighted score. A method to get the non-weighted score will also need to be added to the questionnaire model. A few UI changes are required to display the non-weighted score in gray with an information icon and the weighted score in green.
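The fallback described above can be sketched as follows. This is a minimal illustration that assumes each rubric's contribution is already available as a hash with a `:weight` (percent) and an `:avg` score (or `nil` if nothing has been submitted). The real compute_total_score in on_the_fly_calc.rb works on questionnaires and response maps, so the hash layout, the `total_score` name, and the `:weighted` flag are illustrative assumptions. If any scored rubric has a non-zero weight, the weighted sum is returned and flagged for green display. Otherwise the plain average of the zero-weighted scores is returned and flagged for gray display.

```ruby
# Hypothetical input: one hash per rubric with its weight in percent and the
# average score (0-100) received so far, or nil if nothing is submitted yet.
def total_score(rubric_scores)
  scored = rubric_scores.reject { |r| r[:avg].nil? }
  weighted = scored.select { |r| r[:weight].to_f > 0 }

  if weighted.any?
    # At least one non-zero weight: weighted sum (displayed in green).
    total = weighted.sum { |r| r[:avg] * r[:weight] / 100.0 }
    { score: total.round(2), weighted: true }
  elsif scored.any?
    # Only zero-weighted scores (e.g. round 1): plain average (displayed in gray).
    average = scored.sum { |r| r[:avg] } / scored.size.to_f
    { score: average.round(2), weighted: false }
  else
    # No reviews yet.
    { score: nil, weighted: false }
  end
end

round1_only = [{ weight: 0, avg: 70 }, { weight: 0, avg: 90 }, { weight: 90, avg: nil }]
result = total_score(round1_only)
puts result[:score]    # => 80.0
puts result[:weighted] # => false
```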

Modified Files

app/models/assignment_participant.rb

app/views/grades/view_team.html.erb

We have added a new method, weighted_scores_exist, which sets the flag scores[:is_weighted] to true and then resets it to false if any review round that already has assessments carries zero weight. Based on this boolean value, the view toggles the CSS class that sets the color of the score.

```ruby
def weighted_scores_exist(scores)
  # This method checks if the round has a weight assigned to it.
  # A more appropriate place for this method is the AssignmentTeam class; it needs to be moved in the future.
  scores[:is_weighted] = true
  scores.each do |score|
    if score[0]["review"]
      is_weighted = score[1][:is_weighted]
      is_completed = score[1][:assessments].any?
      scores[:is_weighted] = false if is_completed && !is_weighted
    end
  end
  scores
end
```
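To show how the flag could drive the score color, here is a self-contained copy of the weighted_scores_exist logic run against a hand-built scores hash. The round keys (such as `:review1`) and the `:is_weighted` / `:assessments` entries follow what the method reads. The sample values, the `score_css_class` helper, and the `score-gray` / `score-green` class names are hypothetical and do not come from Expertiza's actual views or stylesheets.

```ruby
# Same logic as weighted_scores_exist: the flag becomes false as soon as a
# review round that already has assessments carries zero weight.
def weighted_scores_exist(scores)
  scores[:is_weighted] = true
  scores.each do |round, data|
    next unless round['review'] # skip non-review keys, including :is_weighted itself
    scores[:is_weighted] = false if data[:assessments].any? && !data[:is_weighted]
  end
  scores
end

# Hypothetical view helper: choose a CSS class from the flag.
def score_css_class(scores)
  scores[:is_weighted] ? 'score-green' : 'score-gray'
end

first_round_only = {
  review1: { is_weighted: false, assessments: [:r1, :r2] },
  review2: { is_weighted: true, assessments: [] }
}
weighted_round = { review1: { is_weighted: true, assessments: [:r1] } }

puts score_css_class(weighted_scores_exist(first_round_only)) # => score-gray
puts score_css_class(weighted_scores_exist(weighted_round))   # => score-green
```

Updating the existing `:is_weighted` key inside `each` is safe in Ruby. Only adding a new key during iteration raises an error, which is why the flag is initialized before the loop.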

Non-zero weighted scores

If any non-zero weighted scores are available, then the score shown for the assignment should be the weighted average of the scores. For example, if Round 2 reviews are weighted 90% and author feedback is weighted 10%, and two Round 2 reviews each gave the work a score of 80%, and the only author-feedback score was 100%, then the overall score is 80%⨉90% + 100%⨉10% = 82%.
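The arithmetic in this example can be checked with a short Ruby sketch. The `overall_score` helper and the rubric hash layout are hypothetical. Reviews within each rubric are averaged first, and the rubric averages are then combined by their percent weights. Like the example, the sketch divides by 100 rather than by the sum of the weights, so it assumes the weights add up to 100%.

```ruby
# Hypothetical layout: rubric name => { weight: percent, scores: [review scores in percent] }
def overall_score(rubrics)
  rubrics.sum do |_name, rubric|
    next 0 if rubric[:scores].empty? # a rubric with no reviews contributes nothing
    average = rubric[:scores].sum.to_f / rubric[:scores].size
    average * rubric[:weight] / 100.0
  end
end

rubrics = {
  round2_review: { weight: 90, scores: [80, 80] },   # two Round 2 reviews at 80%
  author_feedback: { weight: 10, scores: [100] }     # one author-feedback score at 100%
}
puts overall_score(rubrics) # => 82.0
```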

The score calculation was initially tested manually to verify scenarios with multiple weights and rounds. The existing spec for weighted-average score calculation, for both varying and non-varying rubrics, also covers this scenario. The spec is in spec/models/assignment_spec.rb. The scores method in models/assignment.rb is called for on-the-fly score calculation.

The following file contains the spec for weighted-average score calculation:

spec/models/assignment_spec.rb

Review Update

If a review is submitted and the author(s) then update the submission before the end of the current round, the review is reopened, and the reviewer can go in and update the submitted review. However, the review score previously given in the current round will count until the reviewer updates the submitted review.

This feature can be broken down into the following sub-cases:

1. A submission can be made only before the deadline.

2. A reviewer can edit a review only before the deadline.

3. Scores should be calculated only from the latest submitted review.
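The three sub-cases can be sketched in plain Ruby. This is a minimal illustration under stated assumptions: the `Review` struct, the `before_deadline?`, `latest_reviews`, and `round_average` helpers, and the use of a single deadline per round are hypothetical and not Expertiza's actual models. The sketch shows two behaviors. A reviewer who updates a review has only the newest version counted. A reviewer who has not updated keeps the earlier score.

```ruby
# Hypothetical review record: who reviewed, the score given, and when it was submitted.
Review = Struct.new(:reviewer, :score, :submitted_at)

# Rules 1 and 2: submissions and review edits are allowed only before the deadline.
def before_deadline?(now, deadline)
  now < deadline
end

# Rule 3: keep only each reviewer's most recently submitted review.
def latest_reviews(reviews)
  reviews.group_by(&:reviewer).map { |_reviewer, rs| rs.max_by(&:submitted_at) }
end

# Average over the latest reviews only; nil when there are no reviews.
def round_average(reviews)
  latest = latest_reviews(reviews)
  return nil if latest.empty?
  latest.sum(&:score).to_f / latest.size
end

deadline = Time.utc(2019, 11, 10)
reviews = [
  Review.new('r1', 70, Time.utc(2019, 11, 2)),
  Review.new('r1', 90, Time.utc(2019, 11, 8)), # r1 updated after the resubmission
  Review.new('r2', 80, Time.utc(2019, 11, 3))  # r2 did not update; old score still counts
]

puts before_deadline?(Time.utc(2019, 11, 9), deadline)  # => true
puts before_deadline?(Time.utc(2019, 11, 11), deadline) # => false
puts round_average(reviews)                             # => 85.0
```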

Test Plan

Rspec

New test cases have been added to test and validate the functionality that is not working as expected. Test cases will also be written for features newly added to the system. Test cases have been added to these files:

spec/features/post_deadline_review_submission_spec.rb

spec/models/assignment_participant_spec.rb

The scenarios covered by the newly introduced test cases are as follows:

1. Zero-weighted scores shown in gray: rspec spec/models/assignment_participant_spec.rb

2. Non-zero weighted scores shown in green: rspec spec/models/assignment_participant_spec.rb

3. Review update: rspec spec/features/post_deadline_review_submission_spec.rb

Tests for "submission after deadline" and "review after deadline" were added because none existed. A new spec file, spec/features/post_deadline_review_submission_spec.rb, was created to hold these two cases.

1. Spec to ensure submission can't be made after the deadline

File : spec/features/post_deadline_review_submission_spec.rb

it 'should not allow submission after deadline' do

user = User.find_by(name: "student2065")

stub_current_user(user, user.role.name, user.role)

# goto student_task page, which has link to "Your work"

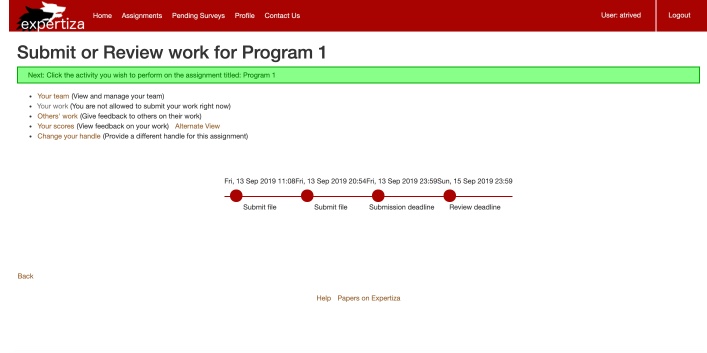

visit 'student_task/view?id=1'

# the page will have text "Your work" but will be grayed

expect(page).to have_content "Your work"

# the page will not have link to content "Your work"

expect{click_link "Your work"}.to raise_error(Capybara::ElementNotFound)

end

2. Spec to ensure a review can be done only before the deadline

File: spec/features/post_deadline_review_submission_spec.rb

```ruby
it "should not be able to review work after deadline" do
  # The spec is written to reproduce the following bug: the "Others' work" link is open after the deadline has passed
  user = User.find_by(name: "student2065")
  stub_current_user(user, user.role.name, user.role)
  visit 'student_task/view?id=1'
  # the page should have the content, displayed in gray after the deadline passes,
  # but there should not be any link attached to it
  expect(page).to have_content "Others' work"
  # this is the bug: even after the deadline has passed, the link is still present
  # the UI comment in file views/student_task/view.html.erb says
  #
  # But the link seems to be open even after the deadline passed.
  # Screenshot attached as part of wiki for E1975, Fall 2019
  expect(page).to have_link("Others' work", href: "/student_review/list?id=1")
end
```

This test reproduces the following bug: at times, the review link remains open even after the deadline has passed. The bug is also shown in the following screenshot for Program 1.

3. Score calculation

Review score calculation for assignments is part of models/assignment.rb. It is handled by the scores method in this file and is tested for multipart rubrics, both varying and non-varying by round. The corresponding spec is in spec/models/assignment_spec.rb.

```ruby
describe '#scores' do
  context 'when assignment is varying rubric by round assignment' do
    it 'calculates scores in each round of each team in current assignment' do
      allow(participant).to receive(:scores).with(review1: [question]).and_return(98)
      allow(assignment).to receive(:varying_rubrics_by_round?).and_return(true)
      allow(assignment).to receive(:num_review_rounds).and_return(1)
      allow(ReviewResponseMap).to receive(:get_responses_for_team_round).with(team, 1).and_return([response])
      allow(Answer).to receive(:compute_scores).with([response], [question]).and_return(max: 95, min: 88, avg: 90)
      expect(assignment.scores(review1: [question]).inspect).to eq("{:participants=>{:\"1\"=>98}, :teams=>{:\"0\"=>{:team=>#<AssignmentTeam id: 1, "\
        "name: \"no team\", parent_id: 1, type: \"AssignmentTeam\", comments_for_advertisement: nil, advertise_for_partner: nil, "\
        "submitted_hyperlinks: \"---\\n- https://www.expertiza.ncsu.edu\", directory_num: 0, grade_for_submission: nil, "\
        "comment_for_submission: nil>, :scores=>{:max=>95, :min=>88, :avg=>90.0}}}}")
    end
  end

  context 'when assignment is not varying rubric by round assignment' do
    it 'calculates scores of each team in current assignment' do
      allow(participant).to receive(:scores).with(review: [question]).and_return(98)
      allow(assignment).to receive(:varying_rubrics_by_round?).and_return(false)
      allow(ReviewResponseMap).to receive(:get_assessments_for).with(team).and_return([response])
      allow(Answer).to receive(:compute_scores).with([response], [question]).and_return(max: 95, min: 88, avg: 90)
      expect(assignment.scores(review: [question]).inspect).to eq("{:participants=>{:\"1\"=>98}, :teams=>{:\"0\"=>{:team=>#<AssignmentTeam id: 1, "\
        "name: \"no team\", parent_id: 1, type: \"AssignmentTeam\", comments_for_advertisement: nil, advertise_for_partner: nil, "\
        "submitted_hyperlinks: \"---\\n- https://www.expertiza.ncsu.edu\", directory_num: 0, grade_for_submission: nil, "\
        "comment_for_submission: nil>, :scores=>{:max=>95, :min=>88, :avg=>90}}}}")
    end
  end
end
```

Manual Testing

Step 1. The instructor creates an assignment and assigns zero and non-zero weights to the rubrics.

Step 2. The author submits the work in Expertiza.

Step 3. Peers review the submitted work.

Test 1: Zero weighted scores shown in gray.

Log in as an author in Expertiza and go to the scores page to view the feedback.

Zero weighted scores should be displayed in gray to the logged in user.

Test 2: Information button.

Log in as an author in Expertiza and go to the scores page to view the feedback.

The information button should explain why the scores are shown in gray (“The reviews submitted so far do not count toward the author’s final grade.”)

Step 4. The mentor evaluates the final submission and gives grades.

Test 3: Display non-zero weighted scores

Log in as an author in Expertiza and go to the scores page to view the feedback.

The scores shown for the assignment should be the weighted average of the scores.

The zero-weighted scores should be replaced by the non-zero weighted scores.

Step 5. The author updates the work and resubmits it.

Test 4: Review Update

The review should be reopened, and the reviewer should be able to go in and update the submitted review.

If the review is not updated, the previous review score should be retained.