CSC/ECE 506 Spring 2013/10b ps

Introduction

In this wiki we seek to perform a survey of memory consistency models in modern microprocessors. We will begin by giving a brief synopsis of what memory consistency is and how it can be achieved. We will also discuss some legacy systems that utilized some initial consistency models and then discuss how those models evolved into more sophisticated versions in more recent years. After we have this foundation we will move into more advanced architectures and implementations typically seen today.

What is Memory Consistency

Before going further, we must define what a Memory Consistency Model is. The Memory Consistency Model specifies how memory behaves when multiple processors read and write shared memory, in particular the order in which one processor's accesses may become visible to the others. Because it defines such a fundamental part of system behavior, memory consistency affects system design, programming languages, and the underlying hardware.

This matters because we want our systems to be as efficient as possible. To that end, the hardware performs various optimizations that overlap memory operations and allow them to complete out of order, and the compiler can reorder operations as well to increase execution efficiency. It is intuitive to think that instructions execute sequentially, but on a single processor execution only needs to appear sequential where operations access the same memory location. As multi-processor systems and parallel computing became a necessity in the technology industry, so did correct and highly efficient memory consistency models. A memory consistency model permits these optimizations while acting as a contract between the programmer and the system that ensures correctness. In short, allowing tasks to execute in parallel is great for improving performance, but it also introduces new challenges because operations can now complete in a different order than the one in which they were written [16, 10].

How Memory Consistency Used to be Done

It is intuitive to expect operations executing on a multi-processor system to behave the same way as instructions executing on a single-processor system. This interleaving model is called Sequential Consistency and is the classical approach to memory consistency. It was formally defined by Lamport as, “The result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program.” In other words, every memory access appears to become visible to all processors at once, in a single global order.
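Lamport's definition can be checked mechanically on a small case. The sketch below is a minimal illustration, not a model of any real machine: it enumerates every interleaving of two hypothetical two-instruction threads (the variable and register names are assumptions made for this example) and collects the register values each interleaving produces.

```python
from itertools import combinations

# Hypothetical litmus test: each thread writes one flag, then reads the other.
# Operations are (kind, variable, register); writes always store the value 1.
THREAD0 = [("write", "x", None), ("read", "y", "r0")]
THREAD1 = [("write", "y", None), ("read", "x", "r1")]


def interleavings(a, b):
    """Yield every merge of a and b that keeps each thread's program order."""
    total = len(a) + len(b)
    for slots in combinations(range(total), len(a)):
        ia, ib = iter(a), iter(b)
        yield [next(ia) if i in slots else next(ib) for i in range(total)]


def run(schedule):
    """Execute one interleaving against a single shared memory."""
    memory = {"x": 0, "y": 0}
    regs = {}
    for kind, var, reg in schedule:
        if kind == "write":
            memory[var] = 1
        else:
            regs[reg] = memory[var]
    return regs["r0"], regs["r1"]


def sc_outcomes():
    """All (r0, r1) results that Sequential Consistency permits."""
    return {run(s) for s in interleavings(THREAD0, THREAD1)}


print(sorted(sc_outcomes()))  # [(0, 1), (1, 0), (1, 1)]
```

The result (0, 0) never appears: in any single sequential order, at least one write precedes the other thread's read. Relaxed models that let a read bypass an earlier write can produce it.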

This type of system was seen in the 1980s, in systems like the 386-based Compaq SystemPro. The main way a Sequentially Consistent system could achieve overlap was to place writes in a write buffer so that computation could proceed, overlapped with the pending write, until the next read. Memory operations are issued in program order by each processor. Because operations are executed one at a time, they appear to be atomic with respect to each other.

The advantage of a model like this is that it is easy to understand from a programmer's perspective. Unfortunately, the rules put in place by this model are very restrictive and can negatively impact performance. Sequential Consistency disallows the reordering of the shared memory operations issued by each processor. This strictness results in high latency, and the write buffer yields very little benefit over truly serial execution, because reads tend to be closely interleaved with writes and leave the buffer poorly utilized.

Memory Consistency Today

While maintaining Sequential Consistency guarantees correctness, it is very limiting in terms of performance, because each processor's memory operations must appear to execute one at a time, atomically and in program order. As a result, widely implemented computer architectures relax the requirements imposed by the Sequential Consistency model while still maintaining program correctness. These Relaxed Consistency models allow the processor to adjust the order of memory accesses in order to execute instructions more efficiently.

Relaxed models can be categorized based on how they relax the program order constraint. Below are three general categories we will be examining during this discussion:

1. Write to Read relaxation: allows a write followed by a read to execute out of program order. Examples of models that provide this relaxation include the IBM-370 and the Sun SPARC V8 Total Store Ordering (TSO).

2. Write to Write relaxation: allows two writes to execute out of program order. An example of a model that provides this relaxation is the Sun SPARC V8 Partial Store Ordering (PSO).

3. Read to Read and Read to Write relaxation: allows reads to execute out of program order with respect to the reads and writes that follow them. Examples of models that provide this relaxation include the DEC Alpha (Alpha), the Sun SPARC V9 Relaxed Memory Order (RMO), and the IBM PowerPC (PowerPC).

Apart from relaxing program order, some of these models also relax the write atomicity requirement. Examples of this type of relaxation are:

4. Read others’ write early: allows a read to return the value of another processor’s write before the write is made visible to all other processors (for cache-based systems).

5. Read own write early: allows a processor to read the value of its own previous write before the write is made visible to other processors.

Implementations

Below we look at some Relaxed Consistency models that were implemented in commercial systems. We divide our discussion of commercial systems into two major categories: the more traditional computing architectures found in servers, large clusters, and personal computers, and the newly emerging mobile devices, which are becoming more sophisticated each day.

Note that a single architecture may employ multiple Relaxed Consistency models; we are just selecting some examples that illustrate how each memory consistency model works.

Commercial Computers

Write to Read relaxation:

In Write to Read relaxation, a read may be reordered with respect to previous writes from the same processor, and the appearance of sequential consistency is largely maintained by enforcing the remaining program order constraints. Though relaxing the program order from a write to a following read can improve performance, this reordering alone is not sufficient for compiler optimizations: most compiler optimizations require the full flexibility of reordering any two operations in a program, not just a write followed by a read. The IBM-370 and the SPARC V8 TSO use Write to Read relaxation; the primary difference between them is when they allow a read to return the value of a write.

IBM-370

The IBM-370 model relaxes the program order from a write to a following read in certain cases but maintains the atomicity requirements of Sequential Consistency. Where the program order from a write to a following read must be enforced, safety net serialization instructions may be placed between the two operations. Among the relaxed consistency models, this one is the most stringent, second only to Sequential Consistency.

Note that the Write to Read relaxed consistency model implemented by the IBM-370 still requires program order between a write and a following read in the following three cases:

- A write followed by a read to the same location [depicted by dashed line in between W and R in the figure].

- If either the write or the read is generated by a serialization instruction [WS, RS].

- If there is a non-memory serialization instruction, such as a fence instruction [F], between the write and the read.

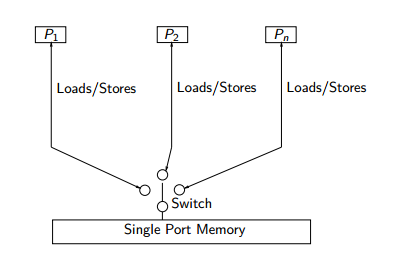

The conceptual system shown in the above figure is similar to that used for representing Sequential Consistency. The main difference is the presence of a buffer between each processor and the memory. Since we assume that each processor issues its operations in program order, we use the buffer to model the fact that the operations are not necessarily issued in the same order to memory. The cancelled reply path from the buffer to the processor implies that a read is not allowed to return the value of a write to the same location from the buffer.

The IBM-370 model has two types of serialization instructions: special instructions that generate memory operations (e.g., compare-and-swap) and special non-memory instructions (e.g., a special branch). [9]

SPARC V8 Total Store Ordering (TSO)

The total store ordering (TSO) model is one of the models proposed for the SPARC V8 architecture. TSO always allows a write followed by a read to complete out of program order; that is, a read may be reordered with respect to pending writes from the same processor, even writes to the same location. A read returns the value of a pending write to the same location from the buffer if one is present; otherwise it fetches the value from memory. All other program orders are maintained. This is shown in the conceptual system below by the reply path from the buffer to a read that is no longer blocked.

For TSO, a safety net for write atomicity is required only for a write that is followed by a read to the same location in the same processor. The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes.

Difference between IBM-370 and SPARC V8 TSO:

Let us consider the program segment below to demonstrate the difference between TSO and IBM 370 models:

First consider the program segment in (a). Under the SC or IBM-370 model, the outcome (u,v,w,x)=(1,1,0,0) is disallowed. However, this outcome is possible under TSO because reads are allowed to bypass all previous writes, even if they are to the same location; therefore the sequence (b1,b2,c1,c2,a1,a2) is a valid total order for TSO. Of course, the value requirement still requires b1 and b2 to return the values of a1 and a2, respectively, even though the reads occur earlier in the sequence than the writes. This maintains the intuition that a read observes all the writes issued from the same processor as the read. Now consider the program segment in (b). In this case, the outcome (u,v,w,x)=(1,2,0,0) is not allowed under SC or IBM-370, but is possible under TSO. [9]
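The difference between the two models can be explored with a small store-buffer simulation. This is a minimal sketch under stated assumptions, not a faithful model of either machine: each thread gets a FIFO write buffer, the `forward` flag decides whether a read may take its value from the thread's own buffer (TSO-like) or must stall until the buffered write to that location drains (IBM-370-like), and the test program is a hypothetical one chosen to fit the (u,v,w,x) naming used above. The function and constant names are invented for this example.

```python
def explore(programs, order, forward):
    """Return every reachable tuple of final register values, listed in `order`.

    forward=True : a read may return a value from its own store buffer.
    forward=False: a read stalls while its own buffer holds a write to the
                   same location.
    """
    variables = {var for prog in programs for (_, var, _) in prog}
    outcomes = set()

    def step(pcs, buffers, memory, regs):
        progressed = False
        for t, prog in enumerate(programs):
            # Choice 1: drain thread t's oldest buffered write (FIFO order).
            if buffers[t]:
                var, val = buffers[t][0]
                drained = buffers[:t] + (buffers[t][1:],) + buffers[t + 1:]
                step(pcs, drained, {**memory, var: val}, regs)
                progressed = True
            # Choice 2: execute thread t's next instruction in program order.
            if pcs[t] == len(prog):
                continue
            kind, var, arg = prog[pcs[t]]
            advanced = pcs[:t] + (pcs[t] + 1,) + pcs[t + 1:]
            if kind == "write":
                entry = ((var, arg),)
                filled = buffers[:t] + (buffers[t] + entry,) + buffers[t + 1:]
                step(advanced, filled, memory, regs)
                progressed = True
            else:
                pending = [v for (x, v) in buffers[t] if x == var]
                if pending and not forward:
                    continue  # the read waits for the buffered write to drain
                value = pending[-1] if pending else memory[var]
                step(advanced, buffers, memory, {**regs, arg: value})
                progressed = True
        if not progressed:  # every instruction done and every buffer empty
            outcomes.add(tuple(regs[r] for r in order))

    step(tuple(0 for _ in programs), tuple(() for _ in programs),
         {v: 0 for v in variables}, {})
    return outcomes


# Hypothetical test: each thread writes one location, then reads its own
# location followed by the other thread's location.
OWN_THEN_OTHER = [
    [("write", "A", 1), ("read", "A", "u"), ("read", "B", "w")],
    [("write", "B", 1), ("read", "B", "v"), ("read", "A", "x")],
]
REGS = ("u", "v", "w", "x")

tso = explore(OWN_THEN_OTHER, REGS, forward=True)
ibm370 = explore(OWN_THEN_OTHER, REGS, forward=False)
print((1, 1, 0, 0) in tso, (1, 1, 0, 0) in ibm370)  # True False
```

With forwarding, both threads can read their own buffered write and still miss the other thread's write. Without forwarding, each read of the thread's own location forces its write out to memory first, which rules that outcome out in this model.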

Write to Write relaxation:

For Write to Write relaxation we allow:

1. Two writes to execute out of program order.

2. A write followed by a read to execute out of program order.

This relaxation enables a number of hardware optimizations, including write merging in a write buffer and overlapping multiple write misses, so that writes are serviced at a much faster rate. However, this relaxation is still not flexible enough to be useful to a compiler. The SPARC V8 Partial Store Ordering model (PSO) is an example of a system that uses Write to Write relaxation.

SPARC V8 Partial Store Ordering

The Partial Store Ordering (PSO) model is similar to the TSO model for SPARC V8. The figure below shows a mostly identical conceptual system, with only a slight difference in the program order: writes may be overlapped as long as they are not to the same location. This is represented by the dashed line between the W's in the program order figure. The safety net for program order is a fence instruction, called the store barrier or STBAR, which may be used to enforce the program order between writes.
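The effect of reordering writes, and of STBAR, can be sketched with a small simulation. This is a minimal illustration under assumptions of our own, not a model of the SPARC hardware: one writer thread has a buffer whose entries may drain in any order unless an STBAR separates them, one reader thread reads memory in program order, and the `data`/`flag` program and all function names are hypothetical.

```python
def outcomes(writer, reader):
    """Enumerate the reader's register tuples reachable with a PSO-like writer.

    writer: list of ("write", var, value) or ("stbar",) entries.
    reader: list of ("read", var, register) entries, run in program order.
    Simplification: the writer stores to each location at most once, so the
    same-location write ordering that PSO preserves never comes into play.
    """
    variables = {op[1] for op in writer + reader if op[0] != "stbar"}
    results = set()

    def step(wpc, epoch, buffer, rpc, memory, regs):
        moved = False
        if wpc < len(writer):  # the writer issues its next instruction
            moved = True
            op = writer[wpc]
            if op[0] == "stbar":
                step(wpc + 1, epoch + 1, buffer, rpc, memory, regs)
            else:
                entry = (epoch, op[1], op[2])
                step(wpc + 1, epoch, buffer + (entry,), rpc, memory, regs)
        if buffer:  # drain any buffered write from the oldest barrier epoch
            oldest = min(e for e, _, _ in buffer)
            for i, (e, var, val) in enumerate(buffer):
                if e == oldest:
                    moved = True
                    step(wpc, epoch, buffer[:i] + buffer[i + 1:], rpc,
                         {**memory, var: val}, regs)
        if rpc < len(reader):  # the reader performs its next read from memory
            moved = True
            _, var, reg = reader[rpc]
            step(wpc, epoch, buffer, rpc + 1, memory,
                 {**regs, reg: memory[var]})
        if not moved:  # both threads finished and the buffer is empty
            results.add(tuple(regs[r] for (_, _, r) in reader))

    step(0, 0, (), 0, {v: 0 for v in variables}, {})
    return results


# Hypothetical message-passing test: publish data, then raise a flag.
READER = [("read", "flag", "r_flag"), ("read", "data", "r_data")]
NO_BARRIER = [("write", "data", 1), ("write", "flag", 1)]
WITH_STBAR = [("write", "data", 1), ("stbar",), ("write", "flag", 1)]

# (r_flag, r_data) == (1, 0) means the flag was seen before the data.
print((1, 0) in outcomes(NO_BARRIER, READER),
      (1, 0) in outcomes(WITH_STBAR, READER))  # True False
```

Without the barrier the flag write can reach memory before the data write, so the reader may see the flag set and still read stale data. Placing the barrier before the flag write removes that outcome in this model.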

Let us consider an example to demonstrate the working of PSO.

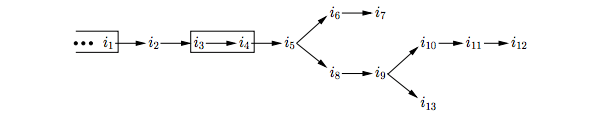

With relaxed Write to Read models, the only possible value for (u,v) is (1,1). Because PSO allows writes to different locations to complete out of program order, it also allows the outcomes (0,0), (0,1), and (1,0) for (u,v). In this example, a STBAR instruction needs to be placed immediately before c1 on P1 in order to disallow all outcomes except (1,1). [9]

Read to Read and Read to Write relaxation:

For Read to Read and Read to Write relaxation we shed the restrictions on program order between all operations to different locations, including a read followed by a read and a read followed by a write. This flexibility makes it possible to hide the latency of read operations by implementing true non-blocking reads in the context of either static (in-order) or dynamic (out-of-order) scheduling processors, supported by techniques such as non-blocking (lockup-free) caches and speculative execution. The compiler also has full flexibility to reorder operations.

The DEC Alpha, Relaxed Memory Order (RMO) on the SPARC V9, and PowerPC are examples of systems using this model. Except for Alpha, all the other models allow reordering of two reads to the same location.

All of the models in this group allow a processor to read its own write early, with the exception of the PowerPC. All these architectures provide explicit fence instructions to ensure program order. However, frequent use of fence instructions can incur a significant overhead due to the increase in the number of instructions and the extra delay that may be associated with executing them.

DEC Alpha

The DEC Alpha allows reads and writes to different locations to complete out of program order unless there is a fence instruction between them. Memory operations to the same location, including reads, are required to complete in program order. The safety net for program order is provided through two fence instructions, the memory barrier (MB) and the write memory barrier (WMB). The MB instruction can be used to maintain program order between any memory operations, while the WMB instruction provides this guarantee only among write operations. The Alpha model does not require a safety net for write atomicity. The conceptual system shown above is the same as that of the IBM-370, which requires a read to return the value of the last write operation to the same location. But since the program order from a write to a read is relaxed, we can safely exploit optimizations such as read forwarding.

SPARC V9 Relaxed Memory Order (RMO)

The SPARC V9 architecture uses RMO, which is an extension of the TSO and PSO models used in SPARC V8. In addition to the relaxations of PSO, this model relaxes the Read to Read and Read to Write program orders. However, the order from a read to a following write, or between two writes, to the same location is not relaxed. This is shown in the figure for program order below. RMO provides four types of fences [F1 through F4] that allow program order to be selectively maintained between any two types of operations. A single fence instruction, MEMBAR, can specify a combination of the above fence types by setting the appropriate bits in a four-bit mask field. No safety net for write atomicity is required.

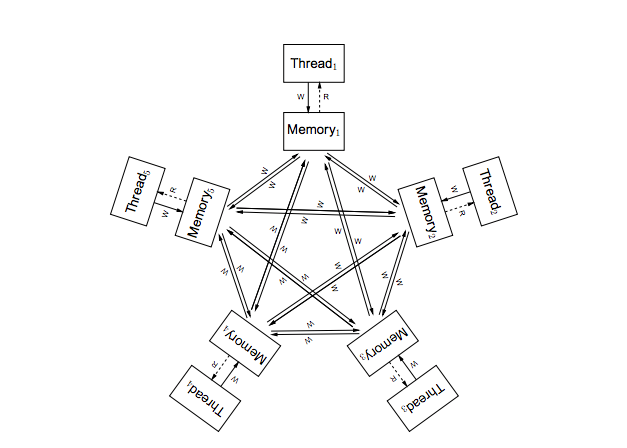
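The idea of combining the four fence types in a single four-bit mask can be sketched as a set of flags. This is a minimal illustration: the flag names follow the #LoadLoad-style mnemonics, and the bit positions are an assumption that should be verified against the SPARC V9 architecture manual before relying on them. The helper `enforces` is hypothetical.

```python
from enum import IntFlag


class Membar(IntFlag):
    # Assumed bit layout (mask bits 0 through 3); verify against the manual.
    LOAD_LOAD = 0x1    # earlier loads complete before later loads
    STORE_LOAD = 0x2   # earlier stores complete before later loads
    LOAD_STORE = 0x4   # earlier loads complete before later stores
    STORE_STORE = 0x8  # earlier stores complete before later stores


# Which fence type orders a given (earlier, later) pair of operation kinds.
_NEEDED = {
    ("load", "load"): Membar.LOAD_LOAD,
    ("store", "load"): Membar.STORE_LOAD,
    ("load", "store"): Membar.LOAD_STORE,
    ("store", "store"): Membar.STORE_STORE,
}


def enforces(mask, earlier, later):
    """True if a fence with this mask keeps `earlier` ordered before `later`."""
    return bool(mask & _NEEDED[(earlier, later)])


# A fence that orders everything sets all four bits.
FULL_FENCE = (Membar.LOAD_LOAD | Membar.STORE_LOAD
              | Membar.LOAD_STORE | Membar.STORE_STORE)
# A fence that orders only writes, similar in spirit to STBAR in PSO.
WRITE_FENCE = Membar.STORE_STORE

print(int(FULL_FENCE))                          # 15
print(enforces(WRITE_FENCE, "store", "store"))  # True
print(enforces(WRITE_FENCE, "store", "load"))   # False
```

Selecting only the bits a program needs is what lets RMO keep the cost of a fence proportional to the ordering actually required.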

IBM PowerPC

The PowerPC does constrain the program order in some situations, such as:

- Between sub-operations. Each operation may consist of multiple sub-operations, and all sub-operations of the first operation must complete before any sub-operations of the second operation; this is represented by the double lines between operations in the figure below.

- Writes to the same location.

- Among conflicting operations.

The IBM PowerPC model exposes the multiple-copy semantics of memory to the programmer, as shown in the conceptual system above. The safety net fence instruction is called SYNC, which is similar to the MB fence instruction of the Alpha systems. However, even with a SYNC placed between two reads to the same location, the reads may still occur out of program order. The PowerPC also allows a write to be seen early by another processor’s read. Hence a read-modify-write operation may be needed to enforce program order between two reads to the same location as well as to make writes appear atomic.

The table below summarizes the program constraint under each model we have described so far and other commercial servers that also implement these three Relaxed Consistency models (these additional examples are not discussed above).

Relaxed Consistency Method

| Commercial System or Architecture | W -> R | W -> W | R -> R and/or R -> W |
|---|---|---|---|
| IBM-370 | Yes | | |
| SPARC V8 TSO | Yes | | |
| SPARC V8 PSO | Yes | Yes | |
| DEC Alpha | Yes | Yes | Yes |
| SPARC V9 RMO | Yes | Yes | Yes |
| IBM PowerPC | Yes | Yes | Yes |
| AlphaServer 8200/8400 | Yes | Yes | Yes |
| Cray T3D | Yes | Yes | Yes |
| SparcCenter 1000/2000 | Yes | | |
| Sequent Balance | Yes | | |
| AMD 64 | Yes | Yes | |
| IA64 | Yes | Yes | Yes |
| PA-RISC | Yes | Yes | Yes |
| x86 | Yes | Yes | |

Mobile Devices

Processors that are designed to be fast, low power, low heat, and capable of supporting many applications are becoming very popular in today’s technology space. From tablets to smartphones, many of these devices run on the ARM architecture and, to a lesser extent, the IBM POWER architecture. These multiprocessors use highly relaxed memory models, which allow a wide range of hardware optimizations that yield better performance, better power usage, and simpler hardware. The pillars on which the ARM architecture is built to achieve as much hardware optimization as possible are:

1. Reads and writes can be done out of order. This includes speculative reads and writes that are part of branches not yet reached. Many other relaxed memory models only allow local reordering under specific conditions, but in ARM any local reordering is allowed unless the programmer imposes constraints.

2. The memory system does not guarantee that writes will become visible to all other hardware threads at the same point.

To conceptualize the architecture, think of each hardware thread as effectively having its own copy of memory. A write by one thread may propagate to the other threads in any order, and the propagations of different writes may be interleaved arbitrarily. This allows for great hardware optimization but also puts the responsibility on the programmer's shoulders to insert barriers where this behavior must be constrained.
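The "own copy of memory" picture can be turned into a small experiment. The sketch below is a minimal illustration of the conceptual model described above, not of any particular ARM implementation. It assumes two writer threads that each set one variable (x and y), and two hypothetical reader threads, numbered 2 and 3, whose reads are kept in program order. It compares a model in which a write reaches every reader at the same instant with one in which it reaches each reader's copy separately.

```python
from itertools import permutations

# Hypothetical readers: thread id -> variables read, in program order.
READERS = {2: ["x", "y"], 3: ["y", "x"]}


def outcomes(multi_copy_atomic):
    """Return all (T2 x, T2 y, T3 y, T3 x) results for the chosen model."""
    if multi_copy_atomic:
        # Each write becomes visible to both readers in one step.
        props = [("prop", var, (2, 3)) for var in ("x", "y")]
    else:
        # Each write propagates to each reader's copy independently.
        props = [("prop", var, (t,)) for var in ("x", "y") for t in (2, 3)]
    reads = [("read", t, i) for t, seq in READERS.items()
             for i in range(len(seq))]
    results = set()
    for schedule in permutations(props + reads):
        copies = {t: {"x": 0, "y": 0} for t in READERS}
        seen = {t: [] for t in READERS}
        valid = True
        for event in schedule:
            if event[0] == "prop":
                _, var, targets = event
                for t in targets:
                    copies[t][var] = 1
            else:
                _, t, i = event
                if i != len(seen[t]):  # read out of program order: skip
                    valid = False
                    break
                seen[t].append(copies[t][READERS[t][i]])
        if valid:
            results.add(tuple(seen[2] + seen[3]))
    return results


# (1, 0, 1, 0): thread 2 sees x before y, while thread 3 sees y before x.
DISAGREE = (1, 0, 1, 0)
print(DISAGREE in outcomes(multi_copy_atomic=False),
      DISAGREE in outcomes(multi_copy_atomic=True))  # True False
```

When writes reach each copy independently, the two readers can disagree about which write happened first. That disagreement is impossible when every write becomes visible to all threads at the same point, which is the guarantee the text says this memory system does not give.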

Given these properties, a greater focus is put on programmer-observable behavior: the results a multithreaded program can exhibit when it is executed. This concerns not performance but the correctness of the results. The programmer must take the looseness of the ARM architecture into account and realize that a multithreaded application may exhibit different observable behavior depending on the conditions under which it is run.

References

1. http://www.intel.com/content/www/us/en/architecture-and-technology/64-ia-32-architectures-software-developer-vol-3a-part-1-manual.html

2. http://pic.dhe.ibm.com/infocenter/lnxinfo/v3r0m0/topic/liaaw/ordering.2006.03.13a.pdf

3. http://dl.acm.org/citation.cfm?id=1941553.1941594

4. http://home.deib.polimi.it/speziale/tech/memory_consistency/mc.html

5. http://homes.cs.washington.edu/~djg/papers/asf_micro2010.pdf

6. http://people.ee.duke.edu/~sorin/papers/asplos10_consistency.pdf

7. http://www.rdrop.com/users/paulmck/scalability/paper/whymb.2010.06.07c.pdf

8. http://en.wikipedia.org/wiki/Memory_ordering

9. http://www.montefiore.ulg.ac.be/~pw/cours/psfiles/struct-cours12-e.pdf

10. http://www2.engr.arizona.edu/~ece569a/Readings/ppt/memory.ppt

11. http://ipdps.cc.gatech.edu/2000/fmppta/18000989.pdf

12. http://preshing.com/20120930/weak-vs-strong-memory-models

13. http://csg.csail.mit.edu/pubs/memos/Memo-493/memo-493.pdf

14. http://classes.soe.ucsc.edu/cmpe221/Spring05/papers/29multi.pdf

15. http://os.inf.tu-dresden.de/Studium/DOS/SS2009/04-Coherency.pdf

16. http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf

17. http://www.cl.cam.ac.uk/~pes20/ppc-supplemental/test7.pdf

18. http://www.cl.cam.ac.uk/~pes20/ppc-supplemental/pldi105-sarkar.pdf

19. http://www.cs.utah.edu/formal_verification/publications/conferences/pdf/charme03.pdf

20. http://www.cl.cam.ac.uk/~pes20/weakmemory/x86tso-paper.tphols.pdf