CSC/ECE 506 Spring 2012/11a ht

Introduction

When dealing with a relatively small number of processors (8-16), according to Solihin 320, using a bus based shared memory structure is fine. Unfortunately, when you need to provide a shared memory structure for processors much greater than that, you will need a different set of organization. This new organization is needed due to the physical limitations of the bus. There are two ways you can create such a system. These include Distributed Shared Memory (DSM) or Non-Uniform Memory Access (NUMA). The benefits of having a DSM and NUMA is that we can now scale to a larger amount of processors. The disadvantage is that scaling in such a way may not be the most cost-effective solution, Solihin 320. For the remainder of this section, we will be discussing the performance of DSM's.

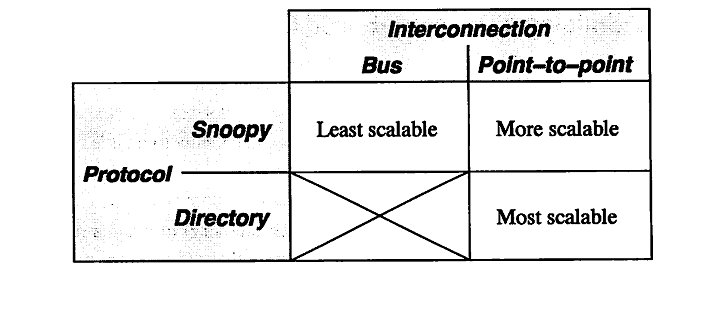

According to Solihin 320, there are two aspects that restrict the scalability of bus-based multiprocessors. These include the physical limitations of interconnections and the limitations of the protocol. To explain in detail, on a bus-based system, adding a processor will not affect any other physical restrictions on the system. Unfortunately, when adding a new processor, you will be reducing the speed of the bus. Second, the protocol needed to keep coherence does not scale well. As you increase the number of processors to the system, the amount of traffic also increases. This means that you might run the risk of overwhelming the bandwith. According to Solihin, there are a few ways that we can mitigate this problem. The following is from 321 of the Solihin textbook.

From the table, we can see that there is three ways to scale a multiprocessor system. The first being a single bus system. This is the least scalable due to the limitations of the bus wire itself. As you add processors you will decrease the bus speed due to having to increase the wire length. Also, you run into an issue of overwhelming the bus due to the amount of traffic. The second way is to use a point-to-point bus system. This allows for the speed of the bus to remain relatively fast, but since the traffic will also scale with the number of processors, there will be a limitation due to overwhelming the bus system with traffic. Lastly, the most scalable system to date is using a directory system. This allows for the bus to remain fast due to the short wires, and the bus traffic to remain low since the directory holds information on cache locations.

Protic <ref name="protic">Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996 doi: 10.1109/88.494605 paper</ref> defines a DSM to "consists of multiple independent processing nodes with local memory modules, connected by a general interconnection network." What this means is that rather than having these processors connected on a single bus line, there is a network of bus lines. The new issues that arise from such a method involves how to communicate with another node. This is usually solved using a message passing model that is effective depending on the topology that is used. According to Protic <ref name="protic"></ref>, "... compared to shared-memory systems, hardware problems are easier and software problems more complex in distributed-memory systems." What we can walk away with is that even though you have made the hardware limitation less of a factor, the software problem is now more complex in nature.

According to <ref name="nitzberg">Nitzberg, B.; Lo, V.; , "Distributed shared memory: a survey of issues and algorithms," Computer , vol.24, no.8, pp.52-60, Aug. 1991

doi: 10.1109/2.84877

paper</ref> DSM has been researched since the 1980's. There are many reasons why DSM has been an area of research focus. Uniprocessor bus-based systems suffer from a hardware and software limitation that can be mitigated using a DSM. But, this has only become an issue as we start having more and faster processors that we want to add to the system. According to Nitzberg <ref name="nitzberg"></ref>, there are three approaches that have been used to implement a DSM system. These include hardware, operating system, and compiler implementations.

Optimization Implementations

Shasta Protocol

The Shasta protocol improves on the regular software disributed shared memory system such that the shared data can be kept coherent at fine granularity. This is implemented by inserting inline code that checkes the cache state of shared data before each load or store. This protocol also allows the coherence granularity to be varied across different shared data structures in a single application, thus alleviating any potential inefficiencies that arise from the fixed large(page-size) granularity of the communication typical in most software shared memory systems. As in the hardware cache-coherent multiprocessors, shared data in this systems has three states - Invalid, Shared and Exclusive. This protocol provides a number of mechanisms for dealing with the long communication latencies in a workstation cluster. It minimizes extraneous coherence messages, and hence requires fewer messages to satisfy shared memory operations compared to protocols commonly used in hardware DSM systems. It also includes optimizations such as non-blocking stores, that aggressively exploit a relaxed memory consistency model. Other optimizations include detection of migratory data sharing, issuing multiple load misses simultaneously, merging of load and store misses to the same cache line, and support for prefetching and home placement directives.

Multiple Coherence Granularity

The Shasta protocol can support multiple granularities for communication and coherence, even with a single application. This can provide a significant performance boost in a software DSM system, since data with good spatial locality can be communicated at a coarse grain to amortize large communication overheads , while data prone to false sharing can use a finer sharing granularity. The implementation automatically chooses a block size based on the allocated size of a data structure. The basic heuristic is to choose a block size equal to the object size up to a certain threshold; the block size for objects larger than a given threshold is simply set to the base Shasta line size (typically set to be 64 bytes). The rationale for the heuristic is that small objects should be transferred as a single unit, while larger objects (e.g. large arrays) should be communicated at a fine granularity to avoid false sharing. Since the choice of the block size does not affect the correctness of the program, the programmer can freely experiment with various block sizes to tune the performance of an application. Controlling the coherence granularity in this manner is significantly simpler than approaches adopted by object-based or region-based DSM systems, since the latter approaches can affect correctness and typically require a more substantial change to the application.

Minimization of Protocol Messages

The Shasta protocol is designed to minimize extraneous coherence messages, given the relatively high overheads associated with handling messages in software DSM implementations. In this protocol, the current owner node specified by the directory guarantees to service a request that is forwarded to it. The fact that the current owner guarantees to service a request that is forwarded to it allows the protocol to complete all directory state changes when a requestor first reaches the home. This property eliminates the need for extra messages that are sent back to the home to confirm that the forwareded request is satisfied. Since this protocol supports dirty sharing, it also eliminates the need for sending an up-to-date copy of the line back to the home in case of read transaction when the home node is remote and the data is dirty in another node. Supporting exclusive requests reduces the need for fetching data on a store if the requesting processor already has the line in shared state. Also, the number of invalidation acknowledgements that are expected for an exclusive request are piggybacked on on of the invalidation acknowledgements to the requestor instead of being sent as a separate message.

Batching

Batching together checks for multiple loads and stores is an important technique for reducing the overhead of miss checks. The batching technique also applies to loads and stores via multiple base registers. For each set of loads and stores that can be batched, the Shasta compiler generates code to check the lines that may be referenced via each base register that is used. A batch miss handling routine is called if any of the lines referenced via any of the base registers are not in the correct state. Batching can also be useful for eliminating and hiding communication latency in a parallel execution, since it allows load and store misses to the same line to be combined into a single store miss and misses on multiple lines to be serviced at the same time. The inline code calls a batch miss handler that issues all the necessary miss requests. Non-stalling stores are implemented by requiring the handler to wait only for outstanding read and read-exclusive replies and not for invalidation acknowledgments.

Exploitation of Relaxed Memory Models

This protocol emulates the behavior of a processor with non-blocking loads and stores and a lockup-free cache, thus exploiting release consistency. Non-blocking stores are supported by issuing a read-exclusive or exclusive request, recording where the stre occured, and continuing. This information allows the protocol to appropriately merge the reply data with the newly written data that is already in memory. This protocol also exhibits a limited form of non-blocking load behavior

due to the batching optimization, since batching can lead to multiple outstanding loads . Lockup-free behavior for lines that are in a pending state is also supported by allowing writes to a pending line to proceed by storing the newly written data into memory and recording the location of the stores in the miss handler invoked due to the pending state.

Detection of Migratory Sharing Patterns

The Shasta protocol provides a sophisticated mechanism for detecting data that is shared in a migratory fashion and optimizing accesses to such data. Migratory sharing occurs when data is read and modified by different processors, leading to the migration of the data from one processor to another. By keeping extra information at each directory entry, the protocol detects whether the data in each line exhibits migratory behavior. A line is designated for migratory conversion after the migratory sharing pattern is successfully observed for a threshold number of times. A read request to a line that is designated for migratory conversion is automatically converted to a read-exclusive request at the directory. This conversion avoids the load miss followed by a store miss to the same line that is typical for migratory shared data. The protocol provides a mechanism to revert a line from migratory conversion. The reply data for a converted read request is cached with a special caching state , which is called exclusive migratory. Operations by the owner processor treat the line as exclusive, and a subsequent store by that processor changes the line to the ordinary exclusive state. The protocol detects a break in the migratory behavior if an incoming request from another processor arrives before the owner processor writes to the line ,i.e., while line is still in exclusive-migratory state. In this case, a message is sent to the home directory to nullify or revert the migratory conversion for that line. The line may subsequently be designated for migratory conversion if migratory behavior is observed again.

Prefetch and Home Placement Directives

The Shasta protocol allows the application to explicitly specify the home processor for individual pages instead of relying on the default round-robin allocation. The protocol also supports non-binding prefetch and prefetch-exclusive directives. The Shasta system can optionally supply information on source code lines that suffer the most number of remote misses by keeping extra state within the protocol. The programmer can use this information to identify places where prefetching may be helpful.

References

<references />