Chapter 4a: Brandon Chisholm, Chris Barile

Automatic parallelism is the process of automatically converting sequential code into code that makes use of multiple processors. One main reason for implementing automatic parallelism is to save time and energy compared to converting the code manually.<ref name="wiki" /> Several techniques have been created for parallelizing code, but each has limitations.

Techniques

Profile-Driven Parallelism

This technique involves running the program while recording each memory access. The recorded accesses are then analyzed to determine which code can be parallelized. The technique is simple, but a profiling run does not cover all possible input combinations. This may lead to false positives, in which code segments are labelled as parallelizable when they actually are not.
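The false-positive risk described above can be illustrated with a small sketch. It is not taken from any particular profiling tool: the loop body `a[dst[i]] = a[src[i]] + 1`, the helper names `trace_loop` and `looks_parallel`, and both inputs are hypothetical, chosen only to show how a verdict based on one profiled input can fail on another.

```python
def trace_loop(src, dst):
    """Record the (reads, writes) index sets touched by each loop iteration.

    The hypothetical loop body being profiled is: a[dst[i]] = a[src[i]] + 1
    """
    trace = []
    for i in range(len(src)):
        trace.append(({src[i]}, {dst[i]}))
    return trace


def looks_parallel(trace):
    """Return True if no two iterations in this trace conflict on a location."""
    for i, (reads_i, writes_i) in enumerate(trace):
        for reads_j, writes_j in trace[i + 1:]:
            # Conflict: an earlier write meets a later read or write,
            # or an earlier read meets a later write.
            if writes_i & (reads_j | writes_j) or reads_i & writes_j:
                return False
    return True


# Input used for the profiling run: every iteration touches only its own element.
profiled_input = ([0, 1, 2, 3], [0, 1, 2, 3])
# Input never seen by the profiler: iteration 1 reads what iteration 0 wrote.
other_input = ([0, 0, 1, 2], [0, 1, 2, 3])

profile_verdict = looks_parallel(trace_loop(*profiled_input))
actual_verdict = looks_parallel(trace_loop(*other_input))

print("verdict from profiled input:", profile_verdict)
print("verdict on unseen input:", actual_verdict)
```

A profiler that only ever ran the first input would label the loop parallelizable, even though the second input introduces a dependence between iterations. The pairwise comparison is quadratic in the number of iterations, which is acceptable for a sketch but not for a real trace.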

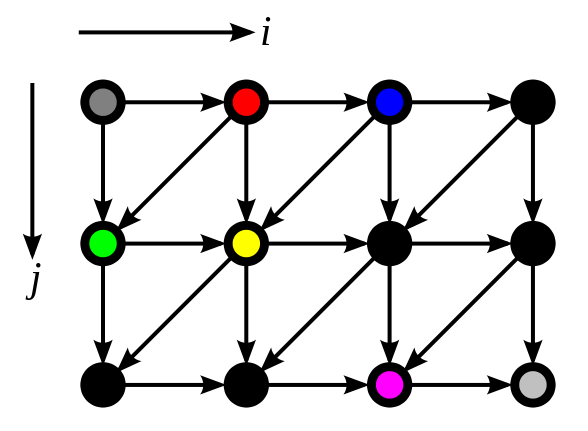

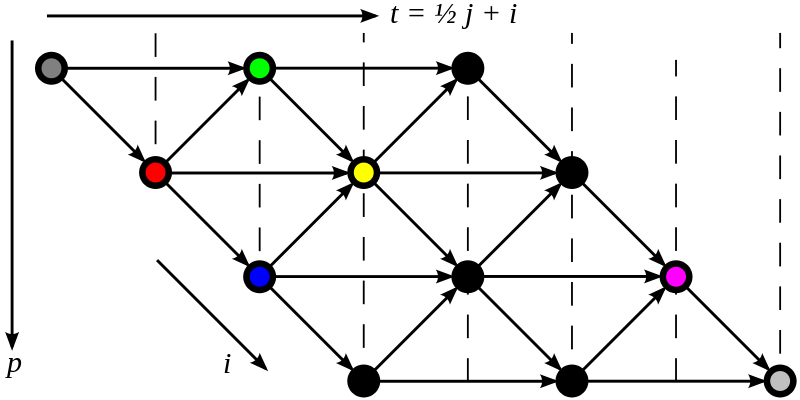

Polyhedral Transformation

Automatic Program Exploration

Scalar and Array Analysis

Commutativity Analysis

Low Level Virtual Machine

Limitations

One of the major limitations of automatic parallelization is that a computer lacks the insight into the overall intention of a program that a human would have. The programmer understands what the program must do, and can use that understanding to determine whether there are alternative approaches or algorithms for parallelizing the code. Even when parallelizing manually, a programmer may not have enough insight into parallel programming, and may need the assistance of an expert to improve code performance.<ref name="ncsa" />

References

<references> <ref name="chia">http://www.eecs.berkeley.edu/%7Echiayuan/cs262a/cs262a_parallel.pdf</ref> <ref name="dipa">http://www.csc.villanova.edu/%7Etway/publications/DiPasquale_Masplas05_Paper5.pdf</ref> <ref name="wiki">http://en.wikipedia.org/wiki/Automatic_parallelization</ref> <ref name="ncsa">http://www.ncsa.illinois.edu/extremeideas/site/on_the_limits_of_automatic_parallelization</ref> </references>