CSC/ECE 506 Fall 2007/wiki2 4 md

Parallel Application: Shuffled Complex Evolution Metropolis

Overview

The scope of the environmental sciences grows significantly each year. As computer systems advance, more intricate (and thus more realistic) models are developed, and the number of parameters that must be estimated grows with them. The Shuffled Complex Evolution Metropolis (SCEM-UA) algorithm is a global optimization algorithm used to estimate the parameters of environmental models. It has been implemented in parallel, in a user-friendly way, to reduce computation time further. The algorithm can be applied to several model case studies, such as the prediction of migratory bird flight paths.

Steps of Parallelization

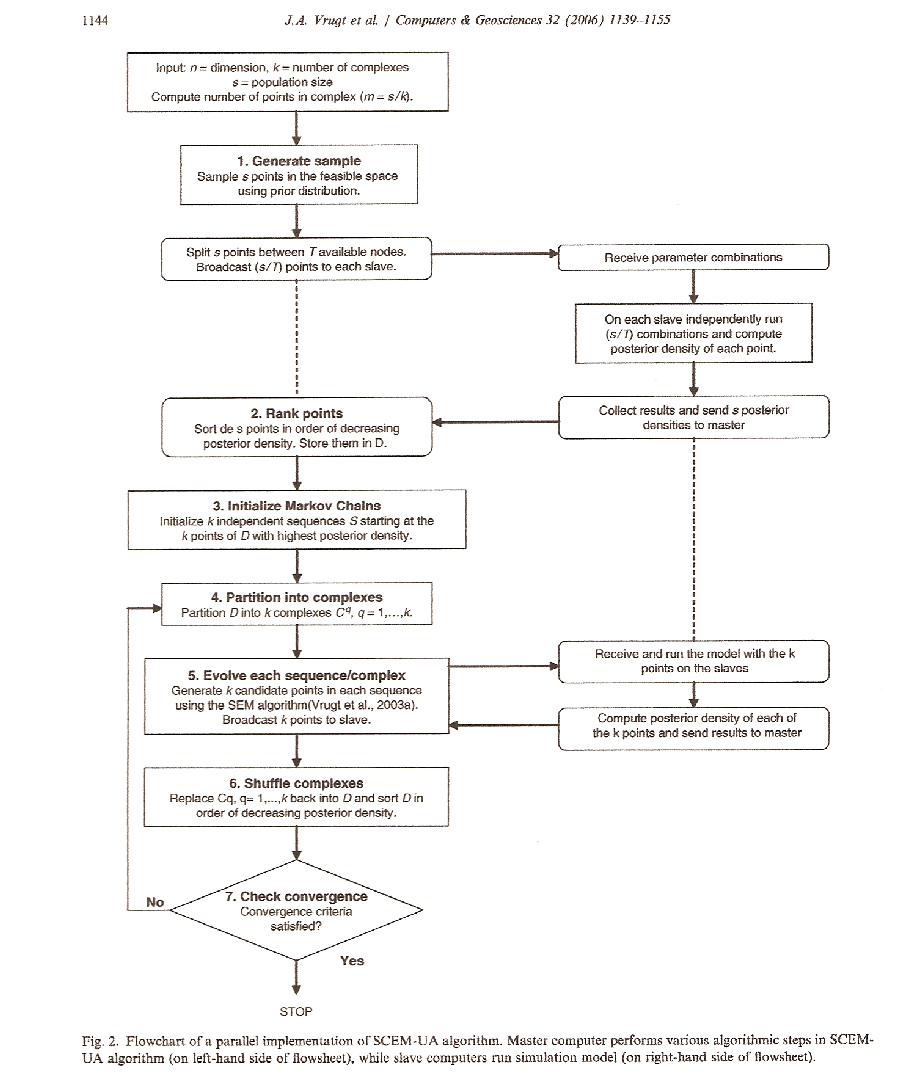

Flow Chart Overview of Parallelization

Decomposition

Decomposition is the division of a computation into tasks. The SCEM-UA algorithm takes a problem dimension (n), a number of complexes (k), and a population size (s). The number of points in each complex, m, is then calculated using the formula:

m = s/k

Assignment

Once the computation has been decomposed, the population is assigned to complexes. The s points of D are partitioned into k complexes {C1 ... Ck}, each containing m points. The first complex contains the points at positions k(j-1)+1 of D, the second those at positions k(j-1)+2, and so on (where j = 1, ..., m).
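The partitioning rule above can be illustrated with a minimal Python sketch. The function name `partition_into_complexes` and the integer labels standing in for the points of D are assumptions made for illustration only; they are not part of the SCEM-UA implementation.

```python
def partition_into_complexes(points, k):
    """Split the s points of D into k complexes of m = s/k points each.

    Hypothetical helper: complex i (1-based) receives the points at
    positions k*(j-1) + i of D, for j = 1, ..., m.
    """
    s = len(points)
    if k <= 0 or s % k != 0:
        raise ValueError("population size s must be a positive multiple of k")
    # With 0-based indexing, complex i takes every k-th point starting at i.
    return [points[i::k] for i in range(k)]


# Placeholder population: the labels 1..12 mark the position of each point in D.
D = list(range(1, 13))          # s = 12
complexes = partition_into_complexes(D, k=3)
m = len(D) // 3                 # m = s/k = 4

for index, complex_points in enumerate(complexes, start=1):
    print(f"C{index}: {complex_points}")
```

With s = 12 and k = 3, each complex holds m = 4 points, and the first complex holds positions 1, 4, 7 and 10, which matches k(j-1)+1 for j = 1, ..., 4.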

Orchestration

In the orchestration stage of parallelization, processes take the necessary actions of data access, communication and synchronization. In this example,

Mapping

In the mapping of the SCEM-UA algorithm, it is vital that each processor has a different data set on which to work, so that no communication is needed between the nodes. Having each node work on its own data without communication allows for large efficiency gains, because the nodes are not forced to execute one after another. Instead, the majority of computation time is allotted to running model code and generating the desired output.
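As a rough sketch of this mapping, the Python example below hands each complex to its own worker, with no data shared between workers. It rests on several assumptions: `evolve_complex` is a hypothetical placeholder for the per-complex model runs, `run_complexes` is an invented helper name, and threads stand in for the separate processors or nodes that a cluster would use.

```python
from concurrent.futures import ThreadPoolExecutor


def evolve_complex(complex_points):
    """Hypothetical stand-in for running the model on one complex.

    It only reads its own points, so workers never need to communicate.
    """
    return [p * p for p in complex_points]


def run_complexes(complexes, workers):
    """Map each complex to a worker and collect results in complex order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(evolve_complex, complexes))


# Placeholder data: three complexes of four points each.
complexes = [[1, 4, 7, 10], [2, 5, 8, 11], [3, 6, 9, 12]]
results = run_complexes(complexes, workers=3)
```

Because each worker receives a disjoint set of points, the only coordination in this sketch is collecting the results at the end.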