CSC/ECE 506 Spring 2011/ch7 ss

Though the migration from uniprocessor systems to multiprocessor systems is not new, the world of parallel computers is undergoing continuous change. Parallel computers, which started as high-end supercomputing systems for carrying out huge calculations, are now ubiquitous and are present in all mainstream architectures for servers, desktops, and embedded systems. Designing parallel architectures that meet programmers' needs and expectations more closely poses exciting and challenging problems. The three main areas being studied today are cache coherence, memory consistency, and synchronization.

This article discusses these three issues and how they can be solved efficiently to meet programmers' requirements. A related topic, TLB coherence, is also dealt with. The wiki supplement also addresses the challenges that Peterson's algorithm demonstrates.

Cache Coherence

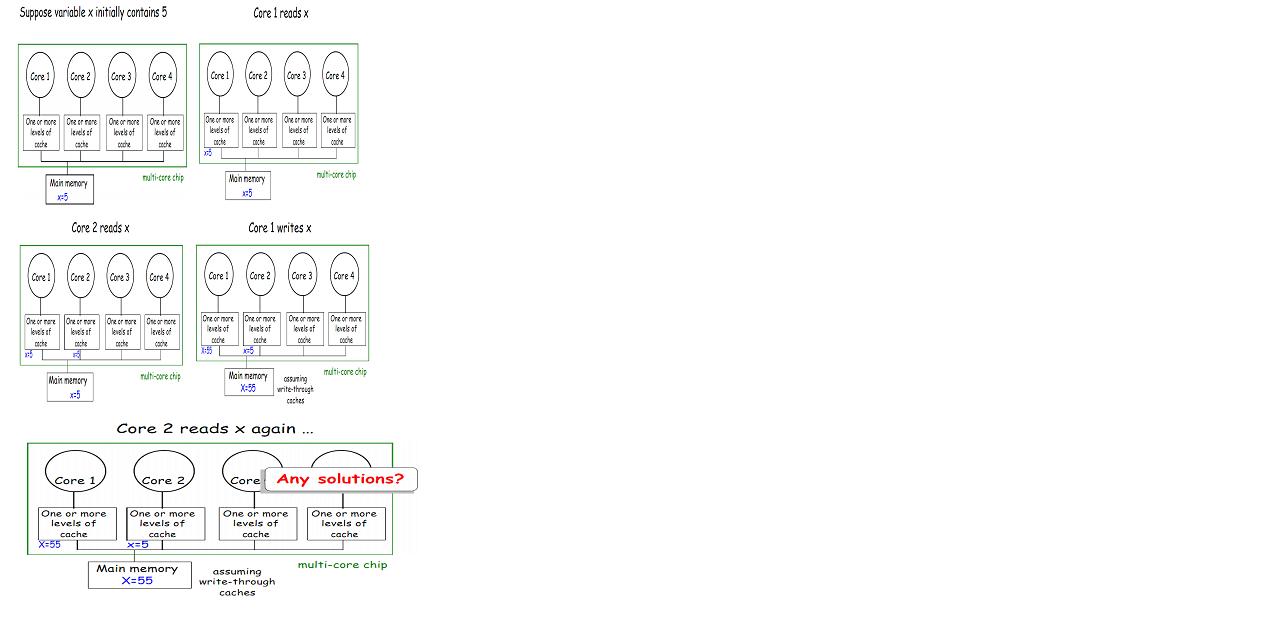

Here, by cache, we mean CPU cache: the small memories on or close to the CPU that can operate faster than the much larger main memory. Cache coherence refers to the consistency of data stored in local caches of a shared resource. The following scenario shows the problems arising from inconsistent data when clients maintain caches of a common memory resource:

Thus, as evident from the above example, multiple copies of a block can easily become inconsistent. Since caches are critical to modern high-speed processors, this inconsistency must be managed. The following section discusses the different solutions used to solve this problem.
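The inconsistency described above can be reproduced with a small toy model. This is only a sketch under stated assumptions: two clients with private caches, a write-through policy, and no coherence mechanism at all. The `Cache` class and the variable name `x` are hypothetical and chosen purely for illustration.

```python
# Toy model: two clients with private caches and NO coherence mechanism.
memory = {"x": 0}  # shared main memory (hypothetical single variable)


class Cache:
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}  # address -> locally cached value

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch from main memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]              # hit: return the local copy

    def write(self, addr, value):
        self.lines[addr] = value             # update the local copy
        self.memory[addr] = value            # assumption: write-through


cache_a = Cache(memory)
cache_b = Cache(memory)

first_read_b = cache_b.read("x")   # B caches x = 0
cache_a.write("x", 42)             # A updates x; B is never notified
stale_read_b = cache_b.read("x")   # B hits on its old copy

print(first_read_b, memory["x"], stale_read_b)  # prints: 0 42 0
```

Main memory holds the new value, yet client B keeps reading the old one because nothing tells its cache that the copy is out of date.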

Cache Coherence Solutions

Cache coherence solutions are mainly classified as software-based or hardware-based.

Software-based solutions are further classified as:

- Compiler-based or with run-time system support

- With or without hardware assist

Hardware-based solutions can be differentiated as:

- Shared caches, snoopy schemes, or directory-based schemes

- Write-through vs write-back protocols

- Update vs invalidation protocols

- Dirty-sharing vs. no-dirty-sharing protocols

The main concern with software-based solutions is that they need perfect information at all times when memory aliasing and explicit parallelism are involved. As a result, the focus is more on improving hardware-based solutions, which are also more common. Studies have shown that snoop-based cache coherency schemes are more sensitive to the write policy than to the specific coherency protocol. Write-back schemes are more efficient than write-through schemes despite the increased hardware complexity involved in cache-coherency support. [1]
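One way to build intuition for why the write policy matters is to count main-memory writes under each policy. The function below is a simplified sketch, not the evaluation method used in [1]: it assumes a single core storing repeatedly to one cached block that is evicted once at the end, and the name `memory_writes` is hypothetical.

```python
def memory_writes(policy, num_stores):
    """Count main-memory writes for num_stores stores to one cached block,
    followed by a single eviction of that block."""
    writes = 0
    dirty = False
    for _ in range(num_stores):
        if policy == "write-through":
            writes += 1        # every store is propagated to memory
        elif policy == "write-back":
            dirty = True       # store stays in the cache; block marked dirty
        else:
            raise ValueError(f"unknown policy: {policy}")
    if dirty:
        writes += 1            # dirty block is written back once on eviction
    return writes


wt = memory_writes("write-through", 100)
wb = memory_writes("write-back", 100)
print(wt, wb)  # prints: 100 1
```

The model ignores coherence traffic entirely, so it only illustrates the memory-write side of the tradeoff.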

Hardware-based cache-coherence protocols, though they perform better than basic architectures with no hardware support, incur a significant power cost as coherence traffic grows. Thus, as power constraints become tighter and the degree of multiprocessing increases, the viability of hardware-based solutions becomes doubtful.

Cache Coherence Protocols

The two basic methods to utilize the inter-core bus to notify other cores when a core changes something in its cache are update and invalidate. In the update method, if variable 'x' is modified by core 1, core 1 has to send the updated value of 'x' onto the inter-core bus. Each cache listens to the inter-core bus and if a cache sees a variable on the bus which it has a copy of, it will read the updated value. This ensures that all caches have the most up-to-date value of the variable. [2]
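The update method described above can be sketched as follows. This is a minimal illustration, not a real protocol implementation: the `Bus` and `UpdateCache` classes, the `load` helper, and the variable name `x` are all hypothetical, and the bus is modeled as a simple list of attached caches.

```python
class Bus:
    """Stand-in for the inter-core bus: forwards updates to every other cache."""

    def __init__(self):
        self.caches = []
        self.messages = 0   # number of update messages placed on the bus

    def broadcast_update(self, sender, name, value):
        self.messages += 1
        for cache in self.caches:
            if cache is not sender:
                cache.snoop_update(name, value)


class UpdateCache:
    def __init__(self, bus):
        self.lines = {}     # variable name -> cached value
        self.bus = bus
        bus.caches.append(self)

    def load(self, name, value):
        self.lines[name] = value   # hypothetical helper: place a copy in the cache

    def write(self, name, value):
        self.lines[name] = value
        self.bus.broadcast_update(self, name, value)  # send the new value on the bus

    def snoop_update(self, name, value):
        if name in self.lines:     # only caches holding a copy take the new value
            self.lines[name] = value


bus = Bus()
core1, core2, core3 = UpdateCache(bus), UpdateCache(bus), UpdateCache(bus)
core1.load("x", 0)
core2.load("x", 0)        # core3 holds no copy of x
core1.write("x", 7)
print(core2.lines, core3.lines)  # prints: {'x': 7} {}
```

A cache that does not hold the variable simply ignores the bus message, which matches the snooping behavior described in the text.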

In the invalidation method, an invalidation message is sent onto the inter-core bus when a variable is changed. Each of the other caches reads this invalidation signal, and if its core later attempts to access that variable, the access results in a cache miss and the variable is read from main memory.
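The invalidation behavior can be sketched in the same toy style. The `InvalidateCache` class is hypothetical, a shared Python list stands in for the inter-core bus, and a write-through policy is assumed so that main memory always holds the latest value when an invalidated copy is re-read.

```python
class InvalidateCache:
    def __init__(self, memory, peers):
        self.memory = memory
        self.lines = {}       # variable name -> cached value
        self.misses = 0
        self.peers = peers    # shared list standing in for the inter-core bus
        peers.append(self)

    def read(self, name):
        if name not in self.lines:      # miss: copy is absent or was invalidated
            self.misses += 1
            self.lines[name] = self.memory[name]
        return self.lines[name]

    def write(self, name, value):
        self.lines[name] = value
        self.memory[name] = value       # assumption: write-through
        for peer in self.peers:
            if peer is not self:
                peer.lines.pop(name, None)   # invalidate any remote copy


memory = {"x": 0}
peers = []
core1 = InvalidateCache(memory, peers)
core2 = InvalidateCache(memory, peers)

core1.read("x")
core2.read("x")               # both cores now hold x = 0
core1.write("x", 5)           # core2's copy is invalidated
value = core2.read("x")       # miss, so the value is re-read from main memory
print(value, core2.misses)    # prints: 5 2
```

The second miss on `core2` is the cost of invalidation: the stale copy is discarded rather than refreshed, so the next access has to go back to memory.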

The update method results in a significant amount of traffic on the inter-core bus, because an update signal is sent onto the bus every time the variable is written. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered, which is why invalidation is the preferred method.
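The traffic difference can be made concrete by counting bus messages. The sketch below assumes one core writes a shared variable repeatedly while no other core reads it in between; under other access patterns the comparison can look different. The function name `bus_messages` is hypothetical.

```python
def bus_messages(protocol, num_writes):
    """Bus messages generated when one core writes a shared variable
    num_writes times and no other core touches it in between."""
    others_hold_copy = True
    messages = 0
    for _ in range(num_writes):
        if protocol == "update":
            messages += 1                  # new value is broadcast on every write
        elif protocol == "invalidate":
            if others_hold_copy:
                messages += 1              # a single invalidation is sent...
                others_hold_copy = False   # ...after which no remote copies remain
        else:
            raise ValueError(f"unknown protocol: {protocol}")
    return messages


update_traffic = bus_messages("update", 10)
invalidate_traffic = bus_messages("invalidate", 10)
print(update_traffic, invalidate_traffic)  # prints: 10 1
```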

References

[1] Loghi, Mirko, Massimo Poncino, and Luca Benini. "Cache Coherence Tradeoffs in Shared-memory MPSoCs." ACM Digital Library. Web. 18 Mar. 2011. <http://portal.acm.org/citation.cfm?id=1151081>.

[2] http://www.windowsnetworking.com/articles_tutorials/Cache-Coherency.html