CSC/ECE 517 Spring 2025 - E2536. Improve assessment360 controller

Problem Overview

The Assessment360 controller is a crucial component of the Expertiza platform that provides instructors with a comprehensive view of student performance through multiple assessment perspectives. Currently, it offers two main views:

- all_students_all_reviews: Shows teammate and meta-review scores for each student

- course_student_grade_summary: Displays project grades and peer review scores

Objective

The primary goal is to enhance the UI/UX and code quality of the assessment360 controller. This involves:

- Merging the two existing views (all_students_all_reviews and course_student_grade_summary) into a single unified view.

- Improving the presentation and clarity of data (student grades, peer reviews).

- Refactoring logic for better separation of concerns and maintainability.

- Implementing feedback from the previous team.

Current Implementation Summary

The assessment360 controller exposes two main methods:

- all_students_all_reviews: Displays peer review scores per student.

- course_student_grade_summary: Displays projects and grades for each student.

Limitations noted in previous feedback:

- Separate views instead of a unified dashboard.

- Excessive decimal precision in grade averages.

- Some layout/design issues (e.g., narrow name column).

- Business logic within controllers (should be moved to mixins).

- Sparse code comments.

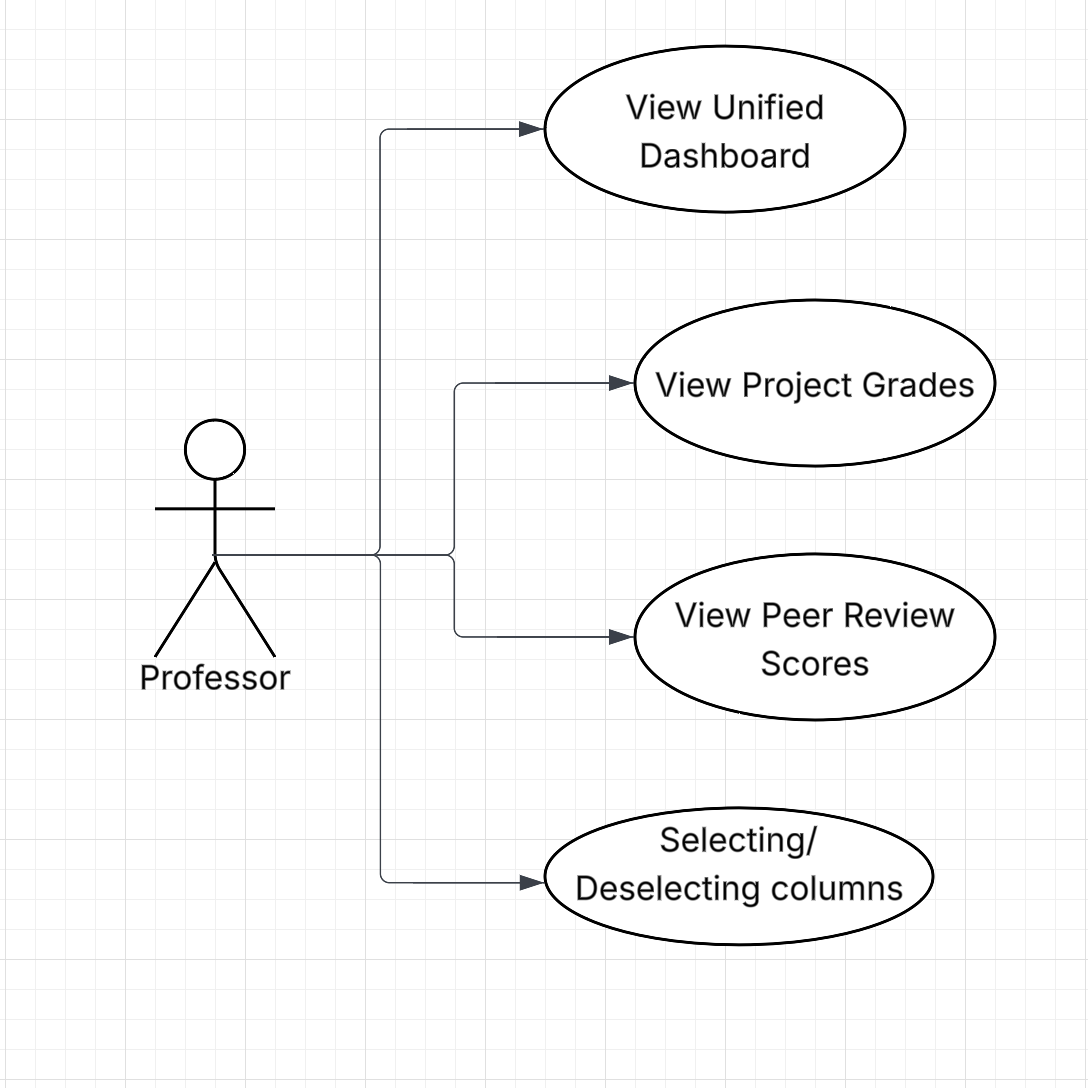

Use Case Diagram

For this project, our job is to merge the two views created in the previous implementation, all_students_all_reviews and course_student_grade_summary, into one. The resulting single view combines several assessment perspectives, so the user can see all of the available information on one page. This includes:

- Viewing a Unified Dashboard

- Viewing all the Project Grades

- Viewing Peer Evaluation Scores

- Selecting and Deselecting Columns

Using this information we have created the following Use Case Diagram:

Design Pattern Usage in Assessment360Controller

The Assessment360Controller implements multiple design patterns that improve clarity, modularity, and maintainability of the code.

1. Facade Pattern

The controller acts as a facade, hiding complex interactions between various models (Course, Assignment, Participant, Team, etc.) and exposing a simplified interface for the view. It aggregates data like grades, reviews, and penalties and prepares it in a structured format, simplifying the presentation layer.
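The facade idea described above can be sketched in plain Ruby. This is a minimal illustration, not the controller's actual code: `Student` and `StudentSummaryFacade` are hypothetical stand-ins for the real models and controller logic, and the sample scores are made up.

```ruby
# Hypothetical stand-in for the real ActiveRecord models.
Student = Struct.new(:name, :grades, :review_scores)

# Facade: a single entry point that hides how each piece of data is gathered.
class StudentSummaryFacade
  def initialize(students)
    @students = students
  end

  # Returns one flat hash per student, ready for a view to render.
  def rows
    @students.map do |student|
      {
        name: student.name,
        project_grades: student.grades,
        review_scores: student.review_scores
      }
    end
  end
end

# Illustrative data only.
students = [
  Student.new("Alice", { "Program 1" => 92.5 }, { teammate: 4.5, meta: 4.0 })
]
rows = StudentSummaryFacade.new(students).rows
```

The view only needs to iterate over `rows`; it does not need to know which models supplied each value.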

2. Strategy Pattern

The use of helper modules such as:

- GradesHelper

- AuthorizationHelper

- Scoring

- PenaltyHelper

follows the Strategy Pattern. Each module encapsulates a specific piece of functionality, and the controller delegates responsibility to these interchangeable strategies, promoting clean separation of concerns and easier testing.

3. Template Method Pattern

The method action_allowed? is a hook method that overrides a predefined template method in ApplicationController. This allows customized access control behavior while maintaining a consistent interface across all controllers — an example of the Template Method Pattern in action.
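A minimal sketch of this hook arrangement in plain Ruby, outside of Rails, might look as follows. `BaseController`, `authorize`, `Assessment360Sketch`, and the role names are assumptions made for illustration; only the method name action_allowed? comes from the description above.

```ruby
# Hypothetical base class standing in for ApplicationController.
class BaseController
  # Template method: the fixed authorization flow shared by every controller.
  def authorize(user)
    return :forbidden unless action_allowed?(user)

    :ok
  end

  # Hook: subclasses override this. The default denies access.
  def action_allowed?(_user)
    false
  end
end

# Hypothetical subclass standing in for the assessment360 controller.
class Assessment360Sketch < BaseController
  ALLOWED_ROLES = %i[instructor teaching_assistant].freeze # assumed roles

  # Overrides the hook with controller-specific access rules.
  def action_allowed?(user)
    ALLOWED_ROLES.include?(user[:role])
  end
end

instructor_result = Assessment360Sketch.new.authorize({ role: :instructor })
student_result = Assessment360Sketch.new.authorize({ role: :student })
```

The base class owns the flow and each controller supplies only the access decision, which is what keeps the interface consistent.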

4. Separation of Concerns

Responsibility is split between:

- Controller: Orchestrates data flow

- Models: Store and retrieve business data

- Helpers: Encapsulate computation and utility logic

- Views: Render formatted output

This adheres to the Separation of Concerns principle and keeps each layer clean and testable.

These design patterns contribute to the robustness and scalability of the 360-degree assessment feature, allowing future teams to maintain and extend it with ease.

Planned Enhancements

Functional Changes

| Feature | Description |
|---|---|
| Unified View | Combine both views into a single HTML page that includes both project grades and review scores. |
| Column Selection | Allow users to toggle visible columns using checkboxes. |
| Exclude Unreviewed Assignments | Retain the functionality to exclude unreviewed assignments from averages. |
| Averages Precision | Round averages to two decimal places for clarity. |
| Wider Name Column | Adjust layout to prioritize readability (e.g., make the name column wider than the username column). |
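One way the column selection could be handled on the server side is sketched below, assuming the checkbox values arrive as a list of column names. The column keys, `ALWAYS_VISIBLE`, `visible_columns`, and `filter_row` are all hypothetical names chosen for this example; the actual view may toggle columns in the browser instead.

```ruby
# Hypothetical column keys; the real view may name them differently.
ALL_COLUMNS = %i[name username project_grade teammate_review meta_review].freeze
ALWAYS_VISIBLE = %i[name].freeze

# Returns the columns to render, in canonical order.
# Unknown names in the selection are ignored.
def visible_columns(selected)
  requested = Array(selected).map(&:to_sym)
  ALL_COLUMNS.select do |col|
    ALWAYS_VISIBLE.include?(col) || requested.include?(col)
  end
end

# Keeps only the visible columns of one table row.
def filter_row(row, columns)
  row.slice(*columns)
end

columns = visible_columns(%w[meta_review project_grade])
row = { name: "Alice", username: "alice1", project_grade: 92.5,
        teammate_review: 4.5, meta_review: 4.0 }
filtered = filter_row(row, columns)
```

Filtering against a fixed list keeps the column order stable regardless of the order in which the checkboxes were ticked.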

Code Refactor

| Task | Reason |
|---|---|
| Move averaging logic to mixins or model concerns | Improve separation of concerns and reusability. |
| Commenting | Add meaningful comments for helper methods and business logic. |
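As a rough sketch of what moving the averaging logic into a mixin could look like, the module below averages a list of scores, skips unreviewed entries, and rounds to two decimal places. The names `ScoreAveraging`, `average_score`, and `StudentRow` are hypothetical, and representing an unreviewed assignment as `nil` is an assumption of this example.

```ruby
# Hypothetical mixin; module and method names are illustrative.
module ScoreAveraging
  PRECISION = 2 # decimal places shown in the view

  # Averages the given scores, skipping nil entries (assumed to mean
  # "not reviewed"). Returns nil when nothing has been scored, so the
  # view can show a placeholder instead of 0.
  def average_score(scores)
    graded = scores.compact
    return nil if graded.empty?

    (graded.sum.to_f / graded.size).round(PRECISION)
  end
end

# Any class that needs averages can include the mixin.
class StudentRow
  include ScoreAveraging
end

average = StudentRow.new.average_score([90, nil, 85.5])
```

Because the logic lives in a module, it can be unit tested without instantiating a controller.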

UI/UX Design

The new unified view will feature:

- A tabular layout showing all relevant data.

- Sectioned headers for each student.

- Collapsible panels (if needed) for clarity.

- Visual cues for peer review vs. project grades.

- Responsive design for usability across devices.

Testing Strategy

| Type | Description | Tests |
|---|---|---|
| Unit Tests | Test individual controller methods | Test grade calculations; test data formatting |
| Integration Tests | Test interactions between components | Test view rendering; test database queries; test caching functionality |
| Manual Testing | Test UI functionality, performance, and error handling | Test UI functionality; test performance with large datasets; test error scenarios |

Success Criteria

The success of this feature implementation will be evaluated based on the following criteria:

| Criterion | Description |
|---|---|
| UI Enhancements Implemented and Working | All proposed UI enhancements, including the unified view, column management features, and data presentation improvements, must be fully implemented and functioning as expected. The UI should be intuitive, responsive, and meet the design goals outlined in the document. |
| Performance Improvements Measurable | The feature should demonstrate a measurable improvement in performance, particularly in terms of load times and responsiveness when rendering large datasets or performing grade calculations. Performance benchmarking should indicate a noticeable reduction in latency. |
| Test Coverage > 80% | Comprehensive unit and integration tests must cover more than 80% of the new code and critical paths in the controller. This ensures that the feature is reliable, and potential issues are identified early in the development cycle. Test results should be stable, with no significant failures. |
| Code Documentation Complete | All new and modified code must be thoroughly documented. This includes clear inline comments, explanations for complex logic, and appropriate documentation for new functions, classes, and components. This ensures that future developers can understand and maintain the code efficiently. |
| No Regression Bugs | After implementing the new feature, no existing functionality in the Assessment360 controller should break or behave unexpectedly. Regression testing should show that previously working parts of the system continue to function without issues. |
| Positive User Feedback | End-users (instructors and administrators) must provide positive feedback about the usability and effectiveness of the feature. Feedback should indicate that the feature adds value, improves user experience, and meets the goals outlined in the design document. |

Team Information

Mentor: Mitesh Anil Agarwal

Team:

- Jap Ashokbhai Purohit - jpurohi@ncsu.edu

- Sharmeen Momin - smomin@ncsu.edu

- Prithish Samanta - psamant2@ncsu.edu