CSC/ECE 517 Spring 2021 - E2109. Completion/Progress view

Problem Statement

Expertiza allows users to complete peer reviews of their fellow students' work. However, not all peer reviews are equally helpful, so the application lets a project's authors give feedback on the reviews they receive; this is called "author feedback." Instructors currently have no easy way to see author feedback while grading peer reviews. That would be a useful feature, because author feedback shows how helpful a review actually was to the team that received it. The aim of this project is therefore to make author feedback more accessible. A group in 2018 was given the same project, and most of the functionality appears to have been implemented already, but against an older version of Expertiza. Our primary task is then to follow their implementation, refactor code where necessary, make the improvements suggested in their project feedback, and make the feature compatible with the latest beta branch of Expertiza.

Goal

- Our primary goal is to take the author feedback column functionality from the 2018 branch and update it so that it merges successfully with the current beta branch.

- The 2018 branch adds an author feedback column to the review report, and the previous team's submission included new code to calculate the average author feedback score. However, this calculation most likely already exists elsewhere in the system, so we will locate the existing functionality and refactor the code to reuse it, following the DRY principle.

- Part of the feedback on the 2018 group's work noted that the review report UI had started to look crowded. Since there is often no author feedback to show, the column should be toggleable. We will make the column dynamic so that it is only present when there is author feedback to display; the user can then show or hide it according to their own preference.
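A minimal sketch of the visibility rule described in the last goal, in plain Ruby. `ReviewRow`, `author_feedback_available?`, and `show_author_feedback_column?` are hypothetical names chosen for illustration; they are not existing Expertiza methods, and in the real application such a check could live in a view helper used by the review report.

```ruby
# Hypothetical row type: one line of the review report.
ReviewRow = Struct.new(:reviewer, :avg_score, :author_feedback_score)

# The column only has something to show if at least one row has a feedback score.
def author_feedback_available?(rows)
  rows.any? { |row| !row.author_feedback_score.nil? }
end

# The user's toggle can hide the column, but an empty column is never shown.
def show_author_feedback_column?(rows, user_wants_column: true)
  user_wants_column && author_feedback_available?(rows)
end

rows = [
  ReviewRow.new("student1", 85.0, nil),
  ReviewRow.new("student2", 90.0, 4.5)
]

puts show_author_feedback_column?(rows)                                   # => true
puts show_author_feedback_column?(rows, user_wants_column: false)         # => false
puts show_author_feedback_column?([ReviewRow.new("student3", 70.0, nil)]) # => false
```

Keeping the two conditions separate means the toggle only ever hides a column that has data; when no author feedback exists, the column stays hidden regardless of the toggle.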

Design

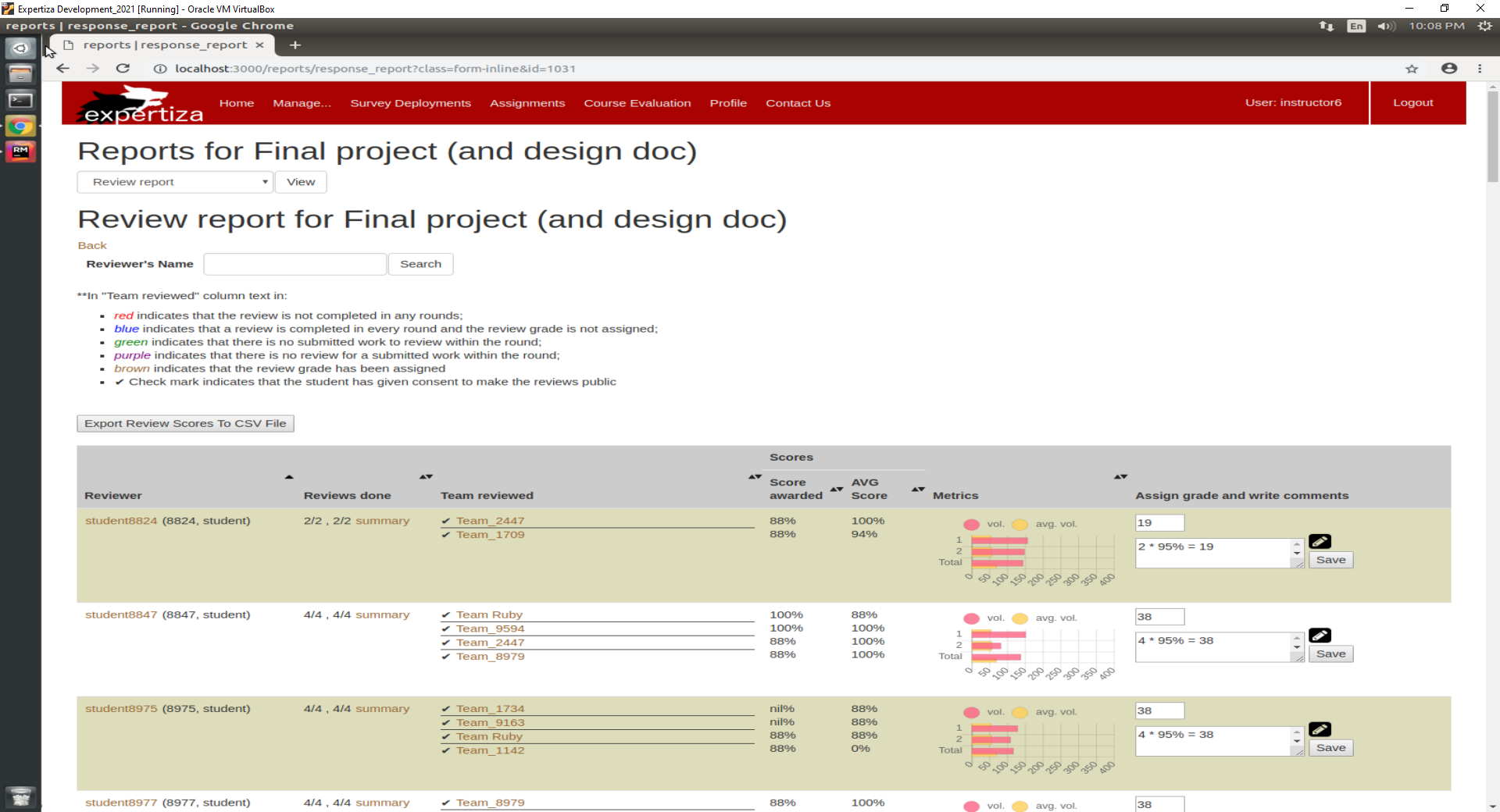

The image above shows the current implementation, on the beta branch, of the page we are going to edit. Note that the "author feedback" column is not present in this view at all.

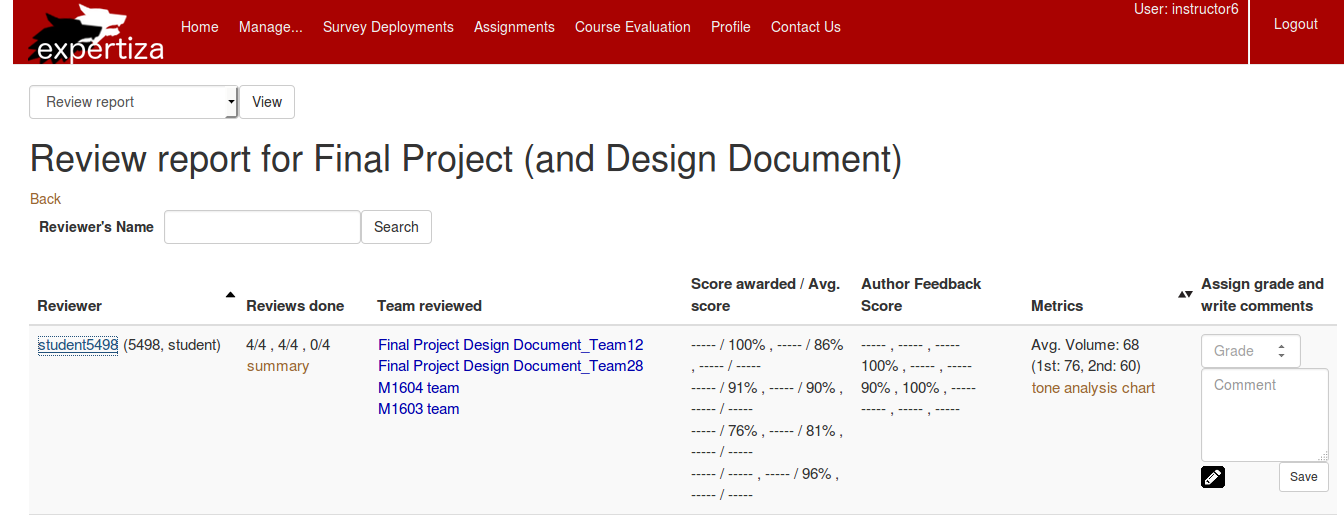

Here is the 2018 group's version of the same page. It has the "author feedback" column as well as the necessary functionality. However, the user interface has clearly changed since 2018, so there will likely be merge conflicts between their branch and the current beta branch. Part of our task is therefore to resolve those merge conflicts and port the functionality to the most recent beta branch. (Sourced from this link)

In the 2018 group's branch, the "Score awarded/Avg. score" and "Author Feedback Score" columns contain many missing entries, shown as dashes. This does not appear to happen in the most recent beta branch available in 2021, which seems to filter such entries out. We will therefore apply the beta branch's filter (i.e., the code that removes the missing entries represented by the dashes) to the 2018 group's code.
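The filter could look roughly like the following sketch, assuming each report row is a hash whose missing scores are `nil` and are rendered as a dash. The row layout and the `score_cell` and `rows_with_scores` helpers are hypothetical; the actual beta-branch filter may behave differently (for example, it might drop a row as soon as either score is missing, rather than only when both are).

```ruby
MISSING = "-" # how a missing score is displayed in the report

# Render one score cell, using a dash when the score is missing.
def score_cell(score)
  score.nil? ? MISSING : format("%.2f", score)
end

# Drop rows that have neither an awarded score nor an author feedback score.
def rows_with_scores(rows)
  rows.reject { |row| row[:awarded].nil? && row[:author_feedback].nil? }
end

rows = [
  { team: "Team A", awarded: 88.5, author_feedback: 4.0 },
  { team: "Team B", awarded: nil,  author_feedback: nil },
  { team: "Team C", awarded: 92.0, author_feedback: nil }
]

rows_with_scores(rows).each do |row|
  puts [row[:team], score_cell(row[:awarded]), score_cell(row[:author_feedback])].join(" | ")
end
# Team A | 88.50 | 4.00
# Team C | 92.00 | -
```

Under this interpretation, "Team B" disappears entirely, while "Team C" is kept because it still has an awarded score.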

In addition, the 2018 group's branch places author feedback in its own column between "AVG Score" and "Metrics". Instead, we will move it under the "Scores" header, as in the current beta branch, just to the right of the "AVG Score" column. To accommodate this additional column, the "Team reviewed" column will be made slightly narrower so that the "Scores" group renders at approximately the same location on the page.
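The width adjustment is simple arithmetic: whatever width the new column takes is subtracted from "Team reviewed", so the total table width does not change. The sketch below assumes percentage widths; the column names and numbers are made up for illustration and are not taken from _review_report.html.erb, and `add_column` and `offset_of` are hypothetical helpers.

```ruby
# Hypothetical column widths, in percent of the table width.
WIDTHS = {
  "Reviewer" => 20,
  "Team reviewed" => 30,
  "Score awarded" => 15,
  "AVG Score" => 15,
  "Metrics" => 20
}.freeze

# Insert a new column right after `after`, taking its width from `donor`,
# so that the total table width stays the same.
def add_column(widths, name:, width:, after:, donor:)
  raise ArgumentError, "unknown column #{after}" unless widths.key?(after)
  raise ArgumentError, "#{donor} is too narrow" if widths.fetch(donor) <= width

  result = {}
  widths.each do |col, w|
    result[col] = col == donor ? w - width : w
    result[name] = width if col == after
  end
  result
end

# Horizontal position where a column starts (sum of the widths before it).
def offset_of(widths, column)
  widths.keys.take_while { |col| col != column }.sum { |col| widths[col] }
end

new_widths = add_column(WIDTHS, name: "Author Feedback", width: 10,
                                after: "AVG Score", donor: "Team reviewed")

new_widths.each { |col, w| puts "#{col}: #{w}%" }
puts "Total: #{new_widths.values.sum}%"
puts "Metrics starts at #{offset_of(WIDTHS, 'Metrics')}% before, #{offset_of(new_widths, 'Metrics')}% after"
```

With these assumed numbers, "Team reviewed" shrinks from 30% to 20%, the total stays at 100%, and "Metrics" still starts at 80%, so only the layout inside the "Scores" group changes.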

Files to be Changed

We expect to edit the following files to complete this project:

- app/views/reports/_review_report.html.erb

- app/controllers/review_mapping_controller.rb

- app/models/on_the_fly_calc.rb

- app/views/reports/response_report.html.haml

- app/views/reports/_team_score.html.erb

Test Plan

When the 2018 group wrote their function to calculate author feedback scores, they also wrote an RSpec test for it. The test was located in the on_the_fly_calc_spec.rb file, and the method under test was called 'compute_author_feedback_scores'. Below is a snippet of their RSpec test:

```ruby
describe '#compute_author_feedback_scores' do
  # on_the_fly_calc, assignment and questionnaire are not defined in this snippet;
  # they are assumed to come from let blocks earlier in on_the_fly_calc_spec.rb.
  let(:reviewer) { build(:participant, id: 1) }
  let(:feedback) { Answer.new(answer: 2, response_id: 1, comments: 'Feedback Text', question_id: 2) }
  let(:feedback_question) { build(:question, questionnaire: questionnaire2, weight: 1, id: 2) }
  let(:questionnaire2) { build(:questionnaire, name: "feedback", private: 0, min_question_score: 0, max_question_score: 10, instructor_id: 1234) }
  let(:reviewer1) { build(:participant, id: 2) }
  # Expected result. let needs a symbol name and a block; `let score = {}` raises an error.
  let(:score) { {} }
  let(:team_user) { build(:team_user, team: 2, user: 2) }
  let(:feedback_response) { build(:response, id: 2, map_id: 2, scores: [feedback]) }
  let(:feedback_response_map) { build(:response_map, id: 2, reviewed_object_id: 1, reviewer_id: 2, reviewee_id: 1) }

  before(:each) do
    # Stub the review configuration: one round, review questionnaire id 1.
    allow(on_the_fly_calc).to receive(:rounds_of_reviews).and_return(1)
    allow(on_the_fly_calc).to receive(:review_questionnaire_id).and_return(1)
  end

  context 'verifies feedback score' do
    it 'computes feedback score based on reviews' do
      # Note: the stubs above target on_the_fly_calc, while the method is called on assignment.
      expect(assignment.compute_author_feedback_scores).to eq(score)
    end
  end
end
```

We will look for edge cases that this RSpec test does not cover and extend the test accordingly, to make sure the new functionality is safe to merge into the Expertiza beta branch.
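As an example of the kind of edge cases meant here, the sketch below uses a plain-Ruby stand-in for an author feedback score calculation. The method name `author_feedback_percentage`, its arguments, and its convention of returning `nil` when there is no feedback are assumptions for illustration; this is not the 2018 group's `compute_author_feedback_scores`.

```ruby
# Hypothetical stand-in: average author feedback answer as a percentage of
# max_score, or nil when there is no feedback to average.
def author_feedback_percentage(answers, max_score:)
  raise ArgumentError, "max_score must be positive" unless max_score.positive?

  scored = answers.compact     # skip questions the authors left unanswered
  return nil if scored.empty?  # no feedback: nil instead of 0 or NaN

  (scored.sum * 100.0 / (scored.size * max_score)).round(2)
end

# [label, answers, expected percentage] for a questionnaire scored out of 10.
EDGE_CASES = [
  ["no feedback at all",        [],          nil],
  ["only unanswered questions", [nil, nil],  nil],
  ["answered and blank mixed",  [2, nil, 4], 30.0],
  ["perfect scores",            [10, 10],    100.0]
].freeze

EDGE_CASES.each do |label, answers, expected|
  actual = author_feedback_percentage(answers, max_score: 10)
  status = actual == expected ? "ok" : "MISMATCH"
  puts "#{status}: #{label} -> #{actual.inspect}"
end
```

Each row could become its own `it` block inside the existing `describe`, with the expected values adjusted to whatever the real method returns for those inputs.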

We will also prepare instructions for testers so that they can quickly find the new functionality in our final result and evaluate how well it works.