CSC/ECE 517 Fall 2019 - E1979. Completion/Progress view

Introduction

- In Expertiza, peer reviews are used as a metric to evaluate someone’s project. Once someone has peer reviewed a project, the authors of the project can also provide feedback for this review, called “author feedback.” While grading peer reviews, it would be nice for the instructors to include the author feedback, since it shows how helpful the peer review actually was to the author of the project.

Current Implementation

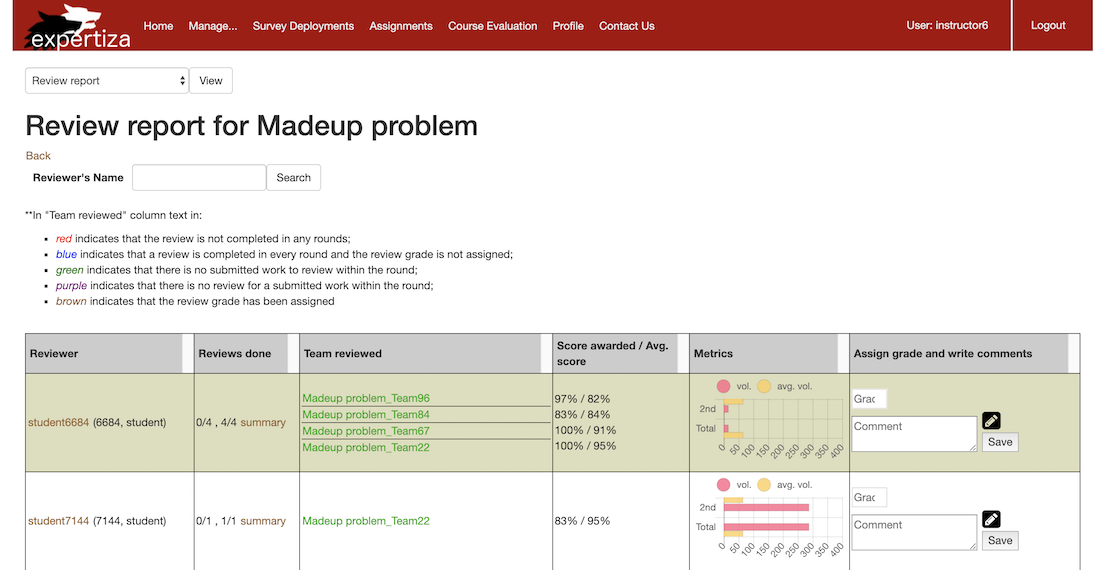

- Currently, the instructor can see only limited information in the review report, such as the number of reviews each student has done and the teams the student has reviewed, with little about author feedback. The screenshot above shows the current report view.

- However, the instructor has no easy way of seeing the author-feedback scores, so it would be far too much trouble to include them in grades for reviewing.

- The aim of this project is therefore to build more feedback information into the reviewer report, so that the instructor of the course can grade reviewers based on both author feedback and review data.

Problem Statement

- We need to integrate the following review-performance information into the report:

- # of reviews completed

- Length of reviews

- Summary of reviews

- Whether reviewers added a file or link to their review

- The average ratings they received from the authors

- An interactive visualization or table that showed this would be GREAT (we may use the "Highcharts" JavaScript library to do it).

- After analyzing the current code, we found that the number of reviews, the summary of reviews, and the visualization of review length already exist in the system, so we only need to finish the following tasks.

- Whether reviewers added a file or link to their review

- The average ratings they received from the authors

- According to the project description, the average-ratings part of this project was done last year, when a new column (author feedback) was added to the review report. That implementation still has some drawbacks, so we also need to improve several functions related to author feedback.

- Fix the code that calculates the average feedback score

- Make the author feedback column fit the existing view

- Our plan and solutions for the project are described in the following sections.

Project Design

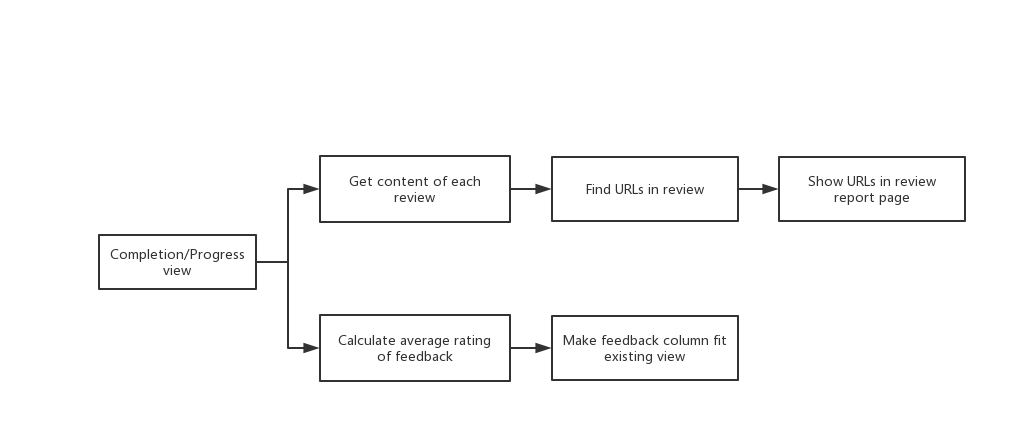

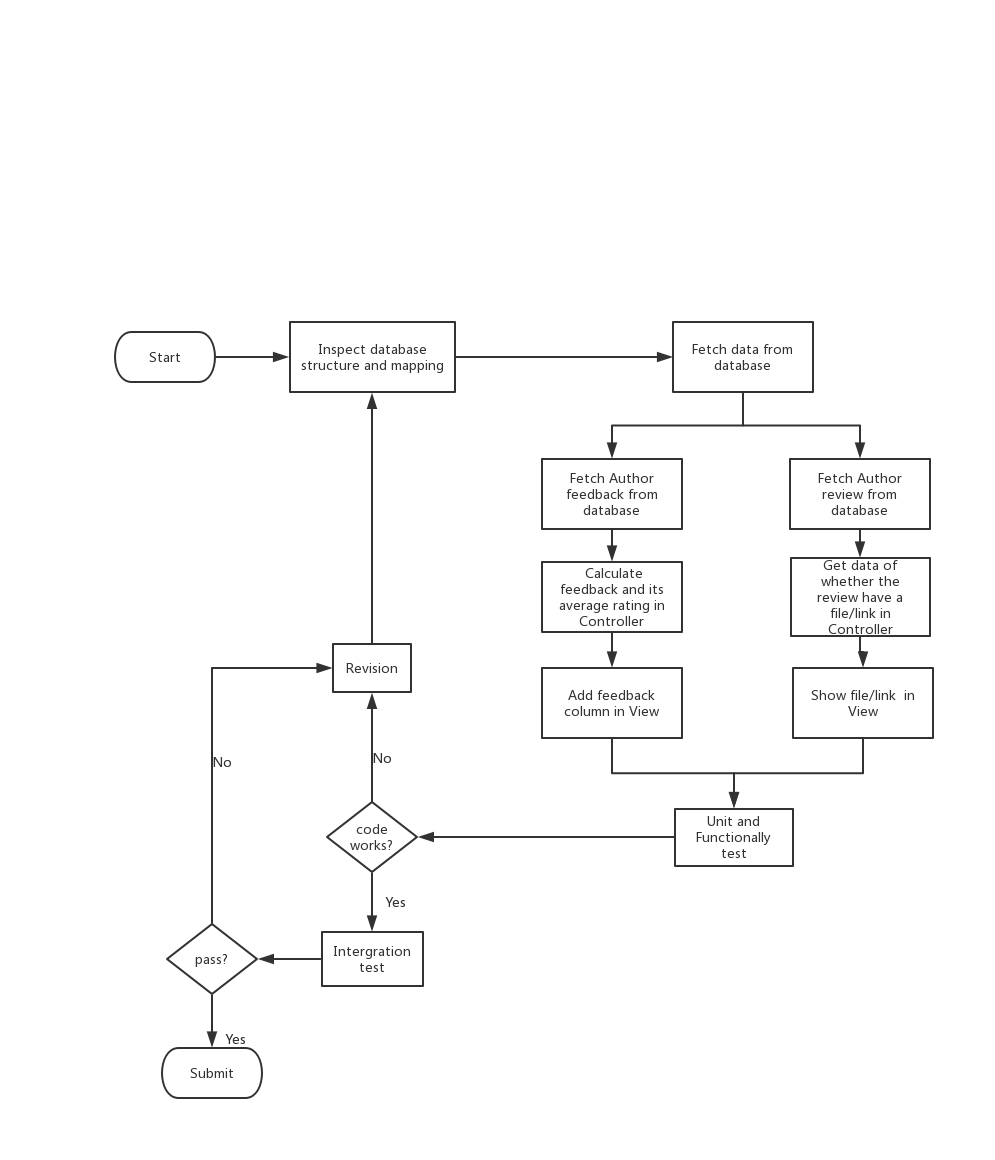

The UML flow chart shows the basic design of this project.

View Improvement

- We decided to change mainly the "Review report" page (log in as an instructor, then go to Manage -> Assignments -> View review report) in three respects.

- We plan to add one more column that shows the average author-feedback rating for a student's review of a particular assignment. The logic for calculating this average is similar to the logic already implemented for the "Score Awarded/Average Score" column.

- We plan to improve the review-length column, which currently shows only the raw values and their average. We will render review length as a chart using the "Highcharts" JavaScript library so that it is clearer for instructors. A sample of the planned chart is shown in the accompanying figure.

- We plan to list all links attached to a review below the name of the reviewed team. If a review contains links, they are shown there.
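The review-length chart described above needs the word count of each review and the average in a shape that a charting library can consume. The following is a minimal sketch under assumed names: `review_length_chart_options` and the sample reviews are hypothetical and not part of Expertiza, and the option keys (`chart`, `title`, `xAxis`, `series`) follow the general layout of a Highcharts options object rather than the project's actual configuration.

```ruby
require 'json'

# Hypothetical helper (not part of Expertiza): turns the text of each review
# into an options hash laid out like a Highcharts bar chart.
def review_length_chart_options(reviews)
  # reviews maps a label (e.g. a team name) to the review text
  lengths = reviews.transform_values { |text| text.to_s.split.size }
  average = lengths.empty? ? 0 : lengths.values.sum.to_f / lengths.size

  {
    chart: { type: 'bar' },
    title: { text: 'Review length (words)' },
    xAxis: { categories: lengths.keys },
    series: [
      { name: 'Words', data: lengths.values },
      { name: 'Average', data: Array.new(lengths.size, average.round(1)) }
    ]
  }
end

sample = {
  'Team A' => 'Clear design and good tests',
  'Team B' => 'Needs more comments'
}
options = review_length_chart_options(sample)
puts JSON.pretty_generate(options)
```

The resulting JSON could be handed to the chart in the view; how the real page wires this up is not specified here.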

Controller Improvement

The controller design is driven by the data the view needs. We found that the links and files in a review are both stored as URLs, so we only need to list the URLs in reviews.

We changed the following methods. The specific changes are shown in Code Changes.

- calcutate_average_author_feedback_score

- list_url_in_comments

Code Changes

Here are the files we changed.

1. _review_report.html.erb

We added a new column to show the average author-feedback score.

    <!--Author feedback-->
    <!--Dr.Kidd required to add a "author feedback" column that
    shows the average score the reviewers received from the authors. In this case, she can see all the data on a single screen.-->
    <!--Dr.Kidd's course-->
    <td align='left'>
      <% @response_maps.each_with_index do |ri, index| %>
        <% if Team.where(id: ri.reviewee_id).length > 0 %>
          <% if index == 0 %>
            <div>
          <% else %>
            <div style="border-top: solid; border-width: 1px;">
          <% end %>
          <%= calcutate_average_author_feedback_score(@assignment.id, @assignment.max_team_size, ri.id, ri.reviewee_id) %>
          </div>
        <% end %>
      <% end %>
    </td>

Then we use the newly added method to show all links below each team name.

    <!-- E1979: only list all url(start with http or https) in comments-->
    <% urls = list_url_in_comments(ri.id) %>
    </div>
    <% unless urls.nil? %>
      <% urls.each do |url| %>
        <%= link_to url.to_s, url %>
      <% end %>
    <% end %>

2. review_mapping_helper.rb

We first fixed the calcutate_average_author_feedback_score method.

    # E1979: Completion/Progress view changes
    def calcutate_average_author_feedback_score(assignment_id, max_team_size, response_map_id, reviewee_id)
      review_response = ResponseMap.where(id: response_map_id).try(:first).try(:response).try(:last)
      author_feedback_avg_score = "-- / --"
      unless review_response.nil?
        # Total score of author feedback given by all team members.
        author_feedback_total_score = 0
        # Max score of author feedback
        author_feedback_max_score = 0
        # Number of author feedback given by all team members.
        author_feedback_count = 0
        # For each user in teamsUser find author feedback score.
        TeamsUser.where(team_id: reviewee_id).try(:each) do |teamsUser|
          user = teamsUser.try(:user)
          author = Participant.where(parent_id: assignment_id, user_id: user.id).try(:first) unless user.nil?
          feedback_response = ResponseMap.where(reviewed_object_id: review_response.id, reviewer_id: author.id).try(:first).try(:response).try(:last) unless author.nil?
          unless feedback_response.nil?
            author_feedback_total_score += feedback_response.total_score
            author_feedback_max_score = feedback_response.maximum_score
            author_feedback_count += 1
          end
        end
        # return "-- / --" if no author feedback, otherwise return avg_score
        author_feedback_avg_score = author_feedback_count == 0 ? "-- / --" : "#{author_feedback_total_score/author_feedback_count} / #{author_feedback_max_score}"
      end
      author_feedback_avg_score
    end
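To make the scoring rule above easier to follow outside Rails, here is a minimal plain-Ruby sketch of the same averaging step. `FeedbackResponse` and `average_feedback_label` are hypothetical stand-ins for the ActiveRecord objects and the helper, used only for illustration; they assume each feedback response exposes `total_score` and `maximum_score` as in the method above.

```ruby
# Hypothetical stand-in for an author-feedback response record.
FeedbackResponse = Struct.new(:total_score, :maximum_score)

# Mirrors the averaging step of the helper: nil entries (team members who
# gave no feedback) are skipped, and "-- / --" is returned when nothing is left.
def average_feedback_label(feedback_responses)
  given = feedback_responses.compact
  return '-- / --' if given.empty?

  total = given.sum(&:total_score)
  max_score = given.last.maximum_score
  # Integer division, as in the helper: 27 / 2 yields 13, not 13.5.
  "#{total / given.size} / #{max_score}"
end

puts average_feedback_label([FeedbackResponse.new(13, 15), nil, FeedbackResponse.new(14, 15)])
puts average_feedback_label([nil, nil])
```

Because the scores here are integers, the average is truncated. Converting the total with `to_f` before dividing would keep the fractional part if that were preferred.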

Then we added a new method, list_url_in_comments, to list all URLs.

    # E1979: Completion/Progress view changes
    # list all urls in comments for each review map
    def list_url_in_comments(response_map_id)
      response = Response.where(map_id: response_map_id)
      # collect the comments of every answer in each response, skipping missing ones
      comments = Answer.where(response_id: response.ids).map { |ans| ans.try(:comments) }.compact
      # nothing to scan when no answer has a comment
      return nil if comments.empty?
      # keep every whitespace-separated word that starts with http:// or https://
      comments.join(' ').split.select { |word| word =~ %r{\Ahttps?://\S+} }
    end
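The word-by-word scan above can be tried on sample data without a database. This is a sketch with a hypothetical `urls_in_comments` method that takes plain strings instead of `Answer` records; the sample comments and URLs are made up. It anchors the pattern at the start of each word, following the stated intent of keeping words that start with http or https.

```ruby
# Hypothetical plain-Ruby version of the URL scan: takes an array of comment
# strings (nil allowed) instead of Answer records.
def urls_in_comments(comments)
  words = comments.compact.join(' ').split
  # keep only words that begin with http:// or https://
  words.select { |word| word.match?(%r{\Ahttps?://\S+}) }
end

comments = [
  'See https://example.com/guide for details.',
  nil,
  'Docs at http://example.org/api, also (https://example.net) in brackets'
]
p urls_in_comments(comments)
# prints ["https://example.com/guide", "http://example.org/api,"]
```

Splitting on whitespace keeps trailing punctuation attached to a URL (the comma after `http://example.org/api`) and skips a URL that is preceded by another character, such as an opening bracket.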

3. review_mapping_helper_spec.rb

4. review_mapping_spec.rb

Finally, we added tests for these changes. The specific test cases are shown in Test Plan.

Test Plan

We use unit tests and functional tests written in RSpec, and integration testing with Travis CI and the Coveralls bot.

Automated Testing Using RSpec

Because we added a new column for average author feedback, we added a corresponding RSpec test in review_mapping_helper_spec.rb.

    describe "Test assignment review report" do
      before(:each) do
        create(:instructor)
        create(:assignment, course: nil, name: 'Test Assignment')
        assignment_id = Assignment.where(name: 'Test Assignment')[0].id
        login_as 'instructor6'
        visit "/reports/response_report?id=#{assignment_id}"
        click_button "View"
      end

      it 'should contain Average Author feedback' do
        create(:review_response_map)
        expect(page).to have_content('Average Author feedback')
      end
    end

During testing, we found that the other columns had no tests either, so we also added tests for the number of reviews completed, the length of reviews, and the summary of reviews in review_mapping_spec.rb.

    it "should calculate number of reviews correctly" do
      create(:assignment_due_date, deadline_type: DeadlineType.where(name: "submission").first, due_at: DateTime.now.in_time_zone + 1.day)
      create(:assignment_due_date, deadline_type: DeadlineType.where(name: "review").first, due_at: DateTime.now.in_time_zone + 1.day)
      participant_reviewer = create :participant, assignment: @assignment
      participant_reviewer2 = create :participant, assignment: @assignment
      login_as("instructor6")
      visit "/review_mapping/list_mappings?id=#{@assignment.id}"
      # assign 2 reviews to student 4
      visit "/review_mapping/select_reviewer?contributor_id=1&id=1"
      add_reviewer("student4")
      visit "/review_mapping/select_reviewer?contributor_id=3&id=1"
      add_reviewer("student4")
      visit "/reports/response_report?id=#{@assignment.id}"
      click_button "View"
      expect(page).to have_content('0/2')
    end

    it "can show length of reviews", js: true do
      create(:assignment_due_date, deadline_type: DeadlineType.where(name: "submission").first, due_at: DateTime.now.in_time_zone + 1.day)
      create(:assignment_due_date, deadline_type: DeadlineType.where(name: "review").first, due_at: DateTime.now.in_time_zone + 1.day)
      login_as("instructor6")
      visit "/review_mapping/list_mappings?id=#{@assignment.id}"
      # assign 2 reviews to student 4
      visit "/review_mapping/select_reviewer?contributor_id=1&id=1"
      add_reviewer("student4")
      visit "/review_mapping/select_reviewer?contributor_id=3&id=1"
      add_reviewer("student4")
      visit "/reports/response_report?id=#{@assignment.id}"
      click_button "View"
      # it should find there is a chart in the view that shows the review length
      expect(page).to have_selector("#chart-0")
    end

    it "can show summary" do
      participant_reviewer = create :participant, assignment: @assignment
      participant_reviewer2 = create :participant, assignment: @assignment
      login_as("instructor6")
      visit "/review_mapping/list_mappings?id=#{@assignment.id}"
      # add_reviewer
      first(:link, 'add reviewer').click
      add_reviewer(participant_reviewer.user.name)
      expect(page).to have_content participant_reviewer.user.name
      # add_meta_reviewer
      first(:link, 'add reviewer').click
      add_reviewer(participant_reviewer.user.name)
      click_link('add metareviewer')
      add_matareviewer(participant_reviewer2.user.name)
      expect(page).to have_content participant_reviewer2.user.name
      visit "/reports/response_report?id=#{@assignment.id}"
      click_button "View"
      expect(page).to have_content('summary')
    end

Coverage

Coveralls reports 24.272% coverage for the whole of Expertiza.

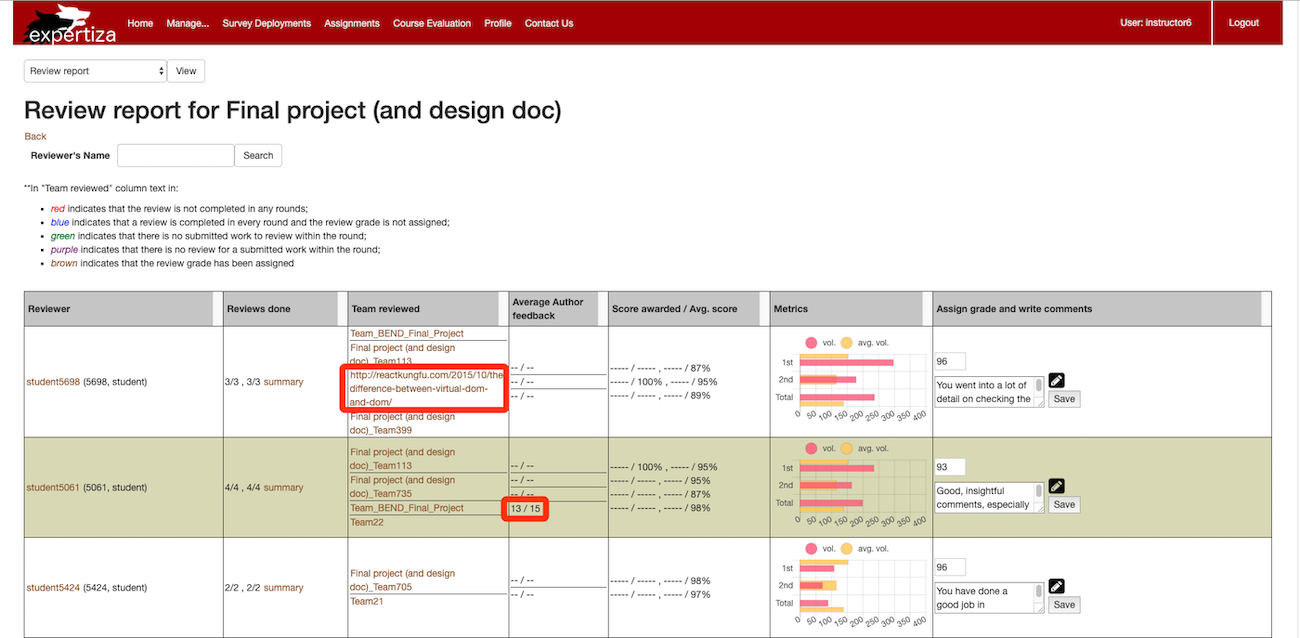

Manual UI Testing

- Log in as an instructor

- Click Manage and choose Assignments

- Select the assignment you want to check, for example, Final project (and design doc)

- Choose review report and click View

- Check the "Average Feedback Score" column. For example, student5061 has reviewed Team_BEND_Final_Project, and the Average Feedback Score is 13 out of a total score of 15.

- Check the URLs below each "Team reviewed" entry. For example, student5698 has reviewed Final project(and design doc)_Team_113, and the review contains a URL about reactkungfu.

The anticipated result should be the same as shown below.

Team Information

Mentor: Mohit Jain

- Jing Cai

- Yongjian Zhu

- Weiran Fu

- Dongyuan Wang