CSC/ECE 517 Fall 2017/E1783 Convolutional data extraction from Github

GOAL

This project adds a feature that integrates GitHub metrics into Expertiza. It helps instructors grade projects by providing more information about each individual's workload, and it enables early detection of failing projects based on a group's working pattern. This wiki page documents the changes made as part of E1783, which allow users with instructor privileges to view GitHub metrics for a student's submitted assignment.

Team: Kashish Aggarwal

Sanya Kathuria

Madhu Vamsi Kalyan

Mentor - Yifan Guo

INTRODUCTION

Expertiza is a web application where students can submit and peer-review learning objects such as assignments, articles, code, and websites. Convolutional data extraction from GitHub integrates GitHub metrics into Expertiza, an open-source project, to help instructors grade projects by providing more information about each individual's workload and to detect failing projects early based on a group's working pattern. By convolutional data, we mean fields of the dataset that cannot be extracted directly, such as the working pattern: the number of commits, lines of code, and files that the pull request added, modified, or deleted on each day of the project period. The project period is a range of days that can be tracked on Expertiza.

We considered the following metrics:

o Number of commits each day throughout the project's period.

o Number of files changed each day throughout the project's period.

o Lines of code changed each day throughout the project's period.
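To make the working pattern concrete, here is a minimal Ruby sketch of how the three daily metrics could be computed over a project period. It assumes each commit has already been reduced to a hypothetical CommitRecord holding the commit date, the number of files it changed, and the number of lines it changed; these names are illustrative and are not part of Expertiza. Days without commits are kept with zero counts, since gaps in activity are part of the pattern an instructor would want to see.

```ruby
require "date"

# Hypothetical, simplified commit record; field names are assumptions.
CommitRecord = Struct.new(:committed_on, :files_changed, :lines_changed)

# Returns a hash keyed by each day of the project period, holding the number
# of commits, files changed, and lines changed on that day (0 on idle days).
def daily_metrics(commits, period_start, period_end)
  metrics = {}
  (period_start..period_end).each do |day|
    metrics[day] = { commits: 0, files: 0, lines: 0 }
  end

  commits.each do |c|
    day = metrics[c.committed_on]
    next if day.nil? # ignore commits outside the project period
    day[:commits] += 1
    day[:files] += c.files_changed
    day[:lines] += c.lines_changed
  end
  metrics
end
```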

SETUP FOR EXPERTIZA PROJECT

Docker:

Docker was set up on a Windows PC using the following steps:

Start Docker on Windows

docker run --expose 3000 -p 3000:3000 -v //c//Users//Srikar//Expertiza://c//Users//Srikar//Expertiza -it winbobob/expertiza-fall2016

/etc/init.d/mysql start
mysql -uroot -p
show databases;
quit

git clone https://github.com/srikarpotta/expertiza.git
cd expertiza
cp config/database.yml.example config/database.yml
cp config/secrets.yml.example config/secrets.yml

bundle install
rake db:migrate
sudo apt-get install npm
npm install -g bower

On a Local Machine:

We used macOS, so the following steps apply to macOS. They will help you set up the Expertiza project on your local machine. The steps combine those given in the Development Setup guide and the provided document, plus a few steps that were missing from both. We followed this order:

- Fork the git repository mentioned above

- Clone the repository to your local machine

- Install Homebrew

- Install rbenv

brew update
brew install rbenv
brew install ruby-build

- Install dependencies

brew install aspell gcc47 libxml2 libxslt graphviz

- Install gems

export JAVA_HOME=/etc/alternatives/java_sdk
bundle install

- Update the YAML configuration files

- Go to expertiza/config and rename secrets.yml.example to secrets.yml

- Go to expertiza/config and rename database.yml.example to database.yml

- Edit database.yml to include the root password for your local MySQL in the “password” field

- Install mysql (https://gist.github.com/nrollr/a8d156206fa1e53c6cd6)

- Log into MySQL as root (mysql is generally located in /usr/local/mysql/bin/)

mysql -uroot -p

- Create expertiza user

create user expertiza@localhost;

- Create the databases

create database pg_development;
create database pg_test;

- Set privileges for expertiza user

grant all on pg_development.* to expertiza@localhost;
grant all on pg_test.* to expertiza@localhost;

- Install javascript libraries

sudo apt-get install npm
sudo npm install -g bower
bower install

- Download the scrubbed Expertiza database dump (https://drive.google.com/file/d/0B2vDvVjH76uEMDJhNjZVOUFTWmM/view)

- Load this SQL file into the pg_development database created above.

- Run bundle exec rake db:migrate

- Run bundle exec rake db:test:prepare

- Run rails s

IMPORTANT: Export the EXPERTIZA_GITHUB_TOKEN variable in your .bashrc file, set to the value of your GitHub personal access token.

- Open a browser and go to localhost:3000

- Login credentials

Username - instructor6 Password - password

sudo rm /usr/bin/node

sudo ln -s /usr/bin/nodejs /usr/bin/node

bower install --allow-root

thin start

MODIFIED FILES

We have modified the following files:

o submission_records_controller.rb: Business logic. The existing submission records controller retrieves submissions from the submission_records table for a particular team ID. We reuse the same page to show GitHub metrics for the submitted GitHub link, which is stored in the 'content' column of the same table. Our code picks the latest GitHub URL submitted for an assignment and then updates the git_data table to store its metrics. At the same time, the code cleans up the git_data table by deleting data for any previous submissions that the user has removed. The controller is also responsible for initializing the necessary instance variables, including an array of authors that serves as an index for our graphs in the view.

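def index
  latest_record_counter = 0
  @submission_records = SubmissionRecord.where(team_id: params[:team_id])
  # Visit records in reverse order so the most recent submission is seen first.
  @submission_records.reverse.each do |record|
    matches = GIT_HUB_REGEX.match(record.content)
    # Skip records whose content is not a GitHub link.
    next if matches.nil?
    if record.operation == "Submit Hyperlink"
      if latest_record_counter.zero?
        # Latest GitHub link: refresh its metrics in git_data.
        update_git_data(record.id)
      else
        # Older GitHub links: remove their stored metrics.
        git_data_cleanup(record.id)
      end
    end
    latest_record_counter += 1
  end
end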
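The page does not show GIT_HUB_REGEX itself. As an illustration only, the sketch below assumes the submitted link is a pull request URL and shows one way to extract the owner, repository name, and pull request number that the helper methods take as parameters. PULL_REQUEST_URL and parse_pull_request_url are hypothetical names, and the project's actual pattern may differ.

```ruby
# Hypothetical pattern for GitHub pull request URLs, e.g.
# https://github.com/<owner>/<repo>/pull/<number>; not the project's GIT_HUB_REGEX.
PULL_REQUEST_URL = %r{\Ahttps?://(?:www\.)?github\.com/(?<owner>[^/\s]+)/(?<repo>[^/\s]+)/pull/(?<pull>\d+)}

# Returns { owner:, repo:, pull: } for a pull request URL, or nil otherwise.
def parse_pull_request_url(content)
  m = PULL_REQUEST_URL.match(content.to_s.strip)
  return nil if m.nil?
  { owner: m[:owner], repo: m[:repo], pull: m[:pull].to_i }
end
```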

o git_data_helper.rb

We wrote this helper class as a wrapper around our REST calls to the GitHub API. Each method takes the repository owner and repository name as parameters, plus one additional parameter (the pull request number or the commit SHA) to fetch pull-request-specific or commit-specific data. These methods make the service calls and return the response as JSON.

def self.fetch_files(owner, repo, file_pull)
  fetch("#{BASE_API}/repos/#{owner}/#{repo}/pulls/#{file_pull}/files")
end

def self.fetch_pull_by_number(owner, repo, pull)
  fetch("#{BASE_API}/repos/#{owner}/#{repo}/pulls/#{pull}")
end

def self.fetch_commit(owner, repo, sha)
  fetch("#{BASE_API}/repos/#{owner}/#{repo}/commits/#{sha}")
end
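The fetch method and BASE_API constant used above are not shown here. A minimal sketch of what they could look like with Ruby's standard Net::HTTP and JSON libraries follows. It assumes BASE_API is the public https://api.github.com root and that the token comes from the EXPERTIZA_GITHUB_TOKEN variable described in the setup section; GitDataFetchSketch and build_request are hypothetical names. Building the request separately lets the headers be checked without a network call.

```ruby
require "json"
require "net/http"
require "uri"

module GitDataFetchSketch
  # Assumption: the public GitHub REST API root.
  BASE_API = "https://api.github.com".freeze

  # Builds an authenticated GET request without sending it.
  def self.build_request(url, token)
    request = Net::HTTP::Get.new(URI(url))
    request["Accept"] = "application/vnd.github.v3+json"
    # Unauthenticated calls still work but have a much lower rate limit.
    request["Authorization"] = "token #{token}" unless token.to_s.empty?
    request
  end

  # Sends the request and returns the parsed JSON body; raises on HTTP errors.
  # Assumption: the token is read from EXPERTIZA_GITHUB_TOKEN by default.
  def self.fetch(url, token = ENV["EXPERTIZA_GITHUB_TOKEN"])
    uri = URI(url)
    response = Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https") do |http|
      http.request(build_request(url, token))
    end
    raise "GitHub API error #{response.code} for #{url}" unless response.is_a?(Net::HTTPSuccess)
    JSON.parse(response.body)
  end

  def self.fetch_files(owner, repo, pull)
    fetch("#{BASE_API}/repos/#{owner}/#{repo}/pulls/#{pull}/files")
  end
end
```

One caveat: GitHub paginates list endpoints such as the pull request files endpoint, so a complete implementation would also follow the Link response header to collect every page.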

o git_datum.rb

This is the model for our git_data table, which has the following attributes:

1. Pull request number.
2. Author who committed in that pull request.
3. Number of commits made by that author in the pull request.
4. Number of files changed by that author in the pull request.
5. Number of lines added by that author in the pull request.
6. Number of lines deleted by that author in the pull request.
7. Number of lines modified by that author in the pull request.
8. Date when the pull request was created.

The model has a class method, update_git_data, which updates the table for the active submission record. It calls the methods in git_data_helper to make the GitHub API calls, transforms the data returned by the service, and then stores it in the table. Each time the method is called, it processes only the delta (the changes to the pull request since the previous call). This keeps updates fast and avoids redundant data.
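The delta idea can be sketched as follows: remember which commit SHAs have already been stored, skip them on the next call, and sum only the new commits per author. The PullCommit struct and the aggregate_delta method below are hypothetical; they approximate the attributes listed above and do not reproduce the actual update_git_data implementation.

```ruby
require "set"

# Hypothetical per-commit data, reduced to the fields git_data aggregates.
PullCommit = Struct.new(:sha, :author, :files_changed, :additions, :deletions)

# Keeps only commits whose SHA has not been processed before (the delta),
# sums their statistics per author, and returns the updated set of seen SHAs.
def aggregate_delta(commits, processed_shas)
  fresh = commits.reject { |c| processed_shas.include?(c.sha) }
  totals = {}
  fresh.each do |c|
    row = (totals[c.author] ||= { commits: 0, files: 0, additions: 0, deletions: 0 })
    row[:commits] += 1
    row[:files] += c.files_changed
    row[:additions] += c.additions
    row[:deletions] += c.deletions
  end
  [totals, processed_shas | fresh.map(&:sha)]
end
```

This sums files per commit, so a file touched by two commits is counted twice; counting distinct files would require keeping each commit's file list.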

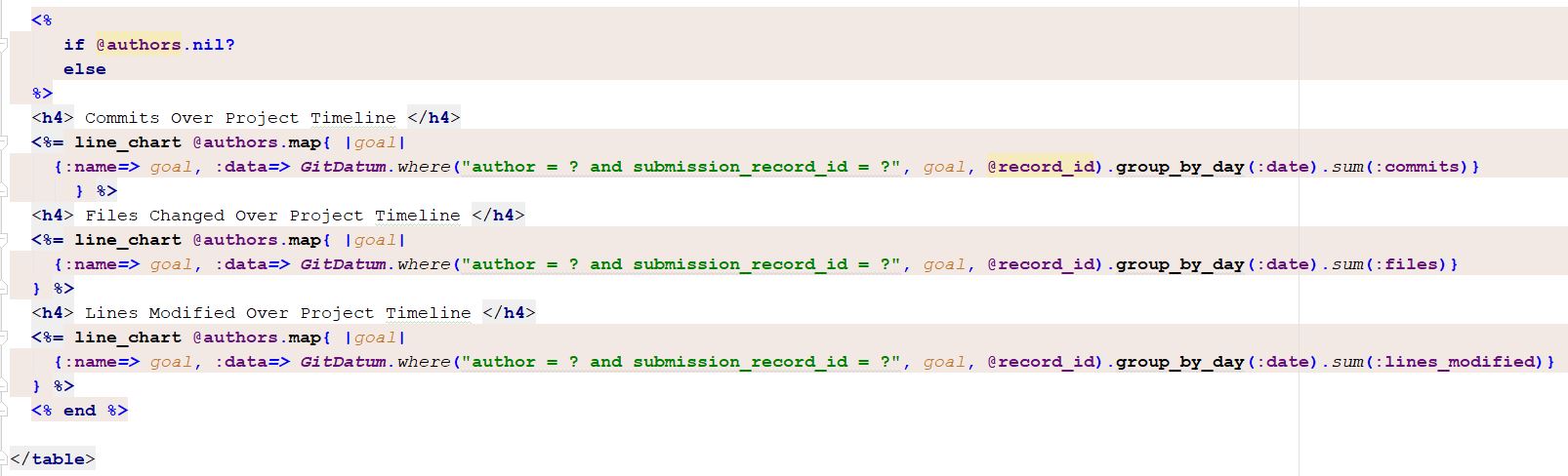

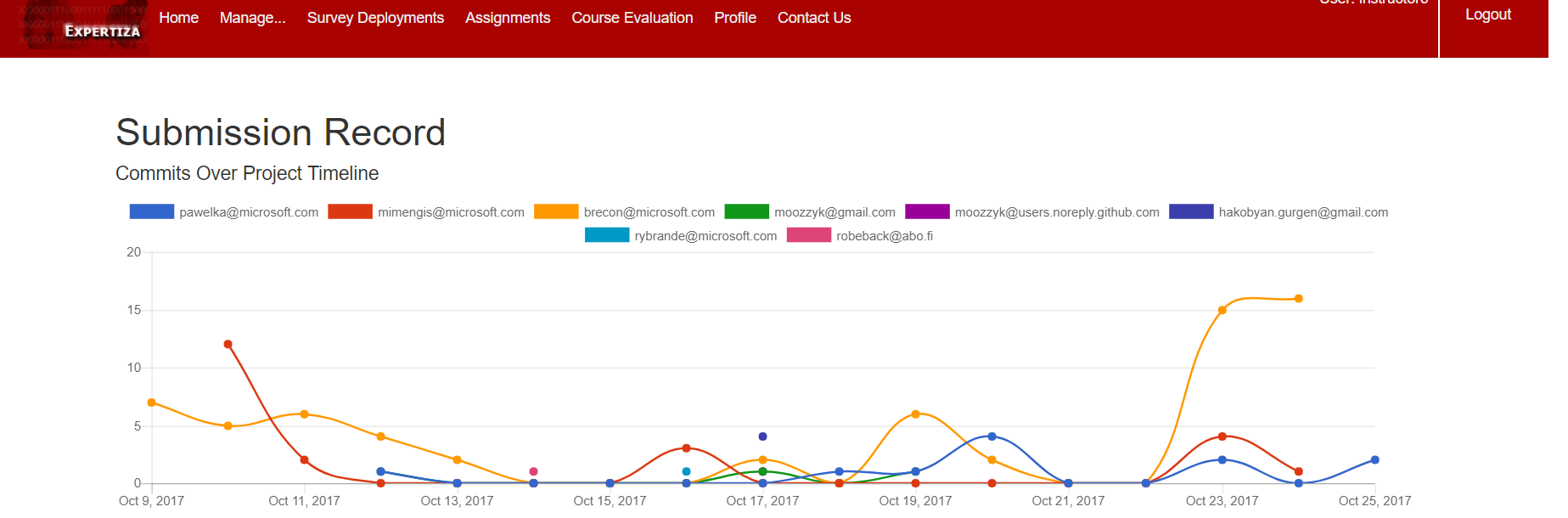

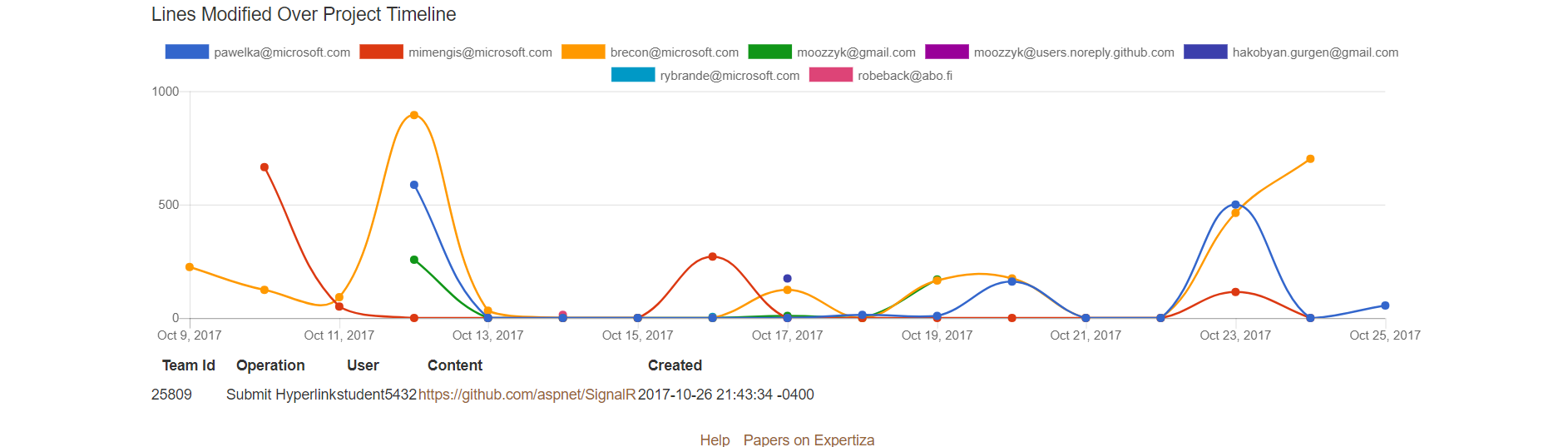

o submission_records/index.html.erb

The index view for submission_records has three charts that show the number of commits, files, and lines committed, added, or modified by each team member over the timeline. We used two gems, 'chartkick' and 'groupdate', to aggregate our data and visualize it as graphs in the view. Below is a screenshot of how these graphs appear on the page.
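Chartkick's line_chart accepts multiple series as an array of hashes, each with a :name and a :data hash keyed by date. The plain-Ruby sketch below builds that structure for commits per author per day, assuming hypothetical GitDataRow records with author, pull_created_at, and commits fields. In the application, groupdate's group_by_day would normally do the per-day grouping in the database instead; this version only illustrates the shape of the data.

```ruby
require "date"

# Hypothetical stand-in for a git_data row; field names are assumptions.
GitDataRow = Struct.new(:author, :pull_created_at, :commits)

# Builds one series per author (sorted by name) in the form Chartkick's
# line_chart accepts, filling missing days with 0 so every line spans the period.
def commits_series(rows, period_start, period_end)
  days = (period_start..period_end).to_a
  rows.group_by(&:author).sort_by { |author, _| author }.map do |author, author_rows|
    per_day = Hash.new(0)
    author_rows.each { |r| per_day[r.pull_created_at] += r.commits }
    { name: author, data: days.map { |d| [d, per_day[d]] }.to_h }
  end
end
```

The resulting array can then be passed to line_chart in the ERB view.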

We mainly used the following tables:

o submission_records: stores the submission data for all assignments of a particular team ID, recorded each time the team performs a submit operation for an assignment.

o git_data: stores the GitHub metrics derived from the latest submission record, which are used for visualization.

== OUTPUT ==