CSC/ECE 517 Fall 2016 E1709 Visualizations for instructors

Introduction

Expertiza<ref>https://expertiza.ncsu.edu/</ref> is an open-source web application for creating reusable learning objects through peer review to facilitate incremental learning. Students can submit learning objects such as articles, wiki pages, and repository links, and improve them with the help of peer reviews. The project has been developed using the Ruby on Rails<ref>https://en.wikipedia.org/wiki/Ruby_on_Rails</ref> framework and is supported by the National Science Foundation.

Project Description

Purpose and Scope

Expertiza assignments are based on a peer review system in which the instructor creates rubrics for an assignment through questionnaires, and students use these questionnaires to review other students' submissions. The author of a submission is given an opportunity to provide feedback on these reviews. Every question in a questionnaire is graded on a pre-defined grading scale. Instructors can view a report of the scores reviewers gave a student, a report of the feedback scores given to the reviewers, and several other reports, and they grade the student based on these. However, each report is on a separate page, so an instructor has to navigate through many pages before grading a student. The first requirement addresses this problem by merging the review scores and the author feedback on the same page.

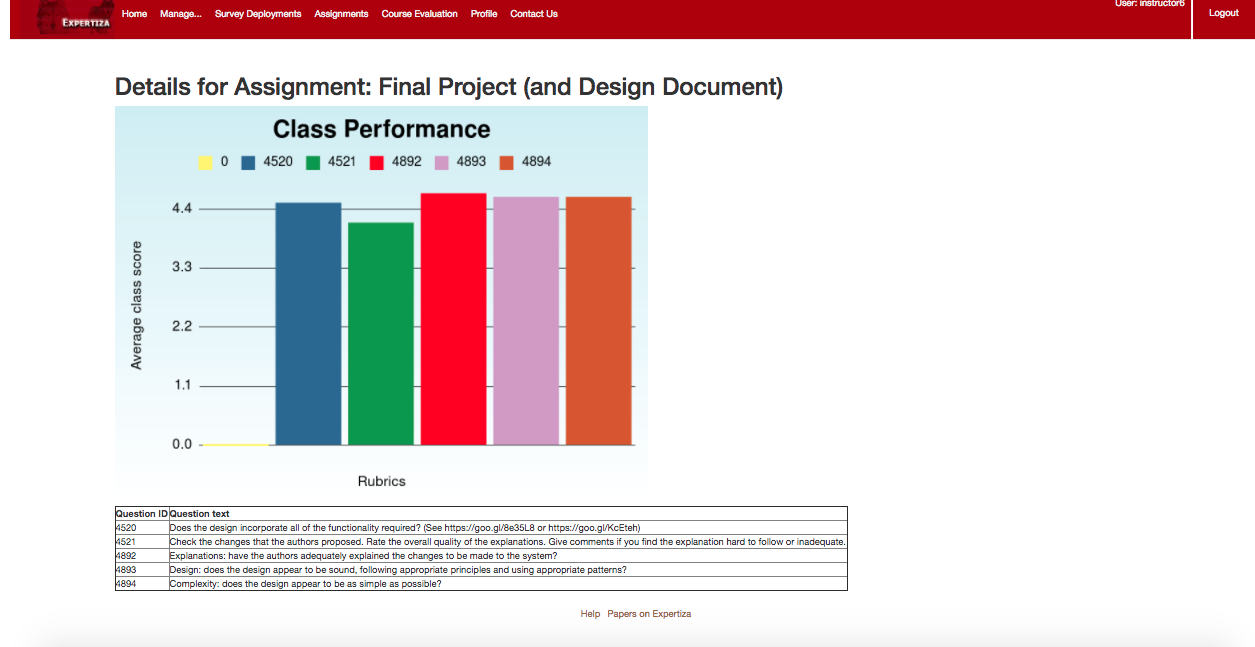

Furthermore, there is no way for an instructor to evaluate the rubric they have posted for an assignment. Students may consistently receive negative reviews on a rubric criterion that is not entirely relevant to the assignment, which lowers their scores. A report of average class scores for each rubric criterion in the questionnaires would help instructors refine their rubrics and understand where students generally perform well and where they struggle. This new report forms the second part of the requirement.

We are not modifying any of the existing functionalities of Expertiza. Our work would involve modifying the review report and creating a new report for average class scores.

Task Description

The project requires completion of the following tasks:

- Integrate review data with author feedback data to help instructors grade the reviewers.

- Create a new table for review and author feedback report.

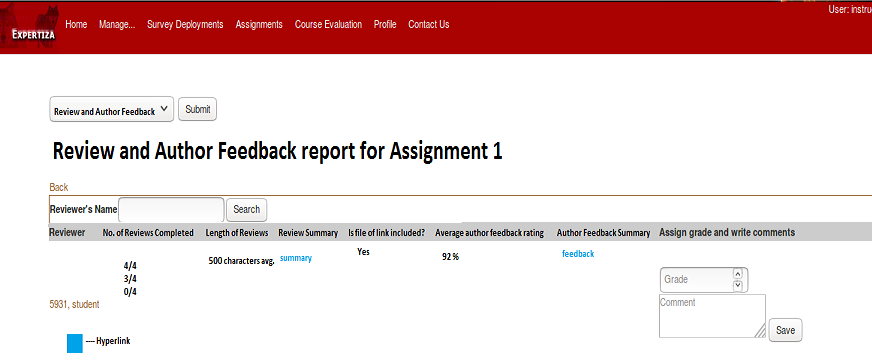

- The new table should have the following information: reviewer name, the number of reviews completed by the reviewer, length of reviews, review summary, whether a link or a file is attached, average author feedback rating per team, author feedback summary, and a field where the instructor can enter grades and comments.

- Add interactive visualization to show the class performance of an assignment to an instructor.

- Create a new route/view/controller for class performance.

- Add a new link to point to the new controller created. This new link will be created per assignment.

- Create two new views, one for selecting rubric criteria and second to show the graph.

- Create graphs to show the class performance as per the rubric metrics selected dynamically.

Project Design

Design Patterns

Iterator Pattern<ref>https://en.wikipedia.org/wiki/Iterator_pattern</ref>: The iterator design pattern uses an iterator to traverse a container and access its elements. When we are implementing the response and author feedback report, we will be iterating through each reviewer to get the review performed by them and then each author based on the feedback given for each review. This iteration will occur with the data returned by the ResponseMap model for all of the review and feedback information. For the class performance report, we will be iterating through each questionnaire per assignment, and thereafter each question per questionnaire. The same iteration will also be required to get answers per question per reviewer.
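The traversal described above can be sketched in plain Ruby as follows. This is only an illustration of the iterator pattern, not the project's code: `AssignmentRubric` and the `Struct` stand-ins for questionnaires and questions are hypothetical names, whereas the real data comes from ActiveRecord models.

```ruby
# Hypothetical stand-ins for Expertiza records (the real ones are ActiveRecord models).
Question = Struct.new(:id, :text)
Questionnaire = Struct.new(:name, :questions)

# A container that exposes its elements only through an iterator.
# Including Enumerable adds map, select, count, etc. on top of #each.
class AssignmentRubric
  include Enumerable

  def initialize(questionnaires)
    @questionnaires = questionnaires
  end

  # Yields every question of every questionnaire, in order.
  def each
    return enum_for(:each) unless block_given?

    @questionnaires.each do |questionnaire|
      questionnaire.questions.each { |question| yield question }
    end
  end
end

rubric = AssignmentRubric.new([
  Questionnaire.new("Review round 1", [Question.new(1, "Is the design clear?"),
                                       Question.new(2, "Are tests included?")]),
  Questionnaire.new("Author feedback", [Question.new(3, "Was the review helpful?")])
])

# Callers traverse the questions without knowing how they are stored.
question_ids = rubric.map(&:id)
```

Because the nesting of questionnaires and questions is hidden behind `each`, the same traversal could later be reused to collect answers per question without changing the calling code.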

MVC Pattern<ref>https://www.tutorialspoint.com/design_pattern/mvc_pattern.htm</ref>: In the MVC design pattern, a controller processes the request, interprets the data in the model, and then renders a particular view. To render the review and author feedback report, we check the data submitted from the form in the UI in the ReviewMappingController. Depending on that data, we query the relevant models and then display the corresponding view.
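A minimal, framework-free sketch of this flow is given below. It is not Rails code: `ReportModel`, `ReportView`, `ReportController`, the `:report_type` key, and the report type strings are all assumptions made for illustration, and the real action lives in a Rails controller.

```ruby
# Model: hypothetical in-memory stand-in for the data a report needs.
class ReportModel
  def self.reviewers
    %w[alice bob]
  end
end

# View: renders whatever the controller hands it and holds no business logic.
class ReportView
  def self.render(template, rows)
    "#{template}: #{rows.join(', ')}"
  end
end

# Controller: inspects the submitted form data, queries the model, picks a view.
class ReportController
  def response_report(params)
    case params[:report_type]
    when "ReviewResponseMap" # assumed name for the existing review report
      ReportView.render("review_report", ReportModel.reviewers)
    when "ReviewAndFeedbackResponseMap" # hypothetical name for the new combined report
      ReportView.render("response_and_feedback", ReportModel.reviewers)
    else
      ReportView.render("unknown_report", [])
    end
  end
end

output = ReportController.new.response_report(report_type: "ReviewAndFeedbackResponseMap")
```

The point of the sketch is the separation of roles: adding a report means adding one branch in the controller and one view, while the model is left untouched.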

Review and Author Feedback Report

Workflow

Mockups

- When the instructor selects the review and author feedback report, the screen shown below is displayed.

Implementation Thoughts

This report integrates two preexisting reports, "Review" and "Author Feedback". We will therefore reuse many existing components and add a few new ones.

- A new option called "Review and Author Feedback Report" will be added to the dropdown.

- On submitting this form, the user is redirected to action "response_report" in controller "ReviewMappingController".

- We will add a new case to this action to handle the new report type.

- We will require data from the ReviewResponseMap, FeedbackResponseMap, and AssignmentParticipant models.

- From ReviewResponseMap and AssignmentParticipant, we will get, for each reviewer, the number of reviews completed, the length of the reviews, a summary of the reviews, and whether the reviewer attached a file or link to a review.

- From FeedbackResponseMap, we will get the author feedback summary and the total score that each author gave to a particular reviewer.

- All the above data will be rendered using a new partial "_response_and_feedback.html.erb" in "/app/views/review_mapping". This partial is called from "response_report.html.haml".

- The data will be shown in a table, in a format similar to the existing Review and Author Feedback reports.

- Hyperlinks will be provided where necessary so that the instructor can view additional details, e.g. the review summary or the author feedback summary.
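The aggregation described in the steps above could look roughly like the following plain-Ruby sketch. The `Review` and `Feedback` structs and `build_report_rows` are hypothetical stand-ins for the ReviewResponseMap and FeedbackResponseMap data. Measuring review length in words and averaging feedback across all of a reviewer's teams are assumptions made for the example.

```ruby
# Hypothetical plain-Ruby stand-ins; the real data lives in ActiveRecord models.
Review = Struct.new(:reviewer, :team, :comments, :has_link_or_file)
Feedback = Struct.new(:reviewer, :team, :score)

# Builds one report row per reviewer from review and author feedback records.
def build_report_rows(reviews, feedbacks)
  reviews.group_by(&:reviewer).map do |reviewer, own_reviews|
    scores = feedbacks.select { |f| f.reviewer == reviewer }.map(&:score)
    lengths = own_reviews.map { |r| r.comments.split.size } # length in words (assumption)
    {
      reviewer: reviewer,
      reviews_done: own_reviews.size,
      average_length: lengths.sum.to_f / lengths.size,
      has_link_or_file: own_reviews.any?(&:has_link_or_file),
      # nil when no author has given feedback to this reviewer yet
      average_feedback: scores.empty? ? nil : scores.sum.to_f / scores.size
    }
  end
end

reviews = [
  Review.new("alice", "team1", "Clear design and good tests", true),
  Review.new("alice", "team2", "Needs more comments", false),
  Review.new("bob", "team1", "Looks fine", false)
]
feedbacks = [
  Feedback.new("alice", "team1", 4),
  Feedback.new("alice", "team2", 5)
]

rows = build_report_rows(reviews, feedbacks)
```

Each hash in `rows` corresponds to one table row that a partial such as "_response_and_feedback.html.erb" could render.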

Implementation

Pull Request and Demo

Class Performance Report

Workflow

Mockups

- The instructor can view the class performance on assignments by clicking on the graph icon on the assignments page as shown below.

- Clicking the graph icon takes the instructor to the page shown below, where the instructor can select the rubric questions used for evaluation of that assignment.

- Once the rubric questions have been selected, the instructor is taken to the page shown below, which shows the performance of the class on the selected rubric questions.

- The instructor can select round 1 or round 2 from the drop-down menu.

Implementation Thoughts

In order to implement the above functionality for the class performance report, our initial observation resulted in the following path for implementation.

- We will need a new controller ClassPerformanceController.

- We will need the following two new views.

- A select_rubrics view. This view will allow instructors to select a few rubrics on which to evaluate the class performance.

- A show_class_performance view. This view will display the class performance using graphs that represent the information clearly.

- Routes for each of the views created.

- Model methods to facilitate getting the required information and calculations.

We need to provide a link for the instructor to reach this view. As shown above, this will be a button on the assignment management page at tree_display/list, which corresponds to the list action in TreeDisplayController. The button routes to the newly created select_rubrics view.

The select_rubrics view will receive the following parameters.

assignment_id

The controller will get all the questionnaires related to that assignment from the AssignmentQuestionnaire model. It will then get a list of all the rubrics used in those questionnaires from the Questions model. These will be displayed to the instructor. It will then route the instructor to the show_class_performance view upon selection of rubrics. It will pass the following parameters to the show_class_performance view.

assignment_id

Array[question_id]
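The lookup from assignment to questionnaires to rubric questions can be sketched as below. The structs stand in for rows of the AssignmentQuestionnaire and question tables, and `rubric_questions_for` and `performance_params` are hypothetical helper names. In the application this would be done with ActiveRecord queries rather than array filtering.

```ruby
# Hypothetical stand-ins for rows of the assignment-questionnaire and question tables.
AssignmentQuestionnaireRow = Struct.new(:assignment_id, :questionnaire_id)
QuestionRow = Struct.new(:id, :questionnaire_id, :text)

# Returns the questions of every questionnaire attached to the given assignment.
def rubric_questions_for(assignment_id, assignment_questionnaires, questions)
  questionnaire_ids = assignment_questionnaires
                      .select { |aq| aq.assignment_id == assignment_id }
                      .map(&:questionnaire_id)
  questions.select { |q| questionnaire_ids.include?(q.questionnaire_id) }
end

# Builds the parameters passed on to show_class_performance from the instructor's selection.
def performance_params(assignment_id, selected_questions)
  { assignment_id: assignment_id, question_ids: selected_questions.map(&:id) }
end

assignment_questionnaires = [
  AssignmentQuestionnaireRow.new(7, 10),
  AssignmentQuestionnaireRow.new(7, 11),
  AssignmentQuestionnaireRow.new(8, 12)
]
questions = [
  QuestionRow.new(1, 10, "Is the design clear?"),
  QuestionRow.new(2, 11, "Are tests included?"),
  QuestionRow.new(3, 12, "Belongs to another assignment")
]

available = rubric_questions_for(7, assignment_questionnaires, questions)
params_for_graph = performance_params(7, available)
```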

The controller will take the list of questions for the assignment and find all the answers for those questions. It will then calculate the average score per question for the entire class from those answers and pass this to the view. The show_class_performance view will use the Gruff API in order to provide an aesthetically appealing visualization of the data.
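The averaging step can be illustrated with the following sketch. `AnswerRow` and `average_scores` are hypothetical names, and skipping answers without a score and rounding to two decimals are assumptions rather than documented behavior.

```ruby
# Hypothetical stand-in for an answer record: one score for one question.
AnswerRow = Struct.new(:question_id, :score)

# Returns { question_id => class average } for the selected questions only.
# Answers with a nil score are ignored; a question with no scores maps to nil.
def average_scores(answers, question_ids)
  question_ids.each_with_object({}) do |question_id, averages|
    scores = answers.select { |a| a.question_id == question_id }.map(&:score).compact
    averages[question_id] = scores.empty? ? nil : (scores.sum.to_f / scores.size).round(2)
  end
end

answers = [
  AnswerRow.new(1, 4), AnswerRow.new(1, 5),
  AnswerRow.new(2, 3), AnswerRow.new(2, nil),
  AnswerRow.new(3, 2)
]

averages = average_scores(answers, [1, 2])
```

The resulting hash has one entry per selected question, which is the shape a bar chart needs: one label and one value per bar.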

Implementation

Pull Request and Demo

The demo for the class performance report can be seen here: https://youtu.be/_fp4YYCgY2w The pull request is here:

Use Cases

- View Review and Author Feedback Report as Instructor: The instructor can see the different review metrics and the average feedback rating received per student for an assignment or a project.

- View Class Performance as Instructor: The instructor can select 5 rubric metrics used for the assignment and view a graph of the class performance based on the selected metrics.

- RSpec: RSpec is a behavior-driven development (BDD) framework used for testing the functionality of the system.

- Capybara: We will use Capybara together with RSpec to automate test cases that would otherwise be run manually.

Test Plan

- For use case 1, test if the instructor can see the text metrics of reviews and the author feedback received per student for an assignment or a project.

- For use case 2, test if the instructor can select 5 rubric metrics used for an assignment. Also, test if the instructor can view the class performance from a graph using the metrics selected by the instructor.

Requirements

While the software and hardware requirements are the same as for the current Expertiza system, we will require the following additional tools:

- Tools: Gruff API in addition to the current Expertiza system.

References

<references/>