CSC/ECE 517 Fall 2014/ch1a 20 rn

Scalability in Web Applications

This page starts with an introduction to scalability and its importance in web applications. It then explains good programming practices and the aspects to consider in order to make a web application more scalable. It ends with a brief description of how to achieve scalability in Rails applications.

Introduction

Scalability is the ability of a system, network or process to handle an increase in load in a capable manner or its ability to expand to accommodate this increase. In a typical scenario, it can be understood as the ability of a system to perform extra work by the addition of resources (mostly hardware). It is generally difficult to define scalability precisely and in any particular case it is necessary to define the specific requirements for scalability on those dimensions that are deemed important. It is a highly significant issue in electronics systems, databases, routers, and networking. A system whose performance improves after adding hardware, proportionally to the capacity added, is said to be a scalable system. A very good example of a scalable system would be the Domain Name System. The distributed nature of the Domain Name System allows it to scale well even when new systems are added worldwide.

Scalability in Web Applications

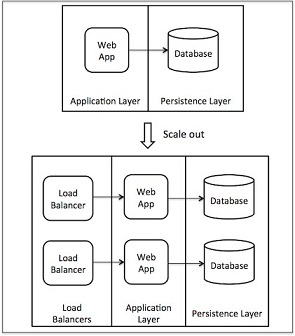

Scalability of a web application is its ability to handle an increase in the number of users or in site traffic in a timely manner. Ideally, the experience of using the web application should be the same whether the site has a single user or a million users. This kind of scalability is usually achieved by adding hardware, either by scaling up (vertical scaling) or by scaling out (horizontal scaling). A typical web application follows a multilayered architecture. The web application is considered scalable if each layer or component in its architecture is scalable. Fig 1 shows a web application which is able to scale out linearly with the addition of resources in its application layer and database layer.<ref>http://highscalability.com/blog/2014/5/12/4-architecture-issues-when-scaling-web-applications-bottlene.html</ref>

Need for scalability in web applications

In this age ruled by the internet, web applications and web sites are essential for a company’s survival. Most applications are developed and tested with a small initial load. As the application gains popularity, the number of users, i.e. the traffic to the web site, increases. The server which was able to handle the initial load might not be able to handle the new traffic. This causes the application to become unresponsive and results in a bad user experience, which can hurt the web site both economically and in reputation. It is thus important to design a web application with scalability in mind, i.e. the application should be able to scale with the addition of new hardware without affecting its usability. Many popular web applications, especially social networking sites like Facebook and Twitter, thrive because of their ability to scale for their ever-increasing user base. For example, Twitter has grown from 400,000 tweets posted per quarter in 2007<ref>http://www.telegraph.co.uk/technology/twitter/7297541/Twitter-users-send-50-million-tweets-per-day.html</ref> to 200,000,000+ monthly users and 500,000,000+ daily tweets<ref>http://www.sec.gov/Archives/edgar/data/1418091/000119312513390321/d564001ds1.htm</ref>. However, there are instances where even the most scalable architecture might not be able to handle the traffic. As an example, when American singer Michael Jackson died on June 25, 2009, Twitter servers crashed after users started updating their status at a rate of 100,000 tweets per hour<ref>http://news.bbc.co.uk/2/hi/technology/8120324.stm</ref>. Therefore, it is important for a web application to be highly scalable so that it can adjust to increases in traffic. Even if the web application is overwhelmed by a sudden spike, it should be able to recover quickly through the addition of more resources, so that downtime is minimal, if not zero.

Scalability in Rails applications

Ruby on Rails (RoR), simply referred to as Rails, is an elegant open source web application framework for creating the full stack, emphasizing the use of well-known software engineering patterns and paradigms.<ref>http://guides.rubyonrails.org/getting_started.html#what-is-rails-questionmark</ref> Many high-profile web firms use Ruby on Rails to build agile, scalable web applications. Some of the largest sites running Ruby on Rails include GitHub, Yammer, Scribd, Shopify, Hulu, and Basecamp.<ref>http://articles.businessinsider.com/2011-05-11/tech/30035869_1_ruby-rails-custom-software</ref> As of May 2014, it is estimated that more than 600,000 web sites are running Ruby on Rails.<ref>http://trends.builtwith.com/framework/Ruby-on-Rails</ref>

When Twitter had some significant downtime in early 2008, a widespread myth started about Rails: ‘Rails doesn’t scale’<ref>http://techcrunch.com/2008/05/01/twitter-said-to-be-abandoning-ruby-on-rails</ref><ref>http://canrailsscale.com/</ref>. However, when Twitter’s lead architect, Blaine Cook, held Ruby blameless<ref>http://highscalability.com/scaling-twitter-making-twitter-10000-percent-faster</ref>, the developer community came to the general conclusion that it is not the language or the framework that is the bottleneck to scaling, but the architecture. Rails therefore does scale well, provided that the application has been architected to scale well.

The following sections describe practices which help in the development of scalable Rails applications, although most of them apply to web applications in general.

Optimizing the application

Although Ruby has limitations, such as the lack of true support for multithreading, many scaling issues can be solved by optimizing the way certain operations are performed.

Scaling Reads by Precomputing<ref>http://devblog.moz.com/2011/09/scaling-the-f-out-of-a-rails-app/</ref>

Because Rails applications are often developed quickly, developers tend to overlook operations which will take a long time to compute as the data grows. A typical example is an aggregate view generated from past usage. At some point, calculating the aggregate on demand takes so long that UI controls such as graphs become slow to render. To solve such issues, it is a good idea to precompute the values, at a frequency that depends on the input volume, and store the results. Rendering is then reduced to displaying the stored data or doing a minimal calculation, so the end user does not have to wait long for results.
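As a minimal sketch of the idea, the plain-Ruby example below precomputes per-day view counts once and serves them from a lookup table at render time. The names `PageView` and `DailyViewSummary` are hypothetical and chosen only for illustration. In a real Rails application the summary would more likely live in its own table and be rebuilt by a scheduled job.

```ruby
require "date"

# Hypothetical raw usage record; in a real app this would be a database row.
PageView = Struct.new(:page, :viewed_on)

# Hypothetical store for precomputed aggregates.
class DailyViewSummary
  def initialize
    @counts = Hash.new(0) # [page, date] => precomputed view count
  end

  # Expensive pass over all raw data; meant to run periodically,
  # not once per request.
  def rebuild(page_views)
    @counts = Hash.new(0)
    page_views.each { |pv| @counts[[pv.page, pv.viewed_on]] += 1 }
    self
  end

  # Cheap lookup used at render time.
  def views_for(page, date)
    @counts[[page, date]]
  end
end

today = Date.new(2014, 9, 1)
raw_views = [
  PageView.new("/home", today),
  PageView.new("/home", today),
  PageView.new("/about", today),
  PageView.new("/home", today + 1)
]

summary = DailyViewSummary.new.rebuild(raw_views)
puts summary.views_for("/home", today) # => 2
```

How often `rebuild` runs is the trade-off mentioned above: a higher input volume calls for more frequent runs, at the cost of more background work.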

Cache expensive operations

Sometimes it is not possible to precompute every possible set of operations. However, if such operations are going to be used frequently within a short period of time, it is a good idea to cache the first result so that subsequent requests can be served faster. A typical example<ref>https://speakerd.s3.amazonaws.com/presentations/50044249a555b00002037c2c/Fast.pdf</ref> would look like:

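Caching in Rails.rb (Slower first time)

    # First call: the cache is empty, so the block runs the expensive query
    Benchmark.measure do
      Rails.cache.fetch("cache_key") do
        User.some_expensive_query
      end
    end.real
    # => 20.5s

Caching in Rails 2.rb (Super fast after that)

    # Later calls: the value is read from the cache and the block is skipped
    Benchmark.measure do
      Rails.cache.fetch("cache_key") do
        User.some_expensive_query
      end
    end.real
    # => 0.00001s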
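The difference between the two timings comes from the fetch-or-compute pattern: on a miss the block runs and its result is stored, and on a hit the block is skipped. The sketch below imitates that behaviour with a hypothetical `TinyCache` class in plain Ruby. It is an illustrative stand-in, not the Rails cache store, and it ignores concerns such as expiry and shared storage.

```ruby
# Illustrative stand-in for a cache store; not the Rails implementation.
class TinyCache
  def initialize
    @store = {}
  end

  # Returns the cached value for key. On a miss, runs the block,
  # stores its result, and returns it.
  def fetch(key)
    return @store[key] if @store.key?(key)
    @store[key] = yield
  end
end

cache = TinyCache.new
calls = 0
expensive = lambda do
  calls += 1              # count how often the "query" really runs
  (1..1_000).reduce(:+)   # placeholder for an expensive computation
end

first  = cache.fetch("cache_key") { expensive.call } # miss: block runs
second = cache.fetch("cache_key") { expensive.call } # hit: block skipped
puts calls
```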

Make the database queries faster<ref>http://rakeroutes.com/blog/increase-rails-performance-with-database-indexes/</ref>

Database access is a significant factor affecting the throughput of a web application. Some of the common methods to make database I/O faster are:

- Indexing

Database read performance can improve significantly with the addition of database indexes. Indexes are helpful when a search has to be made on an attribute other than id. For example, a query on the products table,

    Product.where(:name => 'shampoo')

would have to look at every row of the table to check whether the name of the product is shampoo. This unnecessarily expensive operation can be sped up by adding an index, as below:

    class AddNameIndexToProducts < ActiveRecord::Migration
      def change
        # Index products.name so lookups by name avoid a full table scan
        add_index :products, :name
      end
    end

This eliminates the need to look up every row, as the references to all products with the name ‘shampoo’ are stored together and can be accessed quickly. Another common use of indexes is in join statements, i.e. when the design has belongs_to and has_many relationships. Rails does not create indexes for foreign keys by default. Creating an index on the foreign key column, similar to the one shown above, results in a significant improvement for join queries.

In Rails 3.2, the automated explain plan<ref>http://weblog.rubyonrails.org/2011/12/6/what-s-new-in-edge-rails-explain</ref> was introduced, which writes warnings to the application logs when a query takes longer than 0.5s (by default). It is a useful tool for analyzing query performance and can give important pointers to where indexes should be added.

In most web applications, the percentage of reads is significantly higher than that of writes, so they benefit considerably from indexes. However, if a particular table mostly receives writes, indexing it is not a good idea, because the index causes extra work when inserting and updating entries.
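The trade-off between faster reads and extra work on writes can be illustrated with a small plain-Ruby analogy. This is only a sketch: the `Product` struct and helper methods are hypothetical, and a Hash stands in for the index, whereas real databases typically use other structures such as B-trees.

```ruby
Product = Struct.new(:id, :name)

products = [
  Product.new(1, "shampoo"),
  Product.new(2, "soap"),
  Product.new(3, "shampoo"),
  Product.new(4, "towel")
]

# Without an index: every row is examined (a full table scan).
def scan_by_name(rows, name)
  rows.select { |row| row.name == name }
end

# "Index": name => rows with that name, built once up front.
name_index = products.group_by(&:name)

# Every insert must now update the index as well,
# which is the extra write cost described above.
def insert(rows, index, product)
  rows << product
  (index[product.name] ||= []) << product
end

insert(products, name_index, Product.new(5, "soap"))

puts scan_by_name(products, "shampoo").map(&:id).inspect    # scan result
puts name_index.fetch("shampoo", []).map(&:id).inspect      # index lookup
```

Both lookups return the same rows, but the indexed one goes straight to the matching entries instead of visiting every product.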

- Eliminating n+1 queries

n+1 queries are solved by using eager loading. Consider the following example<ref>http://guides.rubyonrails.org/active_record_querying.html</ref>:

    users = User.limit(20)

    users.each do |user|
      puts user.address.city
    end

Assuming users and addresses are stored in different tables, the above statements result in 21 queries: one to retrieve the 20 users and then twenty more to get the city of each user. This is known as the n+1 problem. It can be mitigated by using eager loading. In this case, the addresses can be loaded along with the users with a statement like this:

    users = User.includes(:address).limit(20)

    users.each do |user|
      puts user.address.city
    end

This code requires only 2 queries: one to get the users and one to get their associated addresses. This is known as eager loading, where the data is loaded in advance. However, it should be used only when it is actually required. Unused eager loading can burden the I/O bandwidth by retrieving unnecessary data. Gems like Bullet can be used to analyze database queries and detect both n+1 queries and unused eager loading.
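The query counts above can be reproduced without a database. The sketch below uses a hypothetical in-memory `FakeDb` class that simply counts how many "queries" it receives. It is not how Active Record is implemented, only an illustration of why loading the addresses in one batch brings the total down from 21 to 2.

```ruby
# FakeDb is a hypothetical in-memory stand-in that counts queries.
class FakeDb
  attr_reader :query_count

  def initialize(users, addresses)
    @users = users           # array of { id:, name: }
    @addresses = addresses   # array of { user_id:, city: }
    @query_count = 0
  end

  # One query: fetch up to `limit` users.
  def users(limit)
    @query_count += 1
    @users.first(limit)
  end

  # One query per call: fetch the address of a single user.
  def address_for(user_id)
    @query_count += 1
    @addresses.find { |a| a[:user_id] == user_id }
  end

  # One query: fetch the addresses of many users at once.
  def addresses_for(user_ids)
    @query_count += 1
    @addresses.select { |a| user_ids.include?(a[:user_id]) }
  end
end

def build_db
  users = (1..20).map { |i| { id: i, name: "user#{i}" } }
  addresses = (1..20).map { |i| { user_id: i, city: "city#{i}" } }
  FakeDb.new(users, addresses)
end

# Lazy loading: one query for the users plus one per user.
lazy_db = build_db
lazy_cities = lazy_db.users(20).map { |u| lazy_db.address_for(u[:id])[:city] }

# Eager loading: one query for the users plus one for all their addresses.
eager_db = build_db
users = eager_db.users(20)
by_user = eager_db.addresses_for(users.map { |u| u[:id] })
                  .each_with_object({}) { |a, h| h[a[:user_id]] = a }
eager_cities = users.map { |u| by_user[u[:id]][:city] }

puts lazy_db.query_count   # 21
puts eager_db.query_count  # 2
```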

Architecture

Irrespective of the efficiency of the code, as the application becomes popular the hardware eventually reaches the maximum load it can take. It is thus essential to design applications in a way that lets them take advantage of additional resources. The most essential step in supporting this requirement is to write applications in a modularized way. Thus, a typical Rails application should have the following three parts as separate components:

- Web: The front end, or web module, runs the Ruby code. Its sole purpose is to handle requests and render the UI that displays information to the user.

- Worker: The worker module performs compute-intensive operations and other background jobs, such as sending emails. A common tool for this is Resque, a Redis-backed Ruby library for creating background jobs, placing them on multiple queues, and processing them later.

- Datastores: Datastores persist the data. Typically databases, they should run on separate machines.
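The split between the web and worker components can be sketched with Ruby's standard `Queue` and `Thread` classes. This is an assumption-laden illustration, not Resque's API: the in-process queue stands in for a Redis-backed one, the thread stands in for a separate worker machine, and `handle_signup` is a hypothetical request handler.

```ruby
require "thread"

jobs = Queue.new   # stands in for a Redis-backed job queue
sent = Queue.new   # thread-safe record of completed work

# Worker: runs separately from request handling and drains the queue.
worker = Thread.new do
  while (job = jobs.pop) != :stop
    sent << "email to #{job[:to]}" # pretend to send the email
  end
end

# Web side: enqueue the job and return immediately
# instead of doing the slow work inside the request.
def handle_signup(jobs, email)
  jobs << { to: email }
  "signup accepted"
end

response = handle_signup(jobs, "alice@example.com")
handle_signup(jobs, "bob@example.com")

jobs << :stop # tell the worker to finish once the queue is drained
worker.join

puts response
puts sent.size
```

Because the web side only enqueues work, more workers can be added later without changing the request-handling code.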

A modularized architecture like this can be scaled in two ways:

1) Scale Up: Scaling up refers to replacing the current hardware with better-performing, more advanced hardware. Scaling up is relatively easy, but there is a limit to how far a single machine can be upgraded. Fig 2 shows a representation of scaling up resources.

2) Scale Out: Scaling out refers to increasing the number of similar machines and distributing the load across them. Scaling out is harder, but capacity can keep growing as more machines are added. Fig 3 shows a representation of scaling out resources.

Application Deployment

At a future stage, when the user base grows beyond a certain threshold, it is a good idea to add load balancers to distribute the incoming requests. A very popular deployment model is the use of Unicorn Application Servers and Nginx Load Balancers.<ref>https://www.digitalocean.com/community/tutorials/how-to-scale-ruby-on-rails-applications-across-multiple-droplets-part-1</ref>

Unicorn Application Server<ref>https://github.com/blog/517-unicorn</ref>

Unicorn is an application server which runs Rails applications to process incoming requests. These application servers handle only the requests that need processing, after they have been filtered and pre-processed by the front-facing Nginx servers. Unicorn is simple by design: it handles what a web application needs to do and delegates the remaining responsibilities to the operating system.

Unicorn's master process spawns worker processes to serve the requests. The master process also monitors the workers in order to prevent memory- and process-related issues.

Nginx HTTP Server / Reverse-Proxy / Load-Balancer<ref>http://nginx.org/en/docs/http/load_balancing.html</ref>

Nginx HTTP server is a multi-purpose, front-facing web server. It acts as the first entry point for requests and is capable of balancing connections and dealing with certain exploit attempts. It filters out the invalid requests and forwards the valid requests to the Unicorn Application servers to be processed. Together, they serve well to make Rails applications scalable.
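To illustrate how a load balancer can spread requests over several application servers, the sketch below implements plain round-robin selection in Ruby. The class `RoundRobinBalancer` and the backend names are hypothetical. Round-robin is the default method described in the Nginx load balancing documentation, but this code is only a simplified model, not how Nginx itself is implemented.

```ruby
# Hypothetical model of round-robin request distribution.
class RoundRobinBalancer
  def initialize(backends)
    @backends = backends
    @next = 0
  end

  # Pick the next backend in order, wrapping around at the end.
  def pick
    backend = @backends[@next]
    @next = (@next + 1) % @backends.size
    backend
  end
end

balancer = RoundRobinBalancer.new(%w[unicorn-1 unicorn-2 unicorn-3])

# Simulate seven incoming requests.
assignments = Array.new(7) { balancer.pick }
puts assignments.inspect
```

Adding another application server to the list is all it takes for new requests to reach it, which is what makes this deployment model suitable for scaling out.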

References

<references/>