CSC/ECE 517 Fall 2010/ch3 3f ac

ORM for Ruby

Introduction

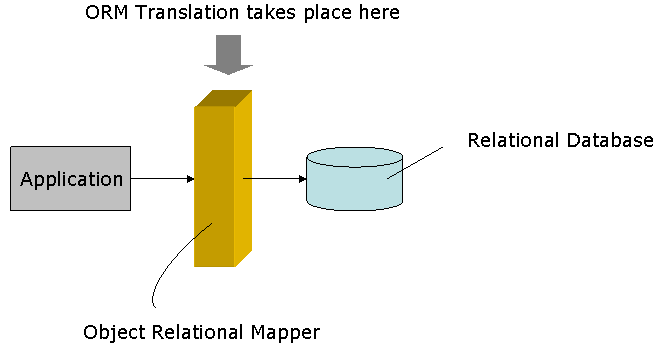

Object-relational mapping (ORM) is a programming technique for converting data between the incompatible type systems of relational databases and object-oriented programming languages. This creates, in effect, a "virtual object database" that can be used from within the programming language. Both free and commercial ORM packages are available, although some programmers opt to create their own ORM tools.
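To make the idea concrete, here is a minimal hand-rolled sketch of the conversion an ORM automates. The Item class and the row layout are hypothetical and only illustrate the two directions of the mapping (row to object, object to row); a real ORM also generates the SQL and manages connections.

```ruby
# Hypothetical example: Item and its columns are made up for illustration.
class Item
  attr_accessor :id, :name, :price

  # Build an object from a row (column name => value).
  def self.from_row(row)
    item = new
    row.each { |column, value| item.public_send("#{column}=", value) }
    item
  end

  # Turn the object back into a row that could be written to a table.
  def to_row
    { "id" => id, "name" => name, "price" => price }
  end
end

row = { "id" => 1, "name" => "Widget", "price" => 9.99 }
item = Item.from_row(row)

puts item.name          # Widget
puts item.to_row == row # true
```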

Advantages of ORM

- ORM often reduces the amount of code that needs to be written.

- Facilitates implementing the Domain Model pattern.

- Changes to the object model are made in one place.

- Rich query capability. ORM tools provide an object oriented query language.

- Navigation. You can navigate object relationships transparently. Related objects are automatically loaded as needed.

- Data loads are completely configurable allowing you to load the data appropriate for each scenario.

- Concurrency support. Support for multiple users updating the same data simultaneously.

- Cache management. Entities are cached in memory, thereby reducing load on the database.

- Transaction management and Isolation.

- Key management. Identifiers and keys are automatically propagated and managed.

Disadvantages

Most O/R mapping tools do not perform well during bulk deletions of data or when executing joins, whether complex or even simple. Stored procedures may perform better, but they are not portable across databases. In addition, heavy reliance on ORM software has been cited as a major factor in producing poorly designed databases.

List of ORM software for Ruby

- ActiveRecord, part of Ruby on Rails, open source

- Datamapper, MIT license

- iBATIS, free open source

- Sequel, free open source

- Merb, a web framework rather than an ORM itself, commonly used together with DataMapper

ActiveRecord

Active Record connects business objects and database tables to create a persistable domain model in which logic and data are presented in one wrapping. It follows the Active Record pattern: an object that wraps a row in a database table or view, encapsulates the database access, and adds domain logic on that data.

Active Record’s main contribution to the pattern is to relieve the original of two stunting problems: lack of associations and lack of inheritance. It adds a simple, domain-language-like set of macros to describe associations and integrates the Single Table Inheritance pattern for inheritance, which narrows the gap in functionality between the data mapper and active record approaches. The Ruby library thus implements object-relational mapping while extending what the plain active record pattern offers for database interaction.

ActiveRecord is the default model component of the Model-view-controller web-application framework Ruby on Rails, and is also a stand-alone ORM package for other Ruby applications. In both forms, it was conceived of by David Heinemeier Hansson, and has been improved upon by a number of contributors. Other, less popular ORMs have been released since ActiveRecord first took the stage. Gems like DataMapper and Sequel show major improvements over the original ActiveRecord framework. As a response to their release and adoption by the Rails community, Ruby on Rails v3.0 will be independent of an ORM system, so Rails users can easily plug in DataMapper or Sequel to use as their ORM of choice. Some of the major features are given below.

Automated mapping between classes and tables, attributes and columns

Active Record objects don’t specify their attributes directly. They infer them from the definition of the table to which they’re linked. Adding, removing, and changing attributes and their types is done directly in the database, and any change is instantly reflected in the Active Record objects. The mapping that binds a given Active Record class to a certain database table happens automatically in most common cases, but can be overridden for the uncommon ones.

class Product < ActiveRecord::Base; end

...is automatically mapped to the table named "products", such as:

CREATE TABLE products (

  id int(11) NOT NULL auto_increment,

  name varchar(255),

  PRIMARY KEY (id)

);

...which again gives Product#name and Product#name=(new_name)
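The inference described above can be sketched in plain Ruby. This is only an illustration under stated assumptions, not Active Record's implementation: SCHEMA stands in for what a real ORM would read from the database, TinyRecord is a hypothetical base class, and the "append an s" pluralization is far simpler than the Rails inflector.

```ruby
# Hypothetical stand-in for the column list a real ORM reads from the database.
SCHEMA = { "products" => %w[id name] }

class TinyRecord
  # Naive assumption: table name is the lowercased class name plus "s".
  def self.table_name
    name.downcase + "s"
  end

  def self.columns
    SCHEMA.fetch(table_name)
  end

  def initialize(attributes = {})
    @attributes = {}
    self.class.columns.each do |column|
      # One reader and one writer per column, derived from the schema.
      define_singleton_method(column) { @attributes[column] }
      define_singleton_method("#{column}=") { |value| @attributes[column] = value }
    end
    attributes.each { |key, value| public_send("#{key}=", value) }
  end
end

class Product < TinyRecord; end

product = Product.new("name" => "Widget")
product.name = "Gadget"

puts Product.table_name # products
puts product.name       # Gadget
```

Adding a column to SCHEMA would give every new Product another accessor without touching the class, which is the effect the paragraph above describes.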

Associations between objects controlled by simple meta-programming macros

Associations are a set of macro-like class methods for tying objects together through foreign keys. They express relationships like Project has one Project Manager or Project belongs to a Portfolio. Each macro adds a number of methods to the class which are specialized according to the collection or association symbol and the options hash. It works much the same way as Ruby’s attr* methods. Example:

class Project < ActiveRecord::Base

belongs_to :portfolio

has_one :project_manager

has_many :milestones

has_and_belongs_to_many :categories

end
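How a macro "adds a number of methods to the class" can be shown with a small plain-Ruby sketch. Model, STORE, and the macro signatures below are hypothetical and deliberately simplified (the real Active Record macros take a symbol plus an options hash and talk to a database); the point is only that a class method can define instance methods that follow foreign keys.

```ruby
# Hypothetical in-memory "database": one array of records per class.
STORE = Hash.new { |hash, klass| hash[klass] = [] }

class Model
  attr_reader :attributes

  def initialize(attributes = {})
    @attributes = attributes
    STORE[self.class] << self
  end

  # belongs_to :portfolio defines #portfolio, resolved through :portfolio_id.
  def self.belongs_to(name)
    define_method(name) do
      target = Object.const_get(name.to_s.capitalize)
      STORE[target].find { |record| record.attributes[:id] == attributes[:"#{name}_id"] }
    end
  end

  # has_many :projects, "Project", :portfolio_id defines #projects.
  def self.has_many(name, class_name, foreign_key)
    define_method(name) do
      STORE[Object.const_get(class_name)].select do |record|
        record.attributes[foreign_key] == attributes[:id]
      end
    end
  end
end

class Portfolio < Model
  has_many :projects, "Project", :portfolio_id
end

class Project < Model
  belongs_to :portfolio
end

portfolio = Portfolio.new(:id => 1, :name => "Research")
project = Project.new(:id => 10, :portfolio_id => 1)

puts project.portfolio.equal?(portfolio) # true
puts portfolio.projects.length           # 1
```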

Aggregations of value objects controlled by simple meta-programming macros

Active Record implements aggregation through a macro-like class method called composed_of for representing attributes as value objects. It expresses relationships like "Account [is] composed of Money [among other things]" or "Person [is] composed of [an] address". Each call to the macro adds a description of how the value objects are created from the attributes of the entity object (when the entity is initialized either as a new object or from finding an existing object) and how it can be turned back into attributes (when the entity is saved to the database). Example:

class Customer < ActiveRecord::Base

composed_of :balance, :class_name => "Money", :mapping => %w(balance amount)

composed_of :address, :mapping => [ %w(address_street street), %w(address_city city) ]

end
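The aggregation idea, an attribute exposed as a value object and mapped back when saved, can be sketched without the macro. The classes below are illustrative only (BankCustomer and the balance_amount column are assumptions); composed_of generates comparable reader and writer methods for you.

```ruby
# Money is a small value object; two Money objects with the same amount are equal.
Money = Struct.new(:amount) do
  def +(other)
    Money.new(amount + other.amount)
  end
end

class BankCustomer
  attr_accessor :balance_amount # the raw column value (hypothetical name)

  def initialize(balance_amount)
    @balance_amount = balance_amount
  end

  # Reader: wrap the raw attribute in a value object.
  def balance
    Money.new(balance_amount)
  end

  # Writer: map the value object back onto the raw attribute.
  def balance=(money)
    self.balance_amount = money.amount
  end
end

customer = BankCustomer.new(50)
customer.balance += Money.new(25)

puts customer.balance_amount # 75
```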

Validation rules that can differ for new or existing objects

Active Record models implement validation either by overriding Base#validate (or its variations, validate_on_create and validate_on_update) or by declaring validation macros such as validates_presence_of, as in the example that follows. Each approach inspects the state of the object, which usually means ensuring that a number of attributes have a certain value (such as not empty, within a given range, or matching a certain regular expression). Example:

class Account < ActiveRecord::Base

  validates_presence_of :subdomain, :name, :email_address, :password

  validates_uniqueness_of :subdomain

  validates_acceptance_of :terms_of_service, :on => :create

  validates_confirmation_of :password, :email_address, :on => :create

end
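A declarative validation macro of this kind can be approximated in a few lines of plain Ruby. The sketch below is an assumption-laden illustration (Signup, required_fields, and the error message format are made up), not the way Active Record stores or reports validation errors.

```ruby
class Signup
  attr_accessor :subdomain, :name

  # Per-class list of attributes that must be present.
  def self.required_fields
    @required_fields ||= []
  end

  # Class-level macro: records the attribute names at class definition time.
  def self.validates_presence_of(*fields)
    required_fields.concat(fields)
  end

  validates_presence_of :subdomain, :name

  # Collect one message per required attribute that is nil or blank.
  def errors
    missing = self.class.required_fields.select do |field|
      public_send(field).to_s.strip.empty?
    end
    missing.map { |field| "#{field} can't be blank" }
  end

  def valid?
    errors.empty?
  end
end

signup = Signup.new
signup.name = "Acme"

puts signup.valid?       # false
puts signup.errors.first # subdomain can't be blank
```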

Callbacks as methods or queues on the entire lifecycle (instantiation, saving, destroying, validating, etc)

Callbacks are hooks into the lifecycle of an Active Record object that allow you to trigger logic before or after an alteration of the object state. This can be used to make sure that associated and dependent objects are deleted when destroy is called (by overriding before_destroy) or to massage attributes before they’re validated (by overriding before_validation).

class Person < ActiveRecord::Base

def before_destroy # is called just before Person#destroy

CreditCard.find(credit_card_id).destroy

end

end

class Account < ActiveRecord::Base

after_find :eager_load, 'self.class.announce(#{id})'

end
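The mechanism behind such lifecycle hooks can be sketched as a list of method names that the base class runs before performing the operation. Record and Cardholder are hypothetical classes and nothing is actually deleted here; the sketch only shows the order of events.

```ruby
class Record
  # Per-class list of methods to run before #destroy.
  def self.before_destroy_hooks
    @before_destroy_hooks ||= []
  end

  # Macro form: register a method name as a before_destroy callback.
  def self.before_destroy(method_name)
    before_destroy_hooks << method_name
  end

  def destroy
    self.class.before_destroy_hooks.each { |hook| send(hook) }
    @destroyed = true # a real ORM would issue the DELETE here
  end

  def destroyed?
    @destroyed == true
  end
end

class Cardholder < Record
  attr_reader :log

  before_destroy :remove_credit_card

  def initialize
    @log = []
  end

  def remove_credit_card
    @log << "credit card removed"
  end
end

holder = Cardholder.new
holder.destroy

puts holder.log.first  # credit card removed
puts holder.destroyed? # true
```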

Observers for the entire lifecycle

Observer classes respond to lifecycle callbacks to implement trigger-like behavior outside the original class. This is a great way to reduce the clutter that normally comes when the model class is burdened with functionality that doesn’t pertain to the core responsibility of the class. Example:

class CommentObserver < ActiveRecord::Observer

def after_create(comment) # is called just after Comment#save

Notifications.deliver_new_comment("david@loudthinking.com", comment)

end

end
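The separation that observers provide can be illustrated with a hand-rolled version of the pattern. BlogComment, CommentNotifier, and the observers list are hypothetical; ActiveRecord::Observer wires this up automatically, whereas here the observer is registered by hand.

```ruby
class BlogComment
  # Objects interested in lifecycle events of this class.
  def self.observers
    @observers ||= []
  end

  attr_reader :body

  def initialize(body)
    @body = body
  end

  def save
    # A real model would persist itself here; the model knows nothing
    # about what the observers do afterwards.
    self.class.observers.each { |observer| observer.after_create(self) }
    true
  end
end

class CommentNotifier
  attr_reader :delivered

  def initialize
    @delivered = []
  end

  # Trigger-like behavior kept outside the model class.
  def after_create(comment)
    @delivered << "New comment: #{comment.body}"
  end
end

notifier = CommentNotifier.new
BlogComment.observers << notifier
BlogComment.new("Nice post").save

puts notifier.delivered.first # New comment: Nice post
```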

Inheritance hierarchies

Active Record supports inheritance hierarchies through single table inheritance: the name of the class is stored in a column that by default is named "type" (this can be changed by overriding Base.inheritance_column). Example of inheritance:

class Company < ActiveRecord::Base; end

class Firm < Company; end

class Client < Company; end

class PriorityClient < Client; end
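The role of the "type" column can be sketched in plain Ruby: when a row is loaded, the stored class name decides which subclass is instantiated. The instantiate and to_row methods below are illustrative names chosen for this sketch, and the classes are plain Ruby classes rather than Active Record models.

```ruby
class Company
  attr_reader :name

  def initialize(name)
    @name = name
  end

  # Pick the class named in the row's "type" column; fall back to the base class.
  def self.instantiate(row)
    klass = row["type"] ? Object.const_get(row["type"]) : self
    klass.new(row["name"])
  end

  # The row written back stores the class name so it can be restored later.
  def to_row
    { "type" => self.class.name, "name" => name }
  end
end

class Firm < Company; end
class Client < Company; end

firm = Company.instantiate("type" => "Firm", "name" => "Acme")

puts firm.class                       # Firm
puts Client.new("Initech").to_row["type"] # Client
```

All three classes share one table in this scheme, which is why it is called single table inheritance.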

Transactions

Transactions are protective blocks in which SQL statements become permanent only if they all succeed as one atomic action. The classic example is a transfer between two accounts, where the deposit may happen only if the withdrawal succeeded and vice versa. Transactions enforce the integrity of the database and guard the data against program errors or database breakdowns. In short, use a transaction block whenever a number of statements must be executed together or not at all. Example:

# Database transaction

Account.transaction do

  david.withdrawal(100)

  mary.deposit(100)

end
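The all-or-nothing behavior can be imitated in memory to show what the block guarantees. Ledger is a hypothetical class that snapshots its state and restores it when the block raises; a database does this with its own transaction machinery rather than by copying data.

```ruby
class Ledger
  attr_reader :balances

  def initialize(balances)
    @balances = balances
  end

  # Run the block; if it raises, restore the snapshot taken before it started.
  def transaction
    snapshot = @balances.dup
    yield
  rescue StandardError
    @balances = snapshot
    raise
  end

  def withdraw(name, amount)
    raise ArgumentError, "insufficient funds" if @balances[name] < amount
    @balances[name] -= amount
  end

  def deposit(name, amount)
    @balances[name] += amount
  end
end

ledger = Ledger.new("david" => 100, "mary" => 0)

begin
  ledger.transaction do
    ledger.deposit("mary", 150)   # succeeds on its own...
    ledger.withdraw("david", 150) # ...but this fails, so both are undone
  end
rescue ArgumentError => error
  puts "rolled back: #{error.message}"
end

puts ledger.balances["mary"]  # 0
puts ledger.balances["david"] # 100
```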

Reflections on columns, associations, and aggregations

Reflection allows you to interrogate Active Record classes and objects about their associations and aggregations. This information can be used, for example, in a form builder that takes an Active Record object, creates input fields for all of its attributes depending on their type, and displays its associations to other objects.

reflection = Firm.reflect_on_association(:clients)

reflection.klass # => Client (class)

Firm.columns # Returns an array of column descriptors for the firms table

Data Definitions

Data definitions are specified only in the database. Active Record queries the database for the column names (which then determine which attributes are valid) on regular object instantiation through the new constructor, and relies on the column names in the returned rows when using the finders.

# CREATE TABLE companies (

#   id int(11) unsigned NOT NULL auto_increment,

#   client_of int(11),

#   name varchar(255),

#   type varchar(100),

#   PRIMARY KEY (id)

# )

Active Record automatically links the "Company" object to the "companies" table

class Company < ActiveRecord::Base

has_many :people, :class_name => "Person"

end

class Firm < Company

has_many :clients

def people_with_all_clients

clients.inject([]) { |people, client| people + client.people }

end

end

The foreign_key is only necessary because we didn’t use "firm_id" in the data definition

class Client < Company

belongs_to :firm, :foreign_key => "client_of"

end

# CREATE TABLE people (

#   id int(11) unsigned NOT NULL auto_increment,

#   name text,

#   company_id text,

#   PRIMARY KEY (id)

# )

Active Record will also automatically link the "Person" object to the "people" table

class Person < ActiveRecord::Base

belongs_to :company

end

DataMapper

DataMapper is an object-relational mapper library written in Ruby and commonly used with Merb. It was developed to address perceived shortcomings in Ruby on Rails' ActiveRecord library.

Features

Some features of DataMapper:

- eager loading of child associations to avoid (N+1) queries

- lazy loading of select properties, e.g., larger fields

- query chaining, and not evaluating the query until absolutely necessary (using a lazy array implementation)

- an API not too heavily oriented to SQL databases.

DataMapper differentiates itself from other Ruby Object/Relational Mappers in a number of ways:

Same API for different datastores

DataMapper comes with the ability to use the same API to talk to a multitude of different datastores. There are adapters for the usual RDBMS suspects, NoSQL stores, various file formats and even some popular webservices.

There’s a probably incomplete list of available DataMapper adapters on the GitHub wiki, with new ones getting implemented regularly. A quick GitHub search should give you further hints on what’s currently available.

Works well with databases

With DataMapper you define your mappings in your model. Your data-store can develop independently of your models using Migrations.

To support data-stores which you don’t have the ability to manage yourself, it’s simply a matter of telling DataMapper where to look. This makes DataMapper a good choice when working with legacy databases.

class Post

  include DataMapper::Resource

  # set the storage name for the :legacy repository

  storage_names[:legacy] = 'tblPost'

  # use the datastore's 'pid' field for the id property.

  property :id, Serial, :field => :pid

  # use a property called 'uid' as the child key (the foreign key)

  belongs_to :user, :child_key => [ :uid ]

end

DataMapper only issues updates or creates for the properties it knows about. So it plays well with others. You can use it in an Integration Database without worrying that your application will be a bad actor causing trouble for all of your other processes.

DataMapper has full support for Composite Primary Keys (CPK) built in. Specifying the properties that form the primary key is easy.

class LineItem

  include DataMapper::Resource

  property :order_id, Integer, :key => true

  property :item_number, Integer, :key => true

end

If we know an order_id/item_number combination, we can retrieve the corresponding line item from the datastore.

order_id, item_number = 1, 1

LineItem.get(order_id, item_number)

# => [#<LineItem @order_id=1 @item_number=1>]

Less need for writing migrations

With DataMapper, you specify the datastore layout inside your ruby models. This allows DataMapper to create the underlying datastore schema based on the models you defined. The #auto_migrate! and #auto_upgrade! methods can be used to generate a schema in the datastore that matches your model definitions.

While #auto_migrate! destructively drops and recreates tables to match your model definitions, #auto_upgrade! supports upgrading your datastore to match your model definitions without destroying any existing data.

There are still some limitations to the operations that #auto_upgrade! can perform. We’re working hard on making it smarter, but there will always be scenarios where an automatic upgrade of your schema won’t be possible. For example, there’s no sane strategy for automatically changing a column length constraint from VARCHAR(100) to VARCHAR(50). DataMapper can’t know what it should do when the data doesn’t validate against the new tightened constraints.

In situations where neither #auto_migrate! nor #auto_upgrade! quite cut it, you can still fall back to the classic migrations feature provided by dm-migrations.

Here’s some example code that puts #auto_migrate! and #auto_upgrade! to use.

require 'rubygems'

require 'dm-core'

require 'dm-migrations'

DataMapper::Logger.new($stdout, :debug)

DataMapper.setup(:default, 'mysql://localhost/test')

class Person

  include DataMapper::Resource

  property :id, Serial

  property :name, String, :required => true

end

DataMapper.auto_migrate!

# ~ (0.015754) SET sql_auto_is_null = 0

# ~ (0.000335) SET SESSION sql_mode = 'ANSI,NO_BACKSLASH_ESCAPES,NO_DIR_IN_CREATE,NO_ENGINE_SUBSTITUTION,NO_UNSIGNED_SUBTRACTION,TRADITIONAL'

# ~ (0.283290) DROP TABLE IF EXISTS `people`

# ~ (0.029274) SHOW TABLES LIKE 'people'

# ~ (0.000103) SET sql_auto_is_null = 0

# ~ (0.000111) SET SESSION sql_mode = 'ANSI,NO_BACKSLASH_ESCAPES,NO_DIR_IN_CREATE,NO_ENGINE_SUBSTITUTION,NO_UNSIGNED_SUBTRACTION,TRADITIONAL'

# ~ (0.000932) SHOW VARIABLES LIKE 'character_set_connection'

# ~ (0.000393) SHOW VARIABLES LIKE 'collation_connection'

# ~ (0.080191) CREATE TABLE `people` (`id` INT(10) UNSIGNED NOT NULL AUTO_INCREMENT, `name` VARCHAR(50) NOT NULL, PRIMARY KEY(`id`)) ENGINE = InnoDB CHARACTER SET utf8 COLLATE utf8_general_ci

# => #<DataMapper::DescendantSet:0x101379a68 @descendants=[Person]>

class Person

  property :hobby, String

end

DataMapper.auto_upgrade!

# ~ (0.000612) SHOW TABLES LIKE 'people'

# ~ (0.000079) SET sql_auto_is_null = 0

# ~ (0.000081) SET SESSION sql_mode = 'ANSI,NO_BACKSLASH_ESCAPES,NO_DIR_IN_CREATE,NO_ENGINE_SUBSTITUTION,NO_UNSIGNED_SUBTRACTION,TRADITIONAL'

# ~ (1.794475) SHOW COLUMNS FROM `people` LIKE 'id'

# ~ (0.001412) SHOW COLUMNS FROM `people` LIKE 'name'

# ~ (0.001121) SHOW COLUMNS FROM `people` LIKE 'hobby'

# ~ (0.153989) ALTER TABLE `people` ADD COLUMN `hobby` VARCHAR(50)

# => #<DataMapper::DescendantSet:0x101379a68 @descendants=[Person]>

DataMapper provides data integrity

DataMapper makes it easy to leverage native techniques for enforcing data integrity. The dm-constraints plugin provides support for establishing true foreign key constraints in databases that support that concept.

Strategic Eager Loading

DataMapper issues only the bare minimum of queries to your data-store. For example, the following code issues only 2 queries. Notice how we don’t supply any extra :include information.

zoos = Zoo.all

zoos.each do |zoo|

  # on first iteration, DM loads up all of the exhibits for all of the items in zoos

  # in 1 query to the data-store.

  zoo.exhibits.each do |exhibit|

    # n+1 queries in other ORMs, not in DataMapper

    puts "Zoo: #{zoo.name}, Exhibit: #{exhibit.name}"

  end

end

The idea is that you aren’t going to load a set of objects and use only an association in just one of them. This should hold up pretty well against a 99% rule.

When you don’t want it to work like this, just load the item you want in its own set. So DataMapper thinks ahead. We like to call it “performant by default”. This feature single-handedly wipes out the “N+1 Query Problem”.
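The difference between per-parent loading and loading children for a whole set can be made visible by counting queries. FakeStore below is a hypothetical in-memory stand-in for a data-store, not DataMapper code; it only counts how many lookups each strategy needs (the initial query that loads the zoos themselves is left out of the count).

```ruby
class FakeStore
  attr_reader :query_count

  def initialize(exhibits)
    @exhibits = exhibits
    @query_count = 0
  end

  # One query per zoo: the pattern behind the N+1 problem.
  def exhibits_for(zoo_id)
    @query_count += 1
    @exhibits.select { |exhibit| exhibit[:zoo_id] == zoo_id }
  end

  # One query for a whole set of zoos, grouped in memory afterwards.
  def exhibits_for_all(zoo_ids)
    @query_count += 1
    matching = @exhibits.select { |exhibit| zoo_ids.include?(exhibit[:zoo_id]) }
    matching.group_by { |exhibit| exhibit[:zoo_id] }
  end
end

exhibits = [
  { :zoo_id => 1, :name => "Lions" },
  { :zoo_id => 2, :name => "Penguins" },
  { :zoo_id => 3, :name => "Otters" }
]
zoo_ids = [1, 2, 3]

naive = FakeStore.new(exhibits)
zoo_ids.each { |id| naive.exhibits_for(id) }

batched = FakeStore.new(exhibits)
by_zoo = batched.exhibits_for_all(zoo_ids)

puts naive.query_count   # 3
puts batched.query_count # 1
```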

DataMapper also waits until the very last second to actually issue the query to your data-store. For example, zoos = Zoo.all won’t run the query until you start iterating over zoos or call one of the ‘kicker’ methods like #length. If you never do anything with the results of a query, DataMapper won’t incur the latency of talking to your data-store.
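Deferred execution of this kind can be sketched with a collection that holds a loader block and runs it only on first use. LazyCollection and its loads counter are hypothetical names for this illustration; DataMapper's own lazy array implementation is more elaborate.

```ruby
class LazyCollection
  include Enumerable

  attr_reader :loads

  def initialize(&loader)
    @loader = loader
    @loads = 0
  end

  # Iterating is a "kicker": it forces the load.
  def each(&block)
    records.each(&block)
  end

  # So is asking for the length.
  def length
    records.length
  end

  private

  # Run the loader once, on first use, and remember the result.
  def records
    @records ||= begin
      @loads += 1
      @loader.call
    end
  end
end

zoos = LazyCollection.new { ["Dallas", "Galveston"] }
puts zoos.loads  # 0, nothing has been loaded yet

zoos.each { |name| puts name }
puts zoos.length # 2
puts zoos.loads  # 1, the loader ran only once
```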

Querying models by their associations

DataMapper allows you to create and search for any complex object graph simply by providing a nested hash of conditions. The following example uses a typical Customer - Order domain model to illustrate how nested conditions can be used to both create and query models by their associations.

For a complete definition of the Customer - Order domain models have a look at the Finders page.

# A hash specifying one customer with one order

#

# In general, possible keys are all property and relationship

# names that are available on the relationship's target model.

# Possible toplevel keys depend on the property and relationship

# names available in the model that receives the hash.

#

customer = {

  :name => 'Dan Kubb',

  :orders => [

    {

      :reference => 'TEST1234',

      :order_lines => [

        {

          :item => {

            :sku => 'BLUEWIDGET1',

            :unit_price => 1.00,

          },

        },

      ],

    },

  ]

}

# Create the Customer with the nested options hash

Customer.create(customer)

# => [#<Customer @id=1 @name="Dan Kubb">]

# The same options to create can also be used to query for the same object

p Customer.all(customer)

# => [#<Customer @id=1 @name="Dan Kubb">]

QueryPaths can be used to construct joins in a very declarative manner.

Starting from a root model, you can call any relationship by its name. The returned object again responds to all property and relationship names that are defined in the relationship’s target model.

This means that you can walk the chain of available relationships, and then match against a property at the end of that chain. The object returned by the last call to a property name also responds to the comparison operators (such as like, gt, and lt). This makes for some powerful join construction!

Customer.all(Customer.orders.order_lines.item.sku.like => "%BLUE%")

# => [#<Customer @id=1 @name="Dan Kubb">]

You can even chain calls to all or first to continue refining your query or search within a scope.

Identity Map

One row in the database should equal one object reference. Pretty simple idea. Pretty profound impact. If you run the following code in ActiveRecord you’ll see all false results. Do the same in DataMapper and it’s true all the way down.

@parent = Tree.first(:conditions => { :name => 'bob' })

@parent.children.each do |child|

  puts @parent.object_id == child.parent.object_id

end

This makes DataMapper faster and lets it allocate fewer resources to get things done.
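The identity map idea itself fits in a few lines: cache each loaded object under its class and key, and hand back the cached object on later requests. IdentityMap and TreeNode are hypothetical names for this sketch, and the blocks stand in for a database load.

```ruby
class IdentityMap
  def initialize
    @objects = {}
  end

  # Return the cached object for this class and id, building it only once.
  def fetch(klass, id)
    @objects[[klass, id]] ||= yield
  end
end

TreeNode = Struct.new(:id, :name)

map = IdentityMap.new
first  = map.fetch(TreeNode, 1) { TreeNode.new(1, "bob") }
second = map.fetch(TreeNode, 1) { TreeNode.new(1, "bob") }

puts first.equal?(second) # true: one row, one object reference

# Without the map, two loads of the same row give two distinct objects.
puts TreeNode.new(1, "bob").equal?(TreeNode.new(1, "bob")) # false
```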

Laziness Can Be A Virtue

Columns of potentially infinite length, like Text columns, are expensive in data-stores. They’re generally stored in a different place from the rest of your data. So instead of a fast sequential read from your hard-drive, your data-store has to hop around all over the place to get what it needs.

With DataMapper, these fields are treated like in-row associations by default, meaning they are loaded if and only if you access them. If you want more control you can enable or disable this feature for any column (not just text-fields) by passing a lazy option to your column mapping with a value of true or false.

class Animal

  include DataMapper::Resource

  property :id, Serial

  property :name, String

  property :notes, Text # lazy-loads by default

end

Plus, lazy-loading of Text properties happens automatically and intelligently when working with associations. The following only issues 2 queries to load up all of the notes fields on each animal:

animals = Animal.all

animals.each do |pet|

  pet.notes

end

Ruby Compatibility

DataMapper loves Ruby and is therefore tested regularly against all major Ruby versions. Before release, every gem is explicitly tested against MRI 1.8.7, 1.9.2, JRuby and Rubinius. We’re proud to say that almost all of our specs pass on all these different implementations.

Have a look at the testing matrix for detailed information about which gems pass or fail their specs on the various Ruby implementations.

DataMapper goes further than most Ruby ORMs in letting you avoid writing raw query fragments yourself. It provides more helpers and a unique hash-based conditions syntax to cover more of the use-cases where issuing your own SQL would have been the only way to go.

For example, any finder option that is non-standard is considered a condition. So you can write Zoo.all(:name => 'Dallas') and DataMapper will look for zoos with the name of ‘Dallas’.

It’s just a little thing, but it’s so much nicer than writing Zoo.find(:all, :conditions => [ 'name = ?', 'Dallas' ]) and won’t incur the Ruby overhead of Zoo.find_by_name('Dallas'), nor is it more difficult to understand once the number of parameters increases.

Zoo.first(:name => 'Galveston')

# 'gt' means greater-than. 'lt' is less-than.

Person.all(:age.gt => 30)

# 'gte' means greater-than-or-equal-to. 'lte' is also available

Person.all(:age.gte => 30)

Person.all(:name.not => 'bob')

# If the value of a pair is an Array, we do an IN-clause for you.

Person.all(:name.like => 'S%', :id => [ 1, 2, 3, 4, 5 ])

# Does a NOT IN () clause for you.

Person.all(:name.not => [ 'bob', 'rick', 'steve' ])

# Ordering

Person.all(:order => [ :age.desc ])

# .asc is the default
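To show roughly what a hash-based conditions syntax has to do internally, here is a small plain-Ruby translator from a conditions hash to a WHERE clause with bind values. It is a sketch under assumptions, not DataMapper's query builder: Operator, gt, like, and where_clause are hypothetical helpers, and the gt(:age) form stands in for DataMapper's :age.gt.

```ruby
# Pairs a column with a SQL comparison; stands in for keys such as :age.gt.
Operator = Struct.new(:column, :sql)

def gt(column)
  Operator.new(column, ">")
end

def like(column)
  Operator.new(column, "LIKE")
end

# Translate a conditions hash into [where_clause, bind_values].
def where_clause(conditions)
  fragments = []
  binds = []
  conditions.each do |key, value|
    column, operator = key.is_a?(Operator) ? [key.column, key.sql] : [key, "="]
    if value.is_a?(Array)
      # An Array value becomes an IN clause with one placeholder per element.
      placeholders = (["?"] * value.length).join(", ")
      fragments << "#{column} IN (#{placeholders})"
      binds.concat(value)
    else
      fragments << "#{column} #{operator} ?"
      binds << value
    end
  end
  [fragments.join(" AND "), binds]
end

sql, binds = where_clause(like(:name) => "S%", :id => [1, 2, 3])

puts sql # name LIKE ? AND id IN (?, ?, ?)
```

Keeping values as bind parameters rather than interpolating them into the SQL string is the usual way to avoid quoting problems.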

Open Development

DataMapper sports a very accessible code-base and a welcoming community. Outside contributions and feedback are welcome and encouraged, especially constructive criticism. Go ahead, fork DataMapper, we’d love to see what you come up with!

Make your voice heard! Submit a ticket or patch, speak up on our mailing-list, chat with us on irc, write a spec, get it reviewed, ask for commit rights. It’s as easy as that to become a contributor.

iBATIS

iBATIS is a persistence framework which automates the mapping between SQL databases and objects in Java, .NET, and Ruby on Rails. iBatis for Ruby (RBatis) is a port of Apache's iBatis library to Ruby and Ruby on Rails. It is an O/R-mapper that allows for complete customization of SQL. Other persistence frameworks such as Hibernate allow the creation of an object model by the user, and create and maintain the relational database automatically. iBatis takes the reverse approach: the developer starts with an SQL database and iBatis automates the creation of the objects. Both approaches have advantages, and iBatis is a good choice when the developer does not have full control over the SQL database schema. For example, an application may need to access an existing SQL database used by other software, or access a new database whose schema is not fully under the application developer's control, such as when a specialized database design team has created the schema and carefully optimized it for high performance.

Essentially, iBATIS is a very lightweight persistence solution that provides most of the semantics of an O/R mapping toolkit. In other words, iBATIS makes it easier to develop data-driven applications by abstracting the low-level details involved in database communication (loading a database driver, obtaining and managing connections, managing transaction semantics, etc.), as well as providing higher-level ORM capabilities (automated and configurable mapping of objects to SQL calls, data type conversion management, support for static queries as well as dynamic queries based upon an object's state, mapping of complex joins to complex object graphs, etc.). In its Java form, iBATIS simply maps JavaBeans to SQL statements using a very simple XML descriptor. Simplicity is the key advantage of iBATIS over other frameworks and object-relational mapping tools.

Merb

Merb, short for "Mongrel+Erb", is a model-view-controller web framework written in Ruby. Merb focuses on essential core functionality and leaves most other functionality to plugins. The Merb project started as a "clean-room" implementation of the Ruby on Rails controller stack, but grew to incorporate a number of ideas that deviated from Rails's spirit and methodology, most notably component modularity, extensible API design, and vertical scalability. The Rails/Merb merger was announced on December 23, 2008, and most of these capabilities were incorporated back into Rails as part of the Ruby on Rails 3.0 release. Like Rails, Merb can be used to write sophisticated applications and RESTful Web services. It has been suggested that Merb is more flexible and faster than Rails.

Sequel

Sequel includes a comprehensive ORM layer for mapping records to Ruby objects and handling associated records. Sequel provides thread safety, connection pooling, and a concise DSL for constructing SQL queries and table schemas. It supports advanced database features such as prepared statements, bound variables, stored procedures, savepoints, two-phase commit, transaction isolation, master/slave configurations, and database sharding. Currently it has adapters for ADO, Amalgalite, DataObjects, DB2, DBI, Firebird, Informix, JDBC, MySQL, Mysql2, ODBC, OpenBase, Oracle, PostgreSQL, SQLite3, and Swift.

Sequel Example

require "rubygems"

require "sequel"

# connect to an in-memory database

DB = Sequel.sqlite

# create an items table

DB.create_table :items do

primary_key :id

String :name

Float :price

end

# create a dataset from the items table

items = DB[:items]

# populate the table

items.insert(:name => 'abc', :price => rand * 100)

items.insert(:name => 'def', :price => rand * 100)

items.insert(:name => 'ghi', :price => rand * 100)

# print out the number of records

puts "Item count: #{items.count}"

# print out the average price

puts "The average price is: #{items.avg(:price)}"

References

- Russ Olsen, Book on Design Patterns in Ruby

- Chris Richardson, "ORM in dynamic languages", http://portal.acm.org/citation.cfm?id=1498765.1498783

- Dr Bruce Scharlau, Paper-Teaching Ruby on Rails

- http://en.wikipedia.org/wiki/Object-relational_mapping

- http://en.wikipedia.org/wiki/ActiveRecord_(Rails)#Implementations

- http://en.wikipedia.org/wiki/Datamapper

- http://sequel.rubyforge.org/

- http://ibatis.apache.org/docs/ruby/

- http://en.wikipedia.org/wiki/IBATIS

- http://en.wikipedia.org/wiki/Merb