CSC/ECE 506 Spring 2010/ch 6 PP

CACHE STRUCTURES OF MULTI-CORE ARCHITECTURES

Overview

With the advent of multicore and many-core architectures, we face a problem that is relatively new to parallel computing: the management of hierarchical parallel caches. This chapter describes some of the mainstream memory organizations in multiprocessor architectures. It also covers cache coherence and memory consistency issues, and the protocols used to handle them.

Shared Memory Multiprocessors

Scalable shared-memory multiprocessors are emerging as attractive platforms for applications with high-performance demands. What makes these machines attractive is the shared address space, which allows processors in a multiprocessor to share data the same way it is shared by multiple processes in a sequential machine. The shared-memory paradigm makes it easier to write parallel programs, but tuning the application to reduce the impact of frequent long-latency memory accesses still requires substantial programmer effort.

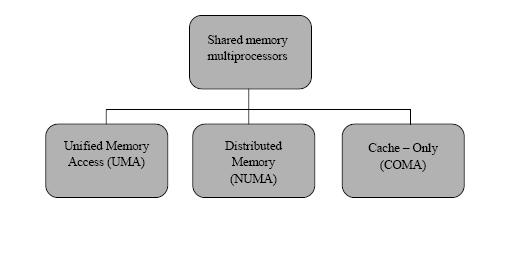

From the perspective of system architecture, current mainstream shared-memory multiprocessors fall into three categories, as shown in Figure 1:

UMA (Uniform Memory Access), NUMA (Non-Uniform Memory Access) and COMA (Cache-Only Memory Architecture).

UMA: Uniform Memory Access

All processors in the UMA model share the physical memory uniformly: access time to a memory location is independent of which processor makes the request and of which memory chip holds the data. Each processor may also use a private cache, and peripherals are shared in some fashion. The UMA model is suitable for general-purpose and time-sharing applications with multiple users. It can also be used to speed up the execution of a single large program in time-critical applications.
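The defining property of UMA can be illustrated with a toy latency model. The sketch below is only an illustration, not a description of any real machine: the function names and the 100 ns figure are hypothetical, and private caches are ignored, so it models main-memory accesses only.

```python
# Toy model of UMA: main-memory latency does not depend on which processor
# issues the request or which memory module holds the data.
# All names and numbers here are hypothetical, chosen for illustration.

UMA_LATENCY_NS = 100  # assumed constant access time, in nanoseconds


def uma_access_latency(processor_id: int, memory_module: int) -> int:
    """Return the access latency in ns; both arguments are deliberately ignored."""
    return UMA_LATENCY_NS


def is_uniform(latency_fn, num_processors: int, num_modules: int) -> bool:
    """Check that every (processor, module) pair observes the same latency."""
    latencies = {
        latency_fn(p, m)
        for p in range(num_processors)
        for m in range(num_modules)
    }
    return len(latencies) == 1


if __name__ == "__main__":
    print(is_uniform(uma_access_latency, num_processors=4, num_modules=4))
```

A latency function whose result depends on the processor or module argument would fail the `is_uniform` check, which is the distinction the non-uniform categories above draw.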