M1852 Implement missing WebAudio node support

Introduction

Servo

Servo is a modern, high-performance browser engine written in the Rust programming language and designed for both application and embedded use. It is currently developed on 64-bit OS X, 64-bit Linux, and Android.

More information about Servo is available here

Rust

Rust is a systems programming language that focuses on memory safety and concurrency. It is similar to C++ but guarantees memory safety while maintaining high performance.

More information about Rust can be found here

Web Audio API

The Web Audio API handles audio operations inside an audio context and has been designed to allow modular routing. Basic audio operations are performed with audio nodes, which are linked together to form an audio routing graph. Several sources, with different types of channel layout, are supported even within a single context. This modular design provides the flexibility to create complex audio functions with dynamic effects.

Audio nodes are linked into chains and simple webs by their inputs and outputs. They typically start with one or more sources. Sources provide arrays of sound intensities (samples) at very small time slices, often tens of thousands of them per second. These samples can either be computed mathematically (as with OscillatorNode) or come from recordings in sound/video files (like AudioBufferSourceNode and MediaElementAudioSourceNode) and audio streams (MediaStreamAudioSourceNode). In fact, sound files are themselves just recordings of sound intensities, which come in from microphones or electric instruments and get mixed down into a single, complicated wave.
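The following Rust sketch makes "arrays of samples" concrete. It computes a buffer for a mathematically defined source, a sine tone, at a given sample rate. The function `sine_samples` is a hypothetical illustration and is not part of Servo. It derives each sample from its absolute time. That is simple, but it loses precision for very large sample offsets, as the oscillator code comment in the Implementation section points out.

```rust
use std::f64::consts::PI;

/// Hypothetical helper (not Servo's API): return `len` samples of a sine tone.
///
/// `frequency` is in Hz and `sample_rate` in samples per second (e.g. 44_100.0).
/// Consecutive samples are 1 / sample_rate seconds apart, and each one is the
/// wave's intensity at that instant, in the range [-1.0, 1.0].
fn sine_samples(frequency: f64, sample_rate: f64, len: usize) -> Vec<f32> {
    (0..len)
        .map(|n| {
            let t = n as f64 / sample_rate; // time of sample n, in seconds
            (2.0 * PI * frequency * t).sin() as f32
        })
        .collect()
}
```

For example, `sine_samples(440.0, 44_100.0, 44_100)` yields one second of a 440 Hz tone: 44,100 values, each between -1.0 and 1.0.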

Outputs of these nodes could be linked to inputs of others, which mix or modify these streams of sound samples into different streams. A common modification is multiplying the samples by a value to make them louder or quieter (as is the case with GainNode). Once the sound has been sufficiently processed for the intended effect, it can be linked to the input of a destination (AudioContext.destination), which sends the sound to the speakers or headphones. This last connection is only necessary if the user is supposed to hear the audio.
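This paragraph describes two basic operations: scaling a stream, as GainNode does, and combining streams. Both can be sketched for mono buffers as follows. `apply_gain` and `mix` are hypothetical helpers, not Servo functions. Mixing by sample-wise summation reflects how Web Audio combines several connections into one input. The sketch ignores channel up-mixing and down-mixing.

```rust
/// Hypothetical helper: multiply every sample by `gain`, as a GainNode does.
/// A gain above 1.0 makes the sound louder, below 1.0 quieter.
fn apply_gain(samples: &mut [f32], gain: f32) {
    for s in samples.iter_mut() {
        *s *= gain;
    }
}

/// Hypothetical helper: mix two mono streams by summing them sample by sample.
/// Once the shorter stream runs out, it is treated as silence (0.0).
fn mix(a: &[f32], b: &[f32]) -> Vec<f32> {
    let len = a.len().max(b.len());
    (0..len)
        .map(|i| a.get(i).copied().unwrap_or(0.0) + b.get(i).copied().unwrap_or(0.0))
        .collect()
}
```

For example, applying a gain of 0.5 to `[1.0, -0.5, 0.25]` gives `[0.5, -0.25, 0.125]`.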

A simple, typical workflow for web audio would look something like this:

1. Create an audio context

2. Inside the context, create sources, such as <audio>, an oscillator, or a stream

3. Create effect nodes, such as reverb, biquad filter, panner, or compressor

4. Choose the final destination of the audio, for example your system speakers

5. Connect the sources to the effects, and the effects to the destination.
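In effect, the workflow above pulls samples through a graph, from sources through effects to the destination. The Rust sketch below models only the simplest case: a linear chain of mono nodes, processed one block at a time. The `Node` trait, `Constant`, `Gain` and `render_chain` are hypothetical names. They do not reflect Servo's actual audio graph API, which supports arbitrary graphs and multiple channels.

```rust
/// Hypothetical, heavily simplified audio node (not Servo's API): it fills
/// `out` with one block of mono samples, optionally computed from an
/// upstream block passed in as `input`.
trait Node {
    fn process(&mut self, input: Option<&[f32]>, out: &mut [f32]);
}

/// Source node: outputs a constant value and ignores any input.
struct Constant(f32);

impl Node for Constant {
    fn process(&mut self, _input: Option<&[f32]>, out: &mut [f32]) {
        out.fill(self.0);
    }
}

/// Effect node: multiplies each input sample by a fixed gain.
struct Gain(f32);

impl Node for Gain {
    fn process(&mut self, input: Option<&[f32]>, out: &mut [f32]) {
        match input {
            Some(samples) => {
                for (o, s) in out.iter_mut().zip(samples) {
                    *o = s * self.0;
                }
            }
            // Nothing connected upstream: output silence
            None => out.fill(0.0),
        }
    }
}

/// Pull one block through a linear chain (source first, then effects) and
/// return the block that would be handed to the destination (speakers).
fn render_chain(chain: &mut [Box<dyn Node>], block_len: usize) -> Vec<f32> {
    let mut current: Option<Vec<f32>> = None;
    for node in chain.iter_mut() {
        let mut out = vec![0.0f32; block_len];
        node.process(current.as_deref(), &mut out);
        current = Some(out);
    }
    // An empty chain produces silence
    current.unwrap_or_else(|| vec![0.0f32; block_len])
}
```

Take a chain of `Constant(0.5)` followed by `Gain(0.5)`. For that chain, `render_chain` returns a block filled with 0.25, which is the value that would be sent to the destination.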

Scope

Major browsers support the WebAudio standard, which can be used to create complex media playback applications from low-level building blocks. Servo is a new, experimental browser that supports some of these building blocks (called audio nodes). The goal of this project is to improve compatibility with web content that relies on the WebAudio API. It does so by completing partially implemented node types (OscillatorNode) and by adding entirely missing nodes (ConstantSourceNode, StereoPannerNode, IIRFilterNode).

Additional project information is available here

Implementation

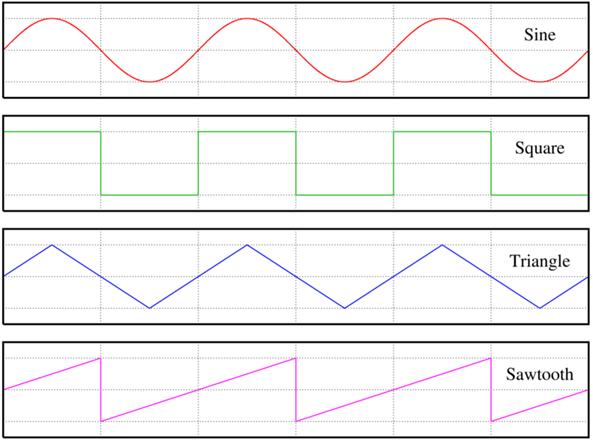

The implementation involved audio processing for Sine, Square, Sawtooth and Triangle waveforms. We created an oscillator.rs file that generates each of these waveforms. The waveforms are shown in the image above.

The "OscillatorType" enum identifies which waveform to generate. For each waveform, we calculate the sample value that the OscillatorNode outputs. The output is a function of the current phase, and each waveform has its own function, as follows:
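For reference, here are the standard unit-amplitude shapes of the four waveforms over one period. Each is written as a function of the phase $\varphi \in [0, 2\pi)$, alongside the per-sample phase increment for frequency $f$ and sample rate $f_s$. The sign convention is an assumption: square and sawtooth start positive and rise from zero like a sine, which matches the Web Audio specification's periodic waveforms as we understand them. Each value is then multiplied by the volume.

$$
\Delta\varphi = \frac{2\pi f}{f_s}, \qquad \varphi_{n+1} = (\varphi_n + \Delta\varphi) \bmod 2\pi
$$

$$
\text{sine}(\varphi) = \sin\varphi, \qquad
\text{square}(\varphi) = \begin{cases} 1 & 0 \le \varphi < \pi \\ -1 & \pi \le \varphi < 2\pi \end{cases}
$$

$$
\text{sawtooth}(\varphi) = \begin{cases} \varphi/\pi & 0 \le \varphi < \pi \\ \varphi/\pi - 2 & \pi \le \varphi < 2\pi \end{cases}
\qquad
\text{triangle}(\varphi) = \begin{cases} 2\varphi/\pi & 0 \le \varphi < \pi/2 \\ 2 - 2\varphi/\pi & \pi/2 \le \varphi < 3\pi/2 \\ 2\varphi/\pi - 4 & 3\pi/2 \le \varphi < 2\pi \end{cases}
$$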

Code Snippet

```rust
// Convert all our parameters to the target type for calculations
let vol: f32 = 1.0;
let sample_rate = info.sample_rate as f64;
let two_pi = 2.0 * PI;

// We're carrying a phase with up to 2pi around instead of working
// on the sample offset. High sample offsets cause too much inaccuracy when
// converted to floating point numbers and then iterated over in 1-steps.
//
// Also, if the frequency changes, the phase should not jump.
let mut step = two_pi * self.frequency.value() as f64 / sample_rate;

while let Some(mut frame) = iter.next() {
    let tick = frame.tick();
    if tick < start_at {
        continue;
    } else if tick > stop_at {
        break;
    }

    // Recompute the per-sample phase increment when the frequency changes
    if self.update_parameters(info, tick) {
        step = two_pi * self.frequency.value() as f64 / sample_rate;
    }

    // Based on the type of wave, the value is calculated using the formula
    // for the respective waveform (self.phase is an f64 in [0, 2pi))
    let value: f32 = match self.oscillator_type {
        OscillatorType::Sine => vol * self.phase.sin() as f32,
        // +1 for the first half of the period, -1 for the second half
        OscillatorType::Square => {
            if self.phase < PI { vol } else { -vol }
        }
        // Rises from 0 to 1 over [0, pi), jumps to -1, then rises back to 0,
        // so the output stays within [-1, 1]
        OscillatorType::Sawtooth => {
            let p = if self.phase < PI { self.phase } else { self.phase - two_pi };
            vol * (p / PI) as f32
        }
        // 0 -> 1 -> -1 -> 0 over one period, in straight-line segments
        OscillatorType::Triangle => {
            let p = self.phase;
            let v = if p < PI / 2.0 {
                2.0 * p / PI
            } else if p < 3.0 * PI / 2.0 {
                2.0 - 2.0 * p / PI
            } else {
                2.0 * p / PI - 4.0
            };
            vol * v as f32
        }
    };

    // ... write `value` into each channel of `frame` (not shown in this excerpt)

    // Advance the phase and wrap it back into [0, 2pi)
    self.phase += step;
    if self.phase >= two_pi {
        self.phase -= two_pi;
    }
}
```

Running this code produces a distinct sound for each waveform (Sine, Square, Sawtooth and Triangle).
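The excerpt above depends on Servo's surrounding types (`info`, `iter`, `self.frequency`), so it cannot be compiled on its own. The following self-contained Rust sketch reproduces just two ideas from it, the phase accumulator and the four waveform formulas, so they can be run and checked in isolation. The names `Wave`, `wave_value` and `Osc` are hypothetical stand-ins, not Servo's API.

```rust
use std::f64::consts::PI;

/// Hypothetical local stand-in for Servo's `OscillatorType`.
#[derive(Clone, Copy, Debug)]
enum Wave {
    Sine,
    Square,
    Sawtooth,
    Triangle,
}

/// Value of a unit-amplitude waveform at phase `p`, with `p` in [0, 2pi).
fn wave_value(wave: Wave, p: f64) -> f32 {
    let v = match wave {
        Wave::Sine => p.sin(),
        Wave::Square => if p < PI { 1.0 } else { -1.0 },
        Wave::Sawtooth => if p < PI { p / PI } else { p / PI - 2.0 },
        Wave::Triangle => {
            if p < PI / 2.0 {
                2.0 * p / PI
            } else if p < 1.5 * PI {
                2.0 - 2.0 * p / PI
            } else {
                2.0 * p / PI - 4.0
            }
        }
    };
    v as f32
}

/// Minimal phase-accumulator oscillator (hypothetical, not Servo's type).
struct Osc {
    wave: Wave,
    phase: f64, // kept within one period, starting from [0, 2pi)
}

impl Osc {
    /// Render `out.len()` samples at `frequency` Hz. A new `frequency` on a
    /// later call only changes the step size, so the phase, and therefore
    /// the waveform, continues without a jump.
    fn render(&mut self, frequency: f64, sample_rate: f64, out: &mut [f32]) {
        let two_pi = 2.0 * PI;
        let step = two_pi * frequency / sample_rate;
        for sample in out.iter_mut() {
            *sample = wave_value(self.wave, self.phase);
            // rem_euclid also handles steps larger than 2pi or negative steps
            self.phase = (self.phase + step).rem_euclid(two_pi);
        }
    }
}
```

For example, consider a square `Osc` that starts at phase 0.1 and renders at 1000 Hz with a 4000 Hz sample rate. It advances a quarter period per sample and fills a four-sample buffer with `[1.0, 1.0, -1.0, -1.0]`.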

Build

6/11 - The Pull Request was closed and the changes were merged upstream.

3/11 - Due to an issue with a third-party service (AppVeyor), our build is failing in continuous integration. We are working with the Servo team to resolve this.

3/11 - Members of the Servo project informed us that the AppVeyor build status does not determine the success of a pull request. We were advised to run a bors-servo build, and it succeeded. All build checks required for the PR have therefore passed. We are waiting for the changes to be merged upstream.

We have opened our pull request and are working on getting it merged based on the reviews received. Please use our forked repository until the pull request is merged.

Forked repository: https://github.com/CJ8664/servo

Clone command using git:

git clone https://github.com/CJ8664/servo.git

Once you have cloned the forked repository, follow the steps here to build it.

Note that the build may take up to 30 minutes, depending on your system configuration. Building on Janitor can reduce the build time.

Building on the cloud

This is the simplest and fastest way to deploy and test an instance of Servo. No configuration is required on your machine.

1. Go to http://janitor.technology

2. Click on New Container for Servo

3. Enter your email address to gain access to your container

4. Once logged in, go to Containers at the top right.

5. You will now see a container. Click the IDE button to open your online IDE environment.

6. Change directory to /home/user and create a new directory, say servo_test

7. Go to this new directory and clone our repository as mentioned above

8. After cloning, you should see a /servo directory within 'servo_test'

9. Go to /servo

10. It is now time to build - run the following command:

./mach build --dev

If all goes well you will see a success message - 'Build Completed'

Building locally

Local build instructions for Windows are given below:

1. Install Python for Windows (https://www.python.org/downloads/release/python-2714/).

The Windows x86-64 MSI installer is fine. During installation, make sure to enable the "Add python.exe to Path" feature.

2. Install virtualenv.

In a normal Windows Shell (cmd.exe or "Command Prompt" from the start menu), do:

pip install virtualenv

If this does not work, you may need to reboot so that the PATH changes made by the Python installer take effect.

3. Install Git for Windows (https://git-scm.com/download/win). DO allow it to add git.exe to the PATH (default settings for the installer are fine).

4. Install Visual Studio Community 2017 (https://www.visualstudio.com/vs/community/).

You MUST add "Visual C++" to the list of installed components. It is not on by default. Visual Studio 2017 MUST be installed to the default location, or mach.bat will not find it.

If you encounter errors with the environment above, try the following workaround:

Download and install Build Tools for Visual Studio 2017

Install Python 2.7 (x86-64) and virtualenv

5. Run mach.bat build -d to build

If you have trouble with the x64 command prompt that mach.bat uses by default, you may need to launch a different prompt type manually from the Windows menu. One such prompt is the x86_x64 Cross Tools Command Prompt for VS 2017. Then run:

cd to/the/path/servo

python mach build -d

Build instructions for all other environments are available here

Verifying a build

We can quickly verify whether the Servo build works by running the command

'./mach run http://www.google.com'

This will open a browser instance rendering the Google homepage.

This should be straightforward in any environment that has rendering support: Linux, Windows, macOS, or Android.

If you are using the Janitor environment, its IDE does not provide rendering support. You might receive an error along the lines of 'No renderer found' when executing the command.

Workaround: On the 'Container' page on janitor.technology, click VNC for your container. Then click Connect in the new tab that opens.

You should now have remote access to a UI with a command line. Simply run the above command and the web page should render.

Test Plan

Testing Approach

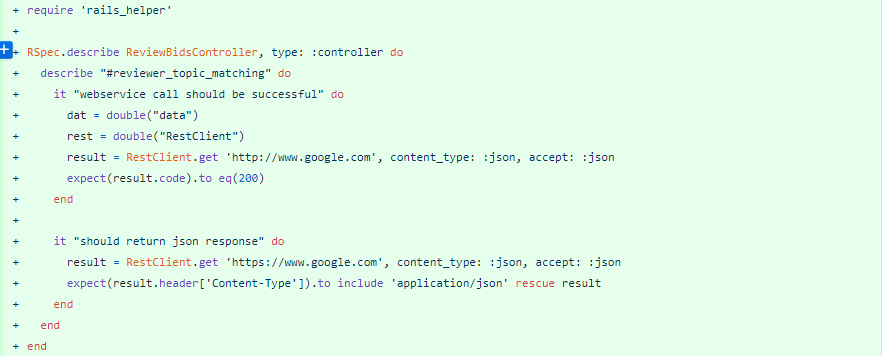

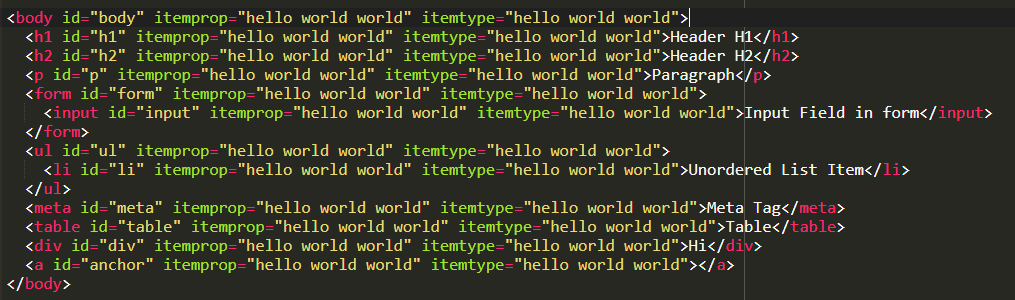

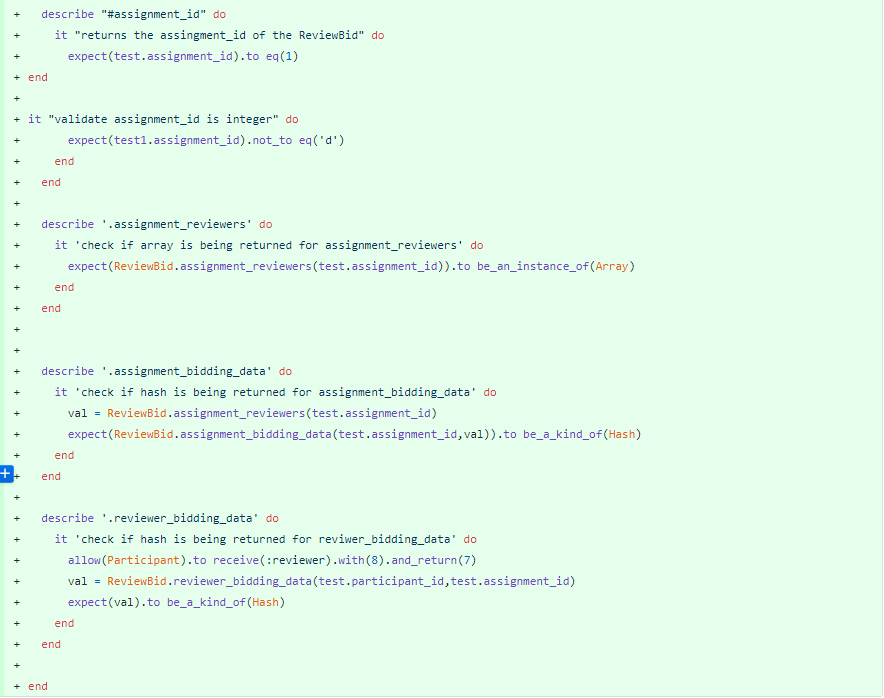

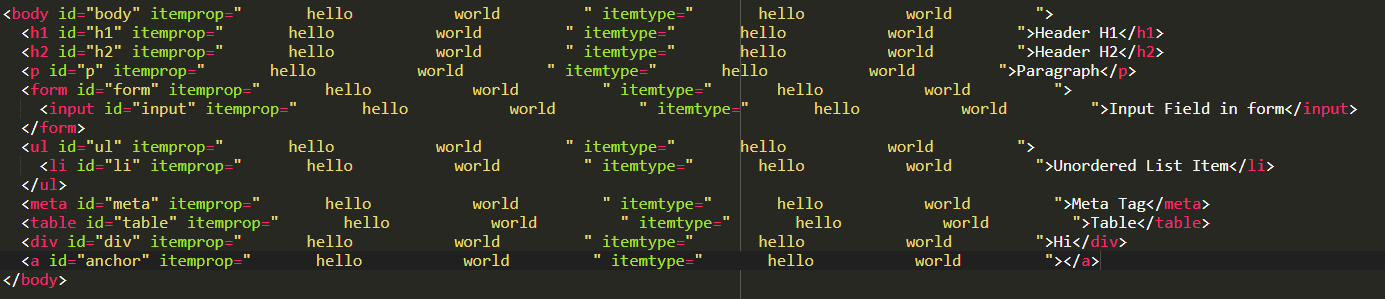

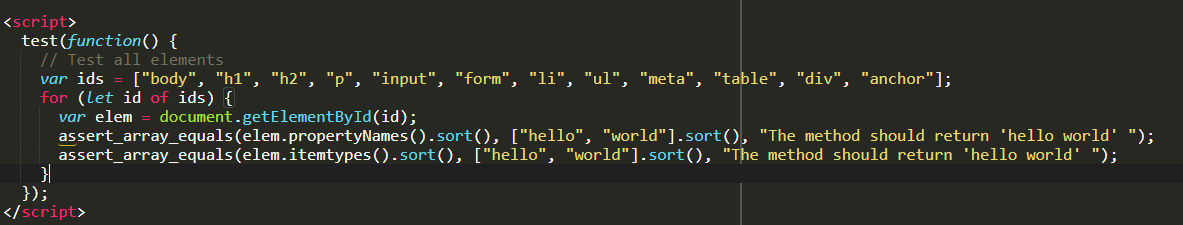

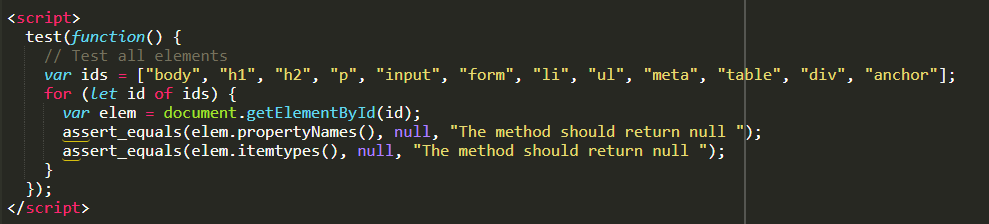

Since our implementation adds the microdata attributes to the DOM, we test it by querying the DOM tree directly with JavaScript to detect microdata on HTML elements. This confirms whether the engine was able to parse these attributes and add them to the DOM. Also, per the microdata specification, the attributes can appear on any HTML element. The test data therefore consists of several HTML elements such as 'div', 'ul', 'li' and 'span', each with microdata ('itemprop' and 'itemtype') attributes.

Test Framework

We used the 'web-platform-tests' (WPT) suite for testing; it is an existing test suite used in the Servo project. It mainly consists of two test types. The first is JavaScript tests (to test DOM features, for example), written with the testharness.js library. The second is reference tests (which compare rendered output against the expected rendering), written in the W3C reftest format. Since the microdata attributes do not render anything on the page, only DOM testing is in scope.

We wrote the tests with testharness.js, which suits our testing approach because it can be called directly from JavaScript within an HTML page. It provides a convenient API for common assertions. It also supports testing both synchronous and asynchronous DOM features in a way that promotes clear, robust tests.

testharness.js reports the test result directly from the HTML page, and WPT uses that report to determine the outcome of the test.

Test Cases

To test our implementation, we evaluated the following scenarios.

Attribute with a single value should be stored properly

Input Data

Test Script

Space separated values in the attributes should be stored as different values

Input Data

Test Script

Duplicate occurrence of attributes should be ignored

Input Data

Test Script

Extra whitespace in the attribute list should be ignored

Input Data

Test Script

Attribute has not been set (null or empty)

Input Data

Test Script
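The following small token-set parser is a Rust sketch of the behaviour these cases expect. It is a hypothetical illustration of what the tests check, not the code in Servo or html5ever. It assumes that values are separated by ASCII whitespace. It also reads the duplicate case as a token repeated within one attribute value, which is an assumption: repeats are dropped and the order of first occurrence is kept.

```rust
/// Hypothetical helper (not Servo or html5ever code): split an attribute
/// value such as itemprop="name  email name" into its distinct tokens.
/// A missing (None) or empty value yields an empty list.
fn parse_token_set(value: Option<&str>) -> Vec<String> {
    let mut tokens: Vec<String> = Vec::new();
    // split_ascii_whitespace skips leading, trailing and repeated whitespace
    for token in value.unwrap_or("").split_ascii_whitespace() {
        // Keep only the first occurrence of each token
        if !tokens.iter().any(|t| t == token) {
            tokens.push(token.to_string());
        }
    }
    tokens
}
```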

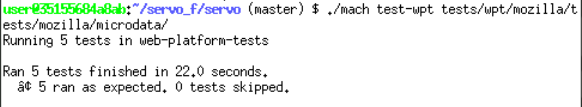

Testing Steps

Before testing, read and complete the steps in the Build and Verifying a build sections.

1) Run the command ./mach test-wpt /tests/wpt/mozilla/tests/mozilla/microdata/

2) A webpage should render showing the status of the test.

Here is the output of test-wpt after the tests have been run successfully.

Dependencies

html5ever - HTML attribute names are fetched in Servo from a lookup file in the html5ever module. The html5ever module was augmented with the 'itemprop' and 'itemtype' attributes for use in Servo.

Pull Request

The pull request used to incorporate our changes upstream is available here

References

http://html5doctor.com/microdata/

http://web-platform-tests.org/writing-tests/testharness-api.html

https://html.spec.whatwg.org/multipage/microdata.html

https://code.tutsplus.com/tutorials/html5-microdata-welcome-to-the-machine--net-12356