CSC/ECE 517 Fall 2017/E1790 Text metrics

Introduction

In this final project, "Text metrics", we will first integrate external sources such as GitHub and Trello to fetch information. Second, we will introduce the idea of "readability". To measure the readability of a write-up, we will import the content written by students, split it into sentences to obtain counts such as the number of sentences and the number of words, and then calculate readability indices from these counts using the corresponding formulas.
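The counting step described above can be sketched as follows. This is a minimal illustration, not the project's implementation: the module name `ReadabilitySketch` and its methods are hypothetical, the sentence splitter is a naive split on terminal punctuation, and the Automated Readability Index (ARI) is only one possible choice of index, picked here because it needs nothing beyond character, word, and sentence counts.

```ruby
# Hypothetical helper (not part of Expertiza): derives basic text counts from a
# write-up and feeds them into the Automated Readability Index (ARI).
module ReadabilitySketch
  # Returns the number of sentences, words, and alphanumeric characters.
  # Sentences are split naively on runs of '.', '!' or '?', so abbreviations
  # such as "e.g." will be over-counted.
  def self.counts(text)
    sentences = text.split(/[.!?]+/).map(&:strip).reject(&:empty?)
    words = text.scan(/[A-Za-z0-9']+/)
    {
      sentences: sentences.size,
      words: words.size,
      # Count only letters and digits, ignoring apostrophes inside words.
      characters: words.sum { |w| w.count('A-Za-z0-9') }
    }
  end

  # ARI = 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43
  # Returns nil when the text has no sentences or words.
  def self.automated_readability_index(text)
    c = counts(text)
    return nil if c[:sentences].zero? || c[:words].zero?

    4.71 * c[:characters].to_f / c[:words] +
      0.5 * c[:words].to_f / c[:sentences] - 21.43
  end
end
```

Other indices would reuse the same counts with a different formula; indices that depend on syllable counts would need an additional counting step that is not shown here.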

Our primary task for the final project is to design tables that allow the Expertiza app to store data fetched from external sources such as GitHub, Trello, and write-ups. As a next step, we would like to use this raw data for visualization charts and grading metrics.

Current Design

Currently, three models have been created to store the raw data from metric sources: Metrics, Metric_data_points, and Metric_data_point_types.

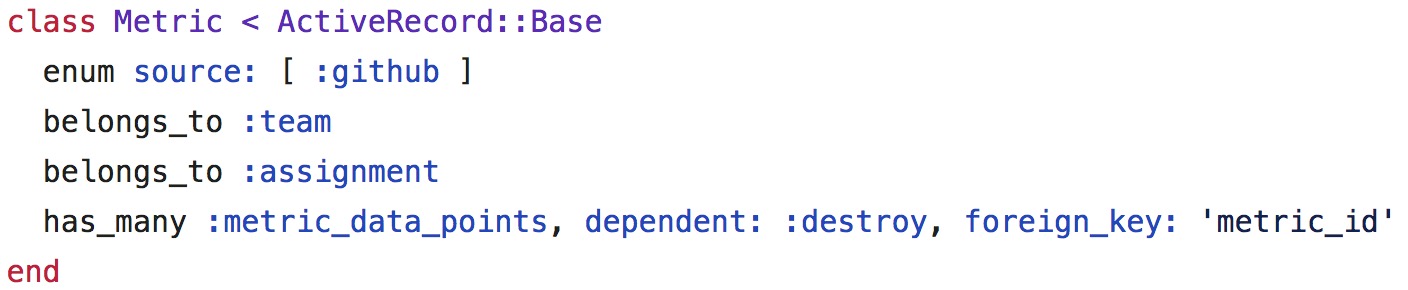

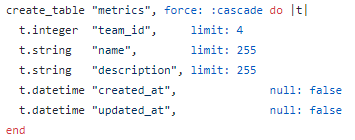

Metric

Model:

Schema:

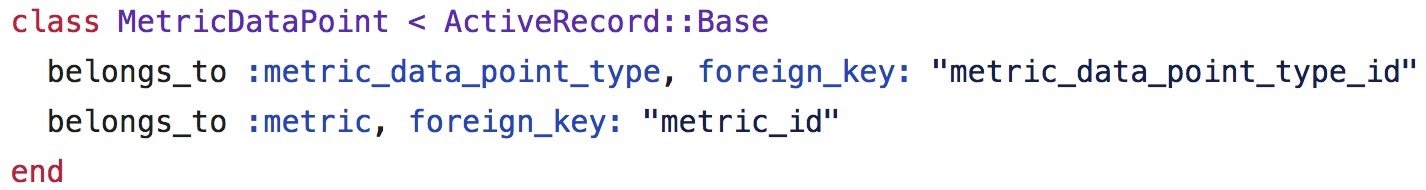

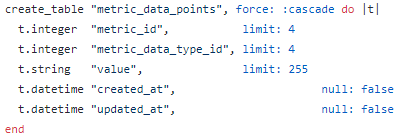

Metric_data_points

Model:

Schema:

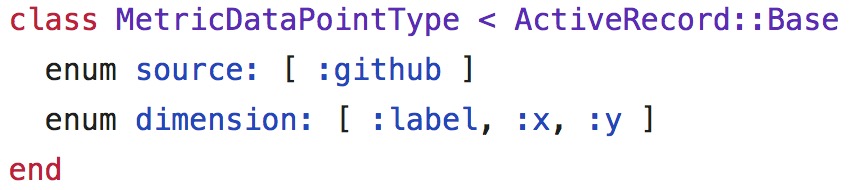

Metric_data_point_types

Model:

Schema:

The current framework only defines the schema; the models are still empty, and the data parser methods have not been implemented yet.

This schema is a clever design because it follows the open/closed principle ("open for extension, closed for modification"). When a new kind of data is added to the database, developers do not have to change the metric_data_point_types and metric_data_points tables; they only need to add two methods that translate the data type to and from strings. From browsing the code, we found that the most basic types already have these methods, which meets our requirements. The design is not flawless, however, and we discuss its problems in the next section.
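To illustrate the "two methods per type" idea, here is a minimal sketch of a value codec. Everything in it is an assumption made for illustration: the module name `ValueCodecSketch`, the `CODECS` table, and the type names are hypothetical and are not taken from the existing Expertiza code. It only shows how values could be stored as strings and read back by type name.

```ruby
require 'time' # provides Time#iso8601 and Time.iso8601

# Hypothetical registry (not the existing Expertiza code): each type name maps
# to one lambda that dumps a value to a string and one that loads it back.
module ValueCodecSketch
  CODECS = {
    'Integer' => { dump: ->(v) { v.to_s }, load: ->(s) { Integer(s) } },
    'Float'   => { dump: ->(v) { v.to_s }, load: ->(s) { Float(s) } },
    'String'  => { dump: ->(v) { v },      load: ->(s) { s } },
    'Time'    => { dump: ->(v) { v.utc.iso8601 },
                   load: ->(s) { Time.iso8601(s) } }
  }.freeze

  # Converts a typed value into the string that would be stored in the table.
  # Raises KeyError for an unregistered type name.
  def self.dump(type_name, value)
    CODECS.fetch(type_name)[:dump].call(value)
  end

  # Converts a stored string back into a value of the named type.
  def self.load(type_name, string)
    CODECS.fetch(type_name)[:load].call(string)
  end
end
```

Under this sketch, supporting a new kind of value means adding one entry with its two conversions, while the tables themselves stay unchanged.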

In addition, GitHub is currently our only data source. As a result, we also need to find other data sources to serve as grading metrics.

Analysis of the Problem

Proposed Design

Current source: GitHub

Our first and current source for grading metrics is GitHub. Zach and Tyler have implemented the integration with the GitHub API for fetching commit data. However, the integration appears to be at an early stage: the app can fetch the data and store it in the database, but there is not yet an implementation that extracts the valid data or uses it as one of the grading metrics.

From the API offered by GitHub, we can fetch commit information such as the number of additions and the number of deletions made by each contributor to the repository. We can use these numbers directly to calculate contributions for the metrics, or we can feed them into equations that produce an impact factor representing each group member's contribution.
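As a rough sketch of the simplest such calculation, the snippet below turns per-contributor addition and deletion totals into contribution shares. The module name `ContributionSketch`, the input hash keys, and the `deletion_weight` parameter are all hypothetical; the weighting is just one assumed example of an "impact factor" equation, and the input is plain data rather than a live GitHub API response.

```ruby
# Hypothetical helper (not part of Expertiza): computes each contributor's
# share of the changed lines in a repository.
module ContributionSketch
  # stats: array of hashes such as
  #   { login: 'alice', additions: 30, deletions: 10 }
  # deletion_weight: assumed tuning knob; 1.0 counts a deleted line the same
  # as an added line, smaller values make deletions count for less.
  # Returns a hash mapping login to a share between 0.0 and 1.0.
  def self.shares(stats, deletion_weight: 1.0)
    score = ->(s) { s[:additions] + deletion_weight * s[:deletions] }
    total = stats.sum(&score)

    stats.each_with_object({}) do |s, result|
      result[s[:login]] = total.zero? ? 0.0 : score.call(s) / total.to_f
    end
  end
end
```

For example, with two members who changed 40 and 60 lines in total, the shares come out as 0.4 and 0.6. Line counts alone are a coarse signal, so any real grading metric would likely need additional checks beyond this sketch.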