CSC/ECE 506 Spring 2012/3a kp

Patterns of parallel programming

Overview

A design pattern is a description of a solution to a frequently occurring problem in some domain. The description makes it easy for the reader to quickly understand both the problem and the proposed solution. Because the pattern has a name, a collection of patterns provides a vocabulary with which to talk about these solutions. They were first postulated for City Planning applications, but they promptly extended to Computer Science (http://en.wikipedia.org/wiki/Software_design_pattern). The idea would always be to have a library of reusable resources that would help us in the development of our product.

As an example – “Dense Linear Algebra” is a “Computational” design pattern for parallel programming. Under this pattern a computation is organized as a sequence of arithmetic expressions acting on dense arrays of data. The operations and data access patterns are well defined mathematically so data can be prefetched and CPUs execute close to their theoretically allowed peak performance.

Parallel programming design patterns have been identified at various levels of software development from high-level patterns that describe how an application is organized to midlevel patterns about specific classes of computations, and low level patterns describing specific execution strategies. Related to these classifications, there are also available “pattern languages” from several researchers, that guide the software developer through hierarchy of parallel programming design development.

In this article we first cover two “pattern languages” for parallel programming in some detail. These are attempts to classify these patterns and we then dwell on commonalities between these approaches. Thereafter, we cover a broad range of parallel programming design patterns highlighting the commonalities and differences.

It is important to appreciate that many design problems do not lend themselves to a top-down or bottom-up analysis. In many cases, the pathway through our patterns will be bounce around between layers with the designer working at whichever layer is most productive at a given time.

Parallel Pattern Programming Languages

We next review "Parallel Pattern Programming Languages" from two researchers and discuss their commonalities and differences.

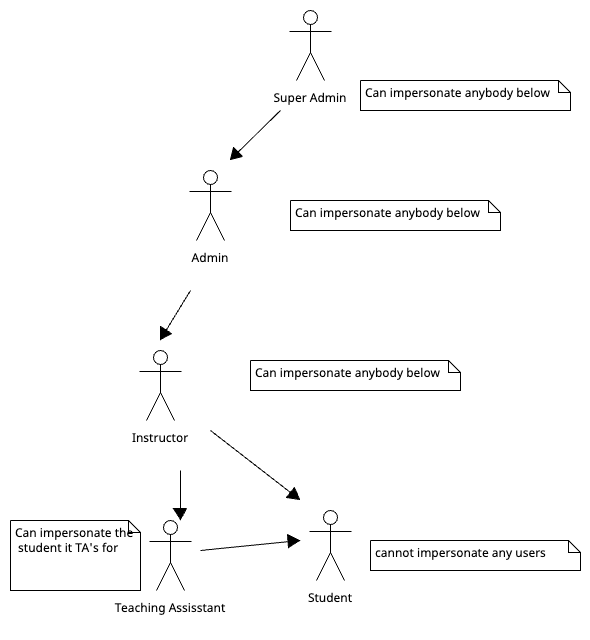

1. Classification by B.L.Massingelllink

This considers four levels of patterns design - A) Finding Concurrency B) Algorithm Structure C) Supporting Structures D) Implementation Mechanisms

A) "FindingConcurrency” considers high-level issues to expose potential concurrency.

There are three major design patterns at this level –

i) Decomposition Strategy pattern - focuses on decomposing the problem into parts that can be run simultaneously and also includes “Task Decomposition pattern” (decomposing into concurrent tasks) and “Data Decomposition pattern” (decomposing into data units that can be run independently).

ii) Dependency Analysis – After using "Decomposing Strategy Pattern" to break into entities, the dependency analysis pattern help the programmer understand how they depend on each other. These dependencies include “Grouping Tasks” (to simplify dependency), “Ordering Tasks” (to satisfy constraints) and “Data Sharing” (among tasks).

iii) Design Evaluation – This pattern is a consolidation pattern; it is used to evaluate the results of the other patterns in this design space.

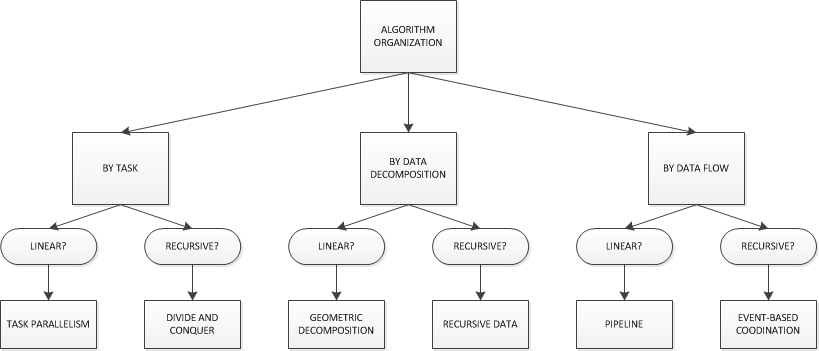

B) Algorithm Structure – This steps moves close to the final program and has six Patterns in three sub-groups -

i) Organize by flow of data – Pipeline Processing Pattern (decomposing into ordered tasks groups connected by data dependencies) and Asynchronous Decomposition Pattern(decomposed into task groups that interact through asynchronous events).

ii) Organize by tasks – Embarrassingly Parallel Pattern (decomposing into a set of independent tasks) and Divide-and-conquer Pattern (break the problem into subproblems that are solved separately and then recombined later).

iii) Organize by data – Geometric Decomposition Pattern (decompose and solve problem into discrete subspaces with the solution for each subspace typically requiring data from a small number of other subspaces) and Recursive Data Pattern (problem is defined in terms of following links through a recursive data structure).

C) The Supporting Structures design space-

The intermediate stage between algorithm structure design space and the machine-oriented “patterns” of the implementation mechanisms space has two types of patterns i) program-structuring constructs and ii) shared data structures. Program-structuring constructs include master/worker (a master process managing worker processes)/ SPMD (Single Program Multiple Data - similar to master/worker with no explicit master process), loop parallelism (distributing parallel loops processing) and Fork/Join (distribution of dynamically created tasks among processing units). Shared data patterns include - ‘shared data’ (explicit shared data among concurrent tasks), ‘shared queue’ (sharing of queue data structure among concurrent tasks), distributed array (distribution of arrays among multiple processing units).

D) The Implementation Mechanisms design space –

These patterns deal with common mechanisms for process or thread management (e.g., creating or destroying processes or threads), synchronization to enforce order of events (memory fences, barriers, mutual exclusion, monitors, semaphores) and communication (e.g., message-passing and collective communication).

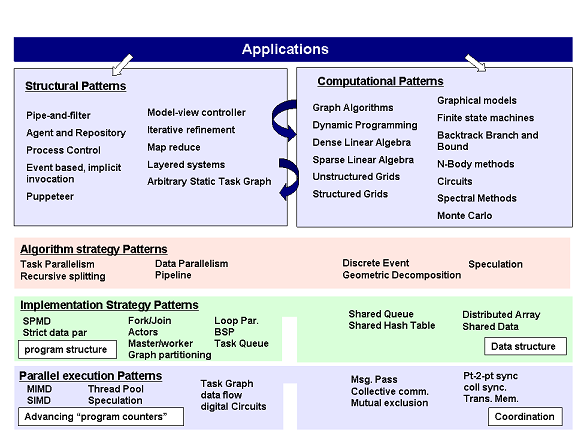

2. Classification by Keutzer and Mattson [1]

They define the application in terms of structural pattern (that define overall organization) and computational patterns at the top to low level details of parallel algorithm at the bottom.

A) The "structural and computational patterns" at the top are tightly coupled and a designer may iterate among them. In other words, a designer thinks about his or her problem, chooses a structure pattern, then considers the computational patterns required to solve the problem. The selection of computational patterns may suggest a different overall structure for the architecture and force a reconsideration of the appropriate structural patterns. This process, moving between structural and computational patterns, continues until the designer settles on a high level design for the problem.

The top level “Structural Pattern” and “Computational Pattern” correspond to “Finding Concurrency” in B.L. Massingell’s classification. The emphasis is on strategically overviewing the problem to find concurrency.

B) The algorithm strategy pattern at the next level is also very similar to Massingell's "Algorithm Structure". These deal with organizing by tasks (Tasks Parallelism, Recursive Splitting), data (Data Parallelism, Geometric Decomposition) and flow of data (Pipeline, Speculation, Discrete Event).

C) At the bottom two levels “Implementation Strategy” and D) “Parallel Execution Strategy” correspond very closely to the last two design patterns levels in B.L.Massingell. The “Implementation Strategy” deals with “Program Structure” and “Data Structure” patterns, while the “Parallel Execution Strategy” deals with task/creation process and management of communication between tasks.

In summary, there appears a commonality in thinking in classifying design patterns for parallel programming.

The First Level - Decomposition Strategy Patterns

In this section we cover, the decomposition strategy using Structural and Computational Design pattern. While a many of these patterns may appear familiar, however we emphasize the relevance of these paradigms in the parallel programming context i.e. in splitting the software design into subtasks that can be run parallely.

Structural Pattern is a high level view of program design. These represent "box and arcs" a software architect would draw on a whiteboard in describing an application. The "boxes" of the diagram represent the "computational" kernels. The problem may be viewed in terms of any of the structures patterns described in the glossaryGlossary. Identifying the pattern of the problem automatically points to parallelization that is possible. For example -

- pipe-and-filter views a problem as a series of processing boxes with inputs and outputs. Each of the processing box may be run concurrently/parallely.

- agent-and-repository - views a problem as many independent agents/processes acting on a central data repository. Each of the agents/processes may be run parallely under the control of a manager.

- process control - views a state being monitored continuously and actions being taken based on the monitor results. The actions may be run concurrently/parallely

- event based implicit invocation - this pattern involves agents reacting to events and generating events. This is quite easily amenable to be handled by parallel processors, since handling many events may be viewed as parallel tasks.

- model-view-controller - the roles of data processing, controlling the data processing and interaction with the user may be easily separated among different processing functions that may be run parallely.

- iterative refinement - An example of this is the ocean problem covered in the class. A process to refine the solution iteratively may be broken out among the parallel tasks e.g. red and black dot and antiparallel processing for the ocean problem.

- mapreduce - involves mapping a single function to independent data sets and reducing these exceution to solution. the task of running and reducing may be run parallely.

- puppeter - involves several agents running parallely and managed by a puppeter

- arbitray static task graph - is the most general pattern (when nothing else fits) with a general break-up of problem into tasks that may potentially be run in parallel.

PEDRO TO ADD COMPUTATIONAL PATTERN HERE => ALSO UPDATE GLOSSARY OF TERMS AS DONE FOR STRUCTURAL PATTERNS

Computational Patterns They are the structural patterns that describe our application, that is, the building blocks we can divide it upon. They can normally be split into smaller patterns in a hierarchical fashion and be adapted to particular hardware and software architectures.

The Second level - Algorithm Strategy Patterns

Once a structural/computational pattern has been identified there may be a variety of algorithms that may be used. The concurrencies are more directly identified at this stage.

The most common algorithm stratgy pattern include -

- Task parallelism - Simply means running independent tasks parallely example of this is embarrasingly parallel pattern.

- Pipeline - Running serial operations on a series of data set in parallel because one data set item does not need to wait for the operations to finish on the data item ahead.

- Discrete event - Multiple tasks interacting via an event handler with event handling by each tasks run parallely.

- Speculation - Speculatively and parallely run a series of tasks ahead and commit some of the parallel task as required by the outcome of previous step.

- Data parallelism -A single stream of instructions is applied to each element of data structure.

- Recursive splitting - Recursively generated task can be optmized by assigning these to parallel processing, using a balanced data structure, fork-join or task queue implementation, improving locality.

- Geometic decomposition - Breaks the problem into data chunks with each each data chunk processed parallely and data chunks exchanging data at boundaries.

The Third Level - Implementation Strategy Patterns

PEDRO TO ADD IMPLEMENTATION STRATEGY PATTERNS

The Fourth Level - Parallel Execution Patterns

These patterns deal with how a parallel algorithm is translated into software elements to fully exploit the specific hardware elements. . They are the strategies and facilities to build, support and synchronize our tasks. These include - (1) Process and thread control patterns (2) Coordination pattern

Process and thread controls patterns advance a program counter -

- MIMD pattern - Multiple process with each one acting on their own data and coordinating communication through discrete events

- Data flow - Computation steps are viewed as direct acylice graph of thread that are mapped to parallel computers

- SIMD pattern - Single Stream of instructions is applied to multiple data on different processing elements

- Thread pool - Rather than creating and destroying threads (which are expensive operations), a pool of threads is maintained. A thread is returned to a pool after use and steals works to ensure a balanced work load

- Speculative execution - Compiler or runtime system is enabled to do speculative executions and to roll back those executions that are not required

- Digital circuits - Functionality is hard wired for concurrency in digital circuits and available as separate instruction set.

Patterns that deal with coordination of threads or processes are well known ones -

- Message passing - Passing messages between process that need to be synchronized

- Collective Communication - Message is passed to a group of (rather than individual) threads. Example reductions, broadcasts, prefix sums, and scatter/gather

- Mutual exclusion - Block of code or memory that can be exeucted by one process at a time

- Point-to-point and collective synchronization - Mutex locks to enforce order of events for two threads or a collection of threads.

- Transactional memory - Folds into memory to detect access conflicts and roll back transactions when a conflict occurs. This is speculative parallelism and effective when conflicts are rare

Glossary Of Patterns

Structural Patterns

i) Pipe-and-filter - The problem is viewed as "filters" (computational elements) connected by pipe (data communication channels). The filters are "stateless" (produce no side effects) and simply take data from an input, transform it and thereafter pass it on for the next stage. Concurency emerges as multiple blocks of data move through "pipe-and-filter" so multiple filters can be active simultaneously.

ii) Agent-and-repository - There is a central set of data that gets modified by different tasks at irregular time. The structure is therefore a single repository of data with independent agents acting on the data and their access is managed by a manager to ensure consistency.

iii) Process control - This involves continuous monitoring of a process and taking an action as per the results of the monitor.

iv) Event-based-implicit invocation - A series of processes react to events and may issue events as reaction. The event handlers may be any of the other processes. The structure is highly dynamic and processes react asynchronously.

v) Model-view-controller - This consists of data state to be viewed, a controller to update the data state and an agent/agents to export the view of data. The user can modify the underlying data only through public interface of the model view and not directly. The view renderer does not have access to internal of the model.

vi) Iterative refinement - The problem is iteratively solved until a particular level of refinement/accuracy is reached.

vii) MapReduce - Same function is applied to independent data sets and the final result is a "reduction" of these results. In phase I involves mapping single function to independent data sets. Phase II is reduction.

viii) Layered systems - With multiple layers in software solution, each layer is so modeled that it interacts with the adjacent layer only and is concerned with the interfaces provided by adjacent layer only.

ix) Puppeter - A puppeter manages several agents that interact with each other (but do not work on shared data).

x) Arbitrary static task graph - If the problem does not fit into a pattern, define the problem as a number of tasks that interact like an arbitrary graph.

Computational Patterns

i) Backtrack, branch and bound

ii) Circuits

iii) Dynamic programming

iv) Dense linear algebra

v) Sparse Linear Algebra

vi) Finite state machine

vii) Graph algorithms

viii) Graphical models

ix) Monte Carlo

x) N-body

xi) Spectral methods

xii) Structured mesh

xiii) Unstructured mesh

Algorithm Strategy Patterns

i) Task parallelism - Problem is broken down into tasks which when run in (and balanced) amongst parallel computers produce the desired result. Example of this is embarrasingly parallel pattern.

ii)Pipeline - A pipeline of functions that are run serially on a series of data set. Each piece of data can enter the pipeline queue and there are several data sets being processed at the same time in the queue.

iii) Discrete event - When tasks are loosely organized and interact unpredictably, and event handler is set-up that sets up these tasks and then forwards the events for handling. The tasks do not know the source of specific event. (The multiple tasks and event handling may be parallely run)

iv) Speculation - When the dependencies are unpredictable, many next steps tasks may be speculatively/parallely run and some of these tasks may be commited depeding on the outcome of dependencies and the not required ones rolled back.

v) Data parallelism -A single stream of instructions is applied to each element of data structure.

vi) Recursive splitting - A problem may have tasks that may be recursively generated with data dependencies among recursive tasks. The solution is to express problem recursively with task/tasks assigned for each call, use a balanced data structure, fork-join or task queue implementation, use optimization to improve locality.

vii)Geometic decomposition - Breaks the problem into data chunks with each each data chunk processed parallely and data chunks exchanging data at boundaries. An example - in solution of the ocean problem the data points were split up into chunks of a few rows each.

Implementation strategy patterns

Structured as a Program:

i) Single-Program Multiple Data (SPMD)

ii) Strict data parallel

iii) Fork/join

iv) Actors

v) Master-worker

vi) Task queue

vii) Graph Partitioning

viii) Loop-level parallelism

ix) BSP

ii) Structured as Data:

1) Shared queue

2) Distributed array

3) Shared hash table

4) Shared data

Parallel Execution Patterns

i) With program counter advance:

1) MIMD

2) Data flow

3) SIMD

4) Thread pool

5) Speculative execution

6) Digital circuits

ii) Thread coordinated:

1) Message passing

2) Collective communication

3) Mutual exclusion

4) Point to point synchronization

5) Collective synchronization

6) Transactional memory

References

1."Parallel Programming with a Pattern Language", Berna Massingill, et al.

2."Parallel Numerical Methods - Multigrid", Michael Heath

3."Distributed Dynamic Data-Structures for Parallel Adaptive Mesh-Refinement", Manish Parashar, et al

4."ZPL Implementation of AMR Algorithm for Elastic Wave Propagation", Hongyu Wu, et al.

5."Reinventing Explicit Parallel Programming for Improved Engineering of High Performance Computing Software", Anthony Skjellum, et al.

6. "Building Parallel Applications using Design Patterns", Dhrubajyoti Goswami, et al.

Links

1. Resources on Parallel Pattern

2. A pattern language of parallel programming

3. [http://parlab.eecs.berkeley.edu/wiki/patterns/patterns/Link A Pattern Language for Parallel Programming ver2.0 ]

4. [http://msdn.microsoft.com/en-us/library/dd492418.aspx/Link Parallel Patterns Library (PPL) ]