CSC/ECE 506 Spring 2011/ch11 BB EP

History

Background

State Diagrams

Memory

Processors

Race Conditions

In a distributed shared memory system with caching, race conditions are very likely to arise. The main cause is the absence of a shared bus to serialize coherence actions. Network errors and congestion compound the problem.

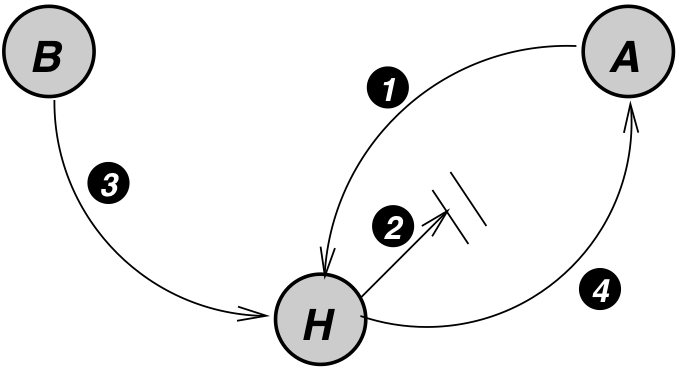

The early invalidation case from Section 11.4 in Solihin is an excellent example of a race condition that can arise in a distributed system. Recall the diagram from the text and its cache coherence actions, shown in the figure above.

The circled actions are as follows:

- A sends a read request to Home.

- Home replies with data (but the message gets delayed).

- B sends a write request to Home.

- Home sends an invalidation to A, and it arrives before the ReplyD.
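The four actions above can be replayed in a small sketch to show why the arrival order matters. This is not the SCI protocol or the protocol from the text. `NaiveCache`, `replay`, and the message names `Inv` and `ReplyD` as strings are hypothetical, and the cache deliberately has no protection against a late data reply.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class NaiveCache:
    """Hypothetical controller for node A with no early-invalidation protection."""
    state: str = "I"                 # "I" = invalid, "S" = shared
    value: Optional[int] = None
    log: List[str] = field(default_factory=list)

    def receive(self, msg: str, payload: Optional[int] = None) -> None:
        if msg == "Inv":
            # Drop whatever copy is held (possibly none) and fall back to invalid.
            self.state, self.value = "I", None
            self.log.append("Inv: line is now I")
        elif msg == "ReplyD":
            # The data reply is installed without checking whether it is stale.
            self.state, self.value = "S", payload
            self.log.append(f"ReplyD: installed {payload} in S")


def replay(arrivals: List[Tuple[str, Optional[int]]]) -> NaiveCache:
    """Deliver messages to a fresh cache in the given arrival order."""
    cache = NaiveCache()
    for msg, payload in arrivals:
        cache.receive(msg, payload)
    return cache


# Home sends ReplyD (old value 1) and then Inv, in that order.
in_order = replay([("ReplyD", 1), ("Inv", None)])
# The network delays ReplyD, so Inv arrives first (the early invalidation case).
raced = replay([("Inv", None), ("ReplyD", 1)])

print(in_order.state, in_order.value)   # A ends up invalid, as Home expects
print(raced.state, raced.value)         # A keeps a stale shared copy
```

In the raced run, node A ends in the shared state holding the old value even though Home believes A was invalidated, which is exactly the inconsistency the race produces.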

The SCI protocol handles this race condition, as well as many others. The following sections discuss how the design of the SCI protocol prevents it.

Prevention in the SCI Protocol

Race conditions are almost non-existent in the SCI protocol, primarily because of the protocol's design. A brief discussion of how this is accomplished follows, along with a description of what happens if the early invalidation case arises in the SCI protocol.

Atomic Transactions

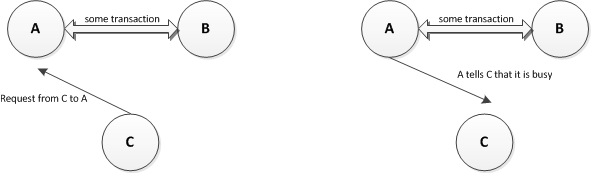

SCI's primary method for preventing race conditions is the use of atomic transactions. A transaction is defined as the set of sub-actions necessary to complete some requested action, such as reading from memory or writing to a variable. Suppose, for example, that node A is in the middle of a transaction with node B, and node C then tries to make a request of node A. Node A will respond to node C that it is busy, telling node C to try again, as shown in the diagram above.

Thus, in the early invalidation race, node A would still be in the middle of its read transaction, so node B's attempt to invalidate it would receive a busy response and would have to be retried.
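The busy-and-retry behavior can be illustrated with a minimal sketch. The class `Node`, the reply strings `BUSY` and `ACK`, and the helper `send_with_retry` are hypothetical names chosen for illustration and are not taken from the SCI specification. The point at which the ongoing transaction ends is simulated by the `finish_after` parameter.

```python
class Node:
    """Hypothetical node that serves one transaction partner at a time."""

    def __init__(self, name):
        self.name = name
        self.busy_with = None        # name of the current transaction partner

    def begin(self, peer):
        self.busy_with = peer

    def end(self):
        self.busy_with = None

    def handle(self, requester, request):
        # Any third party is turned away while a transaction is in progress.
        if self.busy_with is not None and self.busy_with != requester:
            return "BUSY"
        return f"ACK {request}"


def send_with_retry(target, requester, request, finish_after, max_tries=5):
    """Retry a request until it is accepted.

    The target's ongoing transaction is assumed to complete right after
    attempt number `finish_after` (a stand-in for real time passing).
    """
    for attempt in range(1, max_tries + 1):
        reply = target.handle(requester, request)
        if reply != "BUSY":
            return attempt, reply
        if attempt == finish_after:
            target.end()
    return max_tries, "GAVE UP"


a = Node("A")
a.begin("B")                          # A is in a transaction with B
attempts, reply = send_with_retry(a, "C", "read", finish_after=2)
print(attempts, reply)                # C succeeds on its third attempt
```

Node C is never served in the middle of the transaction between A and B; it only gets through once that transaction has finished.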

Head Node

Another method for preventing race conditions is to have the Head Node of a sharing list perform many of the coherence actions. As a result, only one node performs actions such as writes and invalidations of other sharers, which decreases the possibility of concurrent actions. If another node wants to write, for instance, it must become the Head Node of the sharing list for the cache line to which it wants to write, displacing the current Head Node as the node that can write.

When the nodes simply share a read-only copy of a line, as when the memory is in the FRESH state, the Head Node is somewhat irrelevant, since all the sharing nodes have their own cached copy of the line. Likewise, when a sharing list shares a line of data in the dirty or GONE memory state, all the sharing nodes stay in the list with their cached line as long as the Head Node has not written to the line. However, at the point the Head Node wants to write, it can write its data immediately, but it must then invalidate all other shared copies via the forward pointers in the sharing list.

This, however, does not protect against a race condition in which the memory is FRESH and the Head Node wants to write while another node wants to join the list. The Memory Access mechanism prevents this condition.
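The role of the Head Node can be sketched as a singly linked list in which only the head may write. This is a simplified illustration, not the SCI data structure: `Sharer`, `SharingList`, `join`, and `write` are hypothetical names, memory is stood in for by an initial value of 0, and backward pointers and memory states are left out.

```python
class Sharer:
    """Hypothetical sharing-list entry holding one cached copy of the line."""

    def __init__(self, name, value):
        self.name = name
        self.value = value
        self.valid = True
        self.forward = None          # next sharer, toward the tail


class SharingList:
    def __init__(self):
        self.head = None

    def join(self, name):
        """A joining node becomes the new head, displacing the old one."""
        value = self.head.value if self.head else 0   # 0 stands in for memory
        node = Sharer(name, value)
        node.forward = self.head
        self.head = node
        return node

    def write(self, node, value):
        """Only the head may write; it then purges every other sharer."""
        if node is not self.head:
            raise PermissionError(f"{node.name} must become head before writing")
        node.value = value           # the head writes immediately
        purged = []
        cur = node.forward
        while cur is not None:       # walk the forward pointers
            cur.valid = False
            purged.append(cur.name)
            cur = cur.forward
        node.forward = None
        return purged


sharers = SharingList()
a = sharers.join("A")
b = sharers.join("B")
c = sharers.join("C")                # list order is now C -> B -> A
purged = sharers.write(c, 42)        # head writes, then invalidates B and A
print(purged)
```

A write attempted by `a` or `b` in this sketch raises `PermissionError`, which mirrors the rule that a node must first become the Head Node of the list before it can write.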