CSC/ECE 517 Fall 2009/wiki3 2 pp

Clone detection and clone manipulation

The DRY principle says that a particular code fragment should not be repeated more than once in a program. But it happens. And when it does, it is good to be able to find the multiple code "clones" because Software cloning complicates the maintenance process by giving the maintainers unnecessary code to examine. As per Burd, it seems that when presented with the challenge of adding new functionality the natural instinct of a programmer is to copy, paste and modify the existing code to meet the new requirements and thus creating a software clone. While the basis behind such an approach is uncertain, one possible reason is due to time restrictions on maintainers to complete the maintenance change. Duccase points out that “making a code fragment is simpler and faster than writing from scratch” and that if a programmer’s pay is related to the amount of code they produce then the proliferation of software clones will continue.

Once a clone is created it is effectively lost within the source code and so both clones must therefore be maintained as separate units despite their similarities. Komondoor states that if errors are identified within one clone then it is likely that modifications may be necessary to the other counter-part clones. Detection is therefore required if any of the clones are to be re-identified to assist the maintenance process. So if clones can be detected then the similarities can be exploited and replaced during preventative maintenance with a new single code unit this will eliminate the problems identified above.

There are a good number of clone detection tools available both commercially and within academia. Within these tools several different approaches to software clone detection have been implemented, including string analysis, program slicing, metric analysis and abstract tree comparisons. This page will survey the a set of clone detection tools and compare them.

Clone Detection Techniques

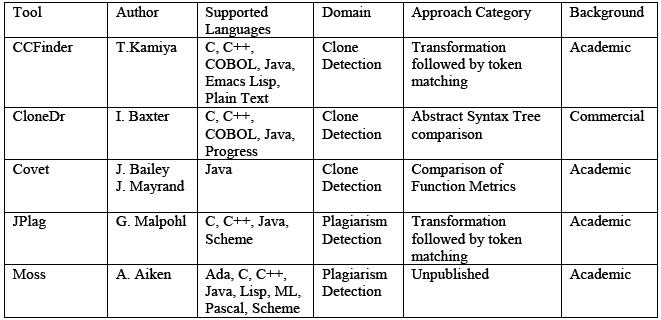

This article will be primarily focusing on below five established detection tools; JPlag, MOSS, Covet, CCFinder and CloneDr. Extract Method Refactoring used in Eclipse IDE will also be visited briefly. JPlag and MOSS are web-based academic tools for detecting plagiarism in student's source code. CloneDr and CCFinder are stand alone tools looking at code duplication in general.

Figure 1 summarizes the clone detection tools. The languages supported by the analysis process are highlighted, as is the analysis approach. The column labeled domain highlights the main purpose of the tools for either clone detection or for plagiarism detection.

CCFinder

CCFinder focuses on analyzing large-scale systems with a limited amount of language dependence. It transforms the source code into tokens. CCFinder aims to identify "portions of interest (but syntactically not exactly identical structures)". After the string is tokenised a token-by-token matching algorithms is performed. CCFinder also provides a dotplotting visualisation tool that allows visual recognition of matches within large amounts of code.

CloneDr

CloneDr analyses software at the syntactic level to produce abstract syntax tree (AST) representations. A series of algorithms are then applied to the tree to detect clones. The first algorithm searches for sub-tree matches within the ASTs. Then a “sequence detection” algorithm attempts to detect “variable size sequences of sub-tree clones”. A third algorithm uses combinations of previously detected clones and looks for “more complex near-miss clones”. The final clone set includes the clones detected in the second and third algorithms. CloneDr can automatically replace cloned code by producing a functionally equivalent subroutine or macro.

Covet

Covet uses a number of the metrics as defined by Mayrand. These metrics were selected by taking known clones and identifying which of the Datrix metrics best highlighted the known clone set. Covet does not apply the same scale of clone likelihood classification as Mayrand. Rather within Covet this is simplified; there is no scale of clone, functions are either classed as clones or distinct. The tool is still in the prototype stages and is not capable of processing industrial sized programs.

JPlag

JPlag uses tokenised substring matching to determine similarity in source code. Its specific purpose is to detect plagiarism within academic institutions. Firstly the source code is translated into tokens (this requires a language dependent process). JPlag aims to tokenise in such way that the "essence" of a program is captured and so can be effective for catching copied functionality. Once converted the tokenised strings are compared to detect the percentage of matching tokens which is used as a similarity value. JPlag is an online service freely available to academia.

MOSS

MOSS Aiken does not publish the method MOSS uses to detect source code plagiarism, as its ability to detect plagiarism may be compromised. Moss like JPlag is an online service provided freely for academic use. Source code is submitted via a perl script and then the results are posted on the MOSS’s webpage. Users are emailed a url of the results.

Extract Method Refactoring

Extract Method has been recognized as one of the most important refactorings, since it decomposes large methods and can be used in combination with other refactorings for fixing a variety of design problems. However, existing tools and methodologies support extraction of methods based on a set of statements selected by the user in the original method. The goal of the methodology proposed by Tsantalis is to automatically identify Extract Method refactoring opportunities and present them as suggestions to the designer of an object oriented system.

Method extraction has a positive effect on maintenance, since it simplifies the code by breaking large methods into smaller ones and creates new methods which can be reused. Method extraction techniques are based on the concept of program slicing. According to Weiser [11], a slice consists of all the statements in a program that may affect the value of a variable x at a specific point of interest p. The pair (p, x) is referred to as slicing criterion. In general, slices are computed by finding sets of directly or indirectly relevant statements based on control and data dependencies.

Comparing evaluation results

Burd performed the experiemental evaluation on these tools and the results of the analysis process was used to investigate which of the tools were best suited to assist the process of software maintenance in general and specifically preventative maintenance. The experiment results were obtained using two metrics; precision and recall. Also the results are gathered by performing detecting replication within a single program and replication across distinct programs. Precision is the measure to the extent to which the clones identified by the tool are extraneous or irrelevant. Recall is a measure to the extent to which the clones identified by the tool matches the clone base within the application being evaluated.

With this, Burd performed the analysis on the results by setting below six categories.

- Output of a high proportion or all of the clones present within the code - CCFinder identified more clones than the other tools but the greater proportion of these clones identified was across files. Proportionally CloneDr identified more clones that were internally replicated within a file. However, the most predictive assessment of this requirement is the metric of recall being to percentage of the clones identified from the total known set. CCFinder identified the greatest total number of clones, thus resulting in the highest level of recall 72%.

- Output of a low proportion or no incorrectly identified clones - CloneDr was the only tool who provided perfect precision, thereby identifying no false positive matches, and therefore not resulting in the incurring of wasted maintenance effort. This is due to the automation process for clone removal; if it can’t be automatically removed then its not identified as a clone.

- Matching and output of clones with a high frequencies of replication - The results of the analysis process showed that Covet followed by CCFinder best satisfied this requirement. However, the benefits CloneDr’s ability to automatically conduct an automated clone replacement process should be not underestimated.

- Output of clones that are large in terms of lines of code - The largest clone identified was by Covet at 123 LOC, but the tools generating the largest mean for all clones was JPlag. Overall, however, all tools showed fairly similar performance levels.

- Output of clones that can be modified or removed with minimum impact to the application - CloneDr was the only tool that could be tested since only that provides automatic clone removal.

- Ease of usability of the tool - There was no analysis performed towards this and hence no evaluation was performed.

The results have identified that there is no single and outright winner for clone detection for preventive maintenance. Each tool had some factors that may ultimately prove useful to the maintainer.

References

[1] Evaluating clone detection tools for use during preventative maintenance

[2] Investigating the maintenance implications of the replication of code

[3] A Language Independent Approach for Detecting Duplicated Code

[4] Using Slicing to Identify Duplication in Source Code

[5] Experiment on the automatic detection of function clones in a software system using metrics

[6] Clone detection using abstract syntax trees

[7] Maintenance Support Tools for JAVA Programs: CCFinder and JAAT

[8] Finding Plagiarisms among a Set of Programs with JPlag

[9] A System for Detecting Software Plagiarism

[10] Identification of Extract Method Refactoring Opportunities

[11] M. Weiser, "Program Slicing," IEEE Transactions on Software Engineering, vol. 10, no. 4, pp. 352-357, 1984.