CSC/ECE 506 Spring 2015/5b SA: Difference between revisions

| Line 65: | Line 65: | ||

</code> | </code> | ||

In the above code snippet, the linked list itself is considered as a stack of elements. In the concrete example above the immutable Lists from the '''Scala standard library''' has been used. Since, multiple workers will work on the elements of this list, we need to have atomic read and '''Compare-and-Set''' or CAS operations. | |||

The JVM defines volatile variables the writes to which are immediately visible to | |||

all other threads. In Java these variables are defined with the volatile keyword | |||

before the declaration, and in Scala with the ''@volatile'' annotation. Whenever we read a volatile variable x, the notation READ(x) is used in the pseudocode, to make it explicit that this read is atomic, i.e. it can represent a | |||

linearization point. When writing the value v to the volatile variable, the notation WRITE(x, v) is used. | |||

A special compare-and-set or CAS instruction atomically checks if the target memory | |||

location is equal to the specified expected value and, if it is, writes the new value, returning | |||

true. Otherwise, it returns false. It is basically equivalent to the corresponding | |||

synchronized block, but more efficient on most computer architectures. | |||

The stack is manipulated using atomic READ and CAS operations to ensure that every element is assigned to exactly one worker. After | |||

reading the stack field in line '''''val stack = inv.READ''''' , every worker checks whether it is empty, indicating | |||

there is no more work. If the list is non-empty, every worker attempts to replace the | |||

current list by its tail with the CAS line '''''if (inv.CAS(stack, tail)) workOn(stack)'''''. Success means that only that worker replaced, so he gains ownership of that element – he must call workOn to process the element. If the CAS is not successful, the worker retries by calling the tail-recursive compute again. | |||

The algorithm is correct – a successful CAS in line 89 guarantees that the stack field has | |||

not changed its value since the READ in line 84. No linked list suffix will ever appear | |||

more than once in the field stack because there are no more loops in the linked list | |||

when following the tail pointer. Furthermore, every linked list node will appear in the | |||

stack field at least once since the list is connected. Together, these properties ensure | |||

that every linked list element is assigned to exactly one worker. | |||

The algorithm shown in above is lock-free – although many threads compete to | |||

update the field stack at the same time, after a certain finite number of steps some | |||

thread will always complete its operation. In general this number of steps can depend | |||

on the size of the data structure or the number of competing workers, but in this case | |||

the number of steps is constant and equals the number of instructions between two | |||

consecutive CAS calls. | |||

== ''Example 2 Parallelized Queue Linked List'' == | == ''Example 2 Parallelized Queue Linked List'' == | ||

Revision as of 23:26, 25 February 2015

Introduction

Parallelization of computations in the presence of dynamic data structures has shown immense potential. Here we discuss how can we parallelize linked data structures. Efficiently exploiting parallelism is fundamental in parallel computing even in the context of dynamic data structures. Some amount of speculation is needed to figure out how to exploit it the best. The idea is to introduce concurrency in the existing data structures to enable multi-threading and hence parallelism. We discuss the major steps in parallelizing data structures and then discuss some techniques and algorithms existing in the literature to gain more insight into the process.

Overview

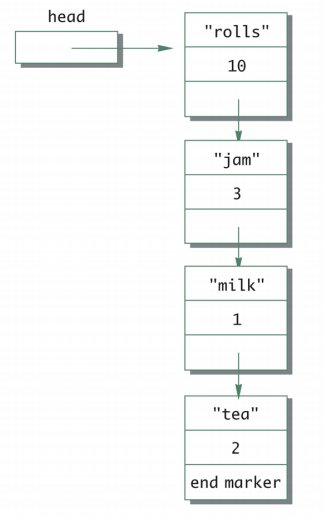

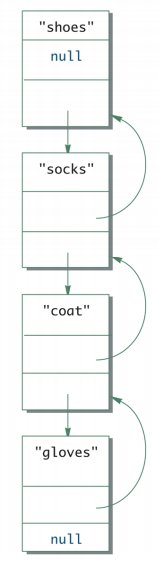

The simplest linked data structure we can think of is a linked list. A single linked list with one pointer pointing to the next node in the list or double linked list where nodes have two pointers pointing to the adjacent nodes to its left and right in the linked list are the most commonly used linked data structures. Following figures are some examples of the same. The first shows the head node to a singly linked list and the second shows a double linked list.

Linked Data Structure parallelization

Unlike data structures like arrays, linked lists are unsuitable for naive parallelization methods which employ divide and conquer policy, they split the input and assign them to multiple workers for parallel processing. Splitting an arbitrary linked list requires traversing half of its elements. For too many operation instances such an O(n) splitting is unacceptable. Hence, intuitively, it is more efficient to convert the linked list into an array and then use splitters on the array. One thing to be noticed is that these data structures does not retain information about the relative order of elements, hence the operators applied to their data-parallel operations need to be commutative.

As mentioned before, a linked list data structure consists of a set of nodes such that each node points to another node in the set. A special node in this set is considered to be the head of the linked list. We consider connected linked lists without loops, which means every node can be reached from the head, no two nodes point to the same node and no node points at the root.

Example 1 Parallelized Linked List

The following code snippet shows a parallelized linked list. [2]

:class Invocation[T](xs: List[T]) {

::@volatile var stack = xs

::def READ = unsafe.getObjectVolatile(this, OFFSET)

::def CAS(ov: List[T], nv: List[T]) = unsafe.compareAndSwapObject(this, OFFSET, ov, nv)

:}

:abstract class ListTask[T] extends RecursiveTask[T] {

::val inv: Invocation[T]

::def workOn(elem: T): Unit

::def compute() {

::: val stack = inv.READ

::: stack match {

:::: case Nil =>

::::// no more work

::: case head :: tail =>

:::: if (inv.CAS(stack, tail)) workOn(stack)

:::: compute()

:::}

::}

:}

:class ListForeach[T, U](f: T => U, val inv: Invocation[T]) extends ListTask[T] {

:: def workOn(elem: T) = f(elem)

:}

:implicit class ListOps[T](val par: Par[List[T]]) {

::def foreach[U](f: T => U) = {

:::val inv = new Invocation(par.seq)

:::val tasks = for (i <- 0 until P) yield new ListForeach[T](f, inv)

:::for (t <- tasks) t.fork()

:::for (t <- tasks) t.join()

::}

:}

In the above code snippet, the linked list itself is considered as a stack of elements. In the concrete example above the immutable Lists from the Scala standard library has been used. Since, multiple workers will work on the elements of this list, we need to have atomic read and Compare-and-Set or CAS operations.

The JVM defines volatile variables the writes to which are immediately visible to all other threads. In Java these variables are defined with the volatile keyword before the declaration, and in Scala with the @volatile annotation. Whenever we read a volatile variable x, the notation READ(x) is used in the pseudocode, to make it explicit that this read is atomic, i.e. it can represent a linearization point. When writing the value v to the volatile variable, the notation WRITE(x, v) is used. A special compare-and-set or CAS instruction atomically checks if the target memory location is equal to the specified expected value and, if it is, writes the new value, returning true. Otherwise, it returns false. It is basically equivalent to the corresponding synchronized block, but more efficient on most computer architectures.

The stack is manipulated using atomic READ and CAS operations to ensure that every element is assigned to exactly one worker. After

reading the stack field in line val stack = inv.READ , every worker checks whether it is empty, indicating

there is no more work. If the list is non-empty, every worker attempts to replace the

current list by its tail with the CAS line if (inv.CAS(stack, tail)) workOn(stack). Success means that only that worker replaced, so he gains ownership of that element – he must call workOn to process the element. If the CAS is not successful, the worker retries by calling the tail-recursive compute again.

The algorithm is correct – a successful CAS in line 89 guarantees that the stack field has

not changed its value since the READ in line 84. No linked list suffix will ever appear

more than once in the field stack because there are no more loops in the linked list

when following the tail pointer. Furthermore, every linked list node will appear in the

stack field at least once since the list is connected. Together, these properties ensure

that every linked list element is assigned to exactly one worker.

The algorithm shown in above is lock-free – although many threads compete to

update the field stack at the same time, after a certain finite number of steps some

thread will always complete its operation. In general this number of steps can depend

on the size of the data structure or the number of competing workers, but in this case

the number of steps is constant and equals the number of instructions between two

consecutive CAS calls.

Example 2 Parallelized Queue Linked List

Now, lets consider the queue data structure based on single linked list. The following code snippet explains the same.

Algorithms

One algorithm to perform parallelization of data structures is PLDS.

Use cases and Applications

Conclusions

References

1. http://idb.csie.ncku.edu.tw/tsengsm/COURSE/Java/Slides/Java-15-Linked_Data_Structure.pdf