CSC/ECE 506 Spring 2014/7b ks: Difference between revisions

No edit summary |

No edit summary |

||

| Line 5: | Line 5: | ||

== Overview == | == Overview == | ||

The cache coherence problem in multiprocessing architectures and their hardware based protocol solutions have received great attention. Another coherence problem in | The cache coherence problem in multiprocessing architectures and their hardware based protocol solutions have received great attention. Another coherence problem in multiprocessing is that of TLBs (Transaction Lookaside Buffers). A TLB is a fully associate hardware cache that maintains virtual to physical mapping of most recently used pages. TLB is used by CPU for fast look up of the physical page frame number for a virtual memory location. The same look up from the page-table for the process (software based construct) is slower. A page may become invalid due to a swap out to memory or may change protection level (read v/s read-write). Maintaining coherence between TLB entries on different processors for the same page (as its state changes) is called TLB coherence problem. This coherence is maintained most often by software based solutions by the operating systems. In this note, we explain a) the background for used of TLB hardware, b) current solutions for maintaining TLB coherence and c) finally a proposal for a unified cache-TLB coherence protocol (that is hardware based). | ||

== Background - Virtual Memory, Paging and TLB == | == Background - Virtual Memory, Paging and TLB == | ||

| Line 28: | Line 28: | ||

A page table typically has an additional bit per entry to indicate whether the frame number is read only or both read-write ('''protection level'''). Another bit '''valid-invalid''' is also added to indicate whether the frame is part of that particular process. | A page table typically has an additional bit per entry to indicate whether the frame number is read only or both read-write ('''protection level'''). Another bit '''valid-invalid''' is also added to indicate whether the frame is part of that particular process. | ||

''' | '''Multilevel page tables''' are often used rather than single page table per process. The first level page table points to the entry in the next level page table and so on until the mapping of virtual to physical page is obtained from the last level page table. | ||

It may be noted that caches typically use "Virtual Indexed Physically Tagged" solution that enables simultaneous look-up of both L-1 cache and TLB. If the page block is not found in L-1 cache, a TLB look-up of address is used to refresh the page block. | It may be noted that caches typically use "Virtual Indexed Physically Tagged" solution that enables simultaneous look-up of both L-1 cache and TLB. If the page block is not found in L-1 cache, a TLB look-up of address is used to refresh the page block. Simultaneous look-up of both L-1 cache and TLB makes the process efficient. | ||

== TLB coherence problem in multiprocessing == | == TLB coherence problem in multiprocessing == | ||

| Line 134: | Line 134: | ||

====Lazy devaluation==== | ====Lazy devaluation==== | ||

In this method, invalidating the TLB | In this method, invalidating the TLB entries is postponed till it is absolutely necessary. The eviction of a page will be postponed till all related TLB entries are invalidated by a system wide TLB flush. The remapping of the page will be done when no TLB contains an entry for the page. If a remapping cannot be stalled all TLBs are flushed so that the change can occur. This approach works well unless processes run in parallel in the same address space. | ||

Lazy devaluation works well when the target systems without excessive TLB flushing. The performance of this approach can be improved by doing a selective invalidation of TLB entries instead of doing a system-wide flushes. <ref> | Lazy devaluation works well when the target systems without excessive TLB flushing. The performance of this approach can be improved by doing a selective invalidation of TLB entries instead of doing a system-wide flushes. <ref> | ||

[http://www.cs.uwaterloo.ca/~brecht/courses/702/Possible-Readings/multiprocessor/tlb-consistency-computer-1990.pdf "Teller, Patricia J. Translation-Lookaside Buffer Consistency"]</ref> | [http://www.cs.uwaterloo.ca/~brecht/courses/702/Possible-Readings/multiprocessor/tlb-consistency-computer-1990.pdf "Teller, Patricia J. Translation-Lookaside Buffer Consistency"]</ref> | ||

| Line 189: | Line 189: | ||

Cache coherence protocols are all-hardware based because this provides a) higher performance and b) decoupling with architectural issues. On the Other hand, the shootdown protocol used for TLB coherence is essentially software based. A hardware based TLB coherence can a) improve TLB coherence performance significantly for high number of processors and b)provide a cleaner interface to the Operating system. | Cache coherence protocols are all-hardware based because this provides a) higher performance and b) decoupling with architectural issues. On the Other hand, the shootdown protocol used for TLB coherence is essentially software based. A hardware based TLB coherence can a) improve TLB coherence performance significantly for high number of processors and b)provide a cleaner interface to the Operating system. | ||

TLB shootdowns are invisible to user applications, although they directly impact user performance. The performance impact depends on - a) position of TLB in memory | TLB shootdowns are invisible to user applications, although they directly impact user performance. The performance impact depends on - a) position of TLB in memory hierarchy, b) shoot down algorithm used, c) number of processors. The position of TLB in memory hierarchy refers to whether the TLB is placed close to processor or is close to memory. Shoot down algorithms trade performance v/s complexity. The performance penalty for shootdown increases with the number of processors as can be seen from Figure-3 that shows latency of the processor issuing shootdown and the ones receiving shootdowns. The effective latency is more than shown in the graphs because of TLB invalidations resulting in extra cycles spent in repopulating the TLB that can happen either from either cache or main memory depending on the case. The latency from TLB shootdowns can be higher for application that use the routine more often. (Refer to Figure-4). | ||

=== UNITD Coherence Implementation === | === UNITD Coherence Implementation === | ||

Revision as of 03:19, 17 March 2014

TLB (Translation Lookaside Buffer) Coherence in Multiprocessing

Overview

The cache coherence problem in multiprocessing architectures and their hardware based protocol solutions have received great attention. Another coherence problem in multiprocessing is that of TLBs (Transaction Lookaside Buffers). A TLB is a fully associate hardware cache that maintains virtual to physical mapping of most recently used pages. TLB is used by CPU for fast look up of the physical page frame number for a virtual memory location. The same look up from the page-table for the process (software based construct) is slower. A page may become invalid due to a swap out to memory or may change protection level (read v/s read-write). Maintaining coherence between TLB entries on different processors for the same page (as its state changes) is called TLB coherence problem. This coherence is maintained most often by software based solutions by the operating systems. In this note, we explain a) the background for used of TLB hardware, b) current solutions for maintaining TLB coherence and c) finally a proposal for a unified cache-TLB coherence protocol (that is hardware based).

Background - Virtual Memory, Paging and TLB

In this section we introduce the basic terminology and the set-up where TLBs are used.

A process running on a CPU has its own view of memory - "Virtual Memory" (one-single space) that is mapped to actual physical memory. Virtual memory management scheme allows programs to exceed the size of physical memory space. Virtual memory operates by executing programs only partially resident in memory while relying on hardware and the operating system to bring the missing items into main memory when needed.

Paging is a memory-management scheme that permits the physical address space of a process to be non-contiguous. The basic method involves breaking physical memory into fixed-size blocks called frames and breaking the virtual memory into blocks of the same size called pages. When a process is to be executed its pages are loaded into any available memory frames from the backing storage (for example-a hard drive).

Each process has a page table that maintains a mapping of virtual pages to physical pages. A page table is a software construct managed by operating system that is kept in main memory. For a process, a pointer to the page table (Page-Table Base Register-PTBR) is stored in a register. Changing page table requires changing only this one register, substantially reducing the context-switch time. (A page table, with virtual page number that is 4 bytes long (32 bits) can point to 2^32 physical page frames. If frame size is 4Kb then a system with 4-byte entries can address 2^44 bytes (or 16 TB) of physical memory).

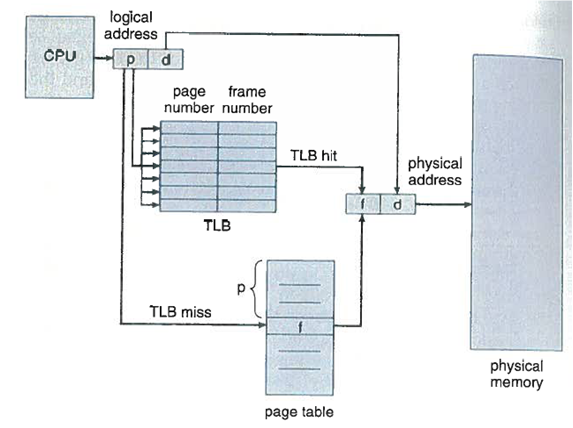

Every address is divided into two parts – a page number (p) and a page offset (d). The page number is used as an index into the page table. The page table contains the base address of each page in physical memory. The base address is combined with the page offset to define the physical memory address that is sent to the memory unit. The problem with this approach is the time required to access a user memory location. If we want to access location, we must first index into the page table (that requires a memory access of PTBR) and then find the frame number from the page table and thereafter access the required frame number. The memory access is slowed down by a factor of 2.

The standard solution to this problem is to use a special, small fast lookup hardware cache called a Translation Look-Aside Buffer (TLB). The TLB is fully associate, high-speed memory. Each entry in TLB consists of two parts –a key (tag) and a value. When the associative memory is presented with an item, the item is compared with all keys simultaneously. If the item is found, the corresponding value field is returned. The search is fast; the hardware, however is expensive. Typically, the number of entries in a TLB is small, often numbering between 64 to 1024.

The TLB is used with page tables in the following way. The TLB contains only a few of the page-table entries. When a logical address is generated by the CPU, its page number is presented to the TLB. If the page number is found its frame number is immediately available and is used to access memory.

If the page number is not in the TLB (known as a TLB miss), a memory reference to the page table must be made. When the frame is obtained, we can use it to access memory. In addition, we add the page number and frame number to the TLB, so that they will be found quickly on the next reference. If the TLB is already full of entries, the operating system must select one for replacement. Replacement policies range from least recently used (LRU) to random.

A page table typically has an additional bit per entry to indicate whether the frame number is read only or both read-write (protection level). Another bit valid-invalid is also added to indicate whether the frame is part of that particular process.

Multilevel page tables are often used rather than single page table per process. The first level page table points to the entry in the next level page table and so on until the mapping of virtual to physical page is obtained from the last level page table.

It may be noted that caches typically use "Virtual Indexed Physically Tagged" solution that enables simultaneous look-up of both L-1 cache and TLB. If the page block is not found in L-1 cache, a TLB look-up of address is used to refresh the page block. Simultaneous look-up of both L-1 cache and TLB makes the process efficient.

TLB coherence problem in multiprocessing

In multiprocessor systems with shared virtual memory and multiple Memory Management Units (MMUs), the mapping of a virtual address to physical address can be simultaneously cached in multiple TLBs. (This happens because of sharing of data across processors and process migration from one processor to another). Since the contents of these mapping can change in response to changes of state (and the related page in memory), multiple copies of a given mapping may pose a consistency problem. Keeping all copies of a mapping descriptor consistent with each other is sometimes referred to as TLB coherence problem.

A TLB entry changes because of the following events - a) A page is swapped in or out (because of context change caused by an interrupt), b) There is a TLB miss, c) A page is referenced by a process for the first time, d) A process terminates and TLB entries for it are no longer needed, e) A protection change, e.g., from read to read-write, f) Mapping changes.

Of these changes a) swap outs, e) protection changes and f) mapping changes lead to TLB coherence problem. A swap-out causes the corresponding virtual-physical page mapping to be no longer valid. (This is indicated by valid/invalid bit in the page table). If the TLB has a mapping from a page block that falls within an invalidated Page Table Entry (PTE), then it needs to be flushed out from all TLBs and should not be used. Further protection level changes for a TLB mapping need to be followed by all processors. Also mapping modification for a TLB entry (where a physical mapping changes for a virtual address) also needs to be seen coherently by all TLBs.

A page’s translation information can have multiple images; one is stored in the page table while the others can be stores in the TLBs. TLB images are used by processes for virtual-to-physical address translation and page tables are used by them to reload TLB or modify translation information. Thus, page table modification can make the TLB entries inconsistent. Since, inconsistent TLB entries can result in erroneous memory accesses, the TLB inconsistency problem must be solved.The inconsistencies occurring to TLBs due to modifications in other page tables can be categorized as safe and unsafe changes. The modify bit that indicates whether a page has been written or not is very crucial. If this is inaccurate, the only accurate representation of a page could be overwritten if it does not also reside in secondary memory. Whereas, the bit that indicates whether a page has been referenced need not be accurate. It at most results in replacing one page instead of the other. This might affect the performance, but doesn’t affect the accuracy like the modify bit does.

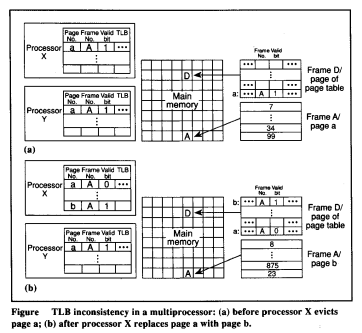

A safe change results from (a) modification of access rights from read-only to read/write or (b) a page is becoming memory-resident. A TLB entry that is inconsistent due to a safe change can be avoided by designing the hardware so that using the entry throws an exception and invokes the appropriate Operating System trap routine that invalidates the TLB entry. Consider an example shown in the figure: Consider the translation information of page a stored in a valid TLB entry is accessed by processor X which defines the page’s protection as read-only. Now, a process executing on processor Y subsequently modifies a’s page-table entry to change the protection from read-only to read/write. When process executing on processor X now attempts to write a, the Operating system finds that the protection has been increased and invalidates the stale TLB entry for a and resumes execution. Thus a is successfully modifies and this results in consistency.An unsafe change causes inconsistencies in the TLB that cannot be detected during program execution. This kind of multiprocessor architecture with multiple TLBs, in addition to a consistency problem between a page table and a TLB, a consistency problem exists among the system’s multiple TLBs and page tables.

Some architectures do not follow TLB coherence for each datum (i.e., TLBs may contain different values). These architectures require TLB coherence only for unsafe changes made to address translations. Unsafe changes include a) mapping modifications, b) decreasing the page privileges (e.g., from read-write to read-only) or c) marking the translation as invalid. The remaining possible changes (e.g., d) increasing page privileges, e) updating the accessed/dirty bits) are considered to be safe and do not require TLB coherence. Consider one core that has a translation marked as read-only in the TLB, while another core updates the translation in the page table to be read-write. This translation update does not have to be immediately visible to the first core. Instead, the first core's TLB can be lazily updated when the core attempts to execute a store instruction; an access violation will occur and the page fault handler can load the updated translation.

A variety of solutions have been used for TLB coherence. Software solutions through operating systems are popular since TLB coherence operations are less frequent than cache coherence operations. The exact solution depends on whether PTEs (Page Table Entries) are loaded into TLBs directly by hardware or under software control. Hardware solutions are used where TLBs are not visible to software.

In the next sections, we cover following approaches to TLB coherence: virtually addressed caches, software TLB shootdown, address space Identifiers, hardware TLB coherence. We also review UNified Instruction/Translation/Data (UNITD) Coherence, a hardware coherence framework that integrates TLB and cache coherence protocol.

TLB Coherence Approaches

TLB coherence refers to the consistency of the information stored in page-map tables (PMTs) with the cached copies of this data in each processor’s TLB. A PMT entry (and its TLB counterpart) store various data elements, including:

- The physical memory frame number for each virtual page resident in main memory.

- A protection field indicating how the page may be addressed (e.g., read-only or read-write).

- A presence bit that indicates whether the page is in main memory.

- A dirty bit that indicates whether the page was modified while in main memory.

- Page reference history used to indicate whether a page has been referenced during some time interval.<ref>

"Teller, Patricia J. Translation-Lookaside Buffer Consistency"</ref>

Consistency among TLBs and PMTs need not be maintained for all types of changes to the above data elements. For example, it is possible to safely modify page reference history in main memory without also updating the TLBs. Updates are therefore categorized as either safe or unsafe. Safe changes include:

- A reduction in page protection (moving access rights to a page from read-only to read/write).

- Setting the presence bit when a page becomes resident in main memory.

It is important to note that these inconsistencies are not ignored but rather are handled downstream by other mechanisms. For example, if the operating system kernel detects a process is attempting to write to a page marked as read-only, it will verify whether the page’s protection settings have been reduced before throwing a protection fault. If the protection settings in main memory have been reduced by another processor, the kernel would invalidate the acting processor’s TLB and retry the operation.

The above example also illustrates why a change to the same data element in the opposite direction (an increase in protection) would be unsafe without a TLB coherence scheme as there would be no mechanism for the operating system to trap the condition and update the processor’s TLB.

Any modifications to the mapping between a virtual page and main memory frame are also unsafe without a TLB coherence mechanism. This is in large part due to the fact that page numbers are formed by splitting a finite address space into discrete units. These pages in turn are mapped to physical memory frames as needed by the operating system. The mapping of a page to a frame is a transient condition and the same page may be mapped to different frames across time. Without a TLB coherence mechanism, it is possible that a processor may attempt to use a stale page-frame mapping to reference a frame that has been reassigned.

TLB coherence approaches are of two types : 1. Approaches requiring Hardware Cache consistency 2. Approaches without hardware cache consistency

Approaches without hardware cache consistency

Virtually Indexed Caches

Virtually indexed caches may be accessed using the virtual memory reference. This approach eliminates the TLB altogether and its associated coherence issue. This approach requires processors to index data cache values using virtual addresses and thereby avoid the need for virtual-physical address translations between the processor and the L1 cache. Ultimately, however, in order to address physical main memory on an L1 miss (or L2 miss depending on the indexing strategy of the L2 cache), translation is still required from the virtual reference to a physical address in memory. In a non-TLB architecture, this translation uses the page mapping tables directly.

Under this approach, PMT entries may be brought into the processor’s data cache. Though the cache coherence protocol in effect will manage consistency across all processor caches, it does not handle consistency between cached PMT entries and the page tables in main memory. Hence, even in the absence of a TLB there remains a coherence problem between data caches and main memory page tables. As PMT entries in main memory are modified by the operating system kernel, the processor data caches must be reconciled by invalidating stale PMT entries.

Since this coherence issues relates more to PMT-data cache coherence (versus TLB coherence), it will not be discussed further in this section.

TLB Shootdown

The TLB Shootdown method is the most widely used TLB coherence approach. The approach has variants that turn on several factors, how many processors participate in the TLB update (the victim list) and the extent of hardware support for the process, which in turn may determine the visibility of the TLB by operating system kernel.

In the discussion below, the initiating processor is defined as the processor servicing the operating system kernel during the course of the PMT update.

Broadly speaking, the shootdown approach includes the following two components:

- A synchronization strategy across all processors during the PMT update. This orchestration begins by the initiating processor locking the page table (or some portion thereof).

- A message broadcasting mechanism that allows the initiating processor to notify other processors that their TLB cache may be out-of-date and to suspend their use of the TLB during the course of the update.

This process is described in detail next followed by a discussion of implementations and their shootdown variants.

Details of the TLB Shootdown

- The initiating processor must first disable local interrupts, preventing another thread from preempting and modifying its TLB. It also clears its active flag for TLB usage. This flag is used to indicate whether a processor is currently using its TLB cache.

- The initiating processor locks the page table it is modifying or potentially some smaller portion of it. This prevents modification to the same page data by other processors.

- The shootdown method is primarily based on software that invalidates TLB entries. The initiating processor must place an inter-processor interrupt (IPI) request to its interrupt controller. The interrupt includes a data structure (IDT) that references the location of the shootdown program and the target TLB entries that should be invalidated. The initiator waits until all processors have responded.

- The recipients of the interrupt respond by disabling their local inter-processor interrupts and invalidating the affected TLB entries or the entire TLB (depending on the implementation) using the shootdown program. The receiving processor then clears its TLB active flag and enters into a wait state after servicing the interrupt. The wait state may be implemented by attempting to gain the same lock held by the initiating processor on the page table or polling shared memory.

- Once the interrupts have completed, the initiating processor makes changes to the PMTs and updates its own TLB cache. Before releasing the page lock, it sets its TLB active flag to true.

- The receiving processors exit their wait state and update their TLB cache and set their TLB active flag.

Modified TLB Shootdown

The Modified TLB shootdown method of TLB coherence allows a processor to modify the table without waiting for other processors to synchronize. A processor using the table need not busy wait while the table is being modified. Also, processors can use a shared page table during a modification, since modifications are performed atomically. This version of TLB shootdown requires extra hardware support, but it is as effective as the original method and performs better.<ref> "Teller, Patricia J. Translation-Lookaside Buffer Consistency"</ref>

Hierarchical TLBs

Hierarchical TLB includes a second level of TLB in the system called the L2 TLB which keeps a larger set of relevant data on the chip. This helps to keep relevant data on the chip for a longer time and improves the reload time of L1 TLBs. Many L1 TLBs can share a single L2 TLB. Having many cores share a single TLB helps improve the performance of the system many fold. Many possible coherence schemes can be used to maintain coherency between TLBs of different levels.<ref> "Address Translation for Manycore Systems" </ref>

Instruction-based Invalidation

Some processors have modified their instruction set to include a TLB invalidate instruction. The instruction is invoked by the operating system kernel to broadcast the modified page address from the initiating processor. The instruction is placed on the shared bus and a snooper on each processor detects the instruction and invalidates the appropriate TLB entries.

Lazy devaluation

In this method, invalidating the TLB entries is postponed till it is absolutely necessary. The eviction of a page will be postponed till all related TLB entries are invalidated by a system wide TLB flush. The remapping of the page will be done when no TLB contains an entry for the page. If a remapping cannot be stalled all TLBs are flushed so that the change can occur. This approach works well unless processes run in parallel in the same address space. Lazy devaluation works well when the target systems without excessive TLB flushing. The performance of this approach can be improved by doing a selective invalidation of TLB entries instead of doing a system-wide flushes. <ref> "Teller, Patricia J. Translation-Lookaside Buffer Consistency"</ref>

Address Space Identifier (ASID) based Approach

The MIPS family of processors assigns each TLB entry a unique identifier called an ASID. The ASID is similar to a process identifier (PID) except that its uniqueness is not guaranteed for the life of a process. Each process is assigned a unique ASID on each processor. Hence, a PID may have many ASIDs. A separate array is used to keep track of this mapping.

When a process modifies a PTE in main memory, the kernel sets all the process’ ASIDs to 0 on the other processors. Note: This does not modify the ASIDs in the TLB entries, only the array that maps a process to a processor.<ref>Culler, David E. et al. Parallel Computer Architecture: A Hardware/Software Approach, 1999, p. 440,441.</ref>

When the kernel find that a process is assigned a zero ASID value on a processor, it assigns it a new ASID. This new ASID will not match the stale TLB entries (and their ASIDs) on that processor, forcing a subsequent TLB invalidation.

Validation Based Approach

Under this approach, TLB invalidation is postponed until memory is accessed. This approach eliminates the need for interrupts and synchronization. Each frame in the page table is assigned a generation count. When a change modifies the mapping between a frame and a virtual page or when the permissions are restricted (e.g., from read-write to read-only), the memory manager modifies the generation count on the frame.

Each TLB entry stores the generation account associated with the page when the TLB entry was created. Should a processor request memory at a virtual page with a stale generation count, the request is denied and the processor is notified to update its TLB entry. <ref> "Teller, Patricia J. Translation-Lookaside Buffer Consistency" </ref>

Implementations

- TLB Shootdown : This algorithm is implemented by in Carnegie Mellon University’s Mach operating system, which has been ported to multiprocessor architectures including the BBN Butterfly, Encore’s Multimax, IBM’s RP3, and Sequent’s Balance and Symmetry systems.

- Modified TLB Shootdown : This method has been implemented in Mach operating system running on RP3.

- Hierarchical TLBs : The Intel Nehalem platform uses a hierarchical TLB.

- Instruction based invalidation : Examples of this include the Intel Itanium architecture (via the ptc.g and ptc.ga instructions) and the tlbivax instruction on IBM’s Power series (for Power ISA 2.06). An example of an operating system that uses the Itanium support for instruction based TLB invalidation is the Linux kernel

- Lazy Devaluation : This approach is used in MIPS R2000 processor running the System 5.3 Unix operating system.

- Address Space Identifier (ASID) based Approach : The IRIX 5.2 operating system uses this mechanism on MIPS as well as the AMD64 operating system.

- Validation Based Approach :

Approaches requiring Hardware Cache consistency

Unified Cache and TLB coherence solution

In this section the salient features of UNified Instruction/Translation/Data (UNITD) Coherence protocol proposed by Romanescu/Lebeck/Sorin/Bracy in their paper <ref>UNified Instruction Translation Data UNITD Coherence One Protocol to Rule Them All, Romanescu/Lebeck/Sorin/Bracy</ref> are discussed. This provides an example of hardware based unified protocol for cache-TLB coherence.

UNITD is a unified hardware coherence framework that integrates TLB coherence into existing cache coherence protocol. In this protocol, the TLBs participate in cache coherence updates without needing any change to existing cache coherence protocol. This protocol is an improvement over the software based shootdown approach for TLB coherence and reduces the performance penalty due to TLB coherence significantly.

Background

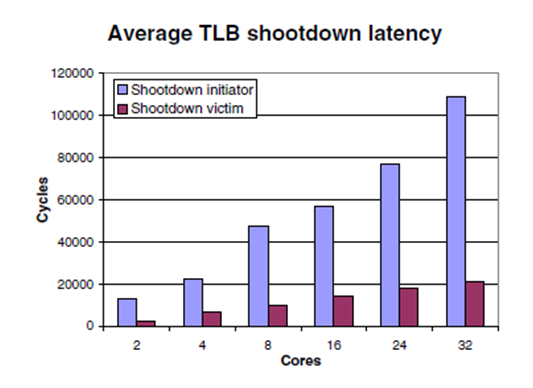

Cache coherence protocols are all-hardware based because this provides a) higher performance and b) decoupling with architectural issues. On the Other hand, the shootdown protocol used for TLB coherence is essentially software based. A hardware based TLB coherence can a) improve TLB coherence performance significantly for high number of processors and b)provide a cleaner interface to the Operating system.

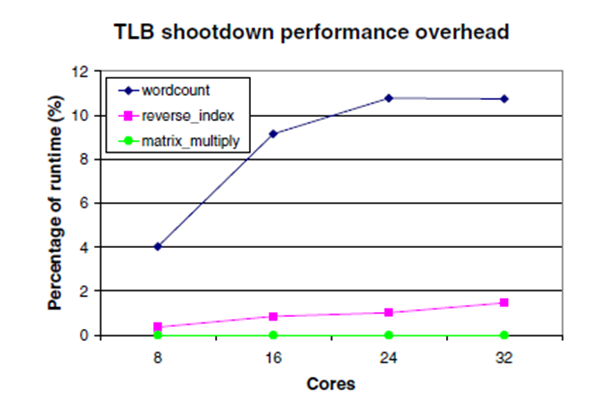

TLB shootdowns are invisible to user applications, although they directly impact user performance. The performance impact depends on - a) position of TLB in memory hierarchy, b) shoot down algorithm used, c) number of processors. The position of TLB in memory hierarchy refers to whether the TLB is placed close to processor or is close to memory. Shoot down algorithms trade performance v/s complexity. The performance penalty for shootdown increases with the number of processors as can be seen from Figure-3 that shows latency of the processor issuing shootdown and the ones receiving shootdowns. The effective latency is more than shown in the graphs because of TLB invalidations resulting in extra cycles spent in repopulating the TLB that can happen either from either cache or main memory depending on the case. The latency from TLB shootdowns can be higher for application that use the routine more often. (Refer to Figure-4).

UNITD Coherence Implementation

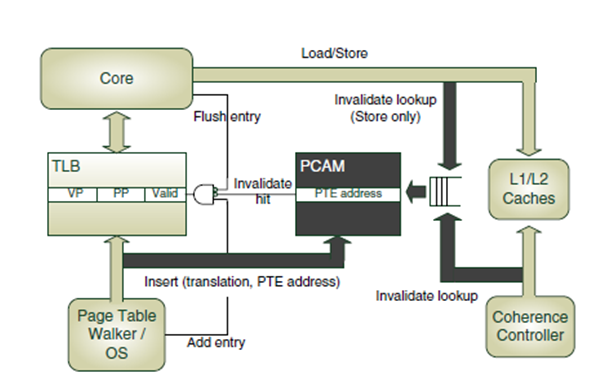

At a high level, UNITD integrates the TLBs into the existing cache coherence protocol (such as typical MOSI -Modified Owned Share Invalid coherence states). TLBs are simply additional caches that participate in the coherence protocol however like read-only instruction caches. UNITD has no impact on the cache coherence protocol and thus does not increase its complexity.

As mentioned before, TLB entries are read-only. (Virtual to physical address mapping are never modified in the TLBs themselves but rather in the page table entries). There are only two coherence states: Shared (read-only) and Invalid. UNITD uses a Valid bit in TLB to maintain an entry’s coherence state. When a translation is inserted into a TLB, it is marked as Shared. The cached translation can be accessed by the local core as long as it is in the Shared state. The translation remains in Shared state until a TLB coherence message invalidates it. Thereafter subsequent memory accesses depending on it will miss in the TLB and reacquire the translation from the memory system.

.

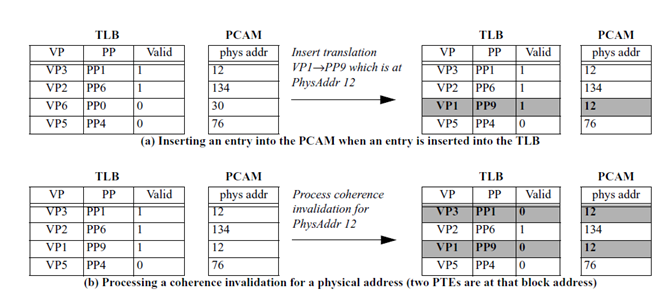

In order to integrate TLB coherence with the existing cache coherence protocol with minimal architectural changes, we relax the correspondence of the translations to the memory block containing the PTE, rather than the PTE itself. Maintaining translation granularity at a coarser grain (i.e., cache block, rather than PTE) trades a small performance penalty for ease of integration. Because multiple PTEs can be placed in the same cache block, the PCAM can hold multiple copies of the same datum. For simplicity, PCAM entries are referred as PTE addresses.

Because the PCAM has the same structure as the TLB, a PTE address is inserted in the PCAM at the same index as its corresponding translation in the TLB (physical address 12 in Figure 2(a) above. Note that there can be multiple PCAM entries with the same physical address, as in Figure 2a; this situation occurs when multiple cached translations correspond to PTEs residing in the same cache block.

PCAM entries are removed as a result of the replacement of the corresponding translation in the TLB or due to an incoming coherence request for read-write access. If a coherence request hits in the PCAM, the Valid bit for the corresponding TLB entry is cleared. If multiple TLB translations have the same PTE block address, a PCAM lookup on this block address results in the identification of all associated TLB entries. Figure 2(b) above illustrates a coherence invalidation of physical address 12 that hits in two PCAM entries.

Performance

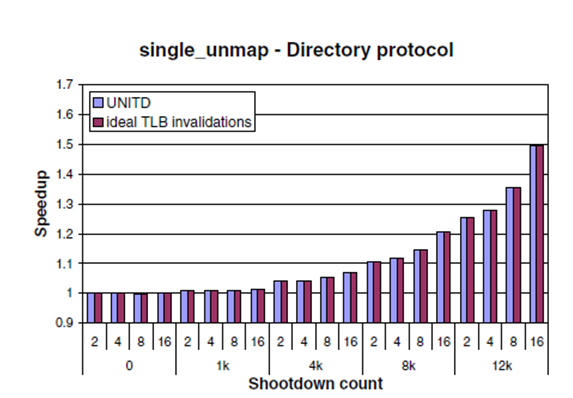

The performance of the UNITD protocol is compared to a) a baseline system that relies on TLB shootdowns and b) to a system with ideal (zero-latency) translation invalidations. (The ideal-invalidation system uses the same modified OS as UNITD (i.e., with no TLB shootdown support) and verifies that a translation is coherent whenever it is accessed in the TLB. The validation is done in the background and has no performance impact).

UNITD is efficient in ensuring translation coherence, as it performs as well as the system with ideal TLB invalidations. UNITD speedups increase with the number of TLB shootdowns and with the number of cores. Third, as expected, UNITD has no impact on performance in the absence of TLB shootdowns. (Refer to the graph below).

Definitions

- PMT(Page Mapping Table)

- This is an in-memory data structure that primarily helps the operating system map two address spaces two one another: virtual memory and physical memory. In addition to this mapping information, the PMT also stores additional data fields to keep track of whether the virtual memory has been loaded in physical memory, permissions and utilization metrics. Coupled with the TLB, the PMT is a critical component of virtual memory systems. Learn more about page tables here.

- PTE (Page Table Entry)

- A row in a page mapping table that relates a single page in virtual memory to a physical memory address or a page frame.

- TLB(Translation Look-Aside Buffer)

- Associative, high-speed memory used to cache page table information and speed up virtual memory references from the processor.

- Presence Bit

- One of the data elements in each PMT entry. Indicates whether the page is loaded in main memory (versus stored to disk).

- Safe Change

- A type of update that can be made to a PMT safely without also updating the cached TLB counterpart. A safe change results from (a) modification of access rights from read-only to read/write or (b) a page is becoming memory-resident.

- TLB Coherence Strategy

- A process or system for maintaining the consistency of information between the processor TLBs and the PMT entries.

- IDT (Interrupt Descriptor Table)

- A vector-based data structure used to communicate information between processors during an inter-processor interrupt.

- Interrupt

- A hardware or software based method for signalling to a processor that there is work for it.

- ASID (Address Space Identifier)

- A number assigned to process that is unique within the scope of a processor for a specified time period. A process may be assigned multiple ASIDs across time. Used with the TLB entries, the ASID helps the discard stale TLB entries.

- Hierarchical TLBs

- Is analogus concept for TLBS as multilevel L1 and L2 cache

- Page Table Base Register

- Pointer to the page table location is maintained in this register.

- UNITD protocol

- A protocol that combines TLB and existing cache coherence protocols (proposed by Romanescu/Lebeck/Sorin/Bracy)

- Validation based approach

- In this approach TLB entries are not updated until they are used.

- Virtual Index Cache

- Cache that stores data based on virtual page number.

- TLB Shootdown

- Software based TLB coherence method that requires one processor to shoot-down/disable TLB entries in other processors before providing them with a new TLB entry

Textbook References

1. Operating System Concepts - Silberschatz, Galvin, Gagne. Seventh Edition, Wiley publication, 2005

2. Fundamentals of Parallel Computer Architecture, Yan Solihin, 2008-2009

3. Culler, David E. et al. Parallel Computer Architecture: A Hardware/Software Approach, 1999, Gulf Professional Publishing

4. Microprocessor Memory Management Unit, Milan Milenkovic, IEEE, Vol10, Issue-2, 1990, Pages 70-85

Internet References

<references>

</references>

Quiz

1. Page Table is maintained as a software construct (choose one answer)

- a) True

- b) False

2. TLB keeps a mapping of ___ to ____

- a) Virtual Page to Physical Page

- b) Virtual Address to Physical Address

- c) Physical Page to Virtual Page

- d) Physical Address to Virtual Address

3. Currently TLB coherence is most often achieved through

- a) Software solutions

- b) Hardware solutions

4. UNITD is (choose one answer)

- a) A protocol for TLB coherence only

- b) A protocol for cache coherence only

- c) A protocol for cache and TLB coherence

5. Which of the following approaches provides a solution for TLB coherence (choose at least one)

- a) Virtual Cache address

- b) Shootdown

- c) Invalidation

- d) Hardware solutions

6. What messaging implementation is typically used with TLB Shootdown: (choose one answer)

- a) TLB active flag

- b) Hardware instructions

- c) Inter-Processor Interrupts

- d) PMT entries in main memory

7. Which data elements of a PMT entry are unsafe without a TLB coherence scheme: (choose all that apply)

- a) Increase in protection level;

- b) Changes to virtual-physical address mappings.

- c) Page table reference counts

- d) Presence bit

8. An ASID refers to: (choose one answer)

- a) The unique ID of a process for its lifetime

- b) The unique ID of a process-processor pair at a point in time

- c) The unique ID of a PMT entry

- d) The unique ID of a page

9. If Virtually Indexed data caches avoid the use of TLBs, where is the coherence issue identified? (choose one answer)

- a) Between PMT entries in main memory and the disk storage

- b) Between PMT entries in main memory and processor registers

- c) Between PMT entries in main memory and the instruction cache

- d) Between PMT entries in main memory and the data cache

10. How does the initiating processor prevent updates to the PMT entries by other processors during a PMT update? (Choose one answer)

- a) Via a shared memory semaphore

- b) Via the interrupt IDC

- c) Via a hardware instruction

- d) Via a lock to the page table in main memory

Answers 1-a, 2-a, 3-a, 4-c, 5-a,b,c,d, 6-c, 7-a,b, 8-b, 9-d, 10-d