CSC/ECE 517 Fall 2013/oss ssv: Difference between revisions

Revision as of 20:00, 30 October 2013

Refactoring and testing — degree_of_relevance.rb

Introduction

The class in degree_of_relevance.rb is used to calculate how relevant one piece of text is to another. It evaluates the relevance between the submitted work and a review (or metareview) for an assignment. Evaluating reviews matters because a review that is not relevant to the submission is considered invalid and does not affect the student's grade. The class contains a lot of duplicated code and has long, complex methods. It has been assigned the grade "F" according to metrics such as code complexity, duplication and lines of code per method. Since this class is important for research on Expertiza, it should be refactored to reduce its complexity and duplication and to introduce coding best practices. Our task for this project is to refactor this class and test it thoroughly. The class can be found at the following location in the Expertiza source code: Expertiza\expertiza\app\models\automated_metareview

Theory of Relevance

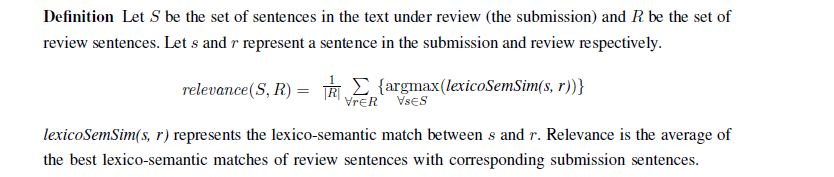

Relevance between two texts can be defined as:

The degree_of_relevance.rb file calculates the relevance between submission and review graphs. The algorithm in the file implements a variant of dependency graphs called the word-order graph, which captures both the governance and ordering information crucial to obtaining more accurate results.

Word-order graph generation

Vertices represent noun, verb, adjective or adverbial words or phrases in a text, and edges represent relationships between vertices. The first step in graph generation is dividing the input text into segments on the basis of a predefined list of punctuation. The text is then tagged with part-of-speech information, which is used to determine how to group words into phrases while still maintaining their order. The Stanford NLP POS tagger is used to generate the tagged text. The vertices and edges are created using the algorithm below. Graph edges that are subsequently created are then labeled with dependency (word-modifier) information.
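The grouping step described above can be sketched roughly as follows. This is a simplified illustration, not the Expertiza implementation: it assumes the text has already been segmented and tagged with Penn Treebank style tags, and the names SketchVertex, SketchEdge, coarse_type and build_word_order_graph are hypothetical. Dependency labels are left out.

```ruby
# Hypothetical, simplified word-order graph builder (not the Expertiza code).
SketchVertex = Struct.new(:node_id, :name, :type)
SketchEdge   = Struct.new(:in_vertex, :out_vertex)

# Map Penn Treebank tag prefixes to the four vertex categories named in the text.
def coarse_type(tag)
  case tag
  when /\ANN/ then :noun
  when /\AVB/ then :verb
  when /\AJJ/ then :adjective
  when /\ARB/ then :adverb
  end
end

# tagged: array of [word, pos_tag] pairs for one text segment.
# Consecutive tokens of the same category are merged into one phrase vertex,
# and an edge links each vertex to the next one to preserve word order.
def build_word_order_graph(tagged)
  vertices = []
  prev_type = nil
  tagged.each do |word, tag|
    type = coarse_type(tag)
    if type && type == prev_type
      vertices.last.name += " #{word}"          # extend the current phrase
    elsif type
      vertices << SketchVertex.new(vertices.size, word, type)
    end
    prev_type = type                            # other tags (e.g. DT) break a phrase
  end
  edges = vertices.each_cons(2).map { |a, b| SketchEdge.new(a, b) }
  [vertices, edges]
end

tagged = [["The", "DT"], ["student", "NN"], ["wrote", "VBD"],
          ["clear", "JJ"], ["unit", "NN"], ["tests", "NNS"]]
vertices, edges = build_word_order_graph(tagged)
```

In this sketch "unit" and "tests" end up in a single noun vertex, and each edge records which phrase precedes which.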

WordNet is used to identify semantic relatedness between two terms. The comparison between reviews and submissions involves checking for relatedness across verb, adjective or adverbial forms, checking for cases of nominalization (noun forms of adjectives), etc.

Lexico-Semantic Graph-based Matching

Phrase or token matching

In phrase or token matching, vertices containing phrases or tokens are compared across graphs. This matching succeeds in capturing semantic relatedness between single or compound words.

Context matching

Context matching compares edges with same and different syntax, and edges of different types across two text graphs. We refer to the match as context matching since contiguous phrases, which capture additional context information, are chosen from a graph for comparison with those in another. Edge labels capture grammatical relations, and play an important role in matching.

r(e) and s(e) refer to review and submission edges. The formula calculates the average for each of the above three types of matches: ordered, lexical and nominalized. Er and Es represent the sets of review and submission edges.

Sentence structure matching

Sentence structure matching compares double edges (two contiguous edges), which constitute a complete segment (e.g. subject–verb–object), across graphs. In this work we consider only single and double edges, and not more contiguous edges (triple edges etc.), for text matching. The matching captures similarity across segments and it captures voice changes.

r(t) and s(t) refer to double edges, and Tr and Ts are the number of double edges in the review and submission texts respectively. matchord and matchvoice are the averages of the best ordered and voice change matches.

Existing Design

The current design has a method get_relevance which is a single entry point to degree_of_relevance.rb. It takes as input submission and review graphs in array form along with other important parameters. The algorithm is broken down into different parts each of which is handled by a different helper method. The results obtained from these methods are used in the following formula to obtain degree of relevance.

The implementation in Expertiza leaves room for applying separate weights to different types of matches instead of a fixed value of 0.33 in above formula. For example, a possible set of values could be:

alpha = 0.55 beta = 0.35 gamma = 0.1

The rule generally followed is alpha > beta > gamma
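As a minimal sketch of how such weights could be applied, assuming the relevance is a weighted sum of the three match averages (phrase, context and sentence structure) and that alpha, beta and gamma apply to them in that order. The method name weighted_relevance and the WEIGHTS constant are hypothetical and do not come from the Expertiza code.

```ruby
# Hypothetical weights taken from the example values above (alpha > beta > gamma).
WEIGHTS = { alpha: 0.55, beta: 0.35, gamma: 0.1 }.freeze

# Combine the three match averages into one relevance value.
# Assumption: alpha weights phrase matching, beta context matching,
# gamma sentence structure matching.
def weighted_relevance(phrase_match, context_match, structure_match, weights = WEIGHTS)
  weights[:alpha] * phrase_match +
    weights[:beta] * context_match +
    weights[:gamma] * structure_match
end

equal_weights = { alpha: 0.33, beta: 0.33, gamma: 0.33 }
fixed    = weighted_relevance(0.8, 0.5, 0.2, equal_weights)
weighted = weighted_relevance(0.8, 0.5, 0.2)
```

With these weights, a strong phrase match contributes more to the result than it does under the fixed 0.33 weighting.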

Helper Methods

The helper methods used by get_relevance are:

compare_vertices

This method compares the vertices across the two graphs (submission and review) to identify matches and quantify various metrics. Every vertex of one graph is compared with every vertex of the other to obtain the comparison results.
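The pairwise comparison can be pictured with the sketch below. It is an illustration under stated assumptions, not the project's method: node ids are assumed to be array indices starting at 0, the result is assumed to be the average of each review vertex's best match, and toy_similarity is a stand-in for the WordNet-based similarity used by the real class. All names here are hypothetical.

```ruby
ToyVertex = Struct.new(:node_id, :name)

# Stand-in similarity: 1.0 for identical names (ignoring case), else 0.0.
def toy_similarity(a, b)
  a.name.casecmp?(b.name) ? 1.0 : 0.0
end

# Fill a review x submission matrix of match values and average the best
# match found for each review vertex.
def compare_vertices_sketch(rev_vertices, subm_vertices)
  vertex_match = Array.new(rev_vertices.size) { Array.new(subm_vertices.size, 0.0) }
  best_total = 0.0
  rev_vertices.each do |r|
    subm_vertices.each do |s|
      vertex_match[r.node_id][s.node_id] = toy_similarity(r, s)
    end
    best_total += vertex_match[r.node_id].max || 0.0   # empty row has no best match
  end
  avg = rev_vertices.empty? ? 0.0 : best_total / rev_vertices.size
  [vertex_match, avg]
end

rev  = [ToyVertex.new(0, "tests"), ToyVertex.new(1, "coverage")]
subm = [ToyVertex.new(0, "Tests"), ToyVertex.new(1, "design")]
matrix, average = compare_vertices_sketch(rev, subm)
```

A matrix indexed by review and submission node ids is also the shape in which the edge comparison methods look up vertex matches.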

compare_edges_non_syntax_diff

This method performs SUBJECT-SUBJECT and VERB-VERB, or VERB-VERB and OBJECT-OBJECT, comparisons to identify matches and quantify various metrics. It compares edges of the same type, i.e. SUBJECT-VERB edges are matched with SUBJECT-VERB edges.

compare_edges_syntax_diff

This method handles comparisons between edges of type SUBJECT-VERB and VERB-OBJECT. It also compares SUBJECT-OBJECT and VERB-VERB edges. It compares edges of the same type.

compare_edges_diff_types

This method does comparison between edges of different types. Comparisons related to SUBJECT-VERB, VERB-SUBJECT, OBJECT-VERB, VERB-OBJECT are handled and relevant results returned.

compare_SVO_edges

compare_SVO_diff_syntax

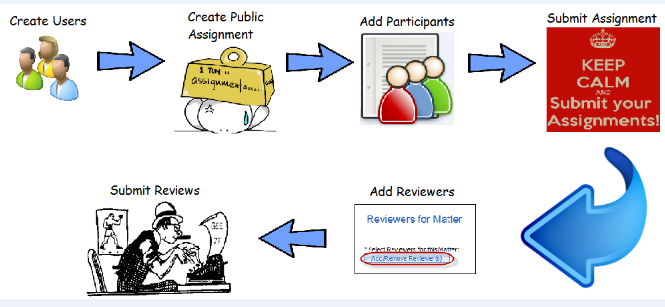

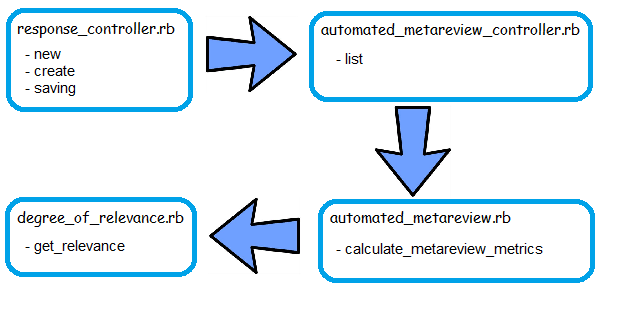

Program Flow

degree_of_relevance.rb sits deep under the models directory, so the tester has to go through several steps before control reaches this file. The diagram below gives a quick look at what needs to be done.

Once a review is submitted, the further flow is as follows:

When a user tries to review an existing work, the new method in response_controller.rb is called, which renders the page where the user fills in the review. On submission, the create method of the same file is called, which then redirects to saving in the same file. Saving calls calculate_metareview_metrics in automated_metareview.rb, which eventually calls get_relevance in degree_of_relevance.rb.

Implementation

New Design and Refactoring

New Design

We have taken ideas from the Template design pattern to improve the design of the class. Although we did not directly implement this design pattern on the class, the idea of defining the skeleton of an algorithm in one class and deferring some steps to subclasses allowed us to come up with a similar design, which segregates different functionality into different classes. The following is a brief outline of the changes made:

- Divided the code into 4 classes to segregate the functionality making a logical separation of code.

- These classes are -

- compare_graph_edges.rb

- compare_graph_svo_edges.rb

- compare_graph_vertices.rb

- degree_of_relevance.rb

- Extracted common code into methods that can be re-used.

- After refactoring the grade according to codeclimate for the class is "C".

Refactoring

Design of classes

The main class degree_of_relevance.rb calculates the scaled relevance using the formula described above. It calculates the relevance based on a comparison of submission and review graphs. As described above, the following types of comparison are made between the graphs, and various metrics are calculated and used to compute the relevance:

- Class compare_graph_edges.rb:

- Comparing edges of graphs with non syntax difference: SUBJECT-VERB edges are compared with SUBJECT-VERB edges, where SUBJECT-SUBJECT and VERB-VERB or VERB-VERB and OBJECT-OBJECT comparisons are done.

- Comparing edges with syntax diff: Compares the edges across the two graphs to identify matches and quantify various metrics. SUBJECT-VERB edges are compared with VERB-OBJECT edges and vice versa, where SUBJECT-OBJECT and VERB-VERB comparisons are done (same type comparisons).

- Comparing edges with diff types: Compares the edges across the two graphs to identify matches and quantify various metrics. SUBJECT-VERB edges are compared with VERB-OBJECT edges and vice versa, where SUBJECT-VERB, VERB-SUBJECT, OBJECT-VERB and VERB-OBJECT comparisons are done (different type comparisons).

All the above functions are grouped in one class - compare_graph_edges.rb.

- Class compare_graph_vertices.rb:

- Comparing vertices of the corresponding graphs: Every vertex is compared with every other vertex. Compares the vertices from across the two graphs to identify matches and quantify various metrics.

This method is factored out to the class - compare_graph_vertices.rb

- Class compare_graph_SVO_edges

- comparing SVO edges.

- compare SVO edges with different syntax.

These methods are grouped in the compare_graph_SVO_edges.rb class.

The main class degree_of_relevance.rb calls each of these methods to get the appropriate metrics required for evaluating relevance.

Types of Refactoring

The following are the three main types of refactoring we have done in our project.

- Move out common code in re-usable method:

The following is a sample of the duplicated code that was present in many methods. We moved it to a separate method and call this method with the appropriate parameters wherever required. It implements a nil check on an edge of the graph and verifies that the ids of the edge's vertices are not invalid (negative).

def edge_nil_cond_check(edge)

return (!edge.nil? && edge.in_vertex.node_id != -1 && edge.out_vertex.node_id != -1)

end
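A small usage sketch of this helper follows. The StubVertex and StubEdge structs are hypothetical stand-ins for the project's graph classes, and only the fields the check reads are modeled.

```ruby
# Hypothetical stand-ins for the graph classes; only the fields used by the check.
StubVertex = Struct.new(:node_id, :name)
StubEdge   = Struct.new(:in_vertex, :out_vertex)

# Same condition as the extracted helper above.
def edge_nil_cond_check(edge)
  !edge.nil? && edge.in_vertex.node_id != -1 && edge.out_vertex.node_id != -1
end

edges = [
  StubEdge.new(StubVertex.new(0, "student"), StubVertex.new(1, "wrote")),
  nil,                                                          # unused slot in the edge array
  StubEdge.new(StubVertex.new(-1, ""), StubVertex.new(2, "tests")) # invalid vertex id
]

usable = edges.select { |e| edge_nil_cond_check(e) }
```

Only the first edge passes: the nil slot and the edge with a negative vertex id are filtered out by the same single call that replaces the repeated three-part condition.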

- Reduce number of lines of code per method.

We ensured that each method is short enough to fit on one screen (no need to scroll).

- Combined conditions together in one statement instead of nesting them inside one another.

- Took out code that can be re-used or logically separated in separate methods.

- Removed commented debug statements.
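To illustrate the first two points, here is a hedged sketch of folding a nested guard into one combined condition. The body of wnet_frequent_word_check is an assumption based on the frequent-word condition in the original code, StubFrequentWords is a hypothetical stand-in for WordnetBasedSimilarity, and usable_edge? is an illustrative name that does not appear in the project.

```ruby
require "set"

# Hypothetical stand-in for WordnetBasedSimilarity with a tiny frequent-word list.
class StubFrequentWords
  FREQUENT = Set.new(%w[the is a of it]).freeze

  def is_frequent_word(word)
    FREQUENT.include?(word.downcase)
  end
end

FwVertex = Struct.new(:node_id, :name)
FwEdge   = Struct.new(:in_vertex, :out_vertex)

# Assumed body: true when both vertices of the edge are frequent words.
def wnet_frequent_word_check(wnet, edge)
  wnet.is_frequent_word(edge.in_vertex.name) &&
    wnet.is_frequent_word(edge.out_vertex.name)
end

# One combined condition instead of an outer `if` with a nested `if ... next`.
def usable_edge?(wnet, edge)
  !edge.nil? &&
    edge.in_vertex.node_id != -1 &&
    edge.out_vertex.node_id != -1 &&
    !wnet_frequent_word_check(wnet, edge)
end

wnet     = StubFrequentWords.new
filler   = FwEdge.new(FwVertex.new(0, "it"), FwVertex.new(1, "is"))
informed = FwEdge.new(FwVertex.new(2, "student"), FwVertex.new(3, "is"))
```

Here usable_edge?(wnet, filler) is false because both words are frequent, while usable_edge?(wnet, informed) is true.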

Code sample before Refactoring:

def compare_edges_diff_types(rev, subm, num_rev_edg, num_sub_edg)

# puts("*****Inside compareEdgesDiffTypes :: numRevEdg :: #{num_rev_edg} numSubEdg:: #{num_sub_edg}")

best_SV_VS_match = Array.new(num_rev_edg){Array.new}

cum_edge_match = 0.0

count = 0

max = 0.0

flag = 0

wnet = WordnetBasedSimilarity.new

for i in (0..num_rev_edg - 1)

if(!rev[i].nil? and rev[i].in_vertex.node_id != -1 and rev[i].out_vertex.node_id != -1)

#skipping edges with frequent words for vertices

if(wnet.is_frequent_word(rev[i].in_vertex.name) and wnet.is_frequent_word(rev[i].out_vertex.name))

next

end

#identifying best match for edges

for j in (0..num_sub_edg - 1)

if(!subm[j].nil? and subm[j].in_vertex.node_id != -1 and subm[j].out_vertex.node_id != -1)

#checking if the subm token is a frequent word

if(wnet.is_frequent_word(subm[j].in_vertex.name) and wnet.is_frequent_word(subm[j].out_vertex.name))

next

end

#for S-V with S-V or V-O with V-O

if(rev[i].in_vertex.type == subm[j].in_vertex.type and rev[i].out_vertex.type == subm[j].out_vertex.type)

#taking each match separately because one or more of the terms may be a frequent word, for which no @vertex_match exists!

sum = 0.0

cou = 0

if(!@vertex_match[rev[i].in_vertex.node_id][subm[j].out_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].in_vertex.node_id][subm[j].out_vertex.node_id]

cou +=1

end

if(!@vertex_match[rev[i].out_vertex.node_id][subm[j].in_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].out_vertex.node_id][subm[j].in_vertex.node_id]

cou +=1

end

if(cou > 0)

best_SV_VS_match[i][j] = sum.to_f/cou.to_f

else

best_SV_VS_match[i][j] = 0.0

end

#-- Vertex and SRL

best_SV_VS_match[i][j] = best_SV_VS_match[i][j]/ compare_labels(rev[i], subm[j])

flag = 1

if(best_SV_VS_match[i][j] > max)

max = best_SV_VS_match[i][j]

end

#for S-V with V-O or V-O with S-V

elsif(rev[i].in_vertex.type == subm[j].out_vertex.type and rev[i].out_vertex.type == subm[j].in_vertex.type)

#taking each match separately because one or more of the terms may be a frequent word, for which no @vertex_match exists!

sum = 0.0

cou = 0

if(!@vertex_match[rev[i].in_vertex.node_id][subm[j].in_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].in_vertex.node_id][subm[j].in_vertex.node_id]

cou +=1

end

if(!@vertex_match[rev[i].out_vertex.node_id][subm[j].out_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].out_vertex.node_id][subm[j].out_vertex.node_id]

cou +=1

end

if(cou > 0)

best_SV_VS_match[i][j] = sum.to_f/cou.to_f

else

best_SV_VS_match[i][j] =0.0

end

flag = 1

if(best_SV_VS_match[i][j] > max)

max = best_SV_VS_match[i][j]

end

end

end #end of the if condition

end #end of the for loop for submission edges

if(flag != 0) #if the review edge had any submission edges with which it was matched, since not all S-V edges might have corresponding V-O edges to match with

# puts("**** Best match for:: #{rev[i].in_vertex.name} - #{rev[i].out_vertex.name} -- #{max}")

cum_edge_match = cum_edge_match + max

count+=1

max = 0.0 #re-initialize

flag = 0

end

end #end of if condition

end #end of for loop for review edges

Code sample after Refactoring :

def compare_edges_diff_types(vertex_match, rev, subm, num_rev_edg, num_sub_edg)

best_SV_VS_match = Array.new(num_rev_edg){Array.new}

cum_edge_match = max = 0.0

count = flag = 0

wnet = WordnetBasedSimilarity.new

for i in (0..num_rev_edg - 1)

if(edge_nil_cond_check(rev[i]) && !wnet_frequent_word_check(wnet, rev[i]))

#identifying best match for edges

for j in (0..num_sub_edg - 1)

if(edge_nil_cond_check(subm[j]) && !wnet_frequent_word_check(wnet, subm[j]))

#for S-V with S-V or V-O with V-O

if(edge_symm_vertex_type_check(rev[i],subm[j]) || edge_asymm_vertex_type_check(rev[i],subm[j]))

if(edge_symm_vertex_type_check(rev[i],subm[j]))

cou, sum = sum_cou_asymm_calculation(vertex_match, rev[i], subm[j])

else

cou, sum = sum_cou_symm_calculation(vertex_match, rev[i], subm[j])

end

max, best_SV_VS_match[i][j] = max_calculation(cou,sum, rev[i], subm[j], max)

flag = 1

end

end #end of the if condition

end #end of the for loop for submission edges

flag_cond_var_set(:cum_edge_match, :max, :count, :flag, binding)

end

end #end of 'for' loop for the review's edges

return calculate_avg_match(cum_edge_match, count)

end #end of the method
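The refactored method relies on several extracted helpers whose bodies are not shown here. The sketch below guesses at three of them from the conditions in the pre-refactoring code. These bodies are assumptions, not the project's actual implementations, and DemoVertex and DemoEdge are hypothetical stand-ins for the graph classes.

```ruby
DemoVertex = Struct.new(:node_id, :type)
DemoEdge   = Struct.new(:in_vertex, :out_vertex)

# Assumed: both edges have the same vertex types in the same positions.
def edge_symm_vertex_type_check(rev_edge, subm_edge)
  rev_edge.in_vertex.type == subm_edge.in_vertex.type &&
    rev_edge.out_vertex.type == subm_edge.out_vertex.type
end

# Assumed: the vertex types match in swapped positions.
def edge_asymm_vertex_type_check(rev_edge, subm_edge)
  rev_edge.in_vertex.type == subm_edge.out_vertex.type &&
    rev_edge.out_vertex.type == subm_edge.in_vertex.type
end

# Assumed: average of the accumulated best matches, 0.0 when nothing matched.
def calculate_avg_match(cum_edge_match, count)
  count > 0 ? cum_edge_match.to_f / count : 0.0
end

sv = DemoEdge.new(DemoVertex.new(0, :subject), DemoVertex.new(1, :verb))
vs = DemoEdge.new(DemoVertex.new(2, :verb), DemoVertex.new(3, :subject))
```

Under these assumptions, sv and vs pass the asymmetric check but not the symmetric one, and calculate_avg_match guards against dividing by zero when no review edge found a match.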

How to run the code

Here is a sample of the refactored code compared with original code.