ECE506 CSC/ECE 506 Spring 2013/11a ad: Difference between revisions


Revision as of 01:25, 19 April 2013

Introduction

According to Solihin (p. 320), a bus-based shared memory organization works well for a relatively small number of processors (8-16). Providing shared memory for a much larger number of processors requires a different organization, because of the physical limitations of the bus. There are two ways to build such a system: Distributed Shared Memory (DSM) or Non-Uniform Memory Access (NUMA). The benefit of DSM and NUMA is that they scale to a larger number of processors. The disadvantage is that scaling in this way may not be the most cost-effective solution (Solihin, p. 320). The remainder of this section discusses the performance of DSMs.

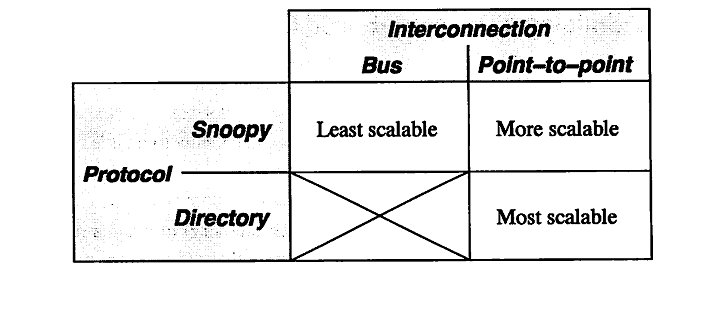

According to Solihin (p. 320), two aspects restrict the scalability of bus-based multiprocessors: the physical limitations of the interconnection and the limitations of the protocol. First, adding a processor to a bus-based system does not change the other physical constraints of the system, but it does reduce the speed of the bus. Second, the protocol needed to maintain coherence does not scale well. As the number of processors in the system increases, the amount of traffic also increases, which risks overwhelming the available bandwidth. Solihin describes a few ways to mitigate this problem and summarizes them in a table on p. 321.

From the table, we can see that there are three ways to scale a multiprocessor system. The first is a single-bus system. This is the least scalable because of the limitations of the bus wire itself: adding processors increases the wire length, which decreases the bus speed, and the growing traffic can overwhelm the bus. The second is a point-to-point bus system. This keeps the bus relatively fast, but since traffic still scales with the number of processors, the system is eventually limited by traffic. The third, and the most scalable to date, is a directory system. The short wires keep the bus fast, and traffic stays low because the directory holds information about which caches hold each block.
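As a rough illustration of why a directory keeps traffic low, the sketch below compares the nodes that receive an invalidation under broadcast with the nodes recorded as sharers in a directory entry. The `DirectoryEntry` class, its fields, and the 64-node system size are assumptions made for this example. They are not structures taken from Solihin.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class DirectoryEntry:
    """Hypothetical per-block state kept at the block's home node."""
    owner: Optional[int] = None                     # node with an exclusive copy, if any
    sharers: Set[int] = field(default_factory=set)  # nodes holding a shared copy


def invalidation_targets_directory(entry, writer):
    """With a directory, only the recorded sharers need an invalidation."""
    return sorted(entry.sharers - {writer})


def invalidation_targets_broadcast(num_nodes, writer):
    """Without a directory, every other node must be told about the write."""
    return [node for node in range(num_nodes) if node != writer]


entry = DirectoryEntry(sharers={2, 5, 9})
directory_msgs = invalidation_targets_directory(entry, writer=5)
broadcast_msgs = invalidation_targets_broadcast(64, writer=5)

print(len(directory_msgs), "messages with a directory")
print(len(broadcast_msgs), "messages with broadcast")
```

In this toy model the directory sends one message per actual sharer, while broadcast traffic grows with the total number of nodes regardless of how many of them cache the block.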

To provide an overview of DSM, all possible platforms must be considered in DSM design. The choice relies on classifying all existing systems into appropriate non-overlapping subsets of systems.

DSM implementation level types:

1 Hardware

2 Software

2.1 Operating system

2.1.1 Inside the kernel

2.1.2 Outside the kernel

2.2 Runtime library routines

2.3 Compiler-inserted primitives

3 Hardware/software combination

The level of DSM implementation affects both the programming model and the overall system performance. While hardware solutions bring total transparency to the programmer and achieve very low access latencies, software solutions can better exploit application behavior and are an ideal testbed for experimenting with new concepts and algorithms. As a consequence, the number of software DSM systems presented in the open literature is considerably higher, but the systems intended to become commercial products and standards are mostly hardware-oriented.

The architectural configuration of the system affects system performance, since it can enable or restrict parallel processing of requests related to DSM management. It also strongly affects scalability. Since a system applying a DSM mechanism is usually organized as a set of clusters connected by an interconnection network, architectural parameters include:

a) Cluster configuration (single/multiple processors, with/without, shared/private, single/multiple level caches, etc.)

b) Interconnection network (bus hierarchy, ring, mesh, hypercube, specific LAN, etc.)

Cluster configuration is usually very important for hardware-oriented proposals that integrate the cache coherence mechanisms at the lower level with the DSM mechanisms at the higher level of the system organization, or that even store all shared data in large caches. Cluster configuration is mostly transparent for software solutions. It also includes the memory organization and the placement of the directory.

Almost all types of interconnection networks found in multiprocessors and distributed systems have also been used in DSM systems. The majority of software-oriented DSM systems were actually built on top of Ethernet, although some of the solutions tend to be architecture-independent and portable to various platforms. On the other hand, topologies such as bus hierarchy or mesh are typical for hardware solutions. The choice of topology can also be very important for the implementation of the DSM algorithm, since it affects the possibility and cost of broadcast and multicast transactions.

The impact of organization on overall system performance is closely related to the locality of data access.

Hardware solutions always deal with non-structured data objects (typically cache blocks), while many software implementations tend to use data items that represent logical entities, in order to take advantage of the locality naturally expressed by the application. On the other hand, some software solutions based on virtual memory mechanisms organize data in larger physical blocks (pages), counting on coarse-grain sharing.

Software DSM<ref>http://csag.ucsd.edu/individual/yskee/publication/parco04.pdf</ref>

Software distributed shared memory (SDSM) systems, which provide a shared address space, have been of great importance to distributed memory architectures.

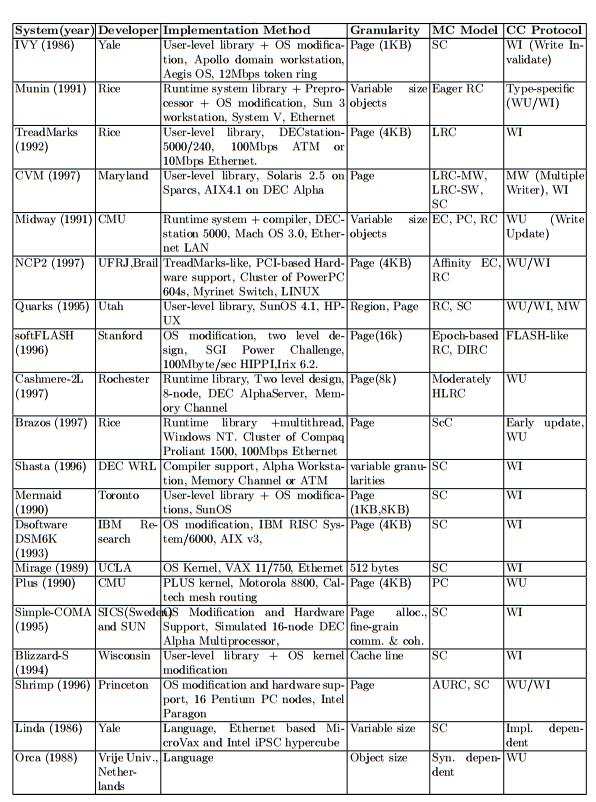

Early SDSM systems like IVY [1], Midway [2], Munin [3], and TreadMarks [4] assume uniprocessor nodes and thus allow only one thread per process on a node. Currently, commodity off-the-shelf microprocessors and network components are widely used as building blocks for parallel computers. This trend has made cluster systems consisting of symmetric multiprocessors (SMPs) attractive platforms for high performance computing. However, the early single-threaded SDSM systems are too restricted to exploit multiprocessors in SMP clusters. The next generation of SDSM systems, like Quarks [5], Brazos [6], DSM-Threads [7], and Murks [8], are aware of multiprocessors and exploit them by means of multiple processes or multiple threads. In general, naive process-based systems experience high context switching overhead and additional inter-process communication delay within a node, so the focus is on multi-threaded SDSM systems. Many single-threaded SDSM systems are implemented at user level by using page fault handling mechanisms.

The SDSM system faces a dilemma when multiple threads compete to access an invalid page within a short interval. On the first access to an invalid page, the system must make the page writable so that it can replace the contents with a valid copy. Unfortunately, this change also allows other application threads to access the same page freely. This phenomenon is known as the atomic page update and change right problem [7] or a race condition [8]. A known solution to this problem, adopted by major multithreaded SDSM systems like TreadMarks [9], Brazos [6], and Strings [10], is to map a file to two different virtual addresses. Even though the file mapping method achieves good performance on some systems, it is not always the best solution. The operating system and working environment may severely affect the performance of these systems. The file mapping method performs poorly in some cases, for example on an IBM SP Night Hawk system running AIX 4.3.3 with PSSP 3.2. Moreover, file mapping has a high initialization cost and reduces the available address space, because the SDSM and the application partition the address space.

The cause of the atomic page update problem is that the SDSM and the application share the same address space. When the SDSM makes a page writable, the page also becomes accessible to the application. A general solution to this problem is to separate the application address space from the system address space for the same physical memory, and to assign different access permissions to each address space.
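The file mapping idea can be sketched in a few lines: the same file is mapped twice in one process, once as a writable "system" view and once as a restricted "application" view. This is only an analogy under stated assumptions. A real SDSM would change page protections on the application view (for example with `mprotect`), which Python's `mmap` module does not expose, so a read-only mapping stands in for the restricted view here. The names `system_view` and `app_view` are made up for this example.

```python
import mmap
import tempfile

PAGE = mmap.PAGESIZE

with tempfile.TemporaryFile() as backing:
    # One page of backing store shared by both views.
    backing.write(b"\x00" * PAGE)
    backing.flush()

    # "System" view: the DSM runtime may update the page through this mapping.
    system_view = mmap.mmap(backing.fileno(), PAGE, access=mmap.ACCESS_WRITE)
    # "Application" view: same physical data, but with more restrictive rights.
    app_view = mmap.mmap(backing.fileno(), PAGE, access=mmap.ACCESS_READ)

    # The runtime installs a valid copy through its own view...
    system_view[:5] = b"valid"
    # ...and the application sees the data through the other mapping.
    seen_by_app = bytes(app_view[:5])

    # The application view cannot be used to modify the page.
    try:
        app_view[0:1] = b"x"
        app_write_blocked = False
    except TypeError:
        app_write_blocked = True

    app_view.close()
    system_view.close()

print(seen_by_app, app_write_blocked)
```

Because the two views have independent permissions, the runtime can fill in a page without opening a window in which application threads could touch a half-updated page.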

These systems implement the shared memory abstraction completely in software on top of message-passing hardware. In terms of performance, software DSMs often exhibit high remote data access latencies when running real parallel applications. Relaxed consistency and multiple-writer coherence are common approaches to mitigating these latencies. Unfortunately, these techniques have not been enough to significantly broaden the class of applications for which software DSMs are efficient. As a result, several other techniques have been proposed to address these high latencies. The best-known of these techniques are prefetching and coherence protocol adaptation to sharing patterns.<ref>http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=01247677</ref>

Hardware Support<ref>http://www.csl.cornell.edu/~espeight/papers/CSL-TR-2000-1008.pdf</ref>

Scalable cache-coherent distributed shared-memory (DSM) machines have received much attention in the literature since the late 1980s. To demonstrate their effectiveness, several cache-coherent non-uniform memory access (CC-NUMA) hardware DSM machines were built in the research community (e.g. DASH [26], Alewife [2], FLASH [22], Typhoon [36]) and commercial machines followed (e.g. SGI Origin 2000 [23], Sun S3.mp [33], Sequent NUMA-Q [29], HP Exemplar [1], Data General Aviion [7]). At the same time, a large research effort produced a set of scientific benchmarks with which to evaluate DSM machines [48]. Most high-performance hardware DSM machines have tightly integrated node or memory controllers that connect the microprocessor both to the memory system and to a proprietary high-speed switching network. The scalable coherence protocols (e.g., [6,14,27,38,41,42]) used in such machines are implemented either in hardware finite-state machines or in software running on an embedded programmable device in the controller. Despite the resulting high performance of these systems, and efforts to show that the additional hardware needed to support hardware DSM in commodity workstations and servers is small [25], high-end PC servers and engineering workstations have yet to integrate the additional functionality needed to build seamless hardware DSM from COTS (commodity off-the-shelf) components.

Performance Concerns

Compared to bus-based shared memory machines, a DSM machine has unique requirements for providing cache coherence and memory consistency, and it relies on an interconnection network instead of a bus. The performance concerns of each of these are discussed in detail below.

Maintaining cache coherence

To maintain correct cache coherence, write propagation and write serialization must be provided, both of which can have adverse effects on performance.

Write serialization requires that all writes to a memory location be seen in the same order by other processors. Earlier, an example was given indicating how write serialization can be violated by observing writes out of order. A naive implementation of write serialization would require that a request and all its messages are performed atomically to avoid overlapping of requests [1, p. 338]. Solihin [1, pp. 342-344] discusses correctness issues that can occur if requests are allowed to overlap without special consideration. A non-overlapping approach requires that each request has defined conditions indicating when it begins and when it ends, so that the home node can observe and wait for its completion before processing other requests to the same block.

The performance concern of disallowing overlapping of requests is that subsequent read or write operations to the same block would be delayed from initiating, even if some of the messages within the requests can be overlapped without correctness concerns.

Another performance problem that can arise through cache coherence is false sharing misses. False sharing can be explained by an example. Suppose two processors have a block cached in the shared state, but processor A is reading and writing a variable x within this block, and processor B is reading and writing a variable y within the same block. Although neither processor is attempting to access the other's variable, both variables map to the same cache block, so each processor either invalidates or sends updates to the other without the other actually needing the data. In a DSM system, these invalidations or updates can unnecessarily consume a significant amount of interconnect bandwidth.
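The example can be made concrete with a small model of a write-invalidate protocol. The 64-byte block size, the addresses chosen for x and y, and the function names are assumptions for illustration. The model counts only invalidations caused by writes and ignores reads.

```python
BLOCK_SIZE = 64  # bytes per cache block (assumed for this example)


def block_of(addr):
    """Map a byte address to the cache block that contains it."""
    return addr // BLOCK_SIZE


def count_invalidations(writes):
    """Count invalidations for a list of (processor, address) writes.

    Simplified write-invalidate model: a write invalidates the copy held
    by any other processor, leaving the writer as the only holder.
    """
    holders = {}  # block number -> set of processors with a valid copy
    invalidations = 0
    for proc, addr in writes:
        blk = block_of(addr)
        others = holders.get(blk, set()) - {proc}
        invalidations += len(others)
        holders[blk] = {proc}
    return invalidations


# A writes x at address 0 and B writes y at address 8: same block.
same_block = [w for _ in range(100) for w in (("A", 0), ("B", 8))]
# Padding y out to address 64 places it in the next block.
padded = [w for _ in range(100) for w in (("A", 0), ("B", 64))]

print(count_invalidations(same_block))
print(count_invalidations(padded))
```

Every write after the first invalidates the other processor's copy when x and y share a block, while moving y into its own block removes the invalidations entirely, at the cost of some unused space.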

Maintaining memory consistency

Depending on the memory consistency model that is being enforced, performance can be lost by having to ensure various degrees of atomicity of memory accesses and program ordering.

For sequential consistency, following program order requires that each thread executes its statements in the order defined by the source code. The implication is that statements within a thread cannot be executed out of order. Compiler and processor optimizations that rely on out-of-order execution to reduce the latency of individual instructions and increase instruction-level parallelism on pipelined architectures must therefore be avoided to varying degrees. [1, p. 293]

For atomicity of memory accesses to be seen by all processors, special considerations need to be made for DSM systems. In general, all processors must be able to detect when a load or a store has completed. For a store (write atomicity) on a bus-based system, completion can be assumed as soon as a read exclusive request reaches the bus. This is because all processors on the bus are guaranteed to see the request and will immediately invalidate their copies, so the next read to the location becomes a read miss and the block is re-cached with the most up-to-date value. On a DSM system, however, there is no bus, so a write cannot be assumed complete as soon as invalidations are sent on the network. Instead, the writer must wait until acknowledgements of the invalidations are received, which can take many hops and incur high latency, especially if there is network congestion. [1, p. 292]
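A back-of-the-envelope model shows how the farthest sharer determines when a write completes. It assumes, purely for illustration, that invalidations are sent to all sharers in parallel, that each acknowledgement returns along the same path, and that every hop costs a fixed `per_hop_ns`. Real protocols route messages through the home node and see variable delays under congestion.

```python
def write_completion_ns(hops_to_sharers, per_hop_ns):
    """Time until the last invalidation acknowledgement reaches the writer."""
    if not hops_to_sharers:
        return 0  # no sharers, so there is nothing to wait for
    # Invalidations travel in parallel, so the farthest sharer sets the delay:
    # one trip out for the invalidation plus one trip back for the ack.
    return max(2 * hops * per_hop_ns for hops in hops_to_sharers)


near = write_completion_ns([1, 1, 2], per_hop_ns=50)
far = write_completion_ns([1, 1, 6], per_hop_ns=50)

print(near, far)
```

Under these assumptions a single distant sharer triples the completion time of the write, even though the other sharers acknowledge quickly.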

Overall, enforcing memory consistency on DSM systems can incur latency while waiting for the acknowledgements that signal completion of writes, and can lose performance because of in-order execution.

Relaxed memory consistency models are techniques normally used to alleviate performance concerns, and are discussed in detail as improvements in specific DSM systems.

Latency of interconnections

As mentioned in the cache coherence and memory consistency sections, interconnections uniquely distinguish DSM systems from bus-based systems. Unlike a bus, an interconnection network does not guarantee that messages reach their recipients, and messages are certainly not seen by all receivers at the same moment. Each message must be sent as a transaction in a networking protocol, and each packet sent incurs at least the latency of its hops through the routers in the network, and can also incur latency in being generated.

Since messages would become ubiquitous if a DSM system were naively designed to behave identically to a bus-based system, care must be taken to design coherence protocols and consistency models that minimize the sending and receiving of messages. When messages must be sent or received, the design should allow execution to overlap with them rather than block while waiting for messages or their receipt.

Granularity<ref>http://www.cdk5.net/dsm/Ed4/Chapter%2018%20DSM.pdf</ref>

An issue that is related to the structure of DSM is the granularity of sharing.

Conceptually, all processes share the entire contents of a DSM. As programs sharing DSM execute, however, only certain parts of the data are actually shared and then only for certain times during the execution. It would clearly be very wasteful for the DSM implementation always to transmit the entire contents of DSM as processes access and update it. What should be the unit of sharing in a DSM implementation? That is, when a process has written to DSM, which data does the DSM runtime send in order to provide consistent values elsewhere?

The focus here is on page-based implementations, although the granularity issue does arise in other implementations. In a page-based DSM, the hardware supports alterations to an address space efficiently in units of pages, essentially by the placement of a new page frame pointer in the page table (see, for example, Bacon [2002] for a description of paging). Page sizes can typically range up to 8 kilobytes, so this is an appreciable amount of data that must be transmitted over a network to keep remote copies consistent when an update occurs. By default, the price of the whole page transfer must be paid whether the entire page has been updated, or just one byte of it.

Using a smaller page size does not necessarily lead to an improvement in overall performance. First, in cases where processes do update large amounts of contiguous data, it is better to send one large page rather than several smaller pages in separate updates, because of the fixed software overheads per network packet. Second, using a small page as the unit of distribution leads to a large number of units that must be administered separately by the DSM implementation.

To complicate matters further, processes tend to contend more for pages when the page size is large, because the likelihood that the data they access will lie within the same page increases with the page size. Consider, for example, two processes, one of which accesses only data item A while the other accesses only data item B, which lie within the same page. For the sake of concreteness, let us assume that one process reads A and the other updates B. There is no contention at the application level. However, the entire page must be transmitted between the processes, since the DSM runtime does not by default know which locations in the page have been altered. This phenomenon is known as false sharing: two or more processes share parts of a page, but only one in fact accesses each part. In write-invalidate protocols, false sharing can lead to unnecessary invalidations. In write-update protocols, when several writers falsely share data items they may cause them to be overwritten with older versions.

In practice, the choice of the unit of sharing has to be made based on the physical page sizes available, although a unit of several contiguous pages may be taken if the page size is small. The layout of data with respect to page boundaries is an important factor in determining the number of page transfers made when a program executes.
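The effect of page size on both transfer cost and false sharing can be checked with simple address arithmetic. The addresses of A and B and the two page sizes below are arbitrary values chosen for the example.

```python
def pages_touched(addresses, page_size):
    """Return the set of page numbers covered by the given byte addresses."""
    return {addr // page_size for addr in addresses}


def bytes_transferred(updated_addresses, page_size):
    """Whole pages are sent, no matter how few bytes in them changed."""
    return len(pages_touched(updated_addresses, page_size)) * page_size


A_ADDR, B_ADDR = 100, 5000  # hypothetical locations of data items A and B

falsely_shared = {}
for page_size in (1024, 8192):
    common = pages_touched([A_ADDR], page_size) & pages_touched([B_ADDR], page_size)
    falsely_shared[page_size] = bool(common)

one_byte_update_cost = bytes_transferred([B_ADDR], 8192)

print(falsely_shared)
print(one_byte_update_cost)
```

With 1 KB pages A and B fall in different pages, so updating B never disturbs the reader of A. With 8 KB pages they share a page, and a one-byte update to B costs a full 8192-byte transfer.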

Thrashing

A potential problem with write-invalidate protocols is thrashing. Thrashing is said to occur when the DSM runtime spends an inordinate amount of time invalidating and transferring shared data compared with the time spent by application processes doing useful work. It occurs when several processes compete for the same data item, or for falsely shared data items. If, for example, one process repeatedly reads a data item that another is regularly updating, then this item will be constantly transferred from the writer and invalidated at the reader. This is an example of a sharing pattern for which write-invalidate is inappropriate and write-update would be better. Mirage addresses thrashing by letting computers ‘own’ pages for a minimum period. Munin allows the programmer to declare access patterns to the DSM system so that it can choose appropriate update options for each data item and avoid thrashing.
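The reader/writer pattern described above can be simulated with a simple message count. The cost model is an assumption made for this sketch: an invalidation is one message, a miss costs a request plus a data reply, and an update is one message carrying the new value.

```python
def simulate(protocol, rounds):
    """Count messages when a writer updates an item and a reader reads it
    once per round, under "invalidate" or "update"."""
    reader_valid = False
    messages = 0
    for _ in range(rounds):
        # The writer updates the item.
        if protocol == "invalidate":
            if reader_valid:
                messages += 1      # invalidation sent to the reader
                reader_valid = False
        else:
            messages += 1          # update message carries the new value
            reader_valid = True
        # The reader reads the item.
        if not reader_valid:
            messages += 2          # fetch request plus data reply
            reader_valid = True
    return messages


invalidate_msgs = simulate("invalidate", rounds=100)
update_msgs = simulate("update", rounds=100)

print(invalidate_msgs, update_msgs)
```

In this model write-invalidate needs roughly three messages per round, and each data reply carries a whole page in a page-based DSM, while write-update needs one message per round.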

Scalability<ref>http://www.cdf.toronto.edu/~csc469h/fall/handouts/nitzberg91.pdf</ref>

A theoretical benefit of DSM systems is that they scale better than tightly coupled shared-memory multiprocessors. Scalability is limited mainly by two factors: central bottlenecks (such as the bus of a tightly coupled shared-memory multiprocessor), and global common knowledge operations and storage (such as broadcast messages or full directories, whose sizes are proportional to the number of nodes). Li and Hudak went through several iterations to refine a coherence protocol for Ivy before arriving at their dynamic distributed-manager algorithm, which avoids centralized bottlenecks.

However, Ivy and most other DSM systems are currently implemented on top of Ethernet (itself a centralized bottleneck), which can support only about 100 nodes at a time. This limitation is most likely a result of these systems being research tools rather than an indication of any real design flaw. Shiva is an implementation of DSM on an Intel iPSC/2 hypercube, and it should scale nicely. Nodes in the Dash system are connected on two meshes. This implies that the machine should be expandable, but the Dash prototype is currently limited by its use of a full bit vector (one bit per node) to keep track of page replication.

Heterogeneity

At first glance, sharing memory between two machines with different architectures seems almost impossible. The machines may not even use the same representation for basic data types (integers, floating-point numbers, and so on). It is a bit easier if the DSM system is structured as variables or objects in the source language. Then a DSM compiler can add conversion routines to all accesses to shared memory. In Agora, memory is structured as objects shared among heterogeneous machines. Mermaid explores another novel approach: memory is shared in pages, and a page can contain only one type of data. Whenever a page is moved between two architecturally different systems, a conversion routine converts the data in the page to the appropriate format.
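A per-page conversion routine of the kind described for typed pages might look like the sketch below. It assumes a page holding only 32-bit signed integers and two machines that differ only in byte order. The function name and the four-integer page are invented for the example and do not reproduce Mermaid's actual routines.

```python
import struct


def convert_int32_page(page, src_order, dst_order):
    """Re-encode a page of 32-bit integers from one byte order to another.

    src_order and dst_order use struct notation: ">" for big-endian and
    "<" for little-endian.
    """
    count = len(page) // 4
    values = struct.unpack(f"{src_order}{count}i", page)
    return struct.pack(f"{dst_order}{count}i", *values)


# A tiny "page" as it would be laid out on a big-endian machine.
big_endian_page = struct.pack(">4i", 1, 2, 3, -4)
little_endian_page = convert_int32_page(big_endian_page, ">", "<")

print(struct.unpack("<4i", little_endian_page))
```

Restricting each page to a single data type is what makes this possible: the routine can convert every element the same way without any per-variable type information.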

Although heterogeneous DSM might allow more machines to participate in a computation, the overhead of conversion seems to outweigh the benefits.

Related algorithms

To support a DSM system, synchronization operations and memory management must be specially tuned. Semaphores, for example, are typically implemented on shared-memory systems by using spin locks. In a DSM system, a spin lock can easily cause thrashing, because multiple nodes may heavily access shared data. For better performance, some systems provide specialized synchronization primitives along with DSM. Clouds provides semaphore operations by grouping semaphores into centrally managed segments. Munin supports the synchronization memory type with distributed locks. Plus supplies a variety of synchronization instructions, and supports delayed execution, in which the synchronization can be initiated, then later tested for successful completion. Dubois, Scheurich, and Briggs discuss the relationship between coherence and synchronization.

Memory management can be restructured for DSM. A typical memory-allocation scheme (as in the C library malloc()) allocates memory out of a common pool, which is searched each time a request is made. A linear search of all shared memory can be expensive. A better approach is to partition available memory into private buffers on each node and allocate memory from the global buffer space only when the private buffer is empty.
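The private-buffer scheme can be sketched as a two-level allocator. The class names, the 4096-byte refill size, and the byte-counting model (no real addresses are handed out) are assumptions for illustration. The sketch also refills whenever the private buffer is too small for a request, which is slightly more general than waiting until it is completely empty.

```python
class GlobalPool:
    """Shared buffer space. Each access stands for an expensive global operation."""

    def __init__(self, size):
        self.free = size
        self.requests = 0  # number of times any node had to go global

    def take(self, size):
        self.requests += 1
        if size > self.free:
            raise MemoryError("global pool exhausted")
        self.free -= size
        return size


class NodeAllocator:
    """Per-node allocator that serves requests from a private buffer."""

    def __init__(self, pool, chunk_size):
        self.pool = pool
        self.chunk_size = chunk_size
        self.private_free = 0

    def alloc(self, size):
        # Go to the global pool only when the private buffer cannot serve
        # the request, and grab a whole chunk to amortize the cost.
        if self.private_free < size:
            self.private_free += self.pool.take(max(self.chunk_size, size))
        self.private_free -= size
        return size


pool = GlobalPool(1 << 20)
node = NodeAllocator(pool, chunk_size=4096)
for _ in range(100):
    node.alloc(64)

print(pool.requests, "global requests for 100 allocations")
```

Here 100 small allocations trigger only two global operations, so most requests are satisfied locally without touching shared state.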

References

<references/>