CSC/ECE 506 Spring 2012/11a hn


Revision as of 02:30, 14 April 2012

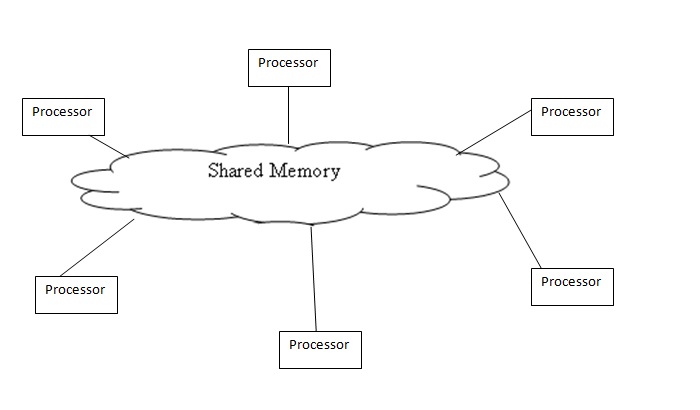

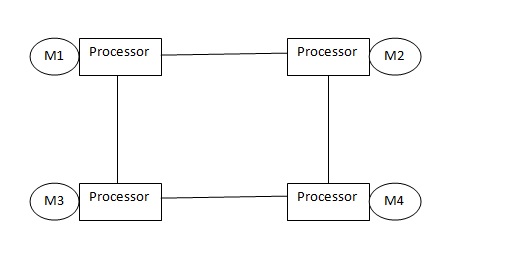

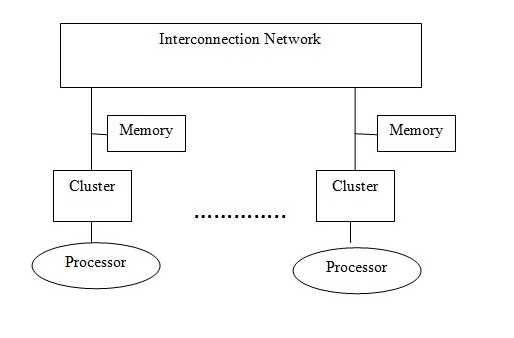

A Distributed Shared Memory (DSM) system combines features of a shared memory system and a distributed memory system. In a shared memory system, a group of processors shares a global memory, so any processor can access any memory location [1]. In a distributed memory system, each cluster (i.e., each processor) has its own memory, and clusters communicate by message passing [2]. In a DSM, by contrast, several processors share the same address space, i.e., a given address refers to the same physical memory location for all processors. Unlike a shared memory system, a DSM does not use a single global memory that is physically accessible by all processors; instead, it provides a logical abstraction of a single address space over physically separate memories, which all processors can access.

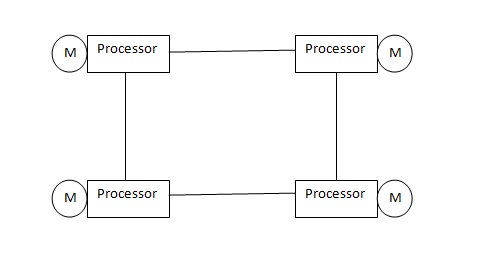

The pictures above show that in a distributed system each processor has its own memory. In a distributed shared memory system each processor also has its own memory, but the main memories of a cluster of processors are made to look like a single memory with a single address space. Distributed systems scale to a large number of processors but are difficult to program because of message passing. Shared memory systems, on the other hand, are less scalable but easier to program, because all processors can access each other's data, which simplifies parallel programming. DSM therefore aims for a more scalable and less expensive model by supporting the shared memory abstraction on top of message passing. It allows the programmer to share and use variables without having to manage them explicitly, so a processor can access an address held in another processor's main memory. End users can use the shared memory without knowing about the underlying message passing; the idea is to make inter-processor communication invisible to the user.

A DSM system in general consists of clusters connected to an interconnection network. A cluster can be a uniprocessor or multiprocessor system with local memory and a cache to reduce memory latency. The local memory of a cluster is entirely or partially mapped to the DSM global address space. A directory is maintained that records the location and the state of each data block. The directory can be organized as a linked list, a doubly linked list, or a full-map store; the choice of organization affects the system's scalability [5].
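As a rough illustration of the directory described above, the following Python sketch models a full-map organization: one entry per data block, holding the block's home cluster, its state, and the set of clusters that cache it. The names `BlockState`, `DirectoryEntry`, and `FullMapDirectory`, the three states, and the contiguous block-to-cluster layout are assumptions made for this example and are not taken from any particular DSM system.

```python
from dataclasses import dataclass, field
from enum import Enum


class BlockState(Enum):
    # Hypothetical state set, chosen only for illustration
    UNCACHED = "uncached"
    SHARED = "shared"
    EXCLUSIVE = "exclusive"


@dataclass
class DirectoryEntry:
    """One directory record per data block: where it lives and who caches it."""
    home_cluster: int
    state: BlockState = BlockState.UNCACHED
    sharers: set = field(default_factory=set)


class FullMapDirectory:
    def __init__(self, num_clusters, blocks_per_cluster):
        self.num_clusters = num_clusters
        # Assumption: blocks are laid out contiguously, cluster by cluster
        self.entries = {
            block: DirectoryEntry(home_cluster=block // blocks_per_cluster)
            for block in range(num_clusters * blocks_per_cluster)
        }

    def lookup(self, block):
        return self.entries[block]

    def presence_bits(self, block):
        # Full map: one presence bit per cluster for every block
        sharers = self.entries[block].sharers
        return [1 if c in sharers else 0 for c in range(self.num_clusters)]
```

Because a full map keeps one presence bit per cluster for every block, its storage grows with the number of clusters, which is one way the directory organization can affect scalability.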

A DSM system can provide the shared memory abstraction in software, which is cost-efficient because it requires no changes to the cluster hardware. Some systems provide the abstraction in hardware, which costs more, but hardware-based DSM performs better than software-based DSM [4].

Why not use Bus based multiprocessing for the DSM?

The Distributed Shared Memory (DSM) architecture requires a shared memory space. Message passing over an interconnection network is the preferred mode of communication for maintaining coherence in a DSM, for the following reasons:

- Physically, a DSM is designed to allow a large number of nodes to interact with each other. With a bus-based system, the bus simply becomes too long to connect the many nodes of a DSM. In a bus-based multiprocessor with a small set of nodes sharing a common memory, communication latency is not discernible. In addition, because the nodes of a bus-based system use snoopy protocols, clock synchronization is critical; with a larger number of nodes and a longer bus, maintaining the clock frequency becomes a bigger challenge.

- Whatever cache coherence protocol is used, a larger number of nodes leads to a larger number of transactions on the bus. Because the bus is a shared channel for transferring cache blocks/lines, an increase in bus traffic causes greater communication latency.

- It is preferable to design a DSM on a non-uniform memory access (NUMA) architecture, because memory can then be addressed globally while being placed locally at each node.

For the reasons mentioned above, a message passing model is used for communication between nodes. A message passing model combined with point-to-point connections between nodes is also preferable; the following section explains why.

Not Bus based, so where does the coherence happen?

As explored in the previous section, a monolithic bus is not preferable for communication. Instead, short wires are used for point-to-point interconnections. Because the wires are short, they can be clocked at a higher frequency, and the available bandwidth can increase as well.

As illustrated in the figure, a point-to-point interconnection is coupled with a directory-based protocol in a DSM. Since the memory is distributed, a directory can be maintained that records which caches hold a copy of each memory block. On each read or write by a node's cache, depending on whether it hits or misses, the node contacts the directory, and updates or invalidation signals are then sent only to the nodes that hold a copy of the block, which avoids unnecessary traffic.
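The traffic saving of a directory-based protocol can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not a real coherence protocol: the `Directory` class, its `read` and `write` methods, and the message tuples are hypothetical, and only invalidations (not updates or data transfers) are modeled.

```python
class Directory:
    """Toy directory that tracks which nodes cache each block."""

    def __init__(self, num_nodes):
        self.num_nodes = num_nodes
        self.sharers = {}   # block -> set of node ids holding a copy
        self.messages = []  # log of (kind, destination node, block)

    def read(self, node, block):
        # A read registers the requesting node as a sharer of the block
        self.sharers.setdefault(block, set()).add(node)

    def write(self, node, block):
        # Invalidate only the nodes that actually hold a copy
        holders = self.sharers.setdefault(block, set())
        targets = sorted(holders - {node})
        for target in targets:
            self.messages.append(("invalidate", target, block))
        # The writer becomes the only holder of the block
        self.sharers[block] = {node}
        return targets


directory = Directory(num_nodes=8)
directory.read(1, 42)
directory.read(5, 42)
invalidated = directory.write(1, 42)  # only node 5 needs to be contacted
```

In this example a write to a block shared by two of eight nodes produces a single invalidation message, whereas a broadcast to every other node would have produced seven.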

Hardware DSM

To build a hardware DSM, we need special network interfaces and cache coherence circuits. Three prominent groups of hardware DSM systems are of interest:

- Cache Coherent Non Uniform Memory Architecture (CC-NUMA)

- Cache-Only Memory Architecture (COMA)

- Reflective Memory systems

CC-NUMA, being the most important of the three, is discussed here.

CC-NUMA

A CC-NUMA architecture distributes its shared virtual address space across the local memories of the clusters, so that both the local processor and other processors in the network can access it, with different access times since this is a non-uniform memory architecture. "The address space of the architecture maps onto the local memories of each cluster in a NUMA fashion[5]". The cache in each cluster replicates blocks of memory that reside on remote hosts.

There is a fixed physical memory location for each data block in CC-NUMA. A directory-based architecture is used in which each cluster holds an equal part of the system's shared memory; this is called the home property. Various coherence protocols and memory consistency models, such as weak consistency and processor consistency, are implemented in CC-NUMA systems. CC-NUMA is simple and cost-effective, but when multiple processors access the same block in succession, its performance can degrade severely [6].
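The home property and the non-uniform access time can be illustrated with a small Python sketch. It assumes the shared address space is split into equal contiguous ranges, one per cluster; the function names and the cycle counts (`local_cycles=100`, `remote_cycles=400`) are hypothetical values chosen only to show the local versus remote distinction.

```python
def home_cluster(address, total_memory, num_clusters):
    """Return the cluster whose local memory holds this address."""
    if total_memory % num_clusters != 0:
        raise ValueError("memory must divide evenly among the clusters")
    if not 0 <= address < total_memory:
        raise ValueError("address outside the shared address space")
    share = total_memory // num_clusters  # equal part owned by each cluster
    return address // share


def access_latency(requester, address, total_memory, num_clusters,
                   local_cycles=100, remote_cycles=400):
    """Illustrative NUMA latency: local accesses are cheaper than remote ones."""
    home = home_cluster(address, total_memory, num_clusters)
    return local_cycles if home == requester else remote_cycles
```

With a 0x4000-byte address space split across four clusters, address 0x3000 has cluster 3 as its home, so cluster 3 pays the local latency while every other cluster pays the remote one.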

Hardware DSM fares better than software DSM in terms of performance, but the cost of the hardware is very high, so software DSM implementations are being changed to improve their performance. This article therefore deals in detail with software DSM, its performance, and measures for improving that performance.

Software components of a DSM

The software components form a critical part of a DSM application. Robust software design decisions help the DSM system work efficiently. What makes a good software component for a DSM depends on factors such as portability to different operating systems, efficient memory allocation, and good coherence protocols to keep data up to date. As we shall see, in modern DSMs the software needs to cooperate with the underlying hardware to achieve good system performance.

In a distributed shared memory, it is imperative to have a software component that eases the burden on the programmer of writing message passing programs. The software distributed shared memory is responsible for actually providing the abstraction of shared memory over the hardware.
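To make the idea of hiding message passing behind a shared memory interface more concrete, here is a minimal single-process Python sketch. It is only a toy model under stated assumptions: the `Node` and `SoftwareDSM` classes are hypothetical, "messages" are plain method calls counted as a request plus a reply, and no caching or coherence is modeled.

```python
class Node:
    """A node that owns one contiguous slice of the shared address space."""

    def __init__(self, node_id, base, size):
        self.node_id = node_id
        self.base = base
        self.local = [0] * size

    def handle(self, kind, address, value=None):
        # Serve a read or write request against this node's local memory
        offset = address - self.base
        if kind == "write":
            self.local[offset] = value
        return self.local[offset]


class SoftwareDSM:
    """Presents read/write on one address space; hides the messaging."""

    def __init__(self, num_nodes, words_per_node):
        self.words_per_node = words_per_node
        self.nodes = [Node(i, i * words_per_node, words_per_node)
                      for i in range(num_nodes)]
        self.messages_sent = 0

    def _access(self, requester, kind, address, value=None):
        owner = self.nodes[address // self.words_per_node]
        if owner.node_id != requester:
            self.messages_sent += 2  # one request and one reply
        return owner.handle(kind, address, value)

    def read(self, requester, address):
        return self._access(requester, "read", address)

    def write(self, requester, address, value):
        self._access(requester, "write", address, value)
```

The caller only sees `read` and `write` on a single address space; whether an access turns into messages depends on which node owns the address, which is exactly the detail the software layer is meant to hide from the programmer.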

In the early days of DSM development, a so-called "fine-grained" approach was used, based on virtual memory pages. Page faults caused invalidations and updates to occur. T